Translating a data frame means converting its values from one language into another without changing their context, meaning, or content. A Pandas data frame can be translated with Python by using unofficial Google Translate client libraries such as “googletrans” and “google_trans_new”.

In this article, I will crawl a website, extract its semantic content into a data frame, and translate that content into another language. Translating a website to explore new topic and content ideas can be especially useful for content publishers who don’t know the website’s language. In this example, I will translate MyFitnessPal’s content into Turkish by using a Pandas data frame. I know both Turkish and English, but imagine a Spanish website with more than 500000 creative food recipes. Wouldn’t you want to understand its content to find new contexts and topic ideas?

In this example, MyFitnessPal has more than 320000 pages of food nutrition information and recipes, and more than 4000 URLs just for recipes with eggs. I will crawl only the egg recipe URLs and translate them. You can use the same methodology to help clients who don’t know a specific language.

Since this article is fairly long, if you only want to see the data frame translation section, you can jump to the code blocks and sub-sections below.

Translate a Sentence to Another Language with Python

To translate a sentence into another language with Python, you can use the “google_trans_new” Python package.

from google_trans_new import google_translator

import google_trans_new

translator = google_translator()

translate_text = translator.translate('Hola mundo!', lang_src='es', lang_tgt='en')

print(translate_text)

OUTPUT>>>

Hello World

To translate a sentence with Python, you should follow the steps below:

- Import the “google_translator” class from the “google_trans_new” package.

- Create a translator object by calling “google_translator()” and assigning it to a variable.

- Call the “translate()” method with the text to be translated.

- Use the “lang_src” parameter to specify the language of the text.

- Use the “lang_tgt” parameter to specify the language the text should be translated into.

Translate a Pandas Data Frame Column to Another Language

The Python code below translates the first rows of a data frame column into another language.

translator = google_translator()

for idx in dataframe.index[:row_number]:  # index labels of the rows to translate

    dataframe.loc[idx, "column"] = translator.translate(dataframe.loc[idx, "column"], lang_src="auto", lang_tgt="tr")

If you want to translate a column of a data frame, you can use the Python code above. The explanation of the code is below.

- Create a “google_translator()” object once, before the loop.

- Start a Python for loop over the index labels of the rows to be translated.

- Call the “translate()” method on the value of the selected column in each row.

- Write the translated text back into the same row and column with “dataframe.loc”.
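Many rows in a crawled column can share the same text, for example repeated titles or boilerplate sentences. A minimal sketch, assuming you only want to send each distinct value to the translator once: “translate_unique” and “fake_translate” are hypothetical names, and “fake_translate” stands in for a call such as “translator.translate(text, lang_tgt="tr")” so that the example runs offline.

```python
import pandas as pd

def translate_unique(series, translate_fn):
    """Translate each distinct value once, then map the results back onto the series."""
    # dropna() keeps missing values away from the translator; they stay NaN after map()
    unique_values = series.dropna().unique()
    lookup = {value: translate_fn(value) for value in unique_values}
    return series.map(lookup)

# Hypothetical stand-in for translator.translate(); records every call it receives
calls = []

def fake_translate(text):
    calls.append(text)
    return text.upper()

df = pd.DataFrame({"title": ["egg salad", "boiled egg", "egg salad"]})
df["title_tr"] = translate_unique(df["title"], fake_translate)
print(df["title_tr"].tolist())  # ['EGG SALAD', 'BOILED EGG', 'EGG SALAD']
print(len(calls))  # 2
```

With the real translator, you would pass something like “lambda t: translator.translate(t, lang_tgt="tr")” as the second argument. The saving grows with the number of repeated values in the column.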

Translate a Pandas Data Frame Column without a For Loop

dataframe.loc[dataframe.index[:row_number], "column"] = dataframe["column"].iloc[:row_number].apply(lambda x: translator.translate(x, lang_tgt="tr"))

If you want to do the same thing without a for loop, you can use a “lambda” function with the Pandas “apply” method, as above. Using “loc” for the assignment writes the results into the original data frame instead of into a temporary copy.
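Translation calls can fail on individual rows, for example on empty cells, missing values, or a network error, and a single exception inside “apply” stops the whole column. The sketch below wraps the call, under the assumption that keeping the original text is an acceptable fallback; “safe_translate” and “flaky_translate” are hypothetical helpers, and “flaky_translate” only simulates a translator that fails on one input.

```python
import pandas as pd

def safe_translate(value, translate_fn):
    """Translate non-empty strings; return other values and failed translations unchanged."""
    if not isinstance(value, str) or not value.strip():
        return value
    try:
        return translate_fn(value)
    except Exception:  # e.g. a network error or a rate-limit response
        return value

def flaky_translate(text):
    # Hypothetical stand-in for translator.translate(); fails on one specific input
    if text == "fail me":
        raise RuntimeError("simulated translation error")
    return f"[tr] {text}"

s = pd.Series(["fried egg", None, "fail me", ""])
translated = s.apply(lambda v: safe_translate(v, flaky_translate))
print(translated.tolist())
```

Catching every exception keeps the column translation running, but it also hides why a row failed, so you may prefer to log the failed rows and retry them later.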

Translate an Entire Pandas Data Frame to Another Language with Python

If you want to translate the entire data frame, you can use the code block below.

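dataframe = dataframe.applymap(lambda x: translator.translate(x, lang_tgt="tr") if isinstance(x, str) else x)

“DataFrame.apply()” passes whole columns to the function, so “applymap()” is used here to translate the data frame cell by cell; in pandas 2.1 and newer, “applymap()” is deprecated in favor of “DataFrame.map()”. Non-string cells, such as numbers, are left unchanged. If you don’t specify a “lang_src” in the “translator.translate()” method, the source language will be detected automatically. If you want to know which languages the “google_trans_new” package supports, you can use the code below.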
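A translator expects one string at a time, while “DataFrame.apply()” hands each whole column (a Series) to the function. A version-independent sketch that translates only string cells, column by column, with “Series.map()”; “translate_string_cells” is a hypothetical helper, and the lambda in the example is a stand-in for a real translator call.

```python
import pandas as pd

def translate_string_cells(df, translate_fn):
    """Return a copy of df in which every string cell has been passed through translate_fn."""
    out = df.copy()
    for col in out.columns:
        # Series.map calls the function once per cell; non-string cells pass through unchanged
        out[col] = out[col].map(lambda v: translate_fn(v) if isinstance(v, str) else v)
    return out

df = pd.DataFrame({"title": ["egg muffin", "egg salad"], "status": [200, 200]})
# Stand-in "translator" that only swaps one English word for its Turkish equivalent
result = translate_string_cells(df, lambda t: t.replace("egg", "yumurta"))
print(result)
```

Working on a copy keeps the original data frame intact, which mirrors the “copy()” step used later in this guideline.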

print(google_trans_new.LANGUAGES)

OUTPUT>>>

{'af': 'afrikaans', 'sq': 'albanian', 'am': 'amharic', 'ar': 'arabic', 'hy': 'armenian', 'az': 'azerbaijani', 'eu': 'basque', 'be': 'belarusian', 'bn': 'bengali', 'bs': 'bosnian', 'bg': 'bulgarian', 'ca': 'catalan', 'ceb': 'cebuano', 'ny': 'chichewa', 'zh-cn': 'chinese (simplified)', 'zh-tw': 'chinese (traditional)', 'co': 'corsican', 'hr': 'croatian', 'cs': 'czech', 'da': 'danish', 'nl': 'dutch', 'en': 'english', 'eo': 'esperanto', 'et': 'estonian', 'tl': 'filipino', 'fi': 'finnish', 'fr': 'french', 'fy': 'frisian', 'gl': 'galician', 'ka': 'georgian', 'de': 'german', 'el': 'greek', 'gu': 'gujarati', 'ht': 'haitian creole', 'ha': 'hausa', 'haw': 'hawaiian', 'iw': 'hebrew', 'he': 'hebrew', 'hi': 'hindi', 'hmn': 'hmong', 'hu': 'hungarian', 'is': 'icelandic', 'ig': 'igbo', 'id': 'indonesian', 'ga': 'irish', 'it': 'italian', 'ja': 'japanese', 'jw': 'javanese', 'kn': 'kannada', 'kk': 'kazakh', 'km': 'khmer', 'ko': 'korean', 'ku': 'kurdish (kurmanji)', 'ky': 'kyrgyz', 'lo': 'lao', 'la': 'latin', 'lv': 'latvian', 'lt': 'lithuanian', 'lb': 'luxembourgish', 'mk': 'macedonian', 'mg': 'malagasy', 'ms': 'malay', 'ml': 'malayalam', 'mt': 'maltese', 'mi': 'maori', 'mr': 'marathi', 'mn': 'mongolian', 'my': 'myanmar (burmese)', 'ne': 'nepali', 'no': 'norwegian', 'or': 'odia', 'ps': 'pashto', 'fa': 'persian', 'pl': 'polish', 'pt': 'portuguese', 'pa': 'punjabi', 'ro': 'romanian', 'ru': 'russian', 'sm': 'samoan', 'gd': 'scots gaelic', 'sr': 'serbian', 'st': 'sesotho', 'sn': 'shona', 'sd': 'sindhi', 'si': 'sinhala', 'sk': 'slovak', 'sl': 'slovenian', 'so': 'somali', 'es': 'spanish', 'su': 'sundanese', 'sw': 'swahili', 'sv': 'swedish', 'tg': 'tajik', 'ta': 'tamil', 'te': 'telugu', 'th': 'thai', 'tr': 'turkish', 'tk': 'turkmen', 'uk': 'ukrainian', 'ur': 'urdu', 'ug': 'uyghur', 'uz': 'uzbek', 'vi': 'vietnamese', 'cy': 'welsh', 'xh': 'xhosa', 'yi': 'yiddish', 'yo': 'yoruba', 'zu': 'zulu'}

In the next sections, you will see a practical example of Pandas data frame translation with Python, covering the processes below.

- How to crawl a website for translation purposes.

- Why translated content shouldn’t be used for SEO purposes.

- How to find new topic ideas from the translated content of a similar foreign site.

- How to perform content structure analysis from URLs for filtering the URLs for crawling purposes.

- How to visualize the content structure of a website for translation purposes within a data frame.

- How to translate a website’s content by making it into a data frame.

How to Put a Website’s Content into a Data Frame for Translation with Python?

To put a website’s content into a data frame, you will need an SEO crawler. You can use OnCrawl, JetOctopus, Ahrefs, Screaming Frog, or SEMRush to crawl a website, but these crawlers mostly collect on-page SEO elements such as title tags, canonical tags, and similar signals; by default, they don’t store the full content of every page in a data frame column. To take a website’s content entirely, you can use the Advertools “crawl()” function.

To learn how to crawl a website and perform an SEO Analysis, you can read the related guideline.

To translate a data frame, you will need a semantic website. In other words, the website’s content should be of the same type, so that the translation serves a clear purpose. In this Pandas data frame translation with Python tutorial, MyFitnessPal will be used. Below, you will see how to crawl MyFitnessPal.

Importing Necessary Python Modules and Libraries for Data Frame Translation via a Web Site

To crawl a website and put its content into a data frame for translation, you will need the Python libraries below.

- Advertools, for parsing sitemaps and crawling URLs

- Requests, for checking which sitemap URLs exist

- Pandas, for using the data frames

- Plotly, for visualizing the output

- Googletrans, for translating the data frame

- Google_trans_new, to overcome the bugs of Googletrans

import advertools as adv

import requests

import pandas as pd

import plotly.express as px

from google_trans_new import google_translator

import google_trans_new

adv.__version__

OUTPUT>>>

0.10.7

Above, you can see the Python modules and libraries for putting a website’s content into a data frame for translation with Python.

How to Collect and Crawl a Website’s URLs to Extract its Content into a Data Frame?

To crawl a website efficiently, crawling only the URLs in its sitemaps is better than crawling the whole site, since the quality URLs should be in the sitemaps. However, MyFitnessPal doesn’t have a single sitemap or a sitemap index file. In this case, we need to find all of the sitemap files and unite them. All of MyFitnessPal’s sitemap files follow the same URL pattern: the domain name, “sitemap-”, the sitemap number, and the “.xml” extension. Thus, we can use a simple Python for loop with the range function, as below.

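list = []  # collects one data frame per sitemap (this name shadows Python's built-in list)

for i in range(99):

    sitemap_url = f"https://www.myfitnesspal.com/sitemap-{i}.xml"

    r = requests.get(sitemap_url)

    if r.status_code == 200:  # only parse sitemap files that exist

        df = adv.sitemap_to_df(sitemap_url)

        list.append(df)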

OUTPUT >>>

2021-03-14 14:09:56,703 | INFO | sitemaps.py:361 | sitemap_to_df | Getting https://www.myfitnesspal.com/sitemap-0.xml

2021-03-14 14:10:00,173 | INFO | sitemaps.py:361 | sitemap_to_df | Getting https://www.myfitnesspal.com/sitemap-1.xml

2021-03-14 14:10:03,754 | INFO | sitemaps.py:361 | sitemap_to_df | Getting https://www.myfitnesspal.com/sitemap-2.xml

2021-03-14 14:10:07,474 | INFO | sitemaps.py:361 | sitemap_to_df | Getting https://www.myfitnesspal.com/sitemap-3.xml

2021-03-14 14:10:10,062 | INFO | sitemaps.py:361 | sitemap_to_df | Getting https://www.myfitnesspal.com/sitemap-4.xml

2021-03-14 14:10:12,615 | INFO | sitemaps.py:361 | sitemap_to_df | Getting https://www.myfitnesspal.com/sitemap-5.xml

2021-03-14 14:10:15,681 | INFO | sitemaps.py:361 | sitemap_to_df | Getting https://www.myfitnesspal.com/sitemap-6.xml

The explanation of the sitemap uniting for loop is below.

- Create an empty list.

- Start a for loop with the “range” function.

- Use the “requests.get” method to request each sitemap URL.

- Use an f-string to build each sitemap URL from the loop counter.

- Use an if statement to check the response.

- If the response status code of the sitemap URL is 200, use the “adv.sitemap_to_df” function to parse it.

- Append the resulting data frame to the list.
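The loop above probes a fixed range of 99 sitemap numbers. If you don’t know how many sitemaps a site has, one option is to stop after a few consecutive misses. This is a sketch under that assumption; “discover_sitemaps” and “fake_status” are hypothetical names, and “fake_status” replaces a real request such as “requests.get(url).status_code” so the example runs without network access.

```python
def discover_sitemaps(url_template, fetch_status, max_misses=3, limit=1000):
    """Probe numbered sitemap URLs until max_misses consecutive non-200 responses."""
    found, misses = [], 0
    for i in range(limit):
        url = url_template.format(i)
        if fetch_status(url) == 200:
            found.append(url)
            misses = 0  # reset the streak whenever a sitemap exists
        else:
            misses += 1
            if misses >= max_misses:
                break
    return found

# Hypothetical fetcher: pretends that sitemap-0 to sitemap-6 exist
existing = {f"https://www.example.com/sitemap-{i}.xml" for i in range(7)}

def fake_status(url):
    return 200 if url in existing else 404

urls = discover_sitemaps("https://www.example.com/sitemap-{}.xml", fake_status)
print(len(urls))  # 7
```

Each URL in the returned list could then be passed to “adv.sitemap_to_df”. The sketch assumes the sitemap numbers are contiguous; if a site skips numbers, a larger “max_misses” value is safer.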

You will need to unite all of the resulting data frames into one data frame, so that all of the URLs to be crawled can be taken from a single data frame.

united_df = pd.concat(list, ignore_index=True)  # one data frame with a fresh 0..n-1 index

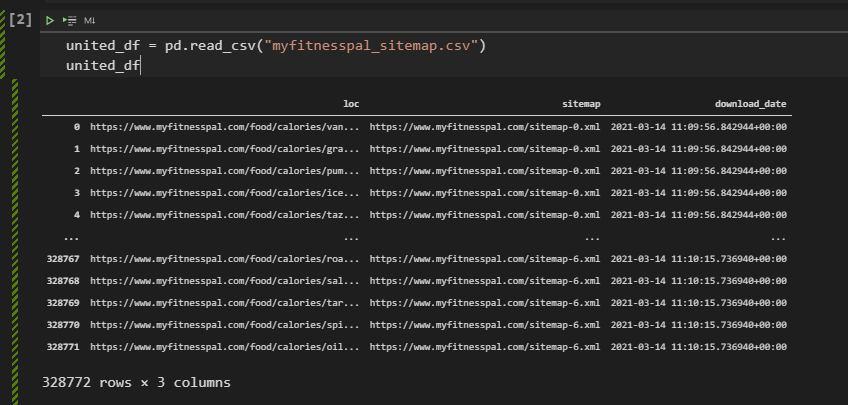

united_df.to_csv("myfitnesspal_sitemap.csv", index=False)

“pd.concat()” combines every data frame in the “list” variable into one. After uniting them, you can use the “to_csv” method to save a permanent CSV file that includes all of the URLs to be crawled.

united_df

You can see the result below.
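Sitemaps can list the same URL more than once, and “pd.concat” keeps every row. A small sketch with hypothetical example.com URLs that shows how duplicates could be dropped before crawling, so that each URL is requested only once:

```python
import pandas as pd

# Hypothetical sitemap frames; sitemap_to_df output has a "loc" column among others
sitemap_a = pd.DataFrame({"loc": ["https://www.example.com/a", "https://www.example.com/b"]})
sitemap_b = pd.DataFrame({"loc": ["https://www.example.com/b", "https://www.example.com/c"]})

# ignore_index=True gives a fresh 0..n-1 index instead of repeating each frame's index
united = pd.concat([sitemap_a, sitemap_b], ignore_index=True)
# Keep the first occurrence of each URL and renumber the rows
united = united.drop_duplicates(subset="loc").reset_index(drop=True)
print(united["loc"].tolist())  # ['https://www.example.com/a', 'https://www.example.com/b', 'https://www.example.com/c']
```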

Analyzing MyFitnessPal’s Semantic Content Network for Translation Purposes

MyFitnessPal is a website that lists the ingredients, calories, and nutrition facts of many types of food within a brief content structure. Each page has a short introduction section, a simple HTML table for the food’s nutrition, and a few brief notes about the specific food’s ingredients. In other words, MyFitnessPal’s site is semantic, which makes it a good fit for translation purposes. Since the content is brief and mostly consists of an HTML table, the translation only has to handle simple words and short sentences. This should improve the quality of the translation process with Python.

For SEO, using translated content is not useful, and this script and Pandas data frame translation guideline are not meant for reusing another site’s content. They are for finding new topics, themes, and content ideas for similar sites from another language. Analyzing MyFitnessPal’s content is important because most of it is about “restaurants’ food recipes” or “food brands’ specific products”. Their content is also highly specific to food types: they have more than 4000 recipes just for foods with eggs. This type of semantic content network can help you create a better, firmer topical map and content structure for your site.

We have 328772 URLs from MyFitnessPal’s sitemaps alone. Crawling all of them would require more resources, so you can first analyze the content structure through the URLs and then crawl only the section you need for your own purposes. Below, you will see another Advertools function for this, “url_to_df”.

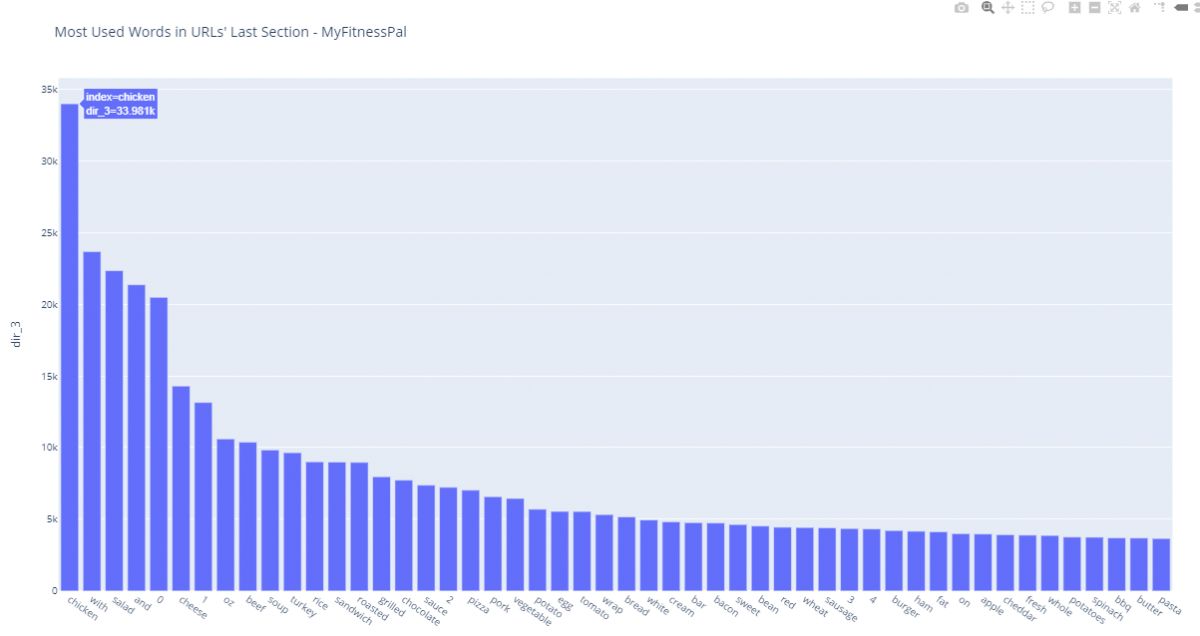

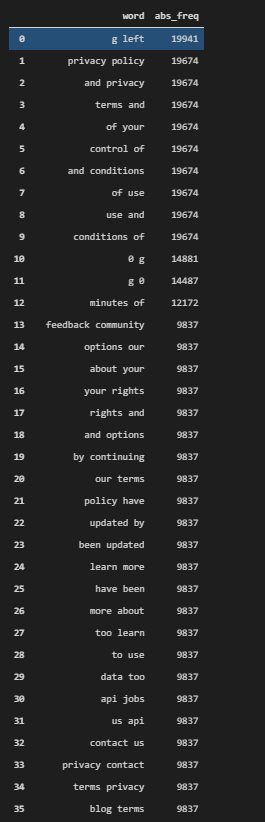

paths = adv.url_to_df(united_df["loc"])

paths["dir_3"].str.split("-").explode().value_counts().to_frame().head(50)

You can see the result below.

- We have 33981 URLs that include the word “chicken”.

- We have 22342 URLs for only salad recipes.

- We have 10378 URLs for only beef recipes.
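The word counts above come from splitting the last URL segment on hyphens. The same idea can be sketched without Advertools by using “urllib.parse” and Pandas; the example.com URLs are hypothetical, and the last path segment here corresponds to what the “dir_3” column holds for three-level paths like these. Purely numeric tokens, such as IDs, are dropped in this sketch so they don’t crowd the word list.

```python
from urllib.parse import urlparse

import pandas as pd

urls = pd.Series([
    "https://www.example.com/recipe/view/egg-fried-rice-123",
    "https://www.example.com/recipe/view/chicken-egg-salad-456",
    "https://www.example.com/food/calories/boiled-egg-789",
])

# Take the last path segment of each URL (the "slug")
slugs = urls.map(lambda u: urlparse(u).path.rstrip("/").split("/")[-1])
# One row per hyphen-separated word, without purely numeric tokens
words = slugs.str.split("-").explode()
words = words[~words.str.isdigit()]
word_counts = words.value_counts()
print(word_counts.head(3))
```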

Now you can see what I mean by “new content ideas” for similar sites. We can visualize a website’s content structure via its URLs and Plotly as below.

How to Visualize a Website’s Content Structure with URL Breakdowns via Python (Plotly) for Translation Purposes

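dir_3 = paths["dir_3"].str.split("-").explode().value_counts().head(50)  # top 50 words in the last URL segment

fig = px.bar(x=dir_3.index, y=dir_3.values, labels={"x": "Word", "y": "Count"}, title="Most Used Words in URLs' Last Section - MyFitnessPal", width=1500, height=800)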

fig.update_xaxes(tickangle = 35)

fig.show()

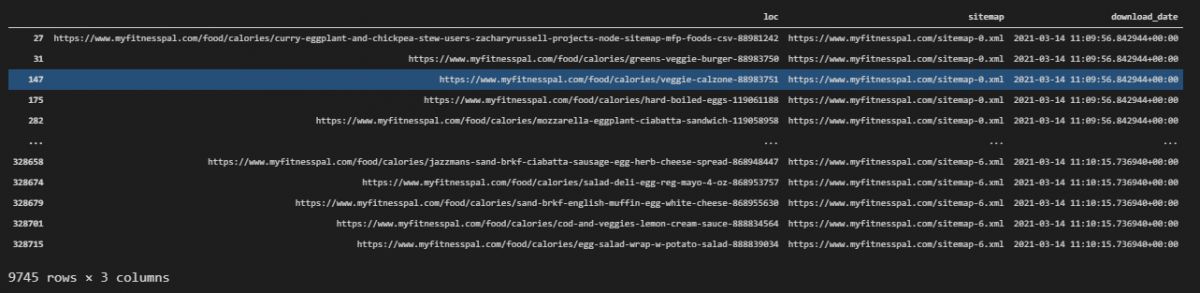

Filtering a Website’s URLs for Crawling and Translation Purposes

To filter a website’s URLs for crawling, we can use the “str.contains” method. For translating a data frame, we will use only the URLs about eggs. To do this, we will filter all of MyFitnessPal’s URLs that contain “egg”, as below.

pd.set_option("display.max_colwidth", 255)

pd.set_option("display.max_rows", 255)

united_df[united_df["loc"].str.contains("egg")]

The URL filtering steps are explained below.

- We have set the maximum column width to 255 characters.

- We have increased the maximum number of displayed rows to 255.

- We have filtered and displayed all the URLs that include the string “egg”.

You can see the result below.

egg_content = united_df[united_df["loc"].str.contains("egg")]

We have assigned all the URLs containing the “egg” string to the “egg_content” variable.
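A plain substring match for “egg” also matches slugs such as “veggie” or “eggplant”. A sketch of a stricter filter, assuming hyphen-separated slugs and that only “egg” or “eggs” as a whole word should count; the URLs are hypothetical:

```python
import pandas as pd

locs = pd.Series([
    "https://www.example.com/recipe/view/scrambled-eggs-1",
    "https://www.example.com/recipe/view/egg-muffin-2",
    "https://www.example.com/recipe/view/veggie-burger-3",
    "https://www.example.com/recipe/view/grilled-eggplant-4",
])

loose = locs.str.contains("egg")                      # plain substring match
strict = locs.str.contains(r"\beggs?\b", regex=True)  # "egg" or "eggs" as a whole word
print(loose.sum(), strict.sum())  # 4 2
```

With the real data, the same pattern could be applied to the “loc” column of “united_df”.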

Crawling a Website’s URLs for Extracting its Content for Translation within a DataFrame

To crawl a website or a list of URLs, we can use the Advertools “crawl()” function as below.

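adv.crawl(egg_content["loc"], "egg_content_myfitnesspal.jl", follow_links=False)

We have crawled all the URLs in the “egg_content” data frame so that we can extract their content for translation. With “follow_links=False”, only the listed URLs are crawled, and the output is saved as a JSON lines (“.jl”) file.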

egg_content_df = pd.read_json("egg_content_myfitnesspal.jl", lines=True)

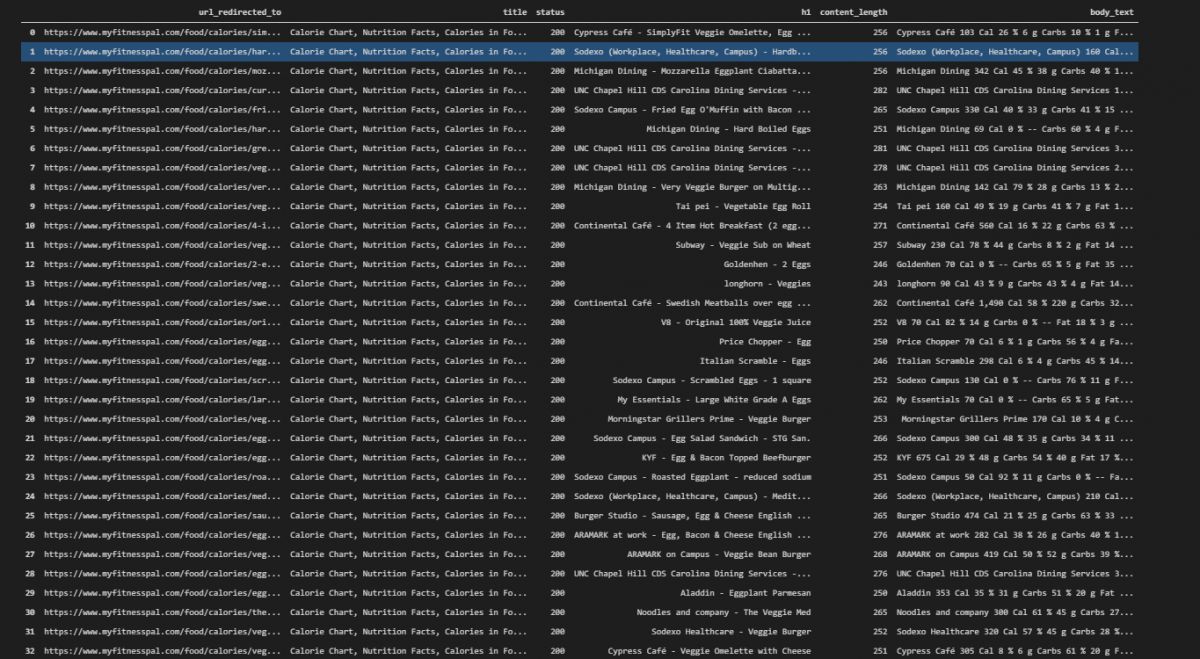

egg_content_df.head(2)

We have used “pd.read_json” with “lines=True” to read the crawl output file, which stores one JSON object per line. We have displayed the first two rows; you can see the result below.

Auditing the Content of the Website for a Quality Dataframe Translation with Python

Translating a website’s content within a data frame can be useful for finding content ideas, but if the crawled URLs don’t have proper content or a 200 status code, the translation process will also be problematic. To prevent this situation, and to understand the website’s general content structure, it is useful to audit the website’s content before translating the data frame.

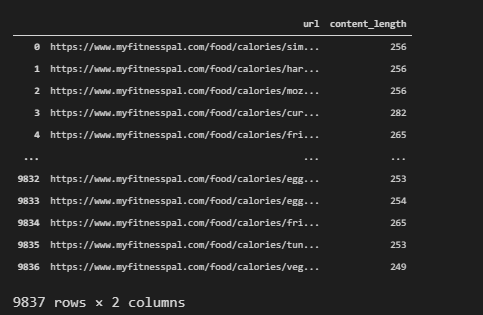

egg_content_df["content_length"] = egg_content_df["body_text"].str.split().str.len()

egg_content_df[["url", "content_length"]]

We have created a new column called “content_length” by splitting the “body_text” column on whitespace with “str.split()” and counting the words of each URL.

The URLs have about 240 to 260 words each. We can check the most frequent word pairs as below.

adv.word_frequency(egg_content_df["body_text"], phrase_len=2).head(50)

We have used the “word_frequency” function with “phrase_len=2” and displayed the first 50 rows, so that we can see the translation material before translating the data frame.
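“phrase_len=2” counts two-word phrases (bigrams). To show the idea, here is a minimal pure-Python sketch of bigram counting. It is an illustration only: Advertools’ own “word_frequency” implementation may differ in details such as stop-word removal and punctuation handling, and “bigram_counts” is a hypothetical helper name.

```python
from collections import Counter

def bigram_counts(texts):
    """Count adjacent word pairs across texts (lowercased, split on whitespace)."""
    counts = Counter()
    for text in texts:
        words = text.lower().split()
        counts.update(zip(words, words[1:]))  # pairs (w1, w2), (w2, w3), ...
    return counts

texts = ["Egg whites are high in protein", "Boiled egg whites are filling"]
top = bigram_counts(texts).most_common(2)
print(top)  # [(('egg', 'whites'), 2), (('whites', 'are'), 2)]
```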

We can also check the word frequencies of the titles to audit the translation material.

adv.word_frequency(egg_content_df["title"], phrase_len=1).head(50)

We have chosen the “title” column instead of “body_text”.

Before starting the translation, you can check all of the columns related to the translation process, because you might not want to translate content that is not about “eggs” or a URL with a non-200 status code.

egg_content_df.loc[:, ["url_redirected_to", "title", "status", "h1", "content_length", "body_text"]].head(60)

You can see the filtered data frame with the redirected URL, status code, title, h1, content length, and body text information.

We have checked that the website’s content is suitable for translation. It is brief, and all the URLs, titles, heading tags, and paragraphs are convenient for bulk translation.
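The same audit can be expressed as a filter, so that only pages worth translating are sent to the translator. A sketch under the assumption that you want 200 status codes and a minimum word count; the rows and the “min_words” threshold are hypothetical, and the column names mirror the crawl output columns used above.

```python
import pandas as pd

# Hypothetical rows shaped like the crawl output
crawl = pd.DataFrame({
    "url": ["https://www.example.com/a", "https://www.example.com/b", "https://www.example.com/c"],
    "status": [200, 301, 200],
    "body_text": ["Egg muffin nutrition facts and calories", "Moved", None],
})

min_words = 3  # hypothetical threshold; choose one from your own content_length check
# Missing body text counts as zero words
word_counts = crawl["body_text"].fillna("").str.split().str.len()
to_translate = crawl[(crawl["status"] == 200) & (word_counts >= min_words)]
print(to_translate["url"].tolist())  # ['https://www.example.com/a']
```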

Translating A Website’s Content within a Data Frame via Python

In this section, you will learn how to translate a data frame with Python. We will use the “google_translator()” class as below.

translator = google_translator()

translate_text = translator.translate('Hola mundo!', lang_src='es', lang_tgt='en')

You should remember the “translator.translate()” method from the beginning of this data frame translation guideline.

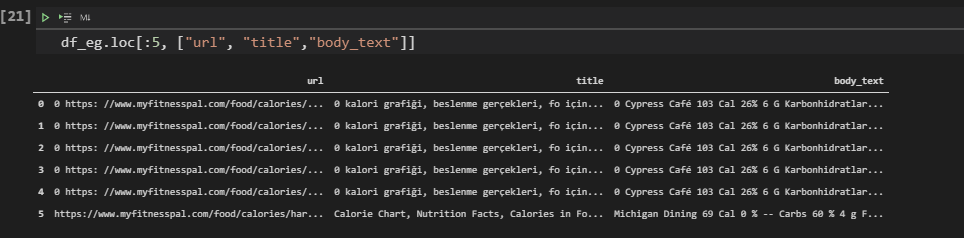

df_eg = egg_content_df.copy()

We have copied the data frame with the “copy” method because we didn’t want to change the original data frame. You can see a practical translation example below.

translator = google_translator()

for idx in df_eg.index[:5]:  # only the first five rows

    df_eg.loc[idx, "body_text"] = translator.translate(df_eg.loc[idx, "body_text"], lang_src="auto", lang_tgt="tr")

In the example above, we have translated only the first five rows of the “df_eg” data frame. You can see the translated data frame below. With the “lang_src="auto"” argument, we let the library detect the source language, and with “lang_tgt="tr"”, we have translated the content into Turkish.
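Translating hundreds of rows means hundreds of requests to an unofficial endpoint, which may be throttled. One possible approach, sketched below, is to translate in batches with a pause in between. “translate_in_batches” is a hypothetical helper, the batch size and pause are placeholders rather than known limits of the service, and “str.upper” stands in for the real translator so the example runs offline.

```python
import time

import pandas as pd

def translate_in_batches(series, translate_fn, batch_size=50, pause_seconds=1.0):
    """Translate a Series batch by batch, sleeping between batches; keeps the index."""
    results = []
    for start in range(0, len(series), batch_size):
        batch = series.iloc[start:start + batch_size]
        results.extend(translate_fn(text) for text in batch)
        if start + batch_size < len(series):
            time.sleep(pause_seconds)  # spread the requests out over time
    return pd.Series(results, index=series.index)

s = pd.Series(["egg", "omelette", "frittata"], index=[10, 11, 12])
out = translate_in_batches(s, str.upper, batch_size=2, pause_seconds=0)
print(out.tolist())  # ['EGG', 'OMELETTE', 'FRITTATA']
```

Because the returned Series keeps the original index, the results can be written back with “loc”, for example “df_eg.loc[df_eg.index[:5], "body_text"] = translate_in_batches(df_eg["body_text"].iloc[:5], lambda t: translator.translate(t, lang_tgt="tr"))”.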

In the next section, we will use the “apply” method to translate the first 1000 rows of the data frame into Turkish.

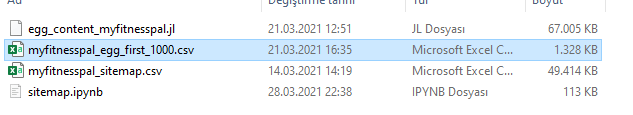

df_eg.loc[df_eg.index[:1000], "body_text"] = df_eg["body_text"].iloc[:1000].apply(lambda x: translator.translate(x, lang_tgt="tr"))

After translating the data frame, you can export the translated data frame as below.

df_eg[["title", "url", "h1", "body_text"]].iloc[:1000].to_csv("myfitnesspal_egg_first_1000.csv")

The resulting output file is about 1328 KB.
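Translated Turkish text contains characters such as “ş”, “ğ”, and “ı”. If you plan to open the CSV file in Excel, writing it with the “utf-8-sig” encoding adds a byte-order mark that helps Excel detect UTF-8. A small sketch with hypothetical rows and a temporary file path:

```python
import os
import tempfile

import pandas as pd

df_tr = pd.DataFrame({"title": ["Haşlanmış yumurta", "Yumurtalı ıspanak"]})

path = os.path.join(tempfile.mkdtemp(), "translated.csv")
# utf-8-sig writes a byte-order mark (BOM) at the start of the file
df_tr.to_csv(path, index=False, encoding="utf-8-sig")

# Reading with the same encoding strips the BOM again
round_trip = pd.read_csv(path, encoding="utf-8-sig")
print(round_trip["title"].tolist())
```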

Last Thoughts on Translating Dataframes with Python and Holistic SEO

Translating data frames with Python can help you find new content ideas by examining websites from different countries. If an SEO doesn’t know a foreign language, he or she won’t be able to examine the content structure of sites in that language, and most things will remain ambiguous. To prevent this, translating heading tags, titles, descriptions, content, or URLs can help an SEO understand sites from other cultures, countries, or geographies. Mainstream SEOs often act as if the whole planet lives in English, but this is not true. To understand SEO and search engines, help clients from other cultures, and examine search engine results pages (SERPs), we need to understand sites in other languages.

You might consider using Chrome’s built-in translation, but it won’t let you analyze hundreds of thousands of URLs in bulk the way translating a website with Python does. Translating a website’s content within a data frame with Python can help an SEO overcome language barriers, examine many more sites, and learn more about search engine algorithms through them.
