Reinforcement learning is a prominent branch of machine learning that trains autonomous agents to make good decisions by maximizing cumulative rewards. It involves an agent interacting with an environment, taking actions, and receiving feedback in the form of rewards or penalties. The agent’s objective is to learn a policy that maximizes the long-term expected reward.

Reinforcement learning in AI employs a trial-and-error approach, where the agent explores different actions, observes the consequences, and updates its strategy based on the received feedback. The learning process typically involves either estimating the value of different states or actions (value functions) or adjusting the policy directly (policy gradients), and using the result to guide decision-making.

Reinforcement learning exhibits a versatile application across a myriad of tasks, encompassing domains as diverse as strategic gaming, such as chess or Atari games, autonomous vehicle control, energy system management, resource optimization, and the realm of robotics. Reinforcement learning enables agents to tackle challenging problems and achieve remarkable performance in various domains through their ability to learn from interactions and adapt to changing environments. One reinforcement learning example is training an autonomous robot to navigate through a maze by rewarding it for reaching the goal and penalizing it for hitting obstacles along the way.

Reinforcement learning offers several advantages that make it a powerful approach in the field of artificial intelligence. It enables agents to handle sequential decision-making tasks and learn optimal strategies over time, considering long-term consequences and maximizing cumulative rewards. The adaptability of reinforcement learning allows agents to learn from interactions with the environment, making it well-suited for dynamic and uncertain domains. Reinforcement learning in artificial intelligence has the potential to create autonomous systems that continuously improve their performance without explicit human guidance.

There are also disadvantages to consider. One challenge is the balance between exploration and exploitation, as agents must trade off experimenting with novel actions against leveraging existing knowledge. Training deep reinforcement learning models requires substantial computational resources and careful tuning of hyperparameters. Even so, the advantages of reinforcement learning make it a promising approach to complex problem-solving in artificial intelligence, which justifies dedicated efforts to overcome these challenges.

What Is Reinforcement Learning?

Reinforcement learning, a subfield of machine learning, focuses on developing algorithms and techniques that enable an agent to learn optimal behaviors through interactions with its environment. The concept of reinforcement learning has been traced back to the earliest days of artificial intelligence (AI) when researchers sought to develop intelligent systems capable of learning and adapting.

The origins of reinforcement learning date back to the realms of cybernetics and control theory, where seminal contributions by visionaries like Norbert Wiener and Richard Bellman in the mid-20th century laid the foundation. However, it was in the 1980s that reinforcement learning began to emerge as a distinct field, thanks to the pioneering work of scholars like Christopher Watkins and Richard Sutton.

The integration of AI vision, the transformative potential of deep learning, and the accessibility of unprecedented computing resources have all contributed to the field’s remarkable growth since then. Such convergence has propelled reinforcement learning to achieve unprecedented breakthroughs across a wide spectrum of applications, from the development of game-playing agents that surpass human performance to the realization of autonomous robots and decision-making systems capable of operating in complex real-world environments.

How Does Reinforcement Learning Work?

Reinforcement learning is a paradigm within machine learning in which an agent learns to make decisions and take actions in an environment, with the goal of maximizing cumulative rewards. The process begins with the agent observing the current state of the environment and using this information to select an action. Once executed, the action triggers a transition to a new state, and the agent receives a reward or penalty that depends on the action’s outcome.

The agent’s fundamental aim is to acquire an optimal policy, one that maps states to actions so as to maximize expected long-term rewards. It pursues this through an iterative course of trial and error, constantly refining its policy based on observed rewards and action outcomes. By exploring various actions and experiencing their consequences, the agent gradually discerns which actions yield larger rewards in which states. As a result, the agent’s policy improves over time, and it becomes better at selecting actions that yield greater rewards.
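The observe, act, and receive-feedback cycle described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the `Corridor` environment, its reward values, and the two example policies are hypothetical and chosen only to keep the loop visible.

```python
import random


class Corridor:
    """Hypothetical toy environment: positions 0..4, with the goal at position 4."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position

    def step(self, action):
        # action is -1 (left) or +1 (right); the walls clamp the position.
        self.position = max(0, min(self.length - 1, self.position + action))
        done = self.position == self.length - 1
        reward = 1.0 if done else -0.1  # reward at the goal, small penalty otherwise
        return self.position, reward, done


def run_episode(env, policy, max_steps=100):
    """One pass of the loop: observe the state, act, receive a reward, repeat."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)
        state, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward


rng = random.Random(0)


def random_policy(state):
    return rng.choice([-1, 1])


def always_right(state):
    return 1


print(run_episode(Corridor(), random_policy))
print(run_episode(Corridor(), always_right))
```

A policy that always moves right collects the highest possible total here, while the random policy usually pays extra step penalties. Closing that gap is what learning is meant to do.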

An array of algorithms is employed in reinforcement learning, including value-based methods, policy gradient methods, and hybrid approaches that combine both strategies to update the agent’s policy. These algorithms leverage mathematical techniques like Bellman equations and gradient-based optimization to estimate the value of different states and actions and to improve the policy accordingly.
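For reference, the standard textbook forms of these techniques are written out below. The symbols are not defined in this article and are assumptions of the sketch: s and a are a state and action, r is the reward, s' is the next state, γ is a discount factor between 0 and 1, α is a learning rate, θ are the policy parameters, and G_t is the return observed from time step t.

```latex
% Bellman optimality equation for the action-value function
Q^*(s, a) = \mathbb{E}\left[ r + \gamma \max_{a'} Q^*(s', a') \,\middle|\, s, a \right]

% Value-based update (Q-learning), which moves Q toward the Bellman target
Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]

% Policy gradient update (REINFORCE form), a gradient ascent step on expected return
\theta \leftarrow \theta + \alpha \, G_t \, \nabla_\theta \log \pi_\theta(a_t \mid s_t)
```

Hybrid (actor-critic) approaches typically use a learned value estimate in place of G_t in the last update.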

At the core of reinforcement learning lies the notion that the agent learns through direct interaction with the environment, sidestepping the need for pre-annotated data. It excels in scenarios characterized by dynamic and intricate environments where direct supervision or expert knowledge remains limited. Reinforcement learning has demonstrated tremendous success in diverse domains such as game playing (e.g., AlphaGo), robotics, autonomous driving, and resource management. It showcases its remarkable aptitude for addressing real-world challenges and acquiring effective strategies through the iterative process of trial and error.

What are the Functions Found in Reinforcement Learning Systems?

Reinforcement learning systems consist of several key functions that work together to enable effective learning and decision-making. Firstly, the environment function represents the task or problem that the system interacts with. It provides feedback in the form of rewards or penalties based on the agent’s actions. The agent function is responsible for making decisions and taking actions in the environment. It learns from experiences and seeks to maximize long-term rewards through trial and error.

The policy function defines the agent’s behavior and determines the action to be taken in a given state. It maps states to actions and can be either deterministic or stochastic. The value function estimates the expected cumulative rewards associated with being in a particular state and following a specific policy. It guides the agent’s decision-making process by providing a measure of the desirability of different states or actions.
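To make the two kinds of policy and the idea of cumulative reward concrete, here is a small sketch. The state names, action names, probabilities, and the discount factor `gamma` are all hypothetical; discounting is one common way to define "cumulative reward" and is an assumption here, not something the text above specifies.

```python
import random

# Deterministic policy: each state maps to exactly one action.
deterministic_policy = {"start": "right", "middle": "right", "goal": "stay"}

# Stochastic policy: each state maps to a probability distribution over actions.
stochastic_policy = {
    "start": {"left": 0.2, "right": 0.8},
    "middle": {"left": 0.1, "right": 0.9},
}


def sample_action(policy, state, rng):
    """Draw an action from a stochastic policy's distribution for `state`."""
    actions = list(policy[state])
    weights = [policy[state][a] for a in actions]
    return rng.choices(actions, weights=weights, k=1)[0]


def discounted_return(rewards, gamma=0.9):
    """Cumulative reward with discounting: r0 + gamma*r1 + gamma^2*r2 + ..."""
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


rng = random.Random(0)
print(deterministic_policy["start"])
print(sample_action(stochastic_policy, "start", rng))
print(discounted_return([0.0, 0.0, 1.0]))
```

A value function is then an estimate of the expected `discounted_return` obtained by starting in a state and following a given policy.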

The model function captures the agent’s understanding of how the environment behaves. It is either explicit, representing the dynamics of the environment, or implicit, acquired through interactions. The model helps the agent simulate and plan future actions, which aids in learning and decision-making.
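As a sketch of how an explicit model supports planning, the example below runs value iteration on a tiny chain of states without ever touching a real environment. The four-state chain, its rewards, and the discount factor are hypothetical; value iteration is one standard planning method, used here for illustration rather than named by the text above.

```python
# Hypothetical explicit model of a 4-state chain:
# model[state][action] -> (next_state, reward). State 3 is terminal (the goal).
model = {
    0: {"left": (0, 0.0), "right": (1, 0.0)},
    1: {"left": (0, 0.0), "right": (2, 0.0)},
    2: {"left": (1, 0.0), "right": (3, 1.0)},
}
TERMINAL = 3


def value_iteration(model, gamma=0.9, sweeps=50):
    """Plan with the model alone by repeatedly backing up state values."""
    values = {state: 0.0 for state in model}
    values[TERMINAL] = 0.0  # nothing more can be earned after the goal
    for _ in range(sweeps):
        for state, actions in model.items():
            values[state] = max(
                reward + gamma * values[next_state]
                for next_state, reward in actions.values()
            )
    return values


def greedy_action(model, values, state, gamma=0.9):
    """Simulate each action with the model and pick the best-looking one."""
    return max(
        model[state],
        key=lambda a: model[state][a][1] + gamma * values[model[state][a][0]],
    )


values = value_iteration(model)
print(values)
print(greedy_action(model, values, 0))
```

An agent without such a model would have to discover the same values from sampled experience instead.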

Lastly, the learning algorithm is responsible for updating the agent’s policy or value function based on its experiences. It leverages the feedback from the environment to improve the agent’s performance over time. Reinforcement learning systems combine these functions to create a closed-loop process where the agent interacts with the environment, learns from feedback, and refines its decision-making capabilities.
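The closed loop of environment, agent, and learning algorithm can be sketched with tabular Q-learning, one common value-based method. Everything specific here is an assumption for illustration: the five-state corridor, the reward of 1.0 at the goal, and the hyperparameters `alpha`, `gamma`, and `epsilon`.

```python
import random

N_STATES = 5        # hypothetical corridor: states 0..4, goal at state 4
ACTIONS = [-1, 1]   # step left or step right


def step(state, action):
    """Environment: returns (next_state, reward, done)."""
    next_state = max(0, min(N_STATES - 1, state + action))
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def choose_action(q, state, epsilon, rng):
    """Agent: explore with probability epsilon, otherwise act greedily."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q[(state, a)] for a in ACTIONS)
    # Break ties at random so an untrained agent does not get stuck.
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(1000):  # safety cap on episode length
            action = choose_action(q, state, epsilon, rng)
            next_state, reward, done = step(state, action)
            # Learning algorithm: move the estimate toward the observed target.
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            target = reward + gamma * best_next
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
            if done:
                break
    return q


q = train()
greedy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
print(greedy)
```

After training, the greedy action in every non-goal state is to step right, which is the shortest route to the reward.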

What is the Importance of Reinforcement Learning?

Reinforcement learning plays a crucial role in various real-world applications due to its ability to enable intelligent decision-making and adaptability in dynamic environments. A key strength of reinforcement learning is its capability to optimize complex systems and tasks that are difficult to solve using traditional programming or rule-based approaches. By learning through trial and error in interaction with the environment, reinforcement learning algorithms discover strategies and policies that maximize long-term rewards.

Reinforcement learning, when applied to robotics, empowers autonomous agents to acquire and adapt their behavioral capabilities, thereby facilitating their adept navigation through intricate environments, execution of intricate tasks, and seamless interaction with both humans and objects. A noteworthy example resides in robots mastering the art of walking, manipulating objects, or even engaging in game-like scenarios, all through the refinement of their actions via the optimization of rewards and penalties.

Reinforcement learning in healthcare plays a pivotal role in fostering personalized treatment approaches and facilitating effective disease management. Leveraging medical data and clinical outcomes, reinforcement learning algorithms can help identify suitable treatment plans for individual patients. By optimizing treatment schedules, personalizing therapies, and determining suitable drug dosages, these algorithms contribute to enhanced patient outcomes and reduced medical costs, supporting better healthcare delivery.

Reinforcement learning finds application in finance, where it assists in algorithmic trading, portfolio management, and risk assessment. Reinforcement learning algorithms optimize trading strategies to maximize returns while managing risks by learning from historical market data and feedback signals.

Reinforcement learning in transportation and logistics enables efficient route planning, traffic optimization, and resource allocation. It allows autonomous vehicles to learn optimal driving behaviors, leading to reduced congestion, improved fuel efficiency, and safer transportation systems.

Reinforcement learning has proven valuable in gameplay, natural language processing, recommendation systems, and energy management, among other domains. Its ability to learn from interactions, adapt to changing circumstances, and discover novel solutions makes it a powerful tool for addressing complex problems in various real-world applications.

What are the basic components of a reinforcement learning system?

A reinforcement learning system consists of several fundamental components that work together to enable learning and decision-making processes. The first essential component is the agent, which is the entity responsible for interacting with the environment and making decisions. The agent receives input in the form of observations or states from the environment and takes actions based on a policy. The policy defines the agent’s strategy or behavior in selecting actions given specific states.

The second key component is the environment, which represents the external system or world in which the agent operates. The environment provides feedback to the agent in the form of rewards or penalties, indicating the desirability of its actions. These rewards serve as the basis for learning, guiding the agent to maximize cumulative rewards over time.

The environment is characterized by its state space, action space, and transition dynamics. The state space represents the possible conditions of the environment, whereas the action space represents the possible actions of the agent. The transition dynamics describe how the environment transitions from one state to another based on the agent’s actions.
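The three characteristics above map naturally onto a small data structure. The sketch below uses a hypothetical `GridWorld` with deterministic moves; real environments often have stochastic transitions, which this example leaves out for brevity.

```python
from dataclasses import dataclass
from typing import List, Tuple

State = Tuple[int, int]


@dataclass(frozen=True)
class GridWorld:
    """Hypothetical grid environment described by its three characteristics."""

    width: int
    height: int

    @property
    def state_space(self) -> List[State]:
        # Every (column, row) cell the agent can occupy.
        return [(x, y) for x in range(self.width) for y in range(self.height)]

    @property
    def action_space(self) -> List[str]:
        return ["up", "down", "left", "right"]

    def transition(self, state: State, action: str) -> State:
        """Deterministic transition dynamics: moves are clamped at the walls."""
        moves = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}
        dx, dy = moves[action]
        x = min(max(state[0] + dx, 0), self.width - 1)
        y = min(max(state[1] + dy, 0), self.height - 1)
        return (x, y)


world = GridWorld(width=3, height=2)
print(len(world.state_space))
print(world.transition((0, 0), "right"))
print(world.transition((0, 0), "up"))
```

Moving into a wall leaves the agent where it is, so the last call returns the starting cell.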

The third critical component is the reward function, which quantifies the desirability of the agent’s actions. It assigns a scalar value to each state-action pair, indicating the immediate feedback or consequences of the agent’s decisions. The goal of the agent is to learn a policy that maximizes the expected cumulative rewards over time.
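A reward function for the maze example from the introduction might look like the sketch below. The goal position, the obstacle position, and the numeric reward values are all hypothetical. The function also takes the resulting state as an argument; in a deterministic maze that state is fixed by the state-action pair, so this is only a convenience.

```python
GOAL = (2, 2)          # hypothetical goal cell
OBSTACLES = {(1, 1)}   # hypothetical obstacle cells


def reward(state, action, next_state):
    """Scalar feedback for one step (the values are illustrative)."""
    if next_state == GOAL:
        return 10.0   # reaching the goal
    if next_state in OBSTACLES:
        return -5.0   # hitting an obstacle
    return -1.0       # small cost per step, which favors short paths


def cumulative_reward(transitions):
    """Sum of the immediate rewards along a path of (state, action, next_state)."""
    return sum(reward(s, a, s2) for s, a, s2 in transitions)


path = [
    ((0, 0), "right", (1, 0)),
    ((1, 0), "right", (2, 0)),
    ((2, 0), "down", (2, 1)),
    ((2, 1), "down", (2, 2)),
]
print(cumulative_reward(path))
```

The per-step cost is a design choice: without it, a path of any length that reaches the goal would score the same.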

Lastly, the learning algorithm is the mechanism that enables the agent to update its policy based on the received rewards and observations. Reinforcement learning algorithms employ various techniques, such as value-based methods or policy gradient methods, to update the policy and improve decision-making.
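As one illustration of a policy gradient method, the sketch below applies a REINFORCE-style update to a softmax policy on a two-action task. The task, its rewards, the learning rate, and the step count are hypothetical, and the example omits refinements such as a baseline that practical implementations usually add.

```python
import math
import random

REWARDS = [0.0, 1.0]  # hypothetical two-action task: action 1 is the better one


def softmax(preferences):
    """Turn action preferences into probabilities."""
    peak = max(preferences)
    exps = [math.exp(p - peak) for p in preferences]
    total = sum(exps)
    return [e / total for e in exps]


def train(steps=500, learning_rate=0.1, seed=0):
    rng = random.Random(seed)
    preferences = [0.0, 0.0]  # policy parameters, one per action
    for _ in range(steps):
        probs = softmax(preferences)
        action = 0 if rng.random() < probs[0] else 1
        reward = REWARDS[action]
        # The gradient of log pi(action) with respect to each preference is
        # (1 - prob) for the chosen action and (-prob) for the others.
        for a in range(len(preferences)):
            grad = (1.0 if a == action else 0.0) - probs[a]
            preferences[a] += learning_rate * reward * grad
    return softmax(preferences)


print(train())
```

Unlike a value-based method, nothing here estimates how good each action is; the update shifts probability directly toward actions that were followed by reward.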

What are the Advantages of Reinforcement Learning?

Listed below are some Advantages of Reinforcement Learning.

- Flexibility: Flexibility is achieved by an iterative process of trial and error, in which the agent interacts with the environment, receives feedback in the form of rewards or penalties, and adapts its behaviors in accordance with the results of those interactions.

- Potential for Autonomous Systems: One of the most critical features of autonomous systems is the ability of machines to learn and make judgments without human intervention or programming.

- Adaptability to Dynamic Environments: Algorithms improve their decision-making abilities by learning from their past experiences and adapting to new circumstances. Algorithms based on reinforcement learning are made to respond to and learn from their surroundings in real-time.

- Continuous Improvement: Algorithms that use reinforcement learning adjust to new conditions, discover and capitalize on opportunities, and fine-tune their actions to improve performance.

- Learning from Interaction: Agents using reinforcement learning interact with their surroundings, take action, and learn from the results (rewards or punishments).

- Integration with Deep Learning: Reinforcement learning is complemented by deep learning, which learns complicated patterns and extracts high-level characteristics from raw input data to create an accurate approximation of the underlying function.

- Decision-Making in Sequential Tasks: Reinforcement learning is useful in decision-making tasks because it helps learners navigate complex environments, adjust to new conditions, and achieve optimal performance over time.

- Generalization: Reinforcement learning generalizes from observed states and actions to unseen ones by using the learning algorithm’s capacity to uncover underlying structures.

- End-to-End Learning: End-to-end learning is a valuable characteristic of reinforcement learning because it enables the direct mapping of sensory inputs to desired actions, removing the need for human feature engineering.

- Ability to Discover Novel Solutions: Reinforcement learning is able to develop novel solutions through exploration, which supports originality, adaptability, and the possibility of breakthroughs in sectors where creative problem-solving is necessary.

1. Flexibility

Flexibility is a pivotal advantage of reinforcement learning, giving agents the capacity to adapt and acquire knowledge in changing, dynamic environments. Reinforcement learning agents modify their behavior in response to feedback from the environment, which lets them balance exploring novel strategies against exploiting their existing knowledge to attain good outcomes. This flexibility is achieved through an iterative process of trial and error, in which the agent repeatedly engages with the environment, takes in the rewards or penalties conveyed through the feedback, and fine-tunes its actions accordingly.

Reinforcement learning agents effectively adapt to new situations, learn from experience, and optimize their decision-making processes by continuously updating their policies based on observed outcomes. Such flexibility is particularly beneficial in scenarios where the environment is uncertain, complex, or subject to changes over time. Reinforcement learning’s adaptability and flexibility make it well-suited for applications in domains such as robotics, autonomous systems, and dynamic resource allocation, where the ability to adjust strategies and respond to changing conditions is crucial for achieving desired outcomes.

2. Potential for Autonomous Systems

One of the significant advantages of reinforcement learning is its potential for developing autonomous systems. Reinforcement learning algorithms enable machines to learn and make decisions without explicit programming, which is a critical aspect of autonomous systems. These algorithms learn optimal strategies to achieve specific goals and tasks by using rewards and penalties as feedback signals. It allows autonomous systems to operate in complex and dynamic environments, making real-time decisions and adapting to changing conditions.

Reinforcement learning provides a framework for training agents to interact with the environment, learn from their experiences, and continuously improve their performance over time. Such capability opens up a range of possibilities for autonomous systems in various domains, such as self-driving cars, unmanned aerial vehicles, and robotic assistants. These systems navigate, plan, and execute tasks independently, leading to increased efficiency, safety, and reliability by leveraging reinforcement learning. The potential for autonomous systems powered by reinforcement learning is particularly exciting as it promises advancements in automation, robotics, and the overall integration of intelligent machines into individuals’ daily lives.

3. Adaptability to Dynamic Environments

One of the notable advantages of reinforcement learning is its adaptability to dynamic environments. Reinforcement learning algorithms are designed to interact with and learn from the environment in real-time. Such a process allows them to continuously adjust their behavior and strategies based on the feedback they receive. Reinforcement learning enables the agent to adapt and respond accordingly in dynamic environments where conditions, tasks, or goals change over time.

The algorithms update their knowledge and policies, ensuring that they make optimal decisions in the current environment by observing and evaluating the outcomes of their actions. Adaptability is particularly valuable in domains where the environment is unpredictable, uncertain, or subject to variations. Examples include robotics, where robots need to navigate through changing terrain or objects, or finance, where investment strategies need to adapt to evolving market conditions.

Reinforcement learning allows systems to learn and adjust their behavior autonomously, leading to improved performance and the ability to handle complex and dynamic scenarios effectively. The adaptability of reinforcement learning makes it a powerful tool for addressing real-world challenges and achieving optimal outcomes in dynamic environments.

4. Continuous Improvement

Continuous improvement is a crucial advantage of reinforcement learning, as it allows systems to iteratively enhance their performance over time. Reinforcement learning algorithms are designed to learn from experience and optimize their decision-making based on feedback received from the environment. The algorithms explore different actions, evaluate their outcomes, and update their policies to maximize rewards or minimize costs through a process of trial and error.

The iterative learning process enables continuous improvement as the algorithms fine-tune their strategies to achieve better performance with each interaction. Reinforcement learning algorithms adapt to changing circumstances, identify and exploit new opportunities, and refine their behaviors to achieve higher levels of efficiency and effectiveness. The ability to learn and grow on a continuous basis is especially beneficial in fields where the environment or tasks are dynamic, complicated, or prone to change.

Reinforcement learning facilitates continuous improvement by providing a framework for systems to optimize their decision-making processes and achieve increasingly better results over time. Such an advantage makes reinforcement learning a powerful tool for solving complex problems and driving ongoing performance enhancement in various domains, such as robotics, gaming, recommendation systems, and autonomous vehicles.

5. Learning from Interaction

The fundamental advantage of reinforcement learning lies in its ability to facilitate learning through interaction, allowing systems to acquire knowledge and enhance their performance through direct experiential engagement. Reinforcement learning agents actively engage with their environment, executing actions and receiving consequential feedback in the form of rewards or penalties. These agents discern the actions that yield favorable outcomes and those that must be avoided through iterative interactions with the environment, gradually refining their decision-making abilities and improving their overall performance.

Reinforcement learning systems utilize feedback received from interactions to update their policies and make informed decisions in subsequent instances. Such a process involves striking a delicate balance between exploration and exploitation. The exploration aspect involves the agent actively seeking out novel actions to gather new insights and uncover latent patterns within the environment. Exploitation entails leveraging the agent’s existing knowledge to maximize rewards and achieve desired outcomes. The ability to learn from interaction enables reinforcement learning systems to adapt to the unique characteristics of their environments, discover intricate patterns, and develop strategies that optimize long-term objectives.

The advantage is especially useful in situations where explicit rules or labeled training data are scarce or missing. Reinforcement learning allows systems to learn and develop based on their own experiences, making it well-suited for applications in robotics, gaming, control systems, and a variety of other domains where learning from interaction is critical for obtaining peak performance.

6. Integration with Deep Learning

Integration with deep learning is a notable advantage of reinforcement learning, as it allows for more powerful and effective decision-making systems. Deep learning, with its ability to learn complex patterns and extract high-level features from raw input data, complements reinforcement learning by providing sophisticated function approximators. Deep neural networks process large amounts of sensory input, such as images or sensor readings, and learn intricate representations of the environment.

Reinforcement learning algorithms use these representations to make decisions and optimize actions. Deep models can handle high-dimensional and continuous state spaces, which makes learning in such settings more precise and efficient. Reinforcement learning also integrates deep learning architectures such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs): CNNs strengthen the agent’s perception of visual input, while RNNs add memory and temporal modeling, enriching the overall learning process.

The benefits of such integration include improved performance, faster convergence, and the ability to handle complex and diverse tasks. The combination of reinforcement learning and deep learning has shown great promise in domains such as robotics, autonomous vehicles, and game-playing, where the integration of perception, decision-making, and control is crucial for achieving high-level performance.

7. Decision-Making in Sequential Tasks

An essential benefit of reinforcement learning is that it facilitates excellent decision-making in sequential tasks, allowing agents to adapt to novel circumstances. Models trained by reinforcement learning are meant to gain knowledge through trial and error. Reinforcement learning excels at finding the most effective strategies over time in sequential tasks where decisions have consequences that impact future states and rewards.

The reinforcement learning agent actively engages with its environment, executing actions and receiving consequential feedback in the form of rewards or penalties. The reinforcement learning algorithm incrementally refines its decision-making capabilities by discerning which actions lead to higher rewards through this iterative process. The adaptive approach enables reinforcement learning to effectively tackle intricate and protracted tasks characterized by uncertain outcomes. Reinforcement learning adeptly navigates complex environments, dynamically adjusts strategies based on feedback, and uncovers optimal policies for sequential decision-making problems by integrating long-term planning and accounting for the future ramifications of its actions.

Its merits in decision-making tasks encompass the capacity to cope with ever-changing circumstances, adapt to dynamic contexts, and optimize performance over extended time horizons. Such versatility positions reinforcement learning as a valuable asset across diverse domains, including robotics, finance, healthcare, and resource management, where the capacity to make sound decisions amid uncertainty and over extended time frames is indispensable for success.

8. Generalization

Generalization is a key advantage of reinforcement learning, allowing agents to transfer knowledge and skills learned in one context to similar but unseen situations. Reinforcement learning models strive to learn policies that are not only effective in the specific environments they are trained on but also applicable to new, similar environments. By generalizing from past experiences, reinforcement learning algorithms make informed decisions in novel situations based on the patterns and principles they have learned.

Generalization in reinforcement learning works by leveraging the ability of the learning algorithm to extract underlying structures and generalize them to unseen states and actions. It involves encoding information about the environment, the agent’s past interactions, and the resulting rewards into a general representation that captures essential features and relationships. Reinforcement learning algorithms then utilize the representation to make decisions in new contexts, even if they differ from the training environment.
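A very small sketch of this idea is a value function represented by a fitted line instead of a lookup table, so that it can produce an estimate for a state it never saw. The sample values, the learning rate, and the choice of a linear model are hypothetical; a linear fit is a deliberately simple stand-in for the richer representations used in practice.

```python
def fit_linear_value(samples, learning_rate=0.01, epochs=2000):
    """Fit V(s) ~ weight * s + bias with plain stochastic gradient descent."""
    weight, bias = 0.0, 0.0
    for _ in range(epochs):
        for state, target in samples:
            error = (weight * state + bias) - target
            weight -= learning_rate * error * state
            bias -= learning_rate * error
    return weight, bias


# Hypothetical value targets observed for states 0, 1, 2 and 4.
# State 3 was never visited during training.
samples = [(0, 0.0), (1, 0.25), (2, 0.5), (4, 1.0)]
weight, bias = fit_linear_value(samples)

unseen_state = 3
print(weight * unseen_state + bias)
```

A table of values would have no entry for state 3, whereas the fitted representation interpolates from the structure it found in the visited states. How well this carries over depends on how closely the new situation follows that structure.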

The benefits of reinforcement learning for generalization include the ability to adapt to new scenarios, handle variations and uncertainties in the environment, and achieve robust performance across different contexts. It enables reinforcement learning agents to be more versatile, efficient, and effective in real-world applications, where they encounter diverse and ever-changing conditions.

9. End-to-End Learning

End-to-end learning is an advantageous aspect of reinforcement learning that allows for the direct mapping of sensory inputs to desired actions, eliminating the need for manual feature engineering. In traditional machine learning approaches, feature extraction and representation play a crucial role in transforming raw input data into meaningful representations before they are fed into learning algorithms. End-to-end learning in reinforcement learning, by contrast, incorporates the complete learning process, from perception to action, into a single model. Such an approach enables the agent to learn directly from raw sensory inputs, such as images or sensor readings, without requiring explicit feature engineering.

End-to-end learning in reinforcement learning works by utilizing deep neural networks to capture complex relationships and extract relevant features automatically. The neural network takes the raw data as input and learns to map it to appropriate actions through multiple layers of computation. The benefits of end-to-end learning include the ability to handle high-dimensional and unstructured data, adapt to different environments, and discover useful representations for the task at hand. By eliminating the need for manual feature engineering, end-to-end learning simplifies the learning pipeline, reduces human effort, and potentially leads to more efficient and effective decision-making on complex tasks.

10. Ability to Discover Novel Solutions

The capacity to unearth novel solutions stands as a substantial advantage of reinforcement learning. Reinforcement learning algorithms venture into the environment, navigating a landscape of trial and error through a process of iterative exploration and exploitation. Such unfettered exploration empowers the agents to traverse uncharted territories, uncovering hitherto unseen pathways and innovative problem-solving approaches. Reinforcement learning agents have the autonomy to experiment and extract knowledge from their experiential encounters, fostering the emergence of unprecedented and advantageous solutions in stark contrast to conventional rule-based or pre-programmed systems. Such inherent capability for discovery renders reinforcement learning an invaluable paradigm for tackling intricate and multifaceted challenges, where conventional methods fall short in embracing the untapped potential that lies beyond the boundaries of established approaches.

Reinforcement learning works by interacting with the environment, receiving feedback in the form of rewards or penalties, and adjusting behavior based on the feedback to maximize long-term cumulative rewards. Through the iterative learning process, the agent explores different actions and strategies, leading to the discovery of unique approaches that are not explicitly programmed or known beforehand.

The ability to discover novel solutions is valuable in scenarios where traditional algorithms or human expertise fall short. It allows reinforcement learning to tackle complex problems, adapt to changing environments, and potentially find effective strategies that were previously undiscovered. The benefits of this ability include innovation, adaptability, and the potential for breakthroughs in various fields where creative problem-solving is required.

What are the Disadvantages of Reinforcement Learning?

Listed below are the Disadvantages of Reinforcement Learning.

- Sample Inefficiency: Reinforcement learning often requires a large number of interactions with the environment to learn optimal policies. It is time-consuming and computationally expensive, especially in complex environments, limiting its real-world applicability.

- Exploration-Exploitation Trade-off: Finding the right balance between exploration and exploitation is a challenge. Insufficient exploration leads to suboptimal policies, while excessive exploration wastes interactions on actions and states whose outcomes are already well known.

- Lack of Generalization: Reinforcement learning models typically learn in a specific environment and struggle to generalize their knowledge to different environments or tasks. Transfer learning and domain adaptation techniques are often required to address this limitation.

- High-dimensional State and Action Spaces: Reinforcement learning is challenging in environments with high-dimensional state and action spaces. The curse of dimensionality makes it difficult to explore and learn in such spaces effectively.

- Reward Engineering and Sparse Rewards: Designing appropriate reward functions is crucial in reinforcement learning. Defining reward functions that guide the agent toward the desired behavior is challenging for complex tasks. Sparse rewards, where rewards are only received at specific critical points, make learning more difficult.

- Sensitivity to Hyperparameters: Reinforcement learning algorithms often have several hyperparameters that need to be carefully tuned for optimal performance. Small changes in hyperparameter settings have a significant impact on learning outcomes, making it a time-consuming and non-trivial task.

- Ethical Considerations: Reinforcement learning algorithms learn policies that are optimal in terms of achieving rewards, but in some domains those policies may lead to unethical or undesired behavior. Ensuring that reinforcement learning agents adhere to ethical guidelines and constraints is an ongoing challenge.

- Safety and Risk: The exploratory nature of reinforcement learning can lead to unintended consequences or unsafe behavior in some situations. Ensuring safety and mitigating the risks associated with learning in real-world environments is an important consideration.

What are some common exploration strategies in reinforcement learning?

Listed below are some common exploration strategies in reinforcement learning.

- Epsilon-Greedy: The approach balances exploration and exploitation by selecting the action with the highest estimated value with a probability of (1 - epsilon) and a random action with a probability of epsilon. A larger epsilon means more exploration, and a smaller epsilon means more exploitation.

- Boltzmann Exploration: Also known as softmax exploration, this strategy assigns probabilities to actions based on their estimated values. Actions with higher values have higher probabilities, but all actions have non-zero probabilities, allowing for exploration.

- Upper Confidence Bound (UCB): UCB assigns exploration bonuses to actions based on their uncertainty or potential for high reward. It balances between selecting actions with high expected rewards and actions with high uncertainty.

- Thompson Sampling: This Bayesian approach maintains a posterior distribution over the reward of each action, draws one sample from each posterior, and selects the action whose sample is highest. Actions that are more likely to be the best are therefore selected more often.

- Optimistic Initialization: The agent starts with optimistically high initial estimates for all action values. Untried actions look attractive until the agent has tested them, which encourages exploration of actions with uncertain rewards.

- Monte Carlo Tree Search (MCTS): MCTS is commonly used in environments with large state spaces. It performs a tree search by simulating multiple possible trajectories and selecting actions that lead to unexplored or promising regions of the search space.

- Curiosity-Based Exploration: Curiosity in RL models was first suggested by Dr. Juergen Schmidhuber in 1991 and was implemented using a framework of curious neural controllers. The work explained how boredom and curiosity could drive a learning algorithm. It did so by rewarding activities that expanded the model network’s understanding of the world.

- Memory-Based Exploration: Memory-based exploration strategies were developed to address the shortcomings of exploration driven by intrinsic motivation and reward alone. In real-world scenarios, the rewards provided by the environment are often too sparse to guide learning. DeepMind’s Agent57, which set a new standard for Atari games, used episodic memory in its RL policy.

- Random Exploration: Such a strategy involves selecting actions completely at random. It is straightforward and, given enough time, tries every action, but it is inefficient at identifying good policies because it ignores what the agent has already learned.
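Three of the strategies above (epsilon-greedy, Boltzmann, and UCB) can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the function names, the `temperature` parameter, and the exploration constant `c` are assumptions chosen for the example, and `q_values` is assumed to be a plain list holding one estimated value per action.

```python
import math
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """With probability epsilon pick a random action, otherwise the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def softmax_probs(q_values, temperature=1.0):
    """Boltzmann probabilities; a higher temperature gives a more uniform choice."""
    highest = max(q_values)  # subtract the max for numerical stability
    exps = [math.exp((q - highest) / temperature) for q in q_values]
    total = sum(exps)
    return [e / total for e in exps]


def boltzmann(q_values, temperature=1.0, rng=random):
    """Sample an action in proportion to its Boltzmann probability."""
    probs = softmax_probs(q_values, temperature)
    return rng.choices(range(len(q_values)), weights=probs, k=1)[0]


def ucb(q_values, counts, t, c=2.0):
    """UCB-style choice: try each action once, then add a bonus that shrinks with visits."""
    for action, n in enumerate(counts):
        if n == 0:
            return action
    scores = [q + c * math.sqrt(math.log(t) / n) for q, n in zip(q_values, counts)]
    return max(range(len(scores)), key=lambda a: scores[a])
```

With `epsilon=0.0` the first function always returns the greedy action. In `ucb`, `counts` holds how often each action has been tried and `t` is the total number of steps so far, so rarely tried actions receive a larger bonus.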

What are the challenges associated with training deep reinforcement learning models?

Training deep reinforcement learning models poses several challenges that need to be addressed for effective learning and performance. Firstly, the high dimensionality of input spaces and the complexity of deep neural networks make training time-consuming and computationally demanding. The large number of parameters in deep models requires substantial amounts of data and significant computational resources to ensure convergence and prevent overfitting.

Secondly, deep reinforcement learning suffers from the problem of sample inefficiency. Deep models typically require a large number of interactions with the environment to learn optimal policies, which is time-consuming and impractical in real-world scenarios. Balancing the trade-off between exploration and exploitation becomes crucial to obtaining optimal rewards while avoiding getting stuck in suboptimal solutions.

Instability during training is a common challenge in deep reinforcement learning. The optimization process is susceptible to issues such as vanishing or exploding gradients, resulting in unstable learning dynamics and difficulty converging to optimal solutions. Techniques like experience replay, target networks, and appropriate parameter initialization help mitigate these instabilities.
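Two of the stabilizing techniques named above, experience replay and target networks, can be sketched as follows. This is a simplified illustration under stated assumptions: `ReplayBuffer`, `soft_update`, and the blending rate `tau` are hypothetical names for this example, and network parameters are represented as a flat list of floats rather than real neural network weights.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of past transitions, sampled at random to break correlations."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are evicted first

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size, rng=random):
        return rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def soft_update(target_params, online_params, tau=0.01):
    """Move the target parameters a small step toward the online parameters."""
    return [(1 - tau) * t + tau * o for t, o in zip(target_params, online_params)]
```

Sampling random minibatches from the buffer reduces the correlation between consecutive updates, and a slowly moving target keeps the learning target from shifting on every step. Some methods copy the online parameters into the target network every fixed number of steps instead of blending them.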

Lastly, the transferability and generalization of deep reinforcement learning models remain challenges. Models trained in one environment are unlikely to adapt readily to new environments and often require significant retraining or fine-tuning. Achieving robustness and generalization across diverse scenarios and variations in the environment remains an ongoing research area.

Addressing these challenges requires advancements in algorithmic techniques, computational resources, and data collection strategies. Overcoming these obstacles is going to pave the way for a more effective and widespread application of deep reinforcement learning in various domains.

How Does Reinforcement Learning Work with Deep Learning?

Combining reinforcement learning with deep learning is an effective strategy for solving difficult problems. Deep learning is an area of machine learning that uses multi-layered artificial neural networks, often called deep neural networks, to learn representations from data. In reinforcement learning, on the other hand, an agent learns to select behaviors that increase long-term cumulative rewards by interacting with an environment, receiving feedback in the form of rewards or penalties, and repeating the process.

The integration of reinforcement learning with deep learning, commonly referred to as deep reinforcement learning, capitalizes on the formidable capabilities of deep neural networks to approximate value functions or policy functions. Reinforcement learning algorithms gain the capacity to effectively navigate high-dimensional input spaces and acquire intricate representations by employing deep neural networks as function approximators. The fusion enables the joint utilization of deep learning’s ability to extract meaningful patterns from complex data and reinforcement learning’s capacity to learn through iterative trial and error. Deep reinforcement learning facilitates the development of intelligent systems that possess the prowess to comprehend, reason, and act proficiently in intricate environments, paving the way for solving complex challenges in the realm of artificial intelligence and beyond.

Deep reinforcement learning uses deep neural networks as function approximators to estimate the value or policy functions at the core of reinforcement learning. These networks take observations from the environment as input and output estimates of the expected value or action probabilities. Because deep neural networks handle complex, multi-dimensional data well, the agent is able to make finer-grained decisions in environments that are too large for methods that store a separate value for every state.

The combination of reinforcement learning and deep learning has shown remarkable successes in various domains, including game playing, robotics, and natural language processing. Deep reinforcement learning is able to learn directly from raw sensory input, which reduces the need for explicit feature engineering because the deep neural network learns its own representations. Such a process enables the agent to learn complex strategies and behaviors in an end-to-end manner.

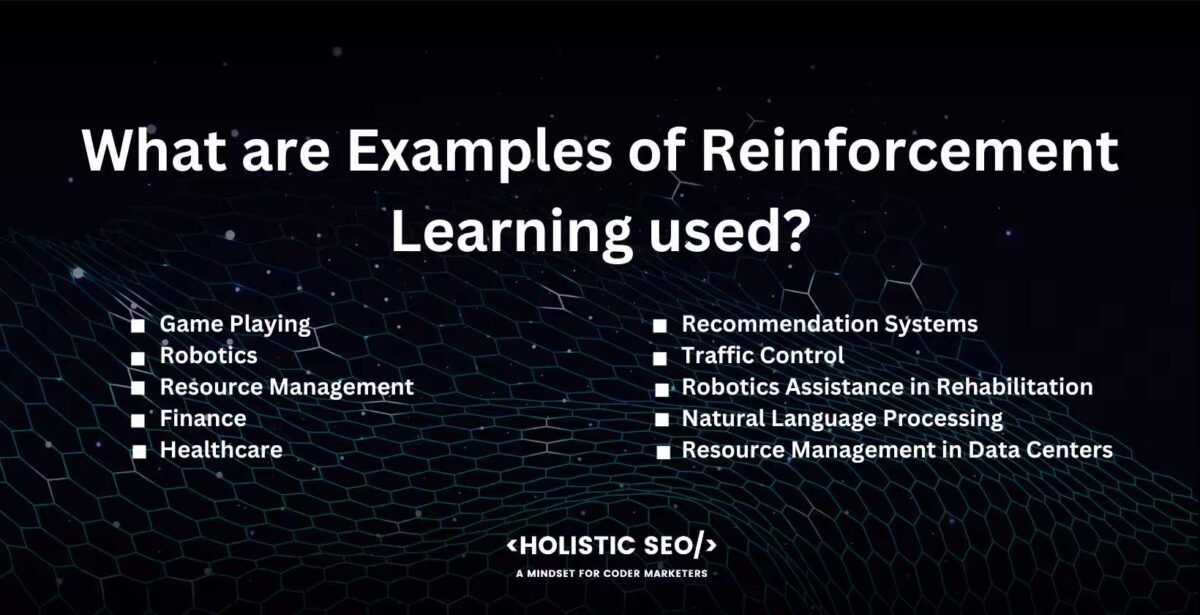

What are Examples of How Reinforcement Learning Is Used?

Listed below are several examples of how reinforcement learning is used.

- Game Playing: Reinforcement learning has been employed in training agents to play complex games such as Go and Dota 2, as demonstrated by AlphaGo and OpenAI Five. These agents learn strategies and make decisions through trial and error, eventually matching or surpassing top human players.

- Robotics: Reinforcement learning enables robots to learn and adapt to their environments. Robots use reinforcement learning to navigate and perform tasks in dynamic and uncertain environments, such as grasping objects, locomotion, or autonomous driving.

- Resource Management: Reinforcement learning is utilized in optimizing resource allocation and management. For instance, reinforcement learning optimizes power generation and distribution in energy systems, leading to more efficient utilization of resources.

- Finance: Reinforcement learning is applied in algorithmic trading, where agents learn to make trading decisions based on market data and maximize profits. It is used in portfolio management, risk assessment, and fraud detection.

- Healthcare: Reinforcement learning has potential applications in personalized medicine and treatment planning. Agents learn optimal treatment strategies based on patient data and medical guidelines, leading to more effective and tailored interventions.

- Recommendation Systems: Reinforcement learning is used to improve recommendation algorithms on e-commerce platforms and content streaming services. Agents learn user preferences and behaviors to provide personalized recommendations, enhancing user satisfaction and engagement.

- Traffic Control: Reinforcement Learning optimizes traffic signal timings to reduce congestion and improve traffic flow. Agents learn from real-time traffic data and make decisions to minimize travel time and delays.

- Robotics Assistance in Rehabilitation: Reinforcement learning is employed in developing robotic systems that assist in physical rehabilitation. These robots learn to provide tailored exercises and assistance based on the patient’s progress and needs.

- Natural Language Processing: Reinforcement learning is used in developing conversational agents and chatbots. Agents learn to understand and generate human-like responses, enhancing the user experience in human-computer interactions.

- Resource Management in Data Centers: Reinforcement learning algorithms optimize resource allocation in data centers, such as computing resources and cooling systems, to improve energy efficiency and reduce operational costs.

How Can Computer Vision with AI Be Used in Healthcare?

The integration of AI into computer vision has game-changing implications for the medical field, ushering in a new era of precision medicine and patient care. Medical imaging analysis software benefits from the capabilities of computer vision algorithms and artificial intelligence approaches to improve patient outcomes and streamline healthcare delivery.

Computer vision combined with AI has found critical applications in the processing of medical images. Computer vision systems powered by artificial intelligence examine radiological images, including X-rays, CT scans, and MRIs, to help radiologists spot cancers and other abnormalities. Computer vision improves diagnostic accuracy, speeds up the detection process, and supports earlier intervention by automating image interpretation and giving accurate insights.

Computer vision with AI is even employed in surgical procedures. Surgical robots equipped with computer vision capabilities assist surgeons by providing real-time visual feedback, enhancing precision, and enabling minimally invasive techniques. These systems track surgical instruments, navigate complex anatomical structures, and offer augmented reality guidance, ultimately improving surgical outcomes and patient safety.

Computer vision technology plays a pivotal role in the realm of remote patient monitoring and telemedicine. Video streams are meticulously analyzed to monitor patients’ vital signs, promptly identify instances of falls or other emergencies through the utilization of AI-powered systems, and effectively notify healthcare providers when immediate intervention is warranted. Such an amalgamation of computer vision and AI empowers healthcare professionals to administer proactive and timely care, thereby significantly benefiting patients residing in remote or underserved areas.

The integration of cutting-edge AI algorithms, including deep learning and image recognition, has catapulted the capabilities of computer vision within the healthcare landscape. These algorithms possess the capacity to acquire knowledge from extensive datasets, discern intricate patterns within medical imagery, and provide highly accurate assessments, consequently fostering heightened efficiency and precision in the realms of diagnosis and treatment planning.

Medical professionals have successfully used AI in healthcare to improve diagnostic accuracy, streamline patient care, and enhance treatment outcomes.

How Can Computer Vision with AI Be Used in Transportation?

Advanced systems for driverless vehicles, traffic control, and driver support are just some of the ways in which AI-powered computer vision is being utilized in the transportation sector. Computer vision algorithms and artificial intelligence approaches are used in transportation systems to evaluate visual data from cameras, sensors, and other sources to enhance safety, efficiency, and decision-making.

The fusion of computer vision and AI is most prominent in autonomous vehicles. Computer vision systems powered by AI algorithms let vehicles perceive and interpret their immediate surroundings. They detect and classify a diverse array of objects, ranging from pedestrians and cyclists to other vehicles, which informs decisions about navigation and collision avoidance. Self-driving cars are increasingly able to handle intricate traffic scenarios, with the aim of improving road safety and making transportation more streamlined, effective, and convenient.

Computer vision is employed in traffic management and monitoring. AI-powered computer vision systems detect traffic congestion, monitor traffic flow, and identify unusual events, such as accidents or road hazards, by analyzing real-time video feeds from traffic cameras. The information is used to optimize traffic signal timing, suggest alternative routes, and improve overall traffic management strategies, leading to reduced congestion and enhanced efficiency in transportation networks.

Some car makers use AI in transportation, particularly in driver assistance systems. These systems employ computer vision algorithms to analyze video data from onboard cameras and provide real-time alerts and warnings to drivers about potential hazards, lane departures, or collision risks. These systems contribute to increased safety on the roads by enhancing driver situational awareness.

AI techniques such as deep learning and object detection algorithms have significantly enhanced the accuracy and performance of computer vision-based transportation systems. These algorithms learn from large datasets, recognize and track objects in real-time, and adapt to varying environmental conditions, making them invaluable tools for enhancing transportation safety, efficiency, and the overall user experience.

How Can Computer Vision with AI Be Used in Cyber Security?

Computer vision with AI plays a crucial role in enhancing cybersecurity measures by providing advanced capabilities for threat detection, anomaly detection, and behavior analysis. Cybersecurity systems analyze visual data to identify potential security breaches, unauthorized access, and malicious activities by leveraging computer vision algorithms and techniques.

One application of computer vision in cybersecurity is in the field of video surveillance. AI-powered computer vision systems analyze video footage in real-time to detect suspicious behavior or identify individuals based on facial recognition. It enables the proactive monitoring of physical spaces, such as office premises or public areas, and the rapid response to potential security threats.

Computer vision with AI is also used in cybersecurity for image and document analysis. AI algorithms analyze images, screenshots, or scanned documents to detect hidden information, sensitive data, or malicious content embedded within them. Such a process is beneficial in identifying steganography, where information is concealed within seemingly innocuous images or files.

Computer vision with AI is utilized for network traffic analysis. Cybersecurity systems have the ability to detect anomalies or unusual behaviors that indicate a cyber attack or intrusion attempt by analyzing network traffic patterns and visualizing data flows. It allows for the timely identification and response to potential threats, enhancing the overall security posture of the network.

The expansion of AI techniques, such as deep learning and convolutional neural networks, has remarkably improved the accuracy and effectiveness of computer vision-based cybersecurity systems. These algorithms learn from large datasets, recognize complex patterns, and adapt to evolving attack techniques, making them valuable tools in combating emerging cyber threats.

How Can Computer Vision with AI Be Used in Robots?

The integration of computer vision with AI has found profound applications in the realm of robotics, affording robots the capacity to comprehend and interpret the visual aspects of their environment. Robots become adept at processing and analyzing visual data, thereby facilitating their seamless execution of a diverse spectrum of tasks with remarkable precision and efficacy by assimilating advanced computer vision algorithms and techniques. The amalgamation of AI and computer vision has revolutionized various industries, including manufacturing, healthcare, and logistics, where robots, equipped with sophisticated AI algorithms, assume roles in undertaking intricate tasks, optimizing operational efficiency, and fostering harmonious collaboration between humans and robots.

Computer vision enables robots to recognize objects, people, and their attributes, facilitating tasks such as object manipulation, navigation, and human-robot interaction. For instance, robots equipped with computer vision are able to identify and pick up specific objects in a cluttered environment, enabling them to perform tasks like sorting items in a warehouse or assisting in assembly processes.

Computer vision allows robots to navigate and map their surroundings. Robots detect obstacles, determine their relative positions, and plan optimal paths to reach their destinations by analyzing visual data from cameras or depth sensors. Such capability is crucial in various applications, such as autonomous vehicles, drones, and mobile robots used in logistics and transportation.

Computer vision plays a vital role in human-robot interaction. Robots recognize and interpret human gestures, facial expressions, and body language, enabling natural and intuitive communication between humans and machines. It has applications in fields like healthcare, where robots understand and respond appropriately to human gestures or expressions of pain or discomfort.

Computer vision enables robots to perform tasks that require visual inspection or quality control. For example, robots use computer vision to detect defects, measure dimensions, or identify inconsistencies in products, ensuring high-quality production in manufacturing. Businesses use AI in robotics to optimize their operations, increase productivity, and drive innovation. Advances in deep learning and convolutional neural networks have driven the expansion of computer vision with AI. These techniques enable robots to learn from large datasets, improving their accuracy and robustness in tasks like object recognition and image classification.

What are Different Types of Reinforcement Learning?

Listed below are different types of Reinforcement Learning.

- Value-Based Reinforcement Learning: This type of algorithm learns to estimate the value function, which represents the expected long-term return from a particular state or state-action pair, and derives its policy from those estimates. Examples include Q-learning and SARSA.

- Policy-Based Reinforcement Learning: Policy-based algorithms directly learn a policy that maps states to actions without explicitly estimating the value function. They aim to find the optimal policy by optimizing a parameterized policy representation. Examples include REINFORCE and Proximal Policy Optimization (PPO).

- Model-Based Reinforcement Learning: Model-based algorithms learn a model of the environment’s dynamics, including transition probabilities and reward functions. They use such a learned model to plan and make decisions. Model-based algorithms are often combined with value-based or policy-based methods. Planning techniques used with a model include Monte Carlo Tree Search (MCTS) and Model Predictive Control (MPC).

- Actor-Critic Reinforcement Learning: Actor-critic algorithms combine elements of both value-based and policy-based methods. They maintain both an actor network that represents the policy and a critic network that estimates the value function. The actor network guides action selection, while the critic network provides feedback on the quality of the selected actions. Examples include Advantage Actor-Critic (A2C) and Asynchronous Advantage Actor-Critic (A3C).

- Multi-Agent Reinforcement Learning: Multi-agent reinforcement learning deals with scenarios where multiple agents interact with each other and the environment. It involves learning individual policies for each agent while considering the behavior and strategies of other agents. Examples include Independent Q-Learning and Minimax-Q.

- Hierarchical Reinforcement Learning: Hierarchical RL aims to learn and exploit hierarchies or structures in tasks. It involves decomposing complex tasks into subtasks and learning policies at different levels of abstraction. Examples include Option-Critic Architecture and H-DQN.

- Imitation Learning: Imitation learning, sometimes known as learning from demonstrations, involves learning policies by imitating expert demonstrations. Behavioral Cloning uses supervised learning techniques to learn from state-action data provided by human experts, while Inverse Reinforcement Learning infers the reward function that the expert appears to be optimizing.

- Meta-Reinforcement Learning: Meta-RL focuses on learning to learn or adapting to new tasks quickly. It aims to develop algorithms that acquire knowledge or prior experience across a set of related tasks, enabling efficient learning of new, unseen tasks. Examples include Model-Agnostic Meta-Learning (MAML) and Reptile.
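To make the value-based entry in the list above concrete, the update rules of Q-learning and SARSA can be sketched for a small tabular problem. This is an illustrative sketch: the table `q` is assumed to be a list of lists indexed as `q[state][action]`, and the learning rate `alpha` and discount factor `gamma` are assumed hyperparameters not named in the text.

```python
def q_learning_update(q, s, a, r, s_next, alpha=0.1, gamma=0.99):
    """Off-policy update: bootstrap from the best action in the next state."""
    target = r + gamma * max(q[s_next])
    q[s][a] += alpha * (target - q[s][a])


def sarsa_update(q, s, a, r, s_next, a_next, alpha=0.1, gamma=0.99):
    """On-policy update: bootstrap from the action actually taken next."""
    target = r + gamma * q[s_next][a_next]
    q[s][a] += alpha * (target - q[s][a])
```

The only difference between the two is the bootstrap term: Q-learning uses the maximum value over next actions, while SARSA uses the value of the action the agent actually selects next, so SARSA's estimates reflect the exploration behavior of the current policy.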

How can function approximation techniques be used in reinforcement learning?

Function approximation techniques play a crucial role in reinforcement learning by enabling the efficient representation and approximation of value functions or policies. The goal of reinforcement learning is to learn an optimal policy or value function that maximizes the long-term expected reward. However, it becomes computationally infeasible to explicitly represent and store values for every state or state-action pair in complex environments with large state spaces. Function approximation techniques address such a challenge by approximating the value function or policy using a compact and generalizable representation.

One common approach is to use function approximators, such as artificial neural networks or decision trees, which are capable of capturing complex relationships between inputs (states or state-action pairs) and corresponding values. These function approximators learn from observed data and generalize their predictions to unseen states or actions. Reinforcement learning algorithms efficiently estimate values or policies for states that have not been encountered during training by leveraging function approximation techniques.

Function approximation techniques enable generalization across similar states, reducing the need for extensive exploration in the state space. It improves the learning efficiency of reinforcement learning algorithms, allowing them to converge to optimal or near-optimal solutions more quickly.

Function approximation techniques are often combined with reinforcement learning algorithms such as Q-learning or policy gradients to enhance their performance. These techniques facilitate the scalability and applicability of reinforcement learning in real-world problems with large state spaces and continuous domains.
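As a small illustration of the idea, the sketch below replaces a value table with a linear function of state features and adjusts the weights with a temporal-difference update. It is a sketch under stated assumptions: the feature vectors, the function names, and the values of `alpha` and `gamma` are hypothetical, and a linear model stands in for the neural networks or decision trees mentioned above.

```python
def predict(weights, features):
    """Estimated value of a state: the dot product of weights and features."""
    return sum(w * x for w, x in zip(weights, features))


def td0_update(weights, features, reward, next_features,
               alpha=0.1, gamma=0.9, done=False):
    """One semi-gradient TD(0) step for a linear value function."""
    next_value = 0.0 if done else predict(weights, next_features)
    td_error = reward + gamma * next_value - predict(weights, features)
    # For a linear model, the gradient of the prediction w.r.t. the weights
    # is simply the feature vector.
    return [w + alpha * td_error * x for w, x in zip(weights, features)]
```

Because states that share features also share weights, one update changes the estimate for every similar state, including states the agent has not visited. That shared structure is the generalization described in the paragraphs above.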

What is the difference between Reinforcement learning and supervised learning?

Supervised learning and reinforcement learning are two of the main paradigms in machine learning. In supervised learning, a model is trained on a labeled dataset in which each data item is paired with the desired output. The model learns to map input features to the correct output by generalizing from the annotated examples, producing predictions that are as close as possible to the true labels in the training data. Classification, regression, and pattern recognition are all examples of supervised learning applications, in which the goal is an accurate mapping function for predicting unseen instances based on the training examples.

Reinforcement learning, on the other hand, operates in an interactive environment where an agent learns to make sequential decisions to maximize a long-term reward signal. The agent explores the environment by taking actions, receives feedback in the form of rewards or penalties, and adjusts its actions based on the received feedback to optimize its performance over time. Unlike supervised learning, reinforcement learning does not have labeled examples to guide the learning process. Instead, the agent learns through trial and error, exploring different actions and evaluating their consequences. The objective of reinforcement learning is to learn a policy or strategy that maximizes the cumulative reward obtained from the environment.
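The contrast between the two objectives can be written compactly. The notation here is an assumption added for illustration and does not come from the text above: $f_\theta$ is a supervised model with parameters $\theta$, $\ell$ is a loss function, $\mathcal{D}$ is the labeled dataset, $\pi$ is a policy, $r_t$ is the reward at step $t$, and $\gamma \in [0, 1)$ is a discount factor.

```latex
\text{Supervised learning:}\quad
\min_{\theta}\; \mathbb{E}_{(x, y) \sim \mathcal{D}}
\big[\, \ell\big(f_\theta(x),\, y\big) \big]
\qquad
\text{Reinforcement learning:}\quad
\max_{\pi}\; \mathbb{E}_{\pi}
\Big[\, \sum_{t=0}^{\infty} \gamma^{t}\, r_t \Big]
```

In the supervised objective the correct output $y$ is given for every input, whereas in the reinforcement learning objective the agent only observes rewards for the actions it actually takes.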
