NLTK Stemming is the process of reducing the morphological variations of a word to a common root form with NLTK. Stemming is a part of linguistic morphology and information retrieval. The stem that a stemmer produces does not have to be identical to the morphological root of the word; it is enough that related words map to the same stem. Stemming algorithms and stemming technologies are called stemmers. Natural Language Tool Kit has a built-in stemming algorithm called “PorterStemmer”. The “PorterStemmer” of NLTK implements the algorithm of the computational linguist Martin Porter. Some stemming examples can be found below.

- For the word Flying, “ing” is the suffix. The original stem word is Fly.

- The words beauty and duty are the stem words for beautiful and dutiful.

- The words able and possible are the stem words for ability and possibility.

- The words Waits, Waited, and Waiting are inflectional forms of the word Wait.
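The inflection example in the list above can be checked with a minimal sketch, assuming NLTK is installed. The variable names are illustrative. The second loop only prints its results, because a stem is not guaranteed to be a dictionary word.

```python
from nltk.stem import PorterStemmer

stemmer = PorterStemmer()

# Inflected forms of "wait" from the list above
wait_forms = ["Waits", "Waited", "Waiting"]
wait_stems = [stemmer.stem(word) for word in wait_forms]
print(wait_stems)  # ['wait', 'wait', 'wait']

# Other words from the list; the stems are printed rather than assumed
for word in ["Flying", "beautiful", "dutiful", "possibility"]:
    print(word, "->", stemmer.stem(word))
```

The PorterStemmer lowercases its input by default, which is why the capitalized forms all end up as the lowercase stem.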

NLTK Stemming is beneficial for cleaning textual data before developing a Natural Language Processing algorithm. NLTK Lemmatization and NLTK Stemming are usually applied after Word and Sentence Tokenization with NLTK to normalize the tokens. Besides the NLTK PorterStemmer, there are other stemming algorithms within NLTK, such as SnowballStemmer or RegexpStemmer. Different stemmers within NLTK focus on different languages or different rule sets. For instance, the RegexpStemmer is a rule-based stemmer that strips whatever a user-supplied regex matches, while PorterStemmer is the standard stemmer of NLTK.

In this NLTK Stemming tutorial and guideline, stemming functions, parameters, visualizations, and examples are demonstrated.

How to Perform Stemming with NLTK?

To perform stemming with NLTK (Natural Language Tool Kit), the “PorterStemmer” from “nltk.stem” should be imported into the Python script. The text is tokenized first, and stemming is then applied to each token, so the stemmed tokens can differ from the original tokens. Below is an example of NLTK Stemming as a Python script.

from nltk.stem import PorterStemmer

from nltk.tokenize import word_tokenize

import pandas as pd

ps = PorterStemmer()

text = """Hebrew and Arabic are still considered difficult research languages for stemming. English stemmers are fairly trivial (with only occasional problems, such as "dries" being the third-person singular present form of the verb "dry", "axes" being the plural of "axe" as well as "axis"); but stemmers become harder to design as the morphology, orthography, and character encoding of the target language becomes more complex. For example, an Italian stemmer is more complex than an English one (because of a greater number of verb inflections), a Russian one is more complex (more noun declensions), a Hebrew one is even more complex (due to nonconcatenative morphology, a writing system without vowels, and the requirement of prefix stripping: Hebrew stems can be two, three or four characters, but not more), and so on."""

tokens = word_tokenize(text)

print(tokens)

stemmed = []

for token in tokens:

    stemmed_word = ps.stem(token)

    stemmed.append(stemmed_word)

print(stemmed)

df = pd.DataFrame(data={"tokens":tokens, "stemmed": stemmed})

OUTPUT >>>

['Hebrew', 'and', 'Arabic', 'are', 'still', 'considered', 'difficult', 'research', 'languages', 'for', 'stemming', '.', 'English', 'stemmers', 'are', 'fairly', 'trivial', '(', 'with', 'only', 'occasional', 'problems', ',', 'such', 'as', '``', 'dries', "''", 'being', 'the', 'third-person', 'singular', 'present', 'form', 'of', 'the', 'verb', '``', 'dry', "''", ',', '``', 'axes', "''", 'being', 'the', 'plural', 'of', '``', 'axe', "''", 'as', 'well', 'as', '``', 'axis', "''", ')', ';', 'but', 'stemmers', 'become', 'harder', 'to', 'design', 'as', 'the', 'morphology', ',', 'orthography', ',', 'and', 'character', 'encoding', 'of', 'the', 'target', 'language', 'becomes', 'more', 'complex', '.', 'For', 'example', ',', 'an', 'Italian', 'stemmer', 'is', 'more', 'complex', 'than', 'an', 'English', 'one', '(', 'because', 'of', 'a', 'greater', 'number', 'of', 'verb', 'inflections', ')', ',', 'a', 'Russian', 'one', 'is', 'more', 'complex', '(', 'more', 'noun', 'declensions', ')', ',', 'a', 'Hebrew', 'one', 'is', 'even', 'more', 'complex', '(', 'due', 'to', 'nonconcatenative', 'morphology', ',', 'a', 'writing', 'system', 'without', 'vowels', ',', 'and', 'the', 'requirement', 'of', 'prefix', 'stripping', ':', 'Hebrew', 'stems', 'can', 'be', 'two', ',', 'three', 'or', 'four', 'characters', ',', 'but', 'not', 'more', ')', ',', 'and', 'so', 'on', '.']

['hebrew', 'and', 'arab', 'are', 'still', 'consid', 'difficult', 'research', 'languag', 'for', 'stem', '.', 'english', 'stemmer', 'are', 'fairli', 'trivial', '(', 'with', 'onli', 'occasion', 'problem', ',', 'such', 'as', '``', 'dri', "''", 'be', 'the', 'third-person', 'singular', 'present', 'form', 'of', 'the', 'verb', '``', 'dri', "''", ',', '``', 'axe', "''", 'be', 'the', 'plural', 'of', '``', 'axe', "''", 'as', 'well', 'as', '``', 'axi', "''", ')', ';', 'but', 'stemmer', 'becom', 'harder', 'to', 'design', 'as', 'the', 'morpholog', ',', 'orthographi', ',', 'and', 'charact', 'encod', 'of', 'the', 'target', 'languag', 'becom', 'more', 'complex', '.', 'for', 'exampl', ',', 'an', 'italian', 'stemmer', 'is', 'more', 'complex', 'than', 'an', 'english', 'one', '(', 'becaus', 'of', 'a', 'greater', 'number', 'of', 'verb', 'inflect', ')', ',', 'a', 'russian', 'one', 'is', 'more', 'complex', '(', 'more', 'noun', 'declens', ')', ',', 'a', 'hebrew', 'one', 'is', 'even', 'more', 'complex', '(', 'due', 'to', 'nonconcaten', 'morpholog', ',', 'a', 'write', 'system', 'without', 'vowel', ',', 'and', 'the', 'requir', 'of', 'prefix', 'strip', ':', 'hebrew', 'stem', 'can', 'be', 'two', ',', 'three', 'or', 'four', 'charact', ',', 'but', 'not', 'more', ')', ',', 'and', 'so', 'on', '.']

The explanation of the NLTK Stemmer code block above can be found as follows.

- First, import “PorterStemmer” from “nltk.stem”.

- Second, import “word_tokenize” from “nltk.tokenize”.

- Third, import Pandas as “pd”.

- Fourth, create a variable and assign “PorterStemmer()” to it.

- Take a text for stemming and assign it to the “text” variable.

- Tokenize the example text with “word_tokenize” to get the tokens.

- Print the tokens.

- Create an empty list for appending the stemmed tokens.

- Use a for loop to stem every token that the “word_tokenize” function returned.

- Print the words that are stemmed with the NLTK “PorterStemmer()”.

- Create a data frame with the tokenized words and the stemmed words to compare them to each other.

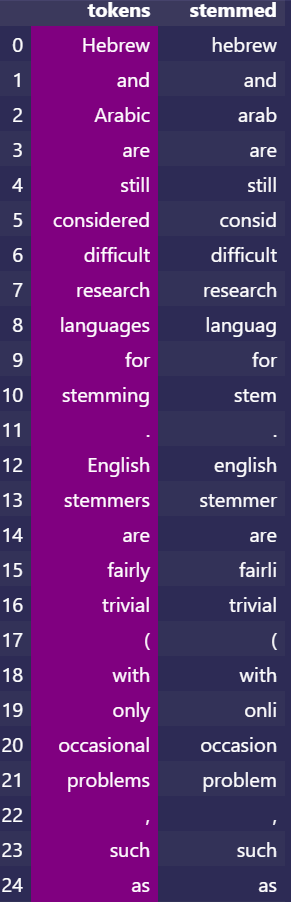

Below, you will see the output of the NLTK Stemming as a table that pairs each original token with its stemmed form.

| | tokens | stemmed |
| --- | --- | --- |
| 0 | Hebrew | hebrew |
| 1 | and | and |
| 2 | Arabic | arab |
| 3 | are | are |
| 4 | still | still |
| 5 | considered | consid |
| 6 | difficult | difficult |
| 7 | research | research |
| 8 | languages | languag |
| 9 | for | for |
| 10 | stemming | stem |
| 11 | . | . |
| 12 | English | english |
| 13 | stemmers | stemmer |
| 14 | are | are |
| 15 | fairly | fairli |
| 16 | trivial | trivial |
| 17 | ( | ( |
| 18 | with | with |
| 19 | only | onli |
| 20 | occasional | occasion |
| 21 | problems | problem |
| 22 | , | , |
| 23 | such | such |
| 24 | as | as |
| 25 | \`\` | \`\` |
| 26 | dries | dri |
| 27 | '' | '' |
| 28 | being | be |
| 29 | the | the |
| 30 | third-person | third-person |
| 31 | singular | singular |
| 32 | present | present |
| 33 | form | form |
| 34 | of | of |
| 35 | the | the |
| 36 | verb | verb |
| 37 | \`\` | \`\` |
| 38 | dry | dri |
| 39 | '' | '' |
| 40 | , | , |
| 41 | \`\` | \`\` |
| 42 | axes | axe |
| 43 | '' | '' |
| 44 | being | be |
| 45 | the | the |
| 46 | plural | plural |
| 47 | of | of |
| 48 | \`\` | \`\` |
| 49 | axe | axe |

What are the benefits of the NLTK Stemming Algorithms?

The benefits of the NLTK Stemming algorithms are listed below.

- Removing the affixes from the words.

- Helping to understand the role of the word within the sentence.

- Helping to understand the tense of the sentence.

- Helping to see the derivational morphology of words.

- Making irregular word forms similar to each other for better text understanding.

What modules exist within NLTK for Stemming?

The modules that can be used for stemming with NLTK are listed below.

- nltk.stem.regexp module

- nltk.stem.rslp module

- nltk.stem.snowball module

- nltk.stem.util module

- nltk.stem.wordnet module

- nltk.stem.api module

- nltk.stem.arlstem module

- nltk.stem.arlstem2 module

- nltk.stem.cistem module

- nltk.stem.isri module

- nltk.stem.lancaster module

- nltk.stem.porter module

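Different types of NLTK Stemmers exist with different algorithms for different purposes. Not every module in the list is a stemmer: “nltk.stem.api” defines the common stemmer interface, “nltk.stem.util” holds helper functions, and “nltk.stem.wordnet” provides the WordNet lemmatizer. The explanations, descriptions, and examples of several NLTK Stemmers can be found in the next sections.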

How to perform a Regex Stemming with NLTK?

To perform a Regex Stemming with NLTK, the “RegexpStemmer” should be used. Regex Stemming with NLTK is an example of rule-based stemming: the stemmer removes the part of a word that matches a regular expression supplied by the user. In this way, only the chosen suffixes are stripped. Example usage of the RegexpStemmer can be found below.

from nltk.stem import RegexpStemmer

st = RegexpStemmer('ing$|s$|ed$|able$', min=4)

regex_stemming_words = ["running", "runs", "runed", "workable"]

for word in regex_stemming_words:

    print(st.stem(word))

OUTPUT>>>

runn

run

run

work

The explanation of the NLTK Stemming example with Regex rules can be found below.

- Import “RegexpStemmer” from “nltk.stem”.

- Create a “RegexpStemmer” instance and assign it to a variable, which is “st” here.

- Determine the suffixes that will be stripped with the regex pattern; in this case, it is “ing$|s$|ed$|able$”.

- Set the minimum word length for stemming with the “min” parameter of “RegexpStemmer”; shorter words are returned unchanged.

- Take words for stemming with Regex and NLTK.

- Use a for loop to stem each word with “st.stem()”.

The regex stemming with NLTK can be used for text cleaning in specific cases. Instead of training a new algorithm, regex rules can be a more economical and faster option for NLTK Stemming.
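To make the rule-based idea concrete, the sketch below rebuilds the same behavior with only Python’s built-in “re” module. The “regex_stem” function and its parameter names are hypothetical and written for this illustration; it is an approximation of what the RegexpStemmer does, not NLTK’s own code.

```python
import re

def regex_stem(word, pattern=r"ing$|s$|ed$|able$", min_length=4):
    """Remove the suffix matched by `pattern` from words of at least `min_length` characters."""
    # Words shorter than the minimum length are returned unchanged
    if len(word) < min_length:
        return word
    return re.sub(pattern, "", word)

regex_stems = [regex_stem(w) for w in ["running", "runs", "runed", "workable"]]
print(regex_stems)        # ['runn', 'run', 'run', 'work']
print(regex_stem("is"))   # 'is' (too short, so the trailing "s" is kept)
```

The minimum length protects short words such as “is” from being stripped to a single letter.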

How to use LancasterStemmer with NLTK?

To use the Lancaster Stemmer with NLTK, the “LancasterStemmer” class from NLTK should be used. The instructions for using the LancasterStemmer with NLTK can be found below.

- Import the “LancasterStemmer” from the “nltk.stem”.

- Create a variable and assign “LancasterStemmer()” to it.

- Call the “stem()” method of that variable for each word of the example text.

- Take the results for examination, or for training an NLP Algorithm.

Example usage of the Lancaster Stemmer can be found below.

from nltk.stem import LancasterStemmer

lancaster=LancasterStemmer()

print("Lancaster Stemmer Examples")

print(lancaster.stem("dogs"))

print(lancaster.stem("shooting"))

print(lancaster.stem("shot"))

print(lancaster.stem("standartization"))

OUTPUT>>>

Lancaster Stemmer Examples

dog

shoot

shot

standart

The Lancaster Stemming with NLTK example and its output can be seen above. The Lancaster Stemmer (Paice-Husk stemmer) and the Porter Stemmer are both rule-based suffix strippers, but the Lancaster Stemmer is more aggressive. The Porter Stemmer is the older algorithm, published by Martin Porter in 1980, while the Lancaster Stemmer was developed later, in 1990, at Lancaster University. In some cases, the PorterStemmer and the LancasterStemmer are not perfect for the English Language, since they remove the suffixes from the words based on fixed rules in the context of Suffix Stripping. For English, the SnowballStemmer, which was also developed by Martin Porter as an improved version of the Porter algorithm, can be used as an alternative.

To learn how to use SnowballStemmer with NLTK for English Linguistics, read the next section.

How to use SnowballStemmer with NLTK?

To use the SnowballStemmer with NLTK, the “SnowballStemmer” class of NLTK is used. Follow the instructions below.

- Import “SnowballStemmer” from “nltk.stem.snowball”.

- Assign “SnowballStemmer()” with a “language” argument to a variable.

- Take the textual data and assign it to a variable such as “words”.

- Stem the textual data with the “stem()” method and collect the results in a list such as “snowball_stemmer_words”.

- Print the output of the NLTK SnowballStemmer.

Example usage of the SnowballStemmer with NLTK can be found below.

from nltk.stem import PorterStemmer

from nltk.stem.snowball import SnowballStemmer

snowball_stemmer = SnowballStemmer(language='english')

words = ['loved','table','closely','serch engine optimization','googling',

         'stemming','stemmer','lemmatization','opportunity', 'process']

# Stem every word with the SnowballStemmer

snowball_stemmer_words = []

for w in words:

    x = snowball_stemmer.stem(w)

    snowball_stemmer_words.append(x)

# Stem the same words with the PorterStemmer for comparison

ps = PorterStemmer()

porter_stemmer_words = []

for w in words:

    x = ps.stem(w)

    porter_stemmer_words.append(x)

for e1, e2 in zip(words, snowball_stemmer_words):

    print(e1+' ----> '+e2)

# ANSI escape codes for colorized terminal output

class bcolors:

    HEADER = '\033[95m'

    OKBLUE = '\033[94m'

    OKCYAN = '\033[96m'

    OKGREEN = '\033[92m'

    WARNING = '\033[93m'

    FAIL = '\033[91m'

    ENDC = '\033[0m'

    BOLD = '\033[1m'

    UNDERLINE = '\033[4m'

print(f"{bcolors.WARNING}Comparison of the PorterStemmer to the SnowballStemmer{bcolors.ENDC}")

for e1, e2 in zip(snowball_stemmer_words, porter_stemmer_words ):

    print(e1+' ----> '+e2)

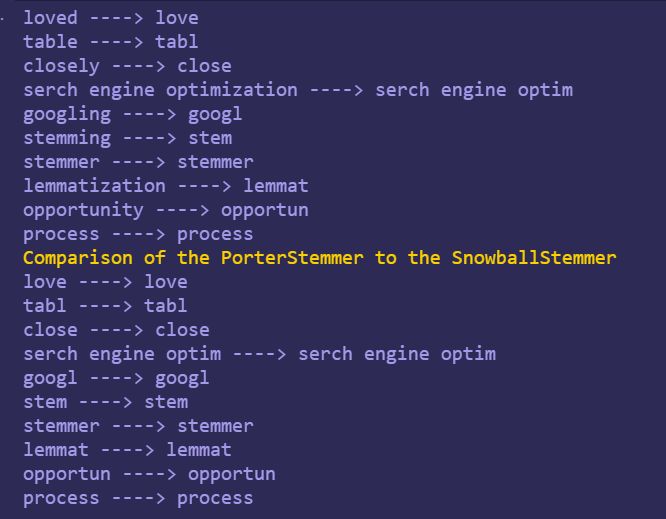

In the code block above, we used the SnowballStemmer first and then compared its results to the NLTK PorterStemmer in the last section with the help of Python’s “zip” function. The visual version of the output can be found below.

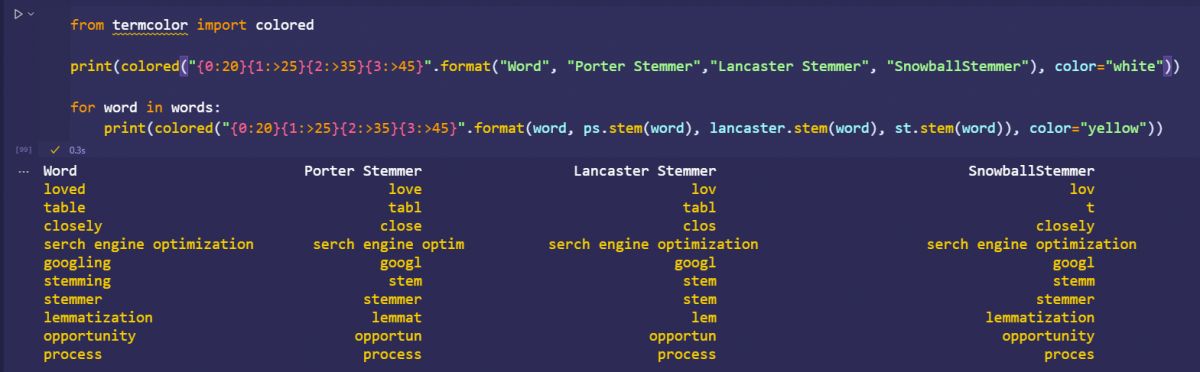

For common English words like the ones above, the PorterStemmer and the SnowballStemmer give the same results most of the time. Below, the stemming outputs of the Porter Stemmer, Lancaster Stemmer, and the SnowballStemmer can be found as colorized and printed output.

from termcolor import colored

print(colored("{0:20}{1:>25}{2:>35}{3:>45}".format("Word", "Porter Stemmer","Lancaster Stemmer", "SnowballStemmer"), color="white"))

for word in words:

    # The last column uses snowball_stemmer; "st" is the RegexpStemmer from the earlier section

    print(colored("{0:20}{1:>25}{2:>35}{3:>45}".format(word, ps.stem(word), lancaster.stem(word), snowball_stemmer.stem(word)), color="yellow"))

OUTPUT >>>

Word Porter Stemmer Lancaster Stemmer SnowballStemmer

loved love lov love

table tabl tabl tabl

closely close clos close

serch engine optimization serch engine optim serch engine optimization serch engine optim

googling googl googl googl

stemming stem stem stem

stemmer stemmer stem stemmer

lemmatization lemmat lem lemmat

opportunity opportun opportun opportun

process process process process

The output of the code block above compares the Python NLTK Stemming results of the three stemmers side by side.

Unlike the PorterStemmer, the SnowballStemmer can be used for other languages with the help of the “language” parameter.
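The “language” parameter can be tried with a short sketch, assuming NLTK is installed. The sample words are chosen only for illustration, and the non-English stems are printed rather than stated here, since they depend on each language’s rule set.

```python
from nltk.stem.snowball import SnowballStemmer

# Languages supported by the installed NLTK version
print(SnowballStemmer.languages)

# Illustrative sample words, one per language
samples = {
    "english": "running",
    "german": "häuser",
    "spanish": "corriendo",
    "french": "maisons",
}

stems_by_language = {}
for language, word in samples.items():
    stemmer = SnowballStemmer(language)
    stems_by_language[language] = stemmer.stem(word)
    print(language, ":", word, "->", stems_by_language[language])
```

Passing a language that is not in “SnowballStemmer.languages” raises an error, so checking the tuple first is a simple safeguard.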

How to Stem Sentences with NLTK?

To stem sentences instead of single words with NLTK, a custom function should be used. With the help of word_tokenize (and sent_tokenize, if the text should be split into sentences first), stemming sentences is possible. The instructions for stemming sentences with NLTK are below.

- Tokenize the text with “word_tokenize”.

- Stem the words within the tokenized words list.

- Unite the stemmed words with white space via the “join” string method.

- Print or return the joined string of stemmed words.

Below, you can find an example of the sentence stemming with NLTK.

from nltk.tokenize import word_tokenize

from nltk.stem import PorterStemmer

ps = PorterStemmer()

def sentence_stemmer(sentence):

    tokens = word_tokenize(sentence)

    stemmed_sentences = []

    for word in tokens:

        stemmed_sentences.append(ps.stem(word))

        stemmed_sentences.append(" ")

    return "".join(stemmed_sentences)

textual_data = """Suffix stripping algorithms may differ in results for a variety of reasons. One such reason is whether the algorithm constrains whether the output word must be a real word in the given language. Some approaches do not require the word to actually exist in the language lexicon (the set of all words in the language). Alternatively, some suffix stripping approaches maintain a database (a large list) of all known morphological word roots that exist as real words. These approaches check the list for the existence of the term prior to making a decision. Typically, if the term does not exist, alternate action is taken. This alternate action may involve several other criteria. The non-existence of an output term may serve to cause the algorithm to try alternate suffix stripping rules."""

sentence_stemmer(textual_data)

OUTPUT>>>

'suffix strip algorithm may differ in result for a varieti of reason . one such reason is whether the algorithm constrain whether the output word must be a real word in the given languag . some approach do not requir the word to actual exist in the languag lexicon ( the set of all word in the languag ) . altern , some suffix strip approach maintain a databas ( a larg list ) of all known morpholog word root that exist as real word . these approach check the list for the exist of the term prior to make a decis . typic , if the term doe not exist , altern action is taken . thi altern action may involv sever other criteria . the non-exist of an output term may serv to caus the algorithm to tri altern suffix strip rule . '

The example above demonstrates how to use NLTK Stemming on sentences. If you want to see the difference between the original text and the stemmed sentences, you can use “difflib” as below.

import difflib

s1 = textual_data.split(' ')

s2 = sentence_stemmer(textual_data).split(' ')

matcher = difflib.SequenceMatcher(a=s1, b=s2)

print("Matching Sequences:")

for match in matcher.get_matching_blocks():

    print("Match : {}".format(match))

    print("Matching Sequence : {}".format(s1[match.a:match.a+match.size]))

The example above outputs the matching sequences of words for the stemmed and non-stemmed versions of the same text. In this context, stemming with NLTK, or comparable text normalization with other Python libraries such as TensorFlow, Gensim, and spaCy, is important for information retrieval algorithms and search engines. Stemming can be used to find text pieces that are similar to each other for document clustering, and to omit nearly duplicated and closely similar articles from the SERP to improve the quality of the search results. Below, you can see the matching sequences between the sentences stemmed with NLTK and the original text.

Matching Sequences:

Match : Match(a=3, b=3, size=3)

Matching Sequence : ['may', 'differ', 'in']

Match : Match(a=7, b=7, size=2)

Matching Sequence : ['for', 'a']

Match : Match(a=10, b=10, size=1)

Matching Sequence : ['of']

Match : Match(a=13, b=14, size=6)

Matching Sequence : ['such', 'reason', 'is', 'whether', 'the', 'algorithm']

Match : Match(a=20, b=21, size=12)

Matching Sequence : ['whether', 'the', 'output', 'word', 'must', 'be', 'a', 'real', 'word', 'in', 'the', 'given']

Match : Match(a=35, b=37, size=2)

Matching Sequence : ['do', 'not']

Match : Match(a=38, b=40, size=3)

Matching Sequence : ['the', 'word', 'to']

Match : Match(a=42, b=44, size=3)

Matching Sequence : ['exist', 'in', 'the']

Match : Match(a=46, b=48, size=1)

Matching Sequence : ['lexicon']

Match : Match(a=48, b=51, size=3)

Matching Sequence : ['set', 'of', 'all']

Match : Match(a=52, b=55, size=2)

Matching Sequence : ['in', 'the']

Match : Match(a=56, b=62, size=2)

Matching Sequence : ['some', 'suffix']

Match : Match(a=60, b=66, size=2)

Matching Sequence : ['maintain', 'a']

Match : Match(a=66, b=74, size=3)

Matching Sequence : ['of', 'all', 'known']

Match : Match(a=70, b=78, size=1)

Matching Sequence : ['word']

Match : Match(a=72, b=80, size=4)

Matching Sequence : ['that', 'exist', 'as', 'real']

Match : Match(a=79, b=88, size=5)

Matching Sequence : ['check', 'the', 'list', 'for', 'the']

Match : Match(a=85, b=94, size=5)

Matching Sequence : ['of', 'the', 'term', 'prior', 'to']

Match : Match(a=91, b=100, size=1)

Matching Sequence : ['a']

Match : Match(a=94, b=105, size=3)

Matching Sequence : ['if', 'the', 'term']

Match : Match(a=98, b=109, size=1)

Matching Sequence : ['not']

Match : Match(a=101, b=113, size=2)

Matching Sequence : ['action', 'is']

Match : Match(a=106, b=119, size=2)

Matching Sequence : ['action', 'may']

Match : Match(a=110, b=123, size=1)

Matching Sequence : ['other']

Match : Match(a=114, b=128, size=5)

Matching Sequence : ['of', 'an', 'output', 'term', 'may']

Match : Match(a=120, b=134, size=1)

Matching Sequence : ['to']

Match : Match(a=122, b=136, size=3)

Matching Sequence : ['the', 'algorithm', 'to']

Match : Match(a=127, b=141, size=1)

Matching Sequence : ['suffix']

Match : Match(a=130, b=146, size=0)

Matching Sequence : []
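The matching blocks above can also be summarized as a single similarity score. The sketch below is a minimal illustration with two short, hand-written token lists (assumptions for this example, not output of the stemmer), using only Python’s standard “difflib” module.

```python
import difflib

# Hand-written token lists for illustration
original_tokens = ["the", "algorithms", "may", "differ"]
stemmed_tokens = ["the", "algorithm", "may", "differ"]

matcher = difflib.SequenceMatcher(a=original_tokens, b=stemmed_tokens)

# ratio() is 2 * (number of matching tokens) / (total tokens in both lists)
similarity = matcher.ratio()
print(similarity)  # 0.75

# Keep only the spans that differ between the two lists
changed = [
    (original_tokens[i1:i2], stemmed_tokens[j1:j2])
    for tag, i1, i2, j1, j2 in matcher.get_opcodes()
    if tag != "equal"
]
print(changed)  # [(['algorithms'], ['algorithm'])]
```

A ratio close to 1.0 means that stemming changed few tokens, while the “changed” list shows exactly which tokens were rewritten.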

Stemming is important for Information Retrieval environments and for text understanding.

How to Stem Arabic with NLTK?

To stem the Arabic Language with NLTK, the “ARLSTem” class from the “nltk.stem.arlstem” module is used. The example of Arabic language stemming can be found below.

from nltk.stem.arlstem import ARLSTem

stemmer = ARLSTem()

stemmer.stem('مكتبة لمعالجة الكلمات العربية وتجذيعها')

OUTPUT >>>

'مكتبة لمعالجة الكلمات العربية وتجذيع'

NLTK has other stemming options and usage examples that can be beneficial for text understanding and cleaning in Natural Language Processing (NLP). NLTK Stemming and NLTK Lemmatization, along with NLTK Tokenization, are connected to each other for providing a better NLP process.
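In the Arabic example above, the whole sentence is passed to “stem()” as one string, and only the end of the string changes. Stemmers in NLTK are designed to work on one token at a time, so a per-word variant can be sketched as below. Splitting on white space is an assumption made for this illustration, and the stems are printed rather than stated here.

```python
from nltk.stem.arlstem import ARLSTem

arabic_stemmer = ARLSTem()
sentence = "مكتبة لمعالجة الكلمات العربية وتجذيعها"

# Whitespace splitting is a simplification chosen for this sketch
arabic_tokens = sentence.split()
arabic_stems = [arabic_stemmer.stem(token) for token in arabic_tokens]

for token, stem in zip(arabic_tokens, arabic_stems):
    print(token, "->", stem)
```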

What is the Relation between NLTK Stemming and NLTK Tokenization?

NLTK Stemming and NLTK Tokenization are related to each other because stemmers work on single tokens. For cleaning a text, or for performing a statistical approach to text understanding, the text is tokenized first and the tokens are then stemmed. Tokenization and stemming can be used separately for different purposes, or in sequence. To understand a word’s context within a sentence, or to count how often a root word occurs within a document, NLTK Tokenization can be used with NLTK Stemming.
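Counting root words within a document can be sketched as follows, assuming NLTK is installed. A simple regex tokenizer stands in for “word_tokenize” here so that the sketch runs without downloading tokenizer data; the sample document and variable names are illustrative.

```python
import re
from collections import Counter

from nltk.stem import PorterStemmer

stemmer = PorterStemmer()
document = "She waits. He waited. They are waiting for the stemmer to stem words."

# Regex tokenization is an assumption of this sketch, not NLTK's tokenizer
doc_tokens = re.findall(r"[a-z]+", document.lower())

# Count how often each stem occurs in the document
root_counts = Counter(stemmer.stem(token) for token in doc_tokens)

print(root_counts["wait"])  # 3
print(root_counts.most_common(3))
```

Without stemming, “waits”, “waited”, and “waiting” would be counted as three different words with one occurrence each.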

What is the Relation Between NLTK Stemming and NLTK Lemmatization?

NLTK Lemmatization is different from, but related to, NLTK Stemming. The difference is that NLTK Stemming strips suffixes with fixed rules, so the result may not be a real word, while NLTK Lemmatization maps the inflected forms of a word to its dictionary form (lemma). Unlike stemming, Lemmatization uses a vocabulary and the part of speech of the word to find the lemma. The NLTK Lemmatization can be used with NLTK Stemming and NLTK Tokenization for text understanding, text cleaning, and processing. Both the NLTK Lemmatization and the NLTK Stemming can be used for document clustering and sentiment analysis to improve the accuracy of the algorithms.

What is the Relation Between NLTK Stemming and Named Entity Recognition?

The relation between NLTK Stemming and Named Entity Recognition is that NLTK Stemming can be used for stripping the suffixes from words to better recognize entities within sentences, paragraphs, or big corpora. Named Entity Recognition is the process of recognizing the named entities within a text, such as a place, a person, or a thing of any type. NLP Algorithms can understand a word’s tag and importance for a sentence better with stemming and lemmatization. Understanding the context of a sentence or a word is useful for a better Named Entity Recognition (NER).

What is the Definition of Stemming for NLP?

Stemming is the process of reducing inflected or derived words to their root form by stripping the suffixes and prefixes that are attached to them. Stemming is an important concept in the field of Natural Language Understanding (NLU) and Natural Language Processing (NLP).

Information retrieval and extraction involve stemming as a part of linguistic studies in morphology and artificial intelligence (AI). The use of stemming and artificial intelligence allows data from vast sources like big data or the Web to be interpreted meaningfully since the best results may require searching for additional forms of a word related to a topic. In addition to searching on the Internet, stemming is also used in queries. To learn more about Stemming, read the related guide.

Last Thoughts on NLTK Stemming and Holistic SEO

Stemming and Holistic SEO are related to each other in the context of semantics. Semantic SEO contains NLP technologies and NLP understanding. If an SEO understands NLTK and stemming, it will be helpful for understanding how a search engine can infer the content, extract the information, and retrieve the meaning within the words, or the context within a web page. NLTK Stemming can be used for understanding the threshold for being perceived as duplicate content by the search engine, or how a search engine can use stemmed words to infer a sentence or replace some of the suffixes to extract more information. Semantic SEO and Semantic Search understanding are improved by NLTK technologies and methodologies such as NLTK Stemming. Using NLTK Stemming on the content of a website will help a Holistic SEO understand the search engine algorithms and the ranking perspective for the content in a better way.

The NLTK Stemming article will be updated in light of the new content.
