The entity, Attribute, Value (EAV) Architecture for SEO involves different types of entities, their attributes, and values within the data model to encode a space-efficient knowledge base. Knowledge representation includes different types of methodologies such as semantic networks, frames, and production rules. The entity, Attribute, Value (EAV) model is to provide a better knowledge representation for different topics and applications. EAV is called an object-attribute-value model, a vertical database, and lastly, an “open schema”.

Choosing the right attributes with accurate values from text involves different types of attribute prioritization to provide better contextual relevance, and to specify for the search engine. Deciding the right attributes, or prominent-popular-relevant attributes, for question generation and answer pairing helps a search engine process the text and meaning that can come from content.

In this SEO Case Study, attribute types, and their prominence for providing a better semantic content network, along with context flow, are explained via a real-world SEO success that comes from a training and “document template” creation based on the “query-intent” templates.

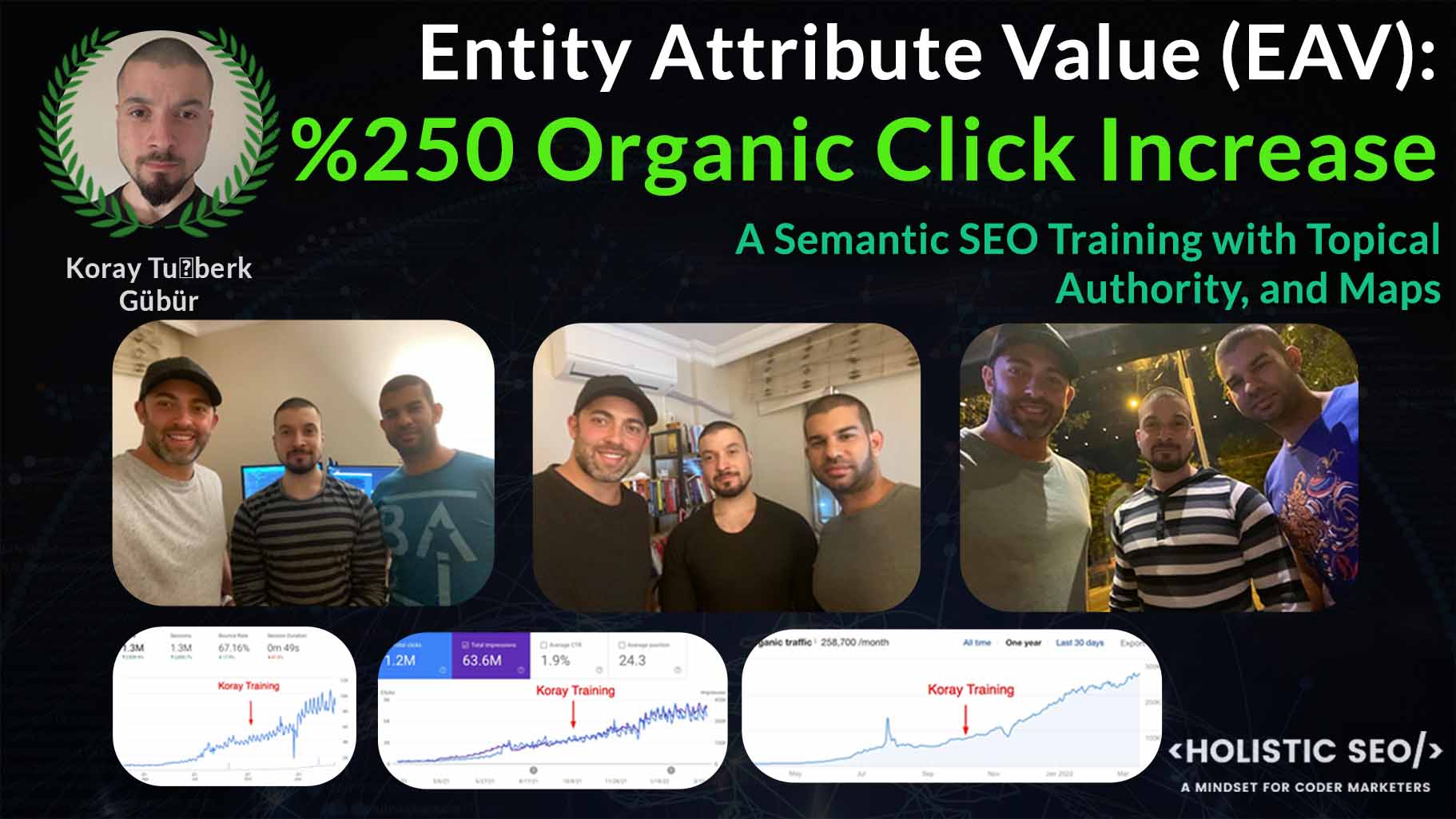

Below, you can see the result and where the training actually starts.

The Google Search Console results and the “acceleration” can be seen.

The Google Analytics graphic is below.

In six months, over 250% of organic traffic has increased. The daily 4000 clicks increased to over 10000. It is important because the training increased the website’s overall traffic, rankability, and information value despite the fact that the website already has a decent value for the search engine and the existing industry. Due to respect, I won’t explain the industry name or the client name, but a website that dominates its own niche increases its traffic by more than 250% in a half year. It happens if a website operator is able to communicate with the search engine, and feeds the search engine algorithms with the data that tells the website to decrease the “cost of retrieval,” increase the “contextual relevance,” and use better “semantic content networks” to provide “topical authority.”

Every website has a better “rankability” possibility in the “comparative ranking algorithms.” The initial and re-ranking algorithms of Google can be analyzed, and provide further potential for any website. Below, you will find pictures of Koray Tuğberk GÜBÜR Dean Scaduto and Kevin Farrugia.

The Query-Document-Intent templates are processed heavily already; thus, I won’t focus on them further. And, at the moment, I am writing a book about how search engines generate questions from queries and how they are able to choose different answers for these questions based on certain selection criteria. So, you should know that I’ve made an algorithmic authorship template, a topical map, and a semantic content network design for this education. I didn’t mention the “algorithmic authorship template” before. However, every verb, noun, phrase pattern, answer, answer type, format, or any kind of list, table, information graph, and content are designed before—literally, letter by letter, sentence by sentence. And, over an algorithmic authorship template, the main author, Kevin Farrugia has been trained, and how the fundamental template should be implemented by others is explained and practiced.

In 7 days, and 20+ hours, a semantic content network, topical map, and algorithmic authorship template have been created, and the writing sessions, search engines’ preferences, text processing methods, query-document clustering, and matching methodologies are explained.

Before continuing further, I must thank Dean Scaduto for his excellent friendship, and honesty. In the “Importance of Definitions for SEO” SEO Case Study, I have included these results and Dean Scaduto and Kevin Farrugia with special thanks, and I have hidden their data, website name, or other things (topical map, content item briefs, and more) while sharing the K9Web sessions, semantic content network design, and even the algorithmic authorship templates, including the VSSMonitoring.com as well.

What is Algorithmic Authorship?

Algorithmic authorship involves writing methods, rules, sentence structures, paragraph structures, bridge words, discourse integration configuration, and connections between different questions and their subordinate texts. The goal of algorithmic authorship is to provide a strict writing style for certain stylometry, speed up the production of content without lowering its quality, and increase the value of content.

Algorithmic authorship is used in programming for Natural Language Generation, but most of the time, the AI uses certain attention windows for certain word distributions. Distributional Semantics is able to find all the possible word sequences to determine the real author. The article on Google Author Rank is the best comprehensive research to explain how a search engine can classify and evaluate the main content creator. Thus, in this context, the algorithmic authorship provides an authentic main content creator while helping the semantic content network have a better evaluation and association process with the help of an easy-to-parse text structure. Visuals and other types of content types are provided within algorithmic authorship as well.

Algorithmic Authorship is used to determine certain types of content creation flows, and rule sets to help the authors while training them.

Google Author Rank Research demonstrates the importance of the Expression of Author Identities, and Author Vectors for Author Authority. Algorithmic Authorship helps SEOs to create authoritative and accurate quality information with brand signals and unique expertise proof in an NLP-proven way.

What is an Algorithmic Authorship Template?

An algorithmic authorship template is the process of the creation of an article template based on algorithmic writing rule sets. An article can be written based on certain types of attribute selections, and attribute hierarchies. Certain types of queries require certain types of phrases to be used, or certain types of sentence structures to be used. The algorithmic authorship template involves an article template based on a content item brief template. A content item brief involves all the context vectors, structure, hierarchy, and connections for covering a topic based on Semantic SEO. A content item brief template involves other content item briefs’ overall fundamental structure and rule sets. An algorithmic authorship template is created based on the content item brief template. An algorithmic authorship template is an article with all the possible sentences, tables, lists, paragraphs, or even table columns, row names, table heads, table footers, table outros, list definitions, exact matching answers, direct answers, comparison superlative usage, and more. The entire algorithmic authorship template involves all the headings and other types of SEO-related article skeletons.

An algorithmic authorship template is integrated into the next content item brief that has been generated based on the content item template. An algorithmic authorship template doesn’t have to include all the actual values or the sentence structures, but it has to involve all the variables and complete sentence suggestions. The rule set of the algorithmic authorship template has to include modifications, and further improvements based on the other entities and their unique, and rare attributes.

To create a successful algorithmic authorship template, the semantic content network, and the central entity along with the main entities should be processed properly and carefully. The concepts of central entities, main entities, and attribute dimensions are explained in the Semantic SEO Course initials. But, here we will focus on mainly the attributes. An algorithmic authorship template is a must to understand how to configure and balance the relevance of certain things.

Can two different Algorithmic Authorship Templates be configured to rank accordingly?

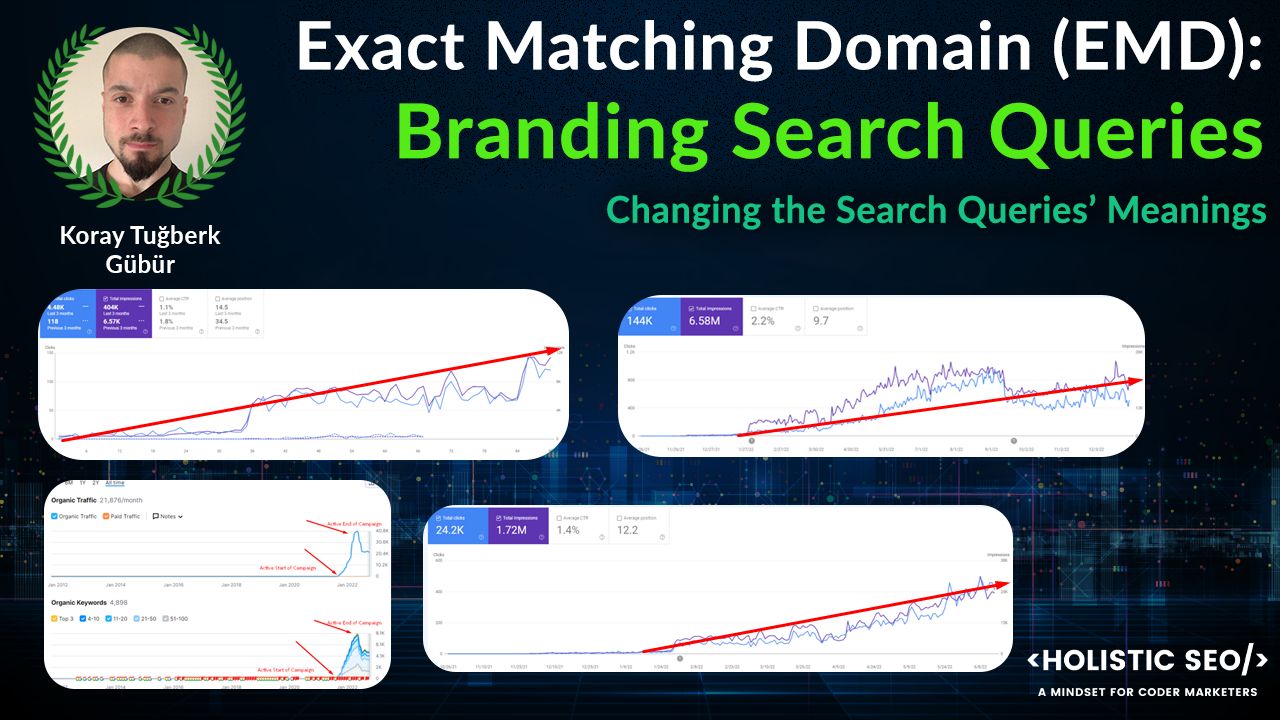

Yes, algorithmic authorship templates can be configured and arranged based on certain criteria. In the Cost-of-retrieval SEO Case Study, I have demonstrated a sample for “Source Shadowing”. Source Shadowing is the process of one source shadowing another one in terms of rankings. Both websites react to the algorithmic updates in the same direction, but the representative source always benefits it further, and the second, or third (shadowed sources) always follow the representative one by supporting it further. In the Exact Match Domain SEO Case Study, the “Comparison for Exact Match Domains” section explains this. In Koray Tuğberk GÜBÜR’s YouTube Channel, the “Can a Search Engine make a mistake” video, explains the source shadowing with real-world and open Google Search Console data. In the Cost-of-retrieval and, How does Google ranks SEO Case Studies, the “source shadowing” is explained further.

The two semantically configured algorithmic authorship templates can be configured differently to be ranked differently, too. In other words, a good semantic SEO will be able to determine which article structure will rank how and for which queries and how the authority gained from these queries will be distributed. It is the ability to specify a seed source criterion and a website representation vector. Website representation vectors are explained further in the Google Author Rank and Topical Authority Long SEO Case Study articles. An algorithmic authorship template determines the contextual consolidation, and depth based on the specifically chosen entity-attribute-value model, and semantic network structure.

The Contextual Search SEO Case Study explains this process further with the help of specifically created questions, and answers, and how they increase the query count, and the rankings overall. The two different algorithmic authorship templates can be configured, and modified to balance the “authenticity” of the semantic network and provide uniqueness for the source’s information content. Changing the sentence structures, decreasing the contextual coverage, and removing certain verbs, and phrase patterns can change the overall success and ranking process of the content networks. The verbs and phrase patterns can be understood further in the article “Understanding the Verbs from Life,” which focuses on the semantic role labels, FrameNet, and word themes.

The article “semantic content networks and entity relationships” explains how to choose entity connections properly, and how to create possible semantic networks over a knowledge base. As a result, an algorithmic authorship template can be configured to support various parts of the query network from the perspective of the knowledge base.

Note: Dean Scaduto wanted to create a second website by using a similar semantic network. He told me that he wants the second website to rank lower than the “mothership website,” and I configured the semantic content network, and the topical map, along with the algorithmic authorship template, based on this request. I can share the results in the future if he lets me.

The configuration process of semantic content networks and the algorithmic authorship template are relevant to the “corroboration of answers from the open web.” It will be processed in the book that is coming, and also in the Semantic SEO Course, where it has already been processed in every detail.

What is Content Configuration?

Content configuration is relevant to the entity-attribute-value databases and how they are ranked, as well as how these rankings can be consolidated further. Content configuration involves the existing content items being configured further for better rankings, Information Retrieval Scores, and Query Responsiveness (Information Extraction). Content Configuration involves two different steps, one is “content revision based on semantic networks”, and the second is “re-ranking the documents” and repeating the process. Content configuration can focus on the vocabulary gaps between the competing documents, query-document vocabulary differences, broken context flow, non-accurate information, broken sentence structures, and non-factual language usage. Content configuration and algorithmic authorship are two different processes that complete each other for further semantic SEO processes, and improvements.

Content configuration is relevant to the Entity, Attribute, Value Model further because search engines structure their indices based on the entities, this is called entity indexing. Furthermore, missing attributes and incorrect attribute values are stored within multi-value entity-attribute pairs under different fact IDs and entity IDs to distinguish between a factoid and a fact. The multiple value attributes are relevant to the attribute types, such as composite, or derived attribute types.

What is the Entity, Attribute, Value (EAV) Architecture

The entity, Attribute, Value Model involves a space-efficient knowledge representation model. It organizes the data in the form of an entity, a specific attribute, and a changing value that changes. It helps to cluster and store similar entity attributes together for a better efficient understanding and serving of the query owner.

An entity-attribute-value model example is given below.

- A City, Population, X

- C City, Population, Y

- Z City, Population, V-D

The entity-attribute-value model can be enriched with extra dimensions like dates, ranges, specific demographics, reasons for the population amount, or even change percentage for the last year. The entity, attribute, and value model is used in hospitals, courts, justice systems, educational systems, machine learning, and any kind of database, including search engine systems. Thus, it is a common technology in everyday life.

The presentation of “Semantic Search Engines, and Query Processing” explains the Browsable Fact Repository from Andrew Houge similarly. It explains how a search engine can generate and use a browseable fact repository for anything that exists. Later, Andrew Houge’s inventions led us to the Knowledge Graph, and Base of Google in 2012. The Entity, attribute, and value model data structure is described below.

- The Entity: A thing in the real world with an independent existence from any kind of need. An entity is self-dependent, it can be physical, or conceptual. Entities might have specific names with specific attributes, and values.

- The Attribute: Composite and Simple Attributes are two different types of attributes. Composite Attributes can be parsed as two different types. For example, a composite attribute can be size, size can have height, width, and depth. Simple attributes are the parts of the composite attributes, such as height, they can’t be parsed or chunked further, it is a simple-defined attribute. But, an entity can have multiple same and simple attributes, for example, height can be attributed to two different other types of the same entity, such as “wheel height”, and “window height”, these two can belong to a car at the same time. These are called indirect attributes. A direct attribute belongs to a specific entity, while an indirect attribute belongs to a specific part of the entity. Understanding lexical relations, Hypernyms, or Hyponyms are necessary due to this. There are more attribute types, such as single-valued attributes and multivalued attributes. Single-valued attributes have only a single value, such as “age”. But, multivalued attributes can have multiple values, such as “college degree”, or “known languages”. There are also derived attributes, and stored attributes. For example, derived attributes can come from another attribute. If something is from “iron”, it should be “hard”, if someone has an “age”, there should be a “birthdate”. Stored attributes are stored values for specific attributes. Complex Attributes might have multivalued and composite components since they have multiple sub-attribute parts.

- The Value: The values are the content of the specific attribute from the particular entity. The value represents the meaning of the text. Understanding the values means understanding the real meaning of the content.

Besides these, we can focus on entity types, just as we focused on attribute types, but entity types such as entity sets, place, and song entities can be processed in another meeting.

Key Attributes for Entities

Key Attributes are different from composite, simple, multivalued, single-valued, direct, indirect, derived, and stored attributes. Key attributes represent the specific attributes that can be taken from an entity set to differentiate an entity from another. If the topic is city living conditions, the cities can be differentiated from each other based on their overall economic attributes rather than the attributes of their historical beauties in the city. Thus, key attributes are used to specify the context and differentiate the subsections from each other.

Value Set for Attributes

The value set represents the specific values, or value types, that can be assigned to an attribute. For example, for the attribute height, the value has to be a type of length, and when it comes to temperature, it has to be a type of Celsius or a different alternative to Celsius such as Fahrenheit. Value Set of Attributes helps a search engine for performing the neural matching processes.

Neural Matching and EAV Model

Neural Matching and the EAV Model are related to each other to match the entity type from the query, and the entity type from the answer. Google didn’t reveal what actually matches from query to document in terms of the “types of the words”. For example, if you use a word as a “verb” in the query, the search engine finds it as a noun, or uses different word-sense disambiguation, and stemming versions to relate to each other. But, this is not a sample for neural matching, it is simply Information Retrieval with lexical semantics. When it comes to neural matching, it is not matching query and document vocabulary to each other, it is matching the entity, attribute and value triple from the query to the documents. Most times, people use non-structured queries with implicit questions or incomplete phrases.

Thus, it is a must to provide a better neural matching and mind-reading process for understanding the users from the search engine’s perspective. In this context, it is necessary, and helpful to guess the specific questions, possible other synthetic queries, and query refinements for possible entity connections, or attribute types, along with the value sets.

For a query like “X degree in Africa”, the search engine might determine specific question formats. Question generation will be shared in the book that I am writing with every detail. But, here, we can tell that search engines generate some questions such as “X degree in Africa for hunting”, or “for cooking”, “for traveling”, “for walking”, “the places in Africa for X”, and some other things, as well.

Examples of Entity, Attribute, Value Models

There are different entity, attribute, and value model databases. For example, a school can use the EAV model for student and note records and a hospital can use it for patients, and their health records. But, EAV Models are capable of more things, since they contain a diverse and highly complicated substructure. It is possible to read an EAV model as JSON, which makes it more useful for other types of big data processors such as search engines. The EAV Model can have substructures, for example, a plane can have wings, wings can have engines, and engines can have different types of sub-parts. All these parts have different types of attributes. And, all these attributes represent different types of value sets. Thus, EAV Models can have complex structures and traversal retrievals.

Traversal retrievals are for retrieving the data of an entity based on connections from other entities. Imagine that, a search engine tries to take information for a specific context term that contains two different entities. In this case, thousands of different queries, and tens of different attributes from different contexts are needed. Thus, different types of semantic networks or SERP samples can be used. Or, even SERPs can represent different knowledge graph designs, and the semantic networks can be updated for all SERP instances. With that said, the specific context term entities can be taken and used based on the connected EAV Class Relationships. An EAV model for the cars and an EAV model for the planes can have engines, and some other parts mutually, this connectivity can bring them together for traversal retrievals.

In the Frames section of the Understanding the Verbs of Life SEO Case Study, we have processed the “subcategorization frames”. These frames help a search engine categorize situations and connected concepts. Similarly, in EAV Models, an entity can be called via a mutual attribute from another EAV Model. Thus, EAV Models help many institutions with quick query performance and result in an interaction.

In the context of Semantic SEO, an EAV Model Sample can come from queries or documents. Search engines can apply the same principles to build their own knowledge bases. By adding semantic capabilities to their EAV Models, a search engine can alternate and connect the EAV Models to each other. Based on the detailed “attribute types,” these EAV Models can be used for shaping the query networks.

A Brief History of the EAV Model

The EAV Model has been invented by “association lists.” Or, attribute-value pairs. The Language “LISP” has been mentioned for the Creation of Semantic Content Networks with an Example project. The LISP is an old programming language for querying attribute-value pairs. EAV and UIMA, Unstructured Information Architecture, are connected to each other. UIMA is launched by Apache Foundation to support the NLP. The EAV was used for the first time in the 1970s to support hospital systems. Clement MacDonald, William Stead, and Ed Hammonds started a system called The Medical Record. They used an attribute-value pair list with the names of the patients. In this case, the patients were the entities, timestamps were the key attribute for differentiating them from each other, and medical conditions were the attribute to be checked for entity evaluation. The full name of the patient, or the patient ID in the list’s raw version can signal the key attribute, but it doesn’t have a proper context like the date, for pivoting.

Identifying a source document from which one or more facts of the entity represented by the object were derived,

Identifying a plurality of linking documents that link to the source document through hyperlinks, each hyperlink having an anchor text,

Processing the anchor texts in the plurality of linking documents to generate a collection of synonym candidates for the entity represented by the object, and

Selecting a synonymous name for the entity represented by the object from the collection of synonym candidates. — Learning synonymous object names from anchor texts

IBM joined the race of the EAV Model, and they started to use it for database management, and their own search systems. Object Orientation and EAV are used together to support the usability of the databases for better management.

EAV Model is seen as relevant to the search, after e-commerce websites, or any kind of big online store. Because they have specific resources and products for specific purposes. EAV-like knowledge representation models like knowledge bases become a central column of search engines, like Google.

EAV helps to understand how an entity group can be clustered and listed by only their attributes. And how attribute and value pair types can help distinguish them from one another. Thus, for algorithmic authorship template, and content item brief template creation, they enlighten the methodology of semantic SEO.

Metadata and Logical Schema for EAV

EAV Model is called Open Schema from time to time. And, Unstructured Information Management Structure is prominent to provide a better understanding of content based on text processing. Unstructured Information represents any kind of data without a structure, which is a text with lots of other types of hyperstructures without proper connections. Metadata represents the value of the specific entity-attribute pair group to help the query performer. While there are multiple EAV Models that are close to each other, it is not easy to understand which one is for which one. The metadata of the EAV Models represents their definitions and meanings. Usually, query performers use a physical and logical structure for pivoting the data, but it makes the process a little slower and harder.

EAV models can use RDF or an RDF-like schema to express how they differ from others. The first EAV Model TMRs did not function properly because they only had one metadata for the main EAV Model and none for the sub-structures. But later, metadata started to be used as an annotation and documentation system.

For each document in the selected set, a name, and one or more facts are identified by applying the title pattern and the contextual pattern to the document. Objects are identified or created based on the identified names and associated with the identified facts… Attributes and Values Facts associated with specific entities may include specific fact types of values associated with them. For George Washington, we have a “Date of Birth” attribute, and a value of “Feb. 22, 1732…” — Learning objects and facts from documents

While creating the metadata for EAV Models, there were other difficult things to handle. One of them was that it requires consistent metadata for the EAV architecture. At first, the metadata is used without a good pattern, which makes the process more difficult. From time to time, the metadata is not updated, despite the fact that the data changes. Furthermore, the metadata quality is prominent in order to support all EAV Model users.

Why is the metadata of EAV important for SEO? Because the metadata of the EAV Model describes the data and its use for other EAV Models. In this context, a Knowledge Panel from the SERP can represent many metadata sections for the specific entity type and set. And, it helps to understand how a knowledge base creator should think, behave and connect these things to each other. For example, in the Brand SERP Optimization, and the Knowledge Panel Management guides, I explain that search engines do not choose every kind of “subtitle” directly. They try to alternate phrases or filter them for presentation as metadata for the specific entity.

And, metadata of an entity can be seen as a “sub-schema” for describing the specific entity. Thus, metadata browsing is a quick way of checking the entity-attribute-value sets and relationships for understanding their overall structure.

The next section explains the metadata types and will describe the metadata types. While reading them, compare these metadata types to the entity descriptions and definitions on Google’s SERP and Knowledge Base.

Metadata Types

Metadata types are the types for describing an EAV model with metadata. They contain different purposes such as validation, presentation, grouping, and dependency.

- Validation Metadata: Limits the possible values for the attributes by auditing the ranges. The Validation Metadata is important for Information Extraction, and Corroboration of Web Answers as it is stated in the Lexical Semantics SEO and Factoids and News Ranking Presentations of Koray Tugberk GUBUR, because it helps for determining a range of truth for the value.

- Presentation Metadata: Helps to explain how the metadata for the specific attribute of the entity should be presented on the UI. This section is relevant to Information Retrieval in terms of Google’s own serving of the metadata of the constructed snippets. The UI of the Search Engine Result Pages represents the metadata serving styles. And, Google Keys or the keys that are used by Google to order, and structure the SERP snippets are an example of these. The Google Keys will be explained in the future in more detail.

- Grouping Metadata: Attribute types and relations are represented with the groups of the metadata. The size-related attributes and taste-related attributes are grouped differently and connected to different queries, and contexts.

- Dependency Metadata: The dependency metadata determines the ranges of the truths based on other attributes. For example, a maximum speed of a land animal can change according to the average speed, and gender attributes’ values. Thus, the maximum speed attribute is dependent on the other two attributes.

- Complex Computation Validation Metadata: The complex computation validation metadata turns the existing attribute values into a different representation, such as percentages, or proportions. Thus, a specific metadata content of an attribute has to be evaluated over 100, since the percentages have to be completed to 100. This helps information creators to make the textual and visual representations richer, and more unique with different expressions of the same value such as from meters to feet, or Euro to USD.

EAV and JSON Usage

EAV and JSON, along with XML, are connected to each other. Because JSON and XML are different types of structured data for representing the information in a relational database. However, due to some fundamental differences, none of them are strong enough to be compared to EAV. EAV databases are able to store, retrieve, and present complex entities with more details, while JSON and XML are limited to the specific instance of themselves. For example, the EAV with Classes and Relationships involves complex sub-instances that open a new attribute tree for the existing superior entity, while these attributes are inherited, and transferred with a logical tree. JSON has another benefit compared to the EAV, which is speed. The EAV is hard to process, and store, while JSON is way faster to retrieve, construct, or modify. Thus, EAV and JSON are united with Open Schema by helping EAV databases to involve JSON structures in the rows. This brings us to the concept of Row Modelling.

Row Modelling and EAV

Row Modelling is a data modeling technique to structure the values for certain types of entities. Row modeling and data structuring are reflexive behaviors for intelligent and salient beings such as humans. Humans perceive entities and assign, and associate certain attributes and values for things. Row modeling is a semi-automated way of structuring entities as humans do, but it has some limitations.

Row modeling is associated with a single angle and entity for a specific context. In other words, multiple rows and models are needed to structure the data for a specific entity. But humans connect different types of contexts, entities, attributes, and angles to each other. Thus, multi-layered and contextually merged rows are needed to create the Entity, Attribute, and Value structures.

EAV and UIMA

Unstructured Information Management Architecture is connected with the Open Schema, Vertical Database, and Object, Attribute, Value (Entity, Attribute, Value) models because it provides named entity recognition, and text segment context resolution within Natural Language Processing. UIMA was founded by the Apache Software Foundation, and it is used by IBM Research’s Watson, the Clinical Text Analysis and Knowledge Extraction System, and the Ubiquitous Knowledge Processing Lab. The Organization for the Advancement of Structured Information Standards (OASIS) standard for content analysis involves the UIMA, because it is the first organized content analytics and NLP technology for search technologies in the context of unstructured information analysis. And, UIMA uses a non-database EAV model for knowledge representation of unstructured information, to give it structure.

In other words, the first OASIS standard for structured information extraction from unstructured textual data uses the EAV for context, entity, and meaning resolution via triples, and object-attribute pairs in the forms of association lists. Thus, E-A-V is still an important concept for Entity SEO and Semantic SEO. E-A-V (Entity, Attribute, Value) model helps for giving meaning to the Triples, and re-structure the web search engine indexes from strings, and phrase postings to entities, context terms, and triples.

Attribute Types and Dimensions for Semantic Content Network Designs

Semantic Content Networks are defined and found by Koray Tugberk GUBUR to explain the power of semantics, patterns, and microsemantics along with senential NLP to rank higher on web search engines such as Google by providing higher relevance, and better query responsiveness. The concepts such as Topical Authority, Topical Maps, Cost-of-retrieval, Information Responsiveness with Ranking State, Initial Ranking, and Re-ranking Analysis are found and used to complete the working principles of Semantic Content Networks.

With the help of Artificial Intelligence (AI) and NLP (Natural Language Processing), NLG (Natural Language Generation), and NLU (Natural Language Understanding) technologies, the microsemantics, or minor differences and changes in the content networks, create huge ranking changes. Google’s perception is connected to the factoids in topically authoritative sources, and when the authoritative sources start to change based on microsemantics, the entire knowledge base of Google changes for the entities, attributes, values, and their connected triples. Thus, attribute types, and their dimensions are prominent in semantic content networks.

The Semantic Content Networks use the attribute types and dimensions listed below.

- Root

- Rare

- Unique

Definitions of those attributes are expanded in the semantic SEO Course.

Attributes and EAV Connections

The attributes for Semantic Content Networks and the E-A-V data structure align with each other because every attribute that is used for a specific entity from a specific set of classes represents the mutual aspects between the things in a certain context. The attributes signal the context of the specific content item, and it helps search engines to generate answer terms, and match the context terms. Thus, using the E-A-V database structure while designing an article helps an SEO to have a better understanding of search engines, and communicate with the search engine information extraction patterns and conditions better. The semantic content networks’ attributes, context segments inside the content briefs, and macro-micro context connections are arranged based on attribute prioritization.

The design from Google above shows a clear indication of “extraction patterns” and “target classes” for the specific entities, all these thought streams, and their patterns are matched with the neural nets of humans that create the search behaviors. Thus, a proper topical map and the semantic content network have to evolve with the search behaviors, but search behaviors are divided into two segments possible search behaviors and related search behaviors. Every search behavior is connected to a real-world search activity, too. Real-world search activity, possible search activity, and related search activities are connected with each other with the help of entity-oriented search understanding. As one more note, an attribute that too most prominent inside the queries can force a search engine to create an index series based on the attribute concept that signals the conditions, classes, or things. For example, the population might be an attribute for the cities, but the macro context might be “population problems”, thus the cities would be inside the side and sub-contexts while “population” related attributes and population as attributes for cities would create another contextual domain. Thus, the E-A-V model as a knowledge representation methodology helps in understanding the semantics of SEO, and search along with a “structured and disciplined mindset” for understanding the internet of things.

Most of those concepts are explained and deepened further in the semantic SEO Course.

Last Thoughts on Entity, Attribute, Value Model, and Attribute Filtering for SEO

Attribute filtering is a concept that was discovered by Koray Tugberk GUBUR to help future SEOs understand which attributes to process first and which to skip for a specific article. Rather than stuffing every entity, and attributing it to a specific article, the SEOs should focus on intelligent and aware methodologies for contextual consolidation and topicality signals, along with information richness, and density.

Before finishing this article, to show the prominence of attributes and patterns that come from them, Google Base is explained.

Most SEOs place too much emphasis on entities while ignoring attributes. But, I am thankful, at least they understood the value of entities after 20 years of preaching by search scholars such as Bill Slawski. The attributes are more important than entities to classify the context and cluster the documents with certain types of queries from certain search behavior patterns. Thus, Google launched a project in the past, called Google Base. Google Base helps users submit any kind of XML file or product with certain types of attributes. Google launched Biperbedia which is a non-announced project to collect and gather billions of attributes. Biperbedia comes from the Freebase Era to understand the semantic web and search. Thus, Google Base is a good example of collecting attributes with the help of the users. Google performed the same approach from 2005–2011 for abbreviations, too. In the E-A-V model, we explained why the synonyms for entities are prominent and how anchor tags help in extracting synonyms for entity recognition. Similarly, Google launched a project on Twitter before by telling people to share the abbreviations for brands and organizations, concepts, and situations so that Google could recognize these synonym sets better.

The purpose and definition of the Google Base are below to define the prominence of attributes, and their first methodology of collecting attributes for products, product search, and overall web search verticals. Google base is turned into Google Merchant, and it started to take the attributes from the e-commerce stores from their product feeds in the form of XML files. And, the same approach is followed for Google Questions Hub, Google collected questions for certain entities, and attributes, and helped users to submit questions and documents. Now, Google Question Hubs are integrated into the Google Search Console as Google Base is integrated into the Google Merchant Center, and Google Merchant Center is integrated back into the Google Search Console. The methodology of Google for constructing the web stays the same. Scraping the web, asking users, and taking submissions from users create the networks of attributes that help Search Engines and SEOs to use E-A-V, and Object-Attribute-Value along with Triple (Subject, Predicate, Object) data structures. Google’s journey for semantic search and attribute collection along with the Biperbedia will be processed further in the future.

Google Base was a database that allowed anyone to add information in formats such as text, images, and structured information such as XML, PDF, Excel, RTF, and WordPerfect. Google Merchant Center has been downgraded to its current version as of September 2010.

“When Google determines that user-added content is relevant, it displays it on its shopping search engine, Google Maps, or even the web search. For example, a recipe’s ingredients or a stock photo’s camera model could be labeled based on the content. There was a lot of interest in the service before it was made available to the public because information about it spread before its release. An official statement was subsequently published on Google’s blog:

We’ve been testing a new product and speculating about our plans. Here’s what’s really happening. Our team is testing a new way for content owners to send their content to Google, which we hope will work well with Google Sitemaps and our web crawl. “We think it’s an exciting product, and we’ll let you know when more information becomes available.”

Uploading files to Google Base servers can be done via your computer, the web, various FTP methods, or API coding. “A number of performance metrics, including the number of downloads, were available online.”

- Sliding Window - August 12, 2024

- B2P Marketing: How it Works, Benefits, and Strategies - April 26, 2024

- SEO for Casino Websites: A SEO Case Study for the Bet and Gamble Industry - February 5, 2024

Thank you for your hard work. As always, a very detailed and deeply structured text.

Tell me what material to read about the processing of the HTML code structure by Google robots. Which HTML code structure is more friendly to Google?

Koray,

This is great information! I’ve been reading and watching your videos and the last few are getting closer to topics and information that people can grasp and starting taking action to implement on their websites.

Is there a way to automate the attributes and their ranking for entities? Or is this calculated based on entities/attributes of existing, ranking webpages?

Also, is it necessary to create a webpage for each entity/attribute? And then that links up to the main entity webpage, which would have a heading for each attribute in it? I think I can see how the Topical Map can be constructed using these attributes.

Best Regards,

Lance

Great article, filled with information. Thank you, Koray!

Thank you.

Great information Koray. I have tried to create a simplistic article for my team here https://www.fatrank.com/identify-the-root-rare-and-unique-attributes-of-an-entity/

Im absolutely loving the semantic SEO course and it has certainly transformed the quality output from my writers

Thank you for your kind comment, James. It is always great to see you. Sorry for checking the comment area late. 🙂

A pillar of information and many takeaways from your research and work. Thank you for making the life of SEOs easy.

Thank you so much, Umair!

This is a great in-depth about entity attribute & value.

Thank you, for your kind review about our article.

Great Article Koray. It had added so many new concepts, which I was struggling a little bit to understand. I have one question, “How to do Content Configuration”.

Contnet configuration is a method of changing the word order, and connections to increase the relevance.

For ranking the query “Financial Independence”, the specific contextual phrase should be the subject in the sentence rather than the object.

For example, “Financial independence is liberty relies on sufficient personal economics” sentence is better than “Financial advisors help for achieving financial independence”.

The second sentence focuses on the job description of financial advisor, while the first one gives the function and prominence of the financial independence.

What a indepth Information it was! Thanks Leader

Thank you so much.