Initial ranking score and historical data for SEO are two useful concepts to understand a website’s (source) potential to be able to rank for a query network, phrase variations, or topical cluster initially. Initial Ranking and Re-ranking are two different phases of the ranking process of Search Engines. Understanding Initial Ranking and Re-ranking Processes, and Search Engines’ perspective for ranking related processes, is important to be able to initially rank a source for a query network better.

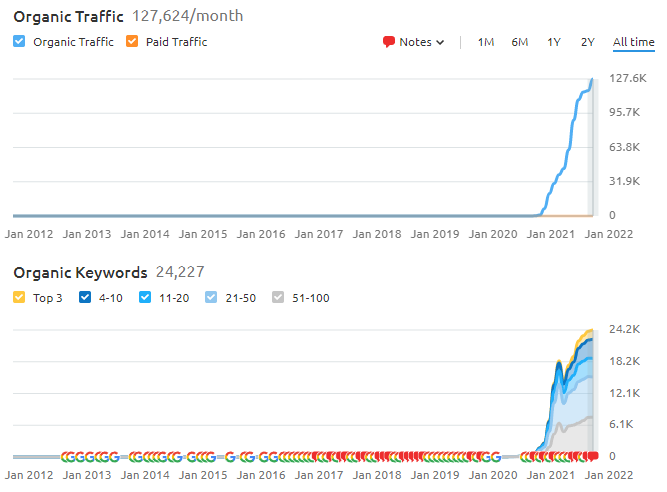

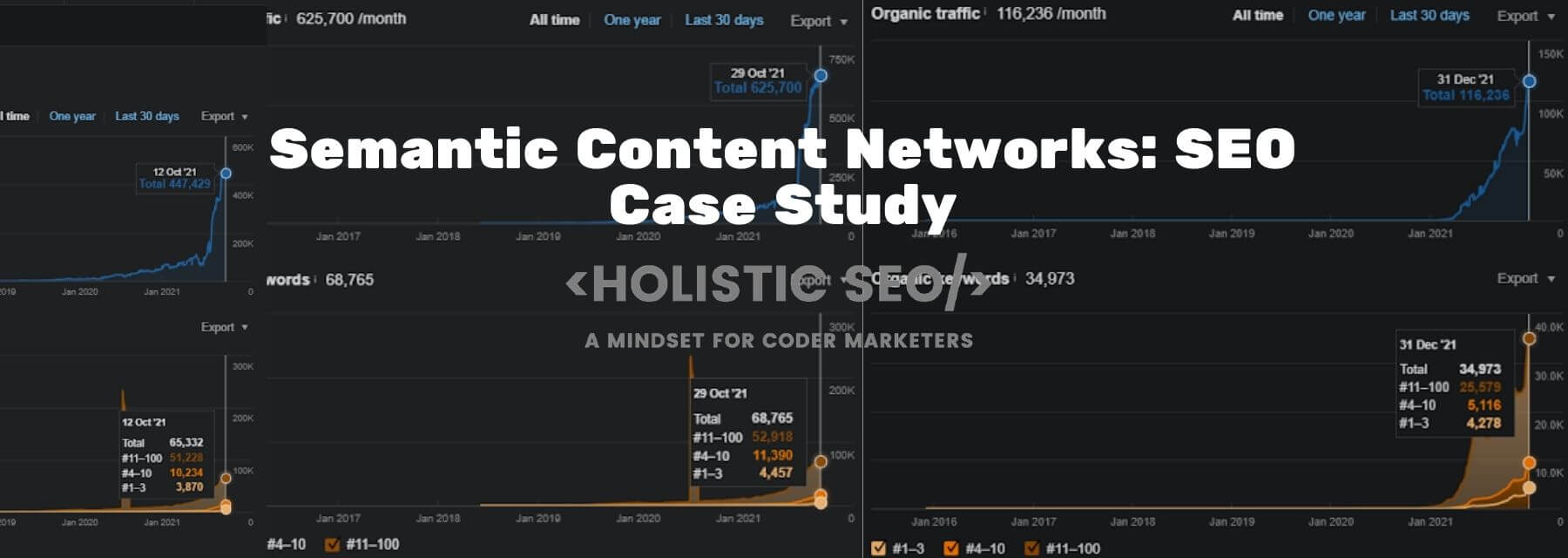

Initial-Ranking and Historical Data Importance for SEO are mainly connected to Semantic SEO along with Technical SEO, Web Page Loading Performance, Source Popularity, and Reputation. During the Initial-ranking and Historical Data SEO Case Study, four websites will be given as an example. These websites’ names and their organic search performance change during the SEO Case Study can be seen below.

- 10,000 Queries, Daily 300 Clicks, 40,000 Impressions in 20 Days (Initial Ranking), İstanbulBogaziciEnstitu.

- 33,000 Queries, Daily 11,000 Clicks, 150,000 Impressions in 65 Days (Re-ranking), İstanbulBogaziciEnstitu.

- 3,500 Queries, Daily 200 Clicks, 2100 Impressions in 30 Days. (Initial Ranking), Vizem.net.

- 32,000 Queries, Daily 5,000 Clicks, 54,000 Impressions in 30 Days. (Re-ranking), Vizem.net.

- 416,000 Queries, Daily 2,000 Clicks, 890,000 Impressions in 45 Days. (Initial-Ranking), Site-3 (Not disclosed).

- 185,000 Queries, 1000 Clicks, 201,000 Impressions in 6 months. (Initial-Ranking), Site-3 (Not disclosed).

One of the newly (entirely) published websites’, how it is ranked initially, and how it is re-ranked by the search engine can be seen below. This example is from Site-3.

The example below is also for the same website and its increase in terms of organic search performance.

Another example can be seen below.

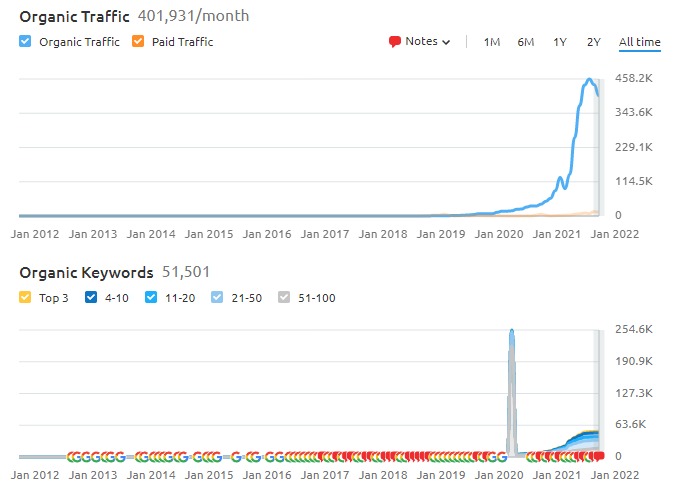

The last situation of Vizem.net can be seen below.

After 30 days, this is the last version of Vizem.net from the Semantic Content Network SEO Case Study.

The three weeks later versions can be seen below.

The last situation of IstanbulBogaziciEnstitu.com can be seen below.

Vizem.net’s last situation can be seen below.

This is the 30-day later view of Vizem.net for the Semantic Content Network SEO Case Study.

The Videos to Explain How Google Ranks are presented below. Semantic SEO Strategy to convince Google’s ranking algorithms is presented below.

An algorithm analysis for Google’s Ranking Systems is below.

450% Increase in 3 Month: From 60,000 to 330,000 / 2,000 to 11,000 Daily/Monthly Clicks – İstanbulBogaziciEnstitu

The first website’s industry is education for students, workers, employees, and any person from daily life with every kind of possible skill improvement. Since the first website is about “paid course selling”, it is easier to find proper query templates, intent templates, and document templates to create a contextual attachment between the query and the document around a specific topic and interest area. Most of the queries include a verb when it comes to improving a talent, or skill via a paid course such as “coding, convincing, managing, concentrating”, and they also include a context with a noun, or object such as “business, motivation, software, resources”. When these different contexts are combined based on a topical map, they can consolidate the expertise and categorical quality of a source for better initial ranking.

During İstanbulBogaziciEnstitu ’s SEO Project, the advantage of the initial ranking has been used from day one. The organization of the information on the open web by a search engine can be perceived by the search engine’s preference for ranking the documents and clustering the queries, and sources along with contexts. Based on this, for the first website of the “Initial-ranking and Re-ranking SEO Case Study”, the methods below have been used.

- Choosing a contextual gap between the educational websites and the audience for the specific topic.

- Deciding the best possible centroids for the clustered queries from the specific topic.

- Generating the best possible query templates.

- Generating questions from the query templates.

- Matching the answers with the generated questions.

- Connecting the different contextual domains to each other based on related search activities.

- Creating the content network as ready to be opened to the Google Search Engine.

- Letting Google index all the content networks by noticing “strongly connected components”, and attached “contextually matching queries and documents”.

During the first website’s SEO Case Study, I noticed the things below.

- The documents from the content network that are placed higher, and earlier indexed faster than the delayed articles. If a document has been linked before then others within the seed page, and if the “local interconnectivity” of these pages supports this order, it will be indexed than others.

- The first indexed documents have changed their rankings in a faster way than others. In other words, the “re-ranking” has been triggered earlier than delayed documents within the content network.

- If for certain types of entities, the source doesn’t have enough historical data, the initial ranking will be lowered.

- Google can index the same amount of URLs with certain day breaks. It shows that Googlebot creates a frequency and regularity for the indexing. And, when the first indexed pages are successful to overcome the quality, relevance, and accuracy threshold, search engines will increase the frequency and velocity for indexing by decreasing the crawl delay.

- Seasonal Events can be used for triggering a re-ranking event.

- A seasonal event can include multiple trending topics, and clustered queries, if a new source publishes a new content network with high quality for the specific topic with high accuracy, search engines will prioritize the indexing documents from the same topic while refreshing the old content from its context.

- During this prioritization, a new source can have the benefit of creating historical data for the specific topic, and this historical data will help for further indexation, and improve the overall quality, and organic search performance.

- Having high impressions from certain types of trending topics, and related queries will improve the average position of the related documents. These documents will protect their rankings if they are successful to overcome the quality threshold by providing better structured factual information organization than existing competitors.

Below, you will find the specific seasonal event, and its effect on the average position and total click count.

On Jun 9, 2021, the related content network has been opened to Google.

The targeted seasonal event was on 28 July.

- The target was to have the seasonal SEO Effect for gaining the trust of the search engine by gathering all the historical data possible.

- To do that, the contextual vector, hierarchy, neighborhood content, and knowledge domain terms are arranged with related information and facts.

- Sentence structures, the related entities, and contextual bridges across the topical map have been created.

- The contextual hierarchy is provided with related taxonomy, and also related search activity for the specific topic’s subtopics.

- Until 28 July 2021, search engines were indexing between 5-25 pages a day.

- After 28 July 2021, it was more than 100 per day, and soon after, all subsections were indexed.

- Before the seasonal SEO Event, there was an unconfirmed Google Update.

- This specific update was a test for the source, and it is not affected permanently.

- The average position before the seasonal event was between 11-13.

- During the seasonal event, the average position was 7.6 since Google prioritized the relevant sources.

- After the seasonal event, the average position was between 9.0-10.

- There was another unconfirmed update on the first day of September.

- Until and during the unconfirmed update, the seasonal SEO event’s effect continued, but Google tested the source one more time.

- During the update, the average position was 13.8, and the daily average click has dropped to 3,000 – 4,000 from the 12,000.

- After the unconfirmed update of Google from the first day of September, it was 28,000 daily clicks with an average position of 8.

- During the 5-6-7-8 of September, there was another sequential unconfirmed Google Updates, and Google has normalized the traffic and the average position as 8.6, and the average clicks per day were between 12.000-13.000.

A comparison of the 2020 September and 2021 August shows the true change of the SEO Project in the best way.

This is an example of using the historical data, the initial-ranking advantage with high coverage, and first-day comprehensiveness for a topic by triggering a re-evaluation for all sources during the unconfirmed updates. And, the sections above explain how Google has tested the source thanks to a seasonal trending event and re-ranked the source for further testing.

The comparison of 2020 July and 2021 July.

When enough historical data, and “implicit user-feedback” have been provided, the source has ranked last time with a high confidence score as after the seasonal event with nearly the same average ranking and click amount.

The comparison of March 2021, and August 2021.

Below, you will see the changes in the daily crawl requests for İstanbulBogaziciEnstitu during the SEO Case Study of Initial-ranking and Re-ranking examination.

There is a gradual increase in the crawl requests and the total download size. After the Seasonal SEO Event, the gradual increase continued. And, the unconfirmed Google Updates’ correlation with the requested amount of Googlebot can be seen. Since the crawl data from GSC is partial, still performing a full analysis is not possible, but acquiring the log data for every SEO Project for a log analysis might not be possible.

The indexation process can be seen below.

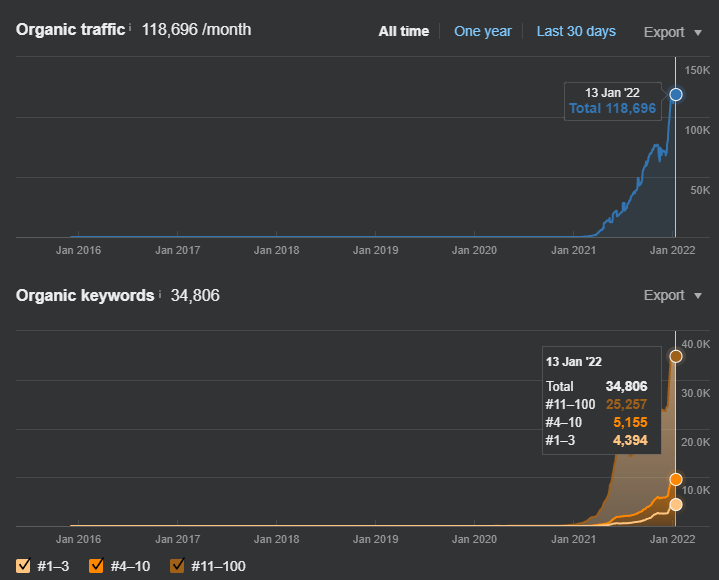

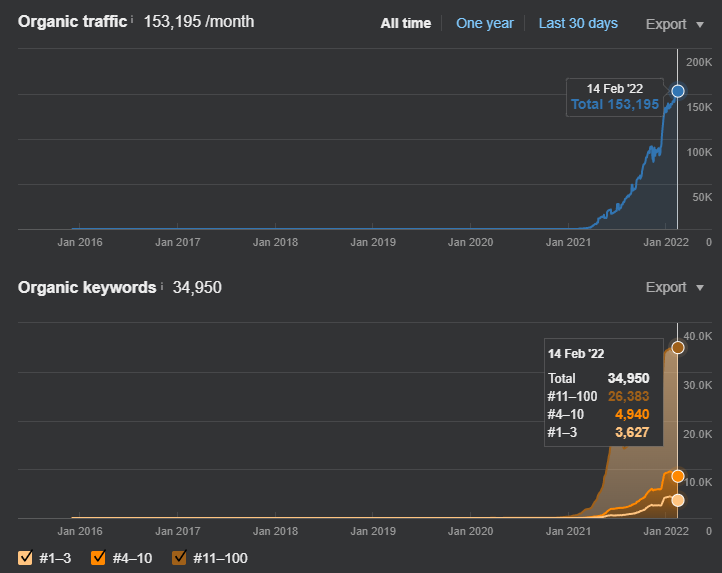

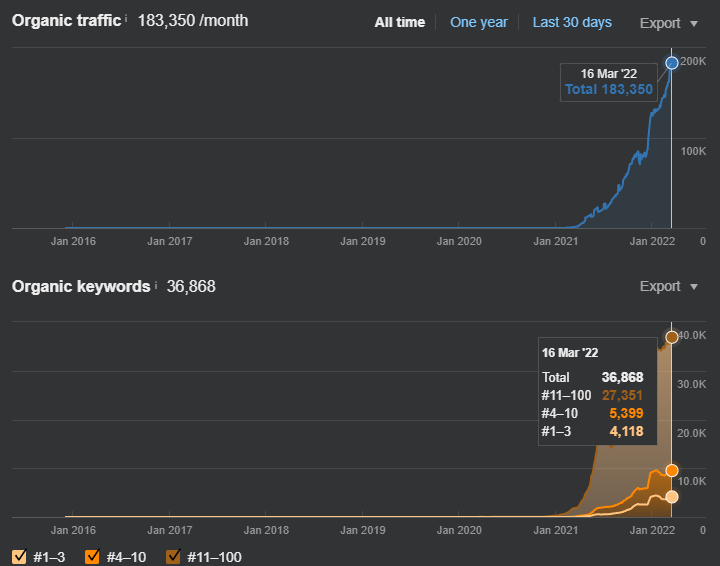

The “total crawl requests” and the “impressions”, and the “indexation” process, along with the “unconfirmed updates of Google” has an alignment. During the SEO Case Study, you can see the change from SEMrush as below.

The change and the success can be seen from Ahrefs as below.

Below, you will see the change only for the published content network.

All this change happened in 3 months. The same content network can be seen from SEMRush as below.

While I am writing these lines, I have published another topical map with sub-topics that are related to the content network that I have published previously. Below, you will see the graphics for only the newly published content network. And, these graphics are from the second day of launch.

From Ahrefs, the second day of the new published content network can be seen below.

From some videos, I actually have some view of the first day, 7th day, and 20th day of the previously published education topical map’s success based on Semantic SEO practices with initial ranking. Below, you can see the images of the first published content network’s success and change for the first 20 days.

1

2

3

I am showing both of the content networks’ existing and previous versions because they are similar. Once, three months ago, the educational topical map didn’t have even a single query within the first 3 rankings. With 0 backlinks, only with SEO Theories and Search Engine Understanding, it has more than 1500 queries in the top 3, and more than 32,000 queries in total in 90 days.

In this context, let me explain the initial-ranking advancement. Above, we have processed the average position changes during the indexation of the education topical map (first published content network) and we compared it to the different timelines to show how the confidence judgment of search engines changes. In this context, below, you will see the initial ranking of the new published content network which focuses on “jobs, and occupations”.

This is a screenshot from the first 7 days of the related subfolder, and it has an average of 6.5 positions. It is two times better than the initial ranking of the content network that focused on “education”. The second content network has a topical map for the “jobs and occupations”. As you can see, the “jobs” and the “education”, “skill improvement”, and “career advancement” are related to each other. And, semantically created topical map hierarchies, and aligned content networks with knowledge domains, contextual domains, and layers are supporting each other by making the same source more authoritative.

Below, you will see the initial ranking of the “educational topical map” which is the first content network that I launched on 9 June 2021.

You can see the improvement of Topical Authority and its reflection on the initial ranking. Since I love to focus on theoretical concepts of Search Engines, I will process the “initial ranking”, and how it can be improved, how to generate the content templates, based on interest areas and query templates, or how to connect different content networks to each other. To plan a topical map, to catch a seasonal event, to gather historical data, and convince the search engine with comprehensive information and high topical coverage, semantic SEO and search engines’ nature should be known by SEOs.

Broad Core Algorithm Update and Unconfirmed Google Update Effects on the İstanbulBogaziciEnstitu

A Broad Core Algorithm Update can change the overall relevance radius and ranking efficiency of a website. A search engine can change the crawl priority, indexing delay, and a confidence score for rankings with a Broad Core Algorithm Update. Websites can experience a relative improvement after a broad core algorithm update thanks to an Unconfirmed Google Update, but reversing the entire effect of a Broad Core Algorithm Update can happen with another Broad Core Algorithm Update. In the context of re-ranking, and initial ranking, both of the algorithmic update types, whether it is an Unconfirmed Google Update such as a Phantom Update, or Broad Core Algorithm Update (BCAU), can affect the initial ranking and re-ranking processes, assignments, and potentials along with the relevance radius.

The Google Broad Core Algorithm Update from June affected İstanbulBogaziciEnstitu negatively. It means that all the values that have been seen from above could be way much better than the actual values since Broad Core Algorithm Updates affect the initial-ranking potential, and re-ranking processes’ negativity or positivity.

Below, you can see the negative effect of the June Broad Core Algorithm Update for İstanbulBogaziciEnstitu .

Since the website has acquired a tremendous amount of organic traffic increase shortly after, the negative effect of the broad core algorithm update can’t be seen clearly. Below, you can see its negative effect a little in clarity.

A Broad Core Algorithm Update can be won by a website even if it loses organic traffic for the previous time, or it can be lost even if the website increases its organic traffic for the previous period. A broad core algorithm update can slow down the rankings of the articles that will be published after the update’s launch, or it can improve the initial rankings. A relevance radius, prominence score, and quality score for the overall source can be recalculated by re-distributing the source’s position on the query clusters based on topical borders. In the example of İstanbulBogaziciEnstitu, despite the negative effect of Google’s June Broad Core Algorithm Update, the website is able to rank better than sources with authority, historical data, and relevance for a specific topic thanks to Semantic SEO implementation.

Further Information on the Second Content Network Launch

While writing the Initial-ranking and Re-ranking SEO Case Study and Research, I was able to check the second content network’s initial-ranking process with continuous re-ranking. Below, you can see a video from three different sources with the daily improvement.

Google Search Console Data for the Second Content Network’s first 10 days can be found below.

When the query count increases, the positional data for the specific queries change. Whenever more web pages are indexed for more queries, the average position decreases, after collecting the necessary amount of historical data and relevance consolidation from the content network, the average position rises again with the help of a positive re-ranking effect. You can see the relevance of impressions and the average position with the same perspective.

The re-ranking decay for a web page from the semantically created and configured content network is lower than the previously created and relevant to the second one thanks to the source’s improving historical data and confidence score of the search engine.

- An inverse proportion between the average position and the increasing impression means that the source shows itself for more queries.

- And, if queries, impressions, and average position increase together after a short period of time, it means that the re-ranking happened after the initial ranking.

Ahrefs Data for the Second Content Network can be seen below only for the first 10 days.

Some key points from the Ahrefs data for the second content network’s first 10 days can be found below.

- Taking the queries for the first 3 rankings with the first content network has taken 60 days.

- With the second content network, it has taken only 10 days.

- This “re-ranking positive effect” and “re-ranking decay” are the signal of the topical authority increase.

SEMRush Data for the Second Content Network can be seen only for the first 10 days.

Below, you can check the first content network’s overall performance for the last 3 months.

From zero to 712,000 organic clicks can be acquired with semantic SEO and “Initial-ranking” advantage. And, nearly 15% of these organic clicks are acquired in a single day for the historical data advantage and a faster re-ranking process.

What are the Last Situations of the Content Networks that are Published?

Since I do not know when I will publish this long SEO Article and Case Study, I have put this heading here. You can see the last situations of these sections below.

The last Situation of the first content network is below.

The last Situation of the second content network is below.

15 October 2021, the performance of the Second Semantic Content Network of İstanbulBogaziciEnstitu which is the 19th day of the launch.

The third semantic content network’s potential is larger than the first two in terms of the topical coverage and the search activity frequency, thus, with the support of the first two semantic content networks, the third one can have more than 200,000 queries alone with more than 1.5 million organic sessions. Since it won’t have a detailed configuration and semantic closeness, the actual numbers will be lower than they should be.

In normal conditions, only this SEO Experience deserves a separate article, because there are way much more details. If I do not publish this article, I will put the change of the second content network that focuses on the jobs and occupations as well below with an extra note. Or, I will add it later.

Now, we can focus on the website which has a different methodology that has been followed for initial ranking and the better re-ranking processes.

From 0 to 10.000 Daily Organic Clicks in 6 Months – Vizem.net

For the second website, I can’t write a proper organic click increase percentage since it comes from 0. On Vizem.net, the methodology that I use for the initial ranking and the re-ranking are entirely different. And, to explain these differences, I will need to explain the knowledge domains, contextual domains, context qualifiers, query templates, question-answer pairing, and entity association based on entity types, related-possible search activity coverage, search intent coverage, query paths, broad appeal, and more. Since my main focus here is “re-ranking” and “initial-ranking”, I will explain some of these terms based on “ranking” algorithms of search engines in future subsections.

What is the background of Vizem.net?

Vizem.net is a Visa Application Website. The website doesn’t have a specific purpose in terms of country, region, or visa-related process. The client wants to cover everything for a specific industry, which is a great advantage for the “Broad Appeal” which is a quality signal. Based on this, the Query Parsing and Processing methods of a Semantic Search Engine are used to understand the importance of the attributes and possible search activities based on them.

Query Parsing and Processing methods of a Semantic Search Engine rely on named entity recognition, phrase-based indexing, co-occurring matrices, word clusters, word vectors, and semantic role labels along with fact extraction about entities. In this context, to create a proper topical map for topical authority, a person should be able to find the mutual parts of queries, their possible templates, and “next word-sentence prediction” algorithms’ reflexes. After the query parsing and process analysis, the important part within the queries was “countries”. And, all the taxonomy and ontology have been used based on entities with the type of countries.

To keep things around the “re-ranking” and “initial-ranking”, I won’t go deep on the topical map creation, and query processing. To learn more about query parsing and processing methods of a search engine, you can read one of the related presentations.

What are the methodological differences between İstanbulBogaziciEnstitu (Site One) and Vizem.net (Site 2) SEO Case Studies?

Vizem.net’s main methodological difference from the İstanbulBogaziciEnstitu is…. Continue

What is Initial Ranking for SEO?

Initial ranking is the first assigned ranking value to a web page for a query within the Search Engine index. Initial Ranking can be changed during the re-ranking process by the Search Engine, according to the changes over time for the document based on internal or external factors. Initial ranking value for a web document can affect its historical success and discoverability itself. Based on this historical data, the re-ranking scores will be altered by the initial-ranking score irreversibly. Thus, an initial ranking score is important to make a web page document successful and ranks it higher on the surfaces of a search engine.

Every web source can have a different average initial ranking score for a different topic, query pattern, entity, or context-based on its topical coverage, authority, and popularity. Historical data for a source can affect the initial ranking score for the web page documents that belong to the source. If there is high historical data with positive user feedback, and relevance, quality, and reliability signals, the initial ranking score will be boosted furthermore.

To boost the initial ranking score of a website without historical data, topical authority, and popularity for a query network, broader appeal, deeper information, and faster topical map completion can be used.

What is Initial-Ranking Potential for a Source?

Initial Ranking Potential is the potential of a source for increasing its initial ranking value for a topic, query network, or phrase variation based on its historical data, topical relevance, authority, and click satisfaction evaluation. Initial Ranking Score and Potential can be perceived, be affected, and affect the things below, in the context of SEO.

- Same textual and visual content can have different initial rankings within different sources.

- The same textual and visual content can be published in multiple sources, and Google can assign the one as the canonical version based on the initial ranking score.

- Initial ranking score and potential can be increased by updating, and publishing content more frequently for a certain topic.

- Topical coverage, topical authority, and topical relevance are directly related to the initial ranking score.

- Source authority, popularity, and reliability-trust signals can affect initial ranking potential.

- An initial ranking score of a source can impact the source’s re-ranking process and indexing delay.

- The initial ranking potential of a source can be measured by the initial ranking of a document from a source for a specific, certain type of query.

- The initial ranking score can be influenced by the Page Speed, Technical SEO, grammar structure, and overall quality score of the source.

- Initial-ranking score and potential can increase after a Broad Core Algorithm Update for a source.

Full Domain

How Does Initial Ranking Work for SEO?

Initial ranking score (value) assignment for a source can affect different HTML, PDF, CSV, or PPX Documents’ rankings on the SERP. Based on this, Initial Ranking for SEO can be used to boost a website’s initial ranking for better organic search visibility. For websites such as News Sites, Blogs, E-commerce, or Affiliate Sources, an initial ranking score can be used to rank these websites’ documents faster.

To rank content faster with better rankings, initial ranking concepts and scores can be used to understand the Search Engines’ perspective. The overall quality of a website and clarity can help a Search Engine to assign a source with better initial ranking potential for the documents of the website. Thus, for certain topics, contexts, entities, and queries, some sources can be ranked initially with higher rankings. To use and measure the Initial Ranking Score for SEO, the methods below can be used.

- Auditing the initial ranking of a source for a specific topic.

- Checking the synonyms, phrase variations, and related queries for the initial ranking of the document.

- Checking the re-ranking process, speed, and direction for the document.

- Comparing different categorical queries and documents for the initial ranking value.

- Checking the competitors’ initial ranking.

- Auditing the indexing delay for certain topical content.

- Checking the partial indexing and rendering phases of a document with the Search Engine perspective.

- Improving the neighbor content, publication, and update frequency for the information amount on the source for a certain topic and website segment.

- Decreasing the average web page size to decrease the crawling, and indexing cost.

- Increasing the source’s popularity and reliability, along with its reputation.

- Completing a topical map by covering more topical nodes faster than competitors.

- Improving the source size with unique content, information, and sentence count faster than competitors.

- Using the front-title words for checking the average initial ranking value and potential of the source.

A high initial ranking score will decrease the time decay for the re-ranking process and its positive direction intensity.

Why is the Initial Ranking Score useful for Search Engines?

The initial ranking score can decrease a search engine’s time and resource cost for the evaluation of a document to rank it. Initial Ranking Score Assignment can rank documents on the web more efficiently. High Initial Ranking Score Assignment can be used for the authoritative sources for different topics. Topical Authority Assignment and Semantic Clustering of the information on the web complete the Initial Ranking Value for sources and documents.

Google uses Machine Learning algorithms to allocate Web Crawling Resources to different sources, and also source sections. Initial-ranking scores can be different for different web source sections. The initial ranking score assignment for different website sections is below.

- A Search Engine can assign a high initial ranking score to an eCommerce website’s specific product pages for specific product types, and features while for the informational content on these pages, different website sections can have a lower initial ranking score.

- A Search Engine can try a source by increasing its initial ranking score and evaluation to see the source’s link proposition value.

- A Search Engine can assign high initial-ranking scores for certain keywords, entities, attributes, or query patterns for certain source sections.

- A Source section can be determined by author name, web page layout, URL or Breadcrumb breakdowns, Structured Data, or question and answer format of the content.

- A Source section can be evaluated based on the external references, whether it is a link, mention, or referenceless quote from the original source.

- A Source can have a better initial ranking score for specific sessions or seasonal trends based on its source identity, or source section identity.

- A Source’s initial ranking can be affected based on the visual content, or visual content material for specific site sections. A query can seek a video, image, infographic, or visual explanation, and representation. If a source section has more visual content than others, it might have a better initial-ranking score for these types of queries, and questions.

- A Source section can have a better or lower initial-ranking score based on the unique sentence count, unique paragraph count, unique content count, or grammar errors, writing style, and unique terms, information, facts, prepositions, and structured opinions with numeric values, and statistics.

- A Source can be clustered with other sources based on links, content similarity, webpage layout, or organizational and entity profile features. If a source is clustered with other sources, the initial ranking factor can be evaluated together with other sources.

- A Source’s different sections can be clustered with different sources. If a source’s news section is closely related to a specific news site, while the recipe section is closely related to a recipe site, and if the blog and product sections are closely related to other types of sites, the initial ranking evaluation process can be handled by different clustering processes. To modify the initial ranking score of a Search Engine, a source should target to become the representative of the quality source clusters for specific topics, and contexts.

Thus, an initial-ranking score is helpful for Search Engines to understand the web faster, and introduce the new web documents to the web search engine users within the query results with the best relevant results. If a source is authoritative for a topic, entity, or phrase group, it can be evaluated faster, and ranked better initially. Initial Ranking Algorithms decrease the cost, the time needed, and the computation needed for the search engines in the context of crawling, indexing, ranking, and serving.

An initial ranking algorithm can be supported by a re-ranking algorithm.

What is Re-ranking for SEO?

Re-ranking is the process of evaluating and modifying the initial rankings and existing rankings of a query result instance. A Search Engine Result Page includes the filtered web page documents for a specific query based on relevance, quality, reliability, authority, popularity, and originality. The re-ranking algorithm’s purpose is to support the initial rankings by increasing the efficiency, quality, and sustainability of the SERP with different SERP Features, and sources on the web.

Re-ranking algorithms for a search engine are crucial to continuing to satisfy the users’ possible and related search activities. Calculating the different search intent possibilities based on simple or complex user queries, and matching these search intents with different documents, requires different types of re-ranking algorithms. A Re-ranking process can be evaluated based on relevance, and another one can be evaluated based on the freshness of the document.

ForexRecommend, initial ranking, sitemap from robots.txt

Encazip, Authoritas URL-Link

BKMKitap.com Link

How Does Re-ranking Work in SEO?

When a web page is initially ranked, the re-ranking process starts after the first feedback from the web. The feedback from the web after the initial ranking process can be a timespan, user behavior, source changes, web page changes, external reference changes along with internal and external popularity signals. Re-ranking algorithms can be triggered to make a Search Engine reevaluate a web page so that the rankings can be changed.

How to Trigger a Re-ranking Process from Search Engines for SEO?

To trigger a re-ranking process, the web page document can have a new external reference, internal reference, or a content change. A re-ranking trigger can be acquired with controllable and uncontrollable factors for SEOs.

- Uncontrollable factors of re-ranking triggers for SEO are trending news, query demand changes, Search Engine Algorithm Updates, or bugs, and seasonal changes.

- Controllable factors of re-ranking triggers for SEO are external, and internal changes at the level of a website, web page, and intersection of external, internal, website, and web page areas. Such social media accounts of the brand are internal for the brand but external for the website in the context of SEO evaluation.

The next section of the article has many learnings from Dear Bill Slawski. As the biggest fan of Bill Slawski, I owe many things to him, especially my vision. Thus, we all should thank him for the vision and the inspiration that he gives.

Koray Tuğberk GÜBÜR

What are the Re-ranking Methods and Criteria of Search Engines?

The criteria and methods that can be used by a search engine for reranking the web page documents for user queries are listed below.

- Rerank search results by filtering duplicate, or near-duplicate content: A search engine can filter the duplicate content or highly similar content to the other instances without any additional value or prominent source attribute. Even if a copy or duplicate content is initially ranked, the specific content can be negatively re-ranked by the search engine based on other criteria.

- Rerank Search Results by removing multiple relevant pages from the same site: There are three different possible outcomes of multiple relevant pages for a specific query, one is cannibalization, one is being clustered for different subqueries, and one is outranking computation. If two web pages are closely related to a query, they can cannibalize each other and create ranking signal dilution. If two web pages are competing with each other for different attributes of the same entity by subqueries, they can support each other by being clustered. In the last option, the more relevant, externally, and internally popular one can outrank the second page to be reranked.

- Rerank search results based upon personal interests: Personalization of user, and user segments are closely related to the historical data of a web page, and the source. A search engine can track a user’s interests, and cluster the user with a user segment to modify the search results for better quality SERP instances. A search engine can rank a web page document for a user’s personal interests higher or lower than the usual. A query pattern, past search activity, and visited sites can affect the rankings on the SERP for individual users. These modified ranking results for personal interests can be used to modify the default SERP versions for specific trends, or SERP instances without any personal information.

- Rerank search results based upon local interconnectivity: Local interconnectivity between the web page documents includes the links between documents within a closely related web graph. If there are “n number of documents” for a query, if these documents mostly link certain sources, and if these referenced sources link some other sources, local interconnectivity for specific documents can be measured. Thus, the Programmable Search Engine of Google includes a parameter for retrieving documents if only they link a specific document for a query. To learn how to use the Custom Search Engine API of Google with Python, read the related guideline.

- Rerank search results by sorting for country-specific results: A user may want to see results only from a country-specific domain or country-specific IP. A topic or entity can be more popular for a specific country. In the field of SEO, from time to time, the IP Address of the source (website) can affect the local search rankings of a domain. If a domain has an IP Address from Poland or Australia, it can have higher rankings for the users from the specific country. Also, even if the IP Address is from some other country, if the source has lots of external link references from other countries, the Search Engine can relate the specific source for these localities, or countries again. In some cases, if the source has an extensive amount of content about a country, the Search Engine relates the source with that specific country for reranking processes.

- Rerank search results by sorting for language-specific results: A user’s operating system language, browser language, or communication language can affect the reranking process for specific queries. Query language, and preferred documents’ languages, can affect the reranking computation for a Search Engine. If a user is located within a multilingual country with a device with the local languages, the results can be mixed with the country-specific and language-specific results based on the query language. Authority for a topic and the initial-ranking potential of a source can help a website to rank higher across different languages and regions for different queries, devices, browsers, operating systems’s and user languages.

- Rerank search results by looking at the population or audience segmentation information: Audience Segmentation is the process of creating a demographic profile that includes gender, age, location, interest, income, occupation, character, and condition profile for the larger user segments. The first population assignment can be created with the first ranking score (initial ranking score) during the document retrieval process. When the document is retrieved, it will be matched with an audience, which is called the first population. This matching will generate a “selection score”. The same process will be performed for the second document too. After the second “selection score” is created, the second audience and the first audience will be compared to each other for creating a better-consolidated population and audience segmentation. When the audience profile is finalized, the selection scores for queries and documents will be refreshed, and the reranking process based on audience segmentation will be completed. During the audience segmentation for different documents for reranking by the Search Engine, historical data has been collected and used.

- Rerank search results based upon historical data: Historical data can be used to gather information across historical changes for a document. Historical data can define a document again based on historical changes for content, visual design, layout, brand entity, and external and internal popularity changes. A search-demand change or trending topic can change the historical importance and quality data of a document. When the historical data is used, an older document with a larger dataset for the successful click satisfaction feedback can have better rankings. Thus, historical data deficiency is one of the main disadvantages for the initial-ranking score of the new sources for a topic or entire web.

Systems and methods for modifying search results based on a user’s history

- Rerank search results based upon topic familiarity: Topic Familiarity refers to the expertise and detail level of the document, not the topical familiarity between different topics. A document can have more detail for a topic while another document might have a basic summary for the same topic. A Search Engine can understand the user’s preference, and rerank the documents on the SERP for satisfying the users’ document preferences. Writing style, opinions, design, source type, stop word count, and information count can be used to understand the document’s familiarity with the topic itself.

- Rerank search results by changing orders based upon commercial intent: According to the search intent, a search engine can change the order of the documents on the SERP. If a user explicitly states that the search intent has commercial characteristics, the search engine can change the SERP design, features, and preferred sources. One of the first examples of ‘commercial intent-based reranks’ was Yahoo’s Mindset.

- Reranking and removing results based upon mobile device friendliness: A search engine can use a Mobile-friendliness Indicator with mobile-friendliness indications to re-rank the URLs based on user agents. Usability of the web page is necessary to satisfy the need behind the query, and for certain user-agents, a search engine can re-rank a website overall, or a group of webpages, and individual webpages. According to the data, and implicit feedback from the web search engine users, a search engine can initially rank a website higher, and re-rank it for certain user agents and web user device types such as mobile phones, and tablets.

- Rerank search results based upon accessibility: Web accessibility is a user experience term that aims to improve the usability of websites for people with disabilities. Web accessibility is important for SEO since a certain amount of the intended audience of the source (website) has disabilities, making a website usable for everyone is an advantage to satisfy a broader audience on the web. Google has many “Voluntary Product Accessibility Templates”, and in Lighthouse, Google Developer Guidelines, PageSpeed Insights API, and their own Accessibility Guidelines they educate content publishers to make their websites friendly for everyone, including the ones with disabilities. A search engine can re-rank the sources on the web for different types of queries based on their accessibility, and friendliness for people with disabilities. If a website has color contrast issues or visual and non-visual communication issues for disabled people, a search engine can decrease the usability, and click satisfaction score of that specific website. Sundar Pichai, CEO of Google said many times “They build for everyone” by taking attention to the term web accessibility.

- Rerank search results based upon editorial content: A search engine can understand the theme of a query, and based on the theme of a query, editorial opinions, or editorial content can be favored on the web. Thus, having the correct content format, and tonality for a group of queries is important to take advantage of the re-ranking process, especially the editorial content. A query theme can be reflected on the verbs or the nouns, and entities within the query, based on the query theme, certain opinions, or certain sources can be favored, or not favored for being re-ranked by the search engine.

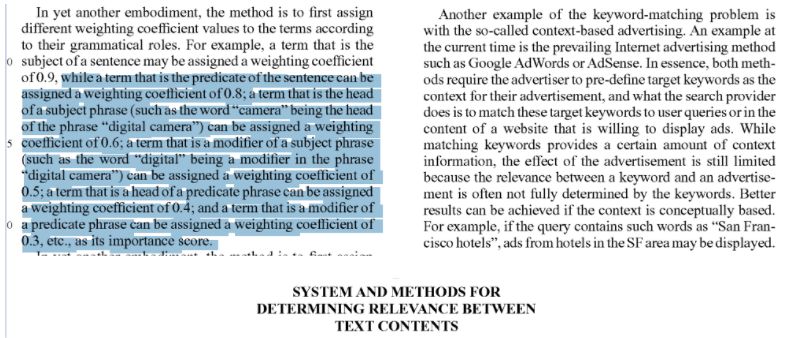

- Reranking based upon additional terms (boosting) and comparing text similarity: A search engine can re-rank the results based on text similarity to check whether the found documents are related to the specific query or group of queries. According to the research and patent of Query-Free News Search, Google can generate queries, match these queries to the portions of news articles, and check the similarity of articles to each other to filter out the irrelevant ones. In this context, text similarity can be an advantage to be ranked better during the re-rank process, but also being unique, comprehensive, and more informative can help to be seen as non-duplicate, and even the most authoritative source for the specific topic, and news for that topic. During the research paper, the A5-HIST and A4-COMP algorithms are used to generate queries from text segments to find the most relevant similar documents to be ranked together. A search engine can have obstacles in taking results for vague queries or being able to find similar documents for too specific queries, thus they also use A7-IDF and A6-3 algorithms to shorten the queries until they get a result. These details to find the text-similarity between news articles are important because it shows the obstacles of a search engine during the re-ranking process for a variety of queries, queries that are hard to satisfy, and newsworthy.

- Reordering based upon implicit feedback from user activities and click-throughs: Google and other search engines can use implicit user feedback and activity on the SERP, and in the web page documents to re-rank the sources on the query results. “Modifying search result ranking based on implicit user feedback and model of presentation bias” patent of Google, and “Query Chains: Learning to Rank from Implicit Feedback” research shows that instead of taking every user feedback into account, taking the implicit user feedback for longer timeline help to decrease the noise while increasing the efficiency. A search engine can understand the typos for queries such as in “Lexis Nexis” and “Lexis Nexus”, or it can use machine learning to evaluate the implicit user feedback. The good part of re-ranking with implicit user feedback for Search Engines is that it decreases the cost of the re-ranking process, and it is document-independent since it only focuses on the users’ behavior instead of the words on the documents. Thus, the relevance algorithms and implicit user-feedback algorithms support and complement each other to improve the efficiency of the re-ranking process.

- Reranking based upon community endorsement: Community endorsement, or social media shares, collaborative consistent web search behaviors can signal the popularity, reliability, and relevance of a source, or a web page of the source for a specific topic, trend, for a certain amount of time. Community endorsement can help a source to improve its prominence, search demand, and usability to the search engine. Click, selection, session ID, and statistical count of the number of times a page has been endorsed, bookmarked, shared, mentioned, or quoted. Community endorsement for re-ranking is also important to see the consistency and naturality of the external link-related references to a source, or a web page document. The link selection, or snippet selection without a “query term” and “terms from the document” matching can signal a synonym, and it can trigger synonym and query expansion algorithms. Thus, community endorsement can also affect the neighborhood SERP instances, and it can trigger a re-ranking process for these queries too.

- Reranking based upon information redundancy: Re-ranking query results based on information redundancy uses the “word distribution probability”, and “answer redundancy”. If the query breadth is narrow, and the query is vague, a search engine can try to understand the word distribution probability for possible and related search intents to re-rank the results to satisfy the search intent. The purpose of “reranking based upon information redundancy” is to decrease the number of off-topic documents and repetitive documents from the SERP for certain queries. Search engines rank 8-10 and in some cases with extra SERP Features 14-20 organic search results on one SERP instance. Thus, by re-ranking the documents on the SERP, it increases the total information redundancy that can be presented on a single SERP instance to improve the user satisfaction possibility. Information redundancy for documents can be acquired by calculating the word distribution possibility for every document. A result set and results from a result set can be compared to each other to improve the SERP’s information redundancy. Re-ranking based on information redundancy will improve the re-ranking process of multi-angled contents that cover multiple contextual layers for certain topics. For instance, “Abraham Lincoln Theme Park, Abraham Lincoln, and Assassination of Abraham Lincoln, Political Quotes of Abraham Lincoln, Personal Life of Abraham Lincoln” are different topics, and they will have different word distribution probabilities. According to the query, context of the query, and context of the user, the search engine will determine a different information redundancy value for all of these different but related topics.

- Reranking based upon storylines: A search engine can generate storylines from the results on the SERP. A storyline can summarize a result web page document for a query, and a storyline can summarize multiple web pages from the SERP at the same time. A storyline can be used to define the SERP documents thematically, and similar documents will generate different storylines, and be grouped together. The purpose of the reranking with storylines is to improve the quality of the SERP, and diversified result counts on the SERP. The global ranking mechanism of Google (PageRank) is to understand the important sources on the web that mentions a specific term, but it also doesn’t increase the quality of the second, third, or ninth page of the query results. There is no proper quality difference between the 11th-ranked document and the 44th-ranked document for a query. The re-ranking process based upon the storylines tries to improve the quality of SERP by decreasing the prominence of PageRank. Re-ranking based upon storylines can diversify the SERP with more relevant documents, “focused vocabulary” can be used to group pages, and co-occurrence possibilities can be used to see the context of the document. This method is not implemented as “storylines” but, search engines have used the re-ranking based upon relevance, and fact redundancy by extracting facts, prepositions from documents by calculating the importance score of the document for the given entities in terms of definition, and explanation.

- Reranking by looking at blogs, news, and web pages as an infectious disease: Turning search engine result pages’ documents into storylines via vocabularies, and co-occurrence probabilities, distribution and reranking documents on the SERP based on “blogs, news, web pages as an infectious disease” are related to each other. A search engine can see the most co-occurring terms together, and also it can recognize that some new terms and concepts started to co-occur together in certain types of documents, and sources within a certain amount of time. These co-occurrence changes can be used to detect the newsworthy queries, and trending terms in the context of search demand. And, a search engine can group different sources with different sources as storylines, or it can individually re-rank them based on the newsworthiness of the source for a possibly trending search query.

- Reranking based upon conceptually related information including time-based and use-based factors: A search engine can group the documents based on co-occurring terms. And, it can re-rank by re-grouping them based on user affinity, or the user segment. The user segment’s interaction, users’ location, and users’ selection change, based on selection change, if the co-occurrent terms change also, the search engine can refresh its grouping choice while re-ranking the search engine result page documents.

- Blended and Universal Search: Blended Search is the unofficial name of Universal Search. Blended Search or Universal Search is the name of mixing images, podcasts, movies, videos, news, dictionaries, questions, answers, knowledge panels, and other types of search engine result types into the regular blue link results. Blended Search or Universal search can change the order of the results, and it can push some of the results into the second page. Also, Universal Search can affect the ranking of the documents, since some of the documents will have a better relevance for image search, the universal search can boost the document if the images are also important for the specific query group. In this context, the universal search can affect the ranking of the results, and re-rank them based on the feedback from the users for different search verticals.

- Phrase-Based Indexing: A search engine can differentiate the bad phrases and good phrases from each other. Unknown entities, topics, or queries can be detected by the search engine if only they make a “good phrase” sample. A search engine can expand its knowledge base, and fact repository based on new good phrases, thus having a good phrase threshold is important to keep the knowledge base, and fact repository clean, and efficient. In this context, a search engine can group phrases, and check their co-occurrence frequency to re-rank the search engine result pages. From spam detection to the phrase taxonomy creation, or understanding the query breadth, phrase-based indexing is one of the most fundamental perspectives to keep SERP quality high. In the context of re-ranking the query results, good phrases, phrases from top-ranking results, phrases from authoritative sources, and phrases from side contexts can help a search engine understand the users, and documents around queries in a better way.

- Time-Based Data and Query Log Statistics: A search engine can change the look of the universally created SERP instance, and it can change or re-rank the documents on the SERP. A query can have a different meaning, and query intent from morning to night, or from winter to spring. In this context, the search engines can understand the meanings of the query, and user affinities in a better way to rank these documents in a better methodology.

- Navigational Queries: A search engine can understand the queries with only a navigation purpose to a specific web page. Subsequent click count, click reversion, mouse-over, and result selection time can be used to see whether there is a navigational character in the query or not. Query logs include a query, a search activity, and a selection activity that signal a query’s purpose, and the satisfaction of the click. If the users click only a single result, and if the document includes a brand, location, or any kind of name entity within the prominent relevance points, the query can be chosen as navigational for the specific document. A navigational query detection system can re-rank the documents, but also it can re-rank the non-navigational documents. If a source is chosen by the search engine, the other sources can be re-ranked according to the similarity, relevance, or closeness to the specific source in the context of the navigational query. Thus, for affiliate industries, or aggregators, still creating landing pages, useful information, and definitive documents for the brands or institutions based on navigational queries will improve the relevance of the source to the specific topic, and it will strengthen the historical data with the targeted user segment.

- Patterns in Click and Query Logs: a query log includes a search term and the document retrieved from the ordered index of a search engine. A click log includes the click event for the selection of a document. If the people that search for a query, also search for other queries in a sequence, these queries will be named sequential queries. And, if the sequential queries do not include the same terms, or same entities, or an entity from the same type, it doesn’t mean that they are necessarily relevant to each other. A search engine can cluster the queries based on their chronological order for a search behavior pattern, and the documents that are selected for certain types of questions, entity types, or attributes can be ranked higher. The search engine can recognize the context of the search session based on sequential queries. A sequential query can have a query path based on the queries that are used. For instance, if the searcher used the terms “banana”, “apple”, or “berries”, the query path will be “banana/apple/berries”. Different permutations can affect the ranking of the documents. The search engines can determine “content terminuses” based on different query paths. And, these content terminuses can be changed based on the searched query paths. A pattern can benefit a source if the source includes all the relevant and distinctive seed queries with different content items. In this context, patterns in click and query logs are also related to the topical authority. Based on different query paths, and search behaviors search engines will adjust a “proportional relation to the likelihood” for relevance, and the context of the search behavior. In terms of re-ranking of a search engine result page documents for a query, and the possible search intents, the query logs, click logs, query patterns, content terminuses, and content items can be used.

- TrustRank: TrustRank is a term that belongs to Yahoo initially, but the Google search engine also used it within its patents. In the context of re-ranking the search results, a search engine can use trust signals. The main theme of TrustRank is the process of understanding the trust signals for a source, web entity, or document owner based on links, or the people’s labels for them. Since both of the search engines (Yahoo and Google) used the term TrustRank, it also shows the significance of having search technology patents. And, thus Google didn’t focus on links for the TrustRank patent, because Yahoo did it instead of Google. TrustRank Perspective of Google relies on feedback from the people on the web for a web page. And, in this context, in 2009, Google published Sidewiki. Sidewiki was a system where people can state their opinions on a web page, and it was working as an extension for browsers. Also, Local Experts for Google Local Search, Inferred Links, mentions, reviews on the web, social shares, and community proof for a web page can affect a website’s, and the web page document’s TrustRank. Google’s TrustRank understanding is highly similar to the content distribution system of social search engines such as Facebook, TikTok, Instagram, or Twitter. If the web page has good feedback, and if it is labeled in a good way, the web page will have a better TrustRank. On the other hand, Yahoo’s TrustRank understanding relies on links. Thus, it is called a “Link-based spam detection system”. According to Yahoo, TrustRank is a link analysis technique related to PageRank. Basically, it uses the high authority pages to determine other high authority pages while recognizing the reciprocal links between different websites. Besides the TrustRank understanding of Google, and Yahoo, there are more methods, and patents from search engines that focus on trust signals whether it is from social activity or links. Thus, the TrustRank term has better prominence, because it focuses on the basics of trust signals such as “annotations for a page”, “labels for a section of a web page”, or “highly authoritative link for a web page”, and “size of a web page link farm”. In the context of re-ranking documents based on context and trust, the TrustRank is a useful and evergreen understanding for search engines.

- Social and Community Evidence for Quality: Social and Community Evidence for a web page to be shared, or interpreted shows how the web page content is prominent in a specific country and the industry. Social and Community Evidence can be followed on the Search Engine’s technology designs, or some of the official explanations along with the search engine result page feature from the SERP for social media. Social and Community Evidence is prominent for being ranked or re-ranked in the future.

- Customization based upon previous related queries: Customization based upon previous related queries is a method for re-ranking the search engine result pages based on query logs. If two queries are related to each other, and they are sequential, based on the following queries they can change the search engine result pages, just for that query log. The difference between customization based upon previous related queries and the “Patterns in Click and Query Logs” is one of them affects the conditional search engine query results, and the other one affects the universal search results by re-ranking. Based on this, if the query includes a region or a language signal, it can customize the ranking by re-ranking. If a source is more relevant to the specific region, or district, it can overrank others due to the query’s regional signals rather than just simple string matching. In this context, the misspelling queries, correlated user behavior in a short period of time, lexical relations such as synonym, antonym, acronym,

Various methods for ranking and re-ranking can be seen above. The initial ranking score definition and function can be checked below.

Customization based upon the previous related query reranking methodology can generate different relevance and ranking scores for different documents for different related queries. For instance, a web page can be more relevant for the first query, and the competing document can be more relevant for the second query. And, if the user searches for the first query, and if it chooses the first document, the competing document might not have the same weight as the second document in the context of ranking score calculation, and re-ranking.

- Being linked to by Blogs: A search engine can weigh some links on the web more than other links based on the type of source. A search engine can weigh different types of links from different types of sources based on the purpose, and the type of the query. Being linked to blogs, patents, and methodology belong to Microsoft. And, it is about differentiating links from each other. In this context, Microsoft thinks in the “Ranking Method Using Hyperlinks in Blogs” patent that being linked to blogs is valuable, and they can pass more PageRank. Because of this understanding, in the golden age of Black Hat SEO, the tier 1, tier 2, or tier 3 blogs, web 2.0 links, and Private Blog Networks, link farms were popular. Over time, the search engines decreased the value of links from blogs unless the blog doesn’t provide real value. This patent sample is from 2007, and in 2007 the blogs were also in trend. In this context, you can assume that the re-ranking methods of search engines and the re-ranking information points can change their prominence or importance over time. Another important statement from the same patent is that Microsoft classifies the ranking algorithms of the search engines as “content-based”, “usage-based”, and “link-based”. And, when it comes to relevance, we also have “query-dependent” and “query-independent” relevance points. And, this methodology is a “query-independent”, “link-based” re-ranking algorithm. In this context, the Search Engine tries to find some better ways against the spammy endorsed pages, and it mentions the “Systems And Methods For Ranking Documents Based Upon Structurally Interrelated Information” patent. Based on the “Structured Interrelated Information” understanding, it also modifies the PageRank calculation to give more weight to the non-endorsed, and informative links from blogs.

- By Ages of Linking Domains: The domain age is a controversial topic when it comes to SEO. By Ages of Linking Domains is another method of re-ranking for search engines, and it is related to the terms Google Sandbox, Domain Maturity, Expired Domain, Linkage, Link Echo, and Ghost Links. If a backlink is removed from a web page to another page, the PageRank decrease can cause a re-rank. If the link gets older, and if the link source domain is more mature, it can cause more PageRank increases by changing the re-ranking process of search engines. Based on the domain age, we can tell that the domain age is actually an important source for historical data, selection scores from queries, and consistency-trust signals for the industry, and the users. But, this situation caused “Fear, Uncertainty, and Doubt (FUD)” as a sale tactic for domain registrations. And, since it has been exploited by domain registrars, Google repeatedly said that domain age is not a factor for ranking. In this context, we can tell that a link that lives longer with more consistency is better than a temporary and inconsistent link. Search engines try to find consistency over time for different ranking signals. In the “Ranking Domains Using Domain Maturity” method of Microsoft, different methods for the domain age are mentioned, such as registration date, the time that the domain is linked the first time, or it is crawled the first time. To give a link weight based on the domain maturity, the search engine mentions the “contributing domain” definition. Based on that, it is not just about maturity, but also contribution, and the value of the domain.

- Diversification of Search Results: Diversification of search results is related to the Information Foraging Theory and web search engine users’ behaviors on the SERP. A search engine can show different types of documents, or SERP features on the SERP to direct the users to certain types of documents, or search behaviors. Diversification of search results is closely related to the search intent distribution, and search activity coverage, along with the connection of concepts, and interest areas. In the Search Off the Record Podcast series of Google, Garry Illyes talks about “Universal Search” in the “Cheese, Web Workers, universal search, and more!” episode. Google can show different types of search features within a bidding system by measuring the implicit user feedback and its cumulative characteristics based on historical data. The search vertical icons below the search bar such as “image”, “video”, “news”, “shopping”, “flight”, and “books” can switch places, and order based on the dominant search intent, and search features. According to the search features, a search engine can differentiate its re-ranking algorithms. If the images on the SERP take engagement, or if it satisfies the users, image-landing page pairs can be used for the re-ranking process. Thus, while creating a web page for a probabilistic search engine, SEO should create a universal web page for every format of the content, by covering every contextual layer. Diversification of search results can happen with different methods in the context of re-ranking. For instance, a search engine can diversify search results based on fresh queries, documents, personalization, past queries, and the location of the user. Every query that is put into the search bar has an ambiguous nature, and search results diversification is closely related to satisfying all the possible search intents with different content formats.

Deduplication, and canonicalization, along with cannibalization, are also related to the Search Engine Results Page Diversification. A search engine might re-rank the results if a source (web entity, domain) has multiple results within the SERP for a query. A search engine might choose one of the competing web pages to outrank another one, or it can cluster similar ones with a different SERP design, such as “site-links”, or “one hat site-wide links”.

In the context of SERP Diversification, a search engine can use “non-diversified”, “diversified” systems, “aspectual tasks”, and “ad-hoc tasks” to test the users and their comparison in terms of “engagement stop-time” to the users.

In the figure above, the “a”, “b”, “c”, and “d” sub-figures compare the “stopping time of engagement” based on different Diversification of Search Results profiles. And, in the context of result diversification, it appears that complex search tasks require multiple queries, and if the results are not diversified, it ends up with multiple search behaviors.

In the context of search results diversification, Google has started to use “Dynamic Content” as below.

For a prominent and complex entity, if it overcomes the threshold of the “multi-faceted search behavior” count, it starts to use dynamic search features by multi-facets. It uses “videos”, “top stories”, “people also ask”, “knowledge panel”, “knowledge panel expandable questions”, “images”, “people also search for”, “suggested search queries”, and “blue links”, “see results about”, “local search pack”, “electric animals-industry” as related “entity types”.

The search results diversification as a re-ranking system, information foraging theory, and the “degraded relevance ranking”, or “relevance degradation” are connected. These terms are connected to the “search activity coverage”, and “search intent understanding” via sequential queries, query paths, and chained search behaviors. Prabhakar Raghavan (Vice President of Organic Search in Google) also has nice research on this topic.

“Are web users really Markovian” research paper tries to find some relations between the chained search activities. The latest MuM and LamDA announcements of Google during the Google IO 2021 are also connected to the “search activity coverage and relation”. In the context of re-ranking search results, a search engine can understand different contextual layers of a topic, related entities, their connections, and similarities, and a complex search task can be perceived. If the topic exceeds the threshold of complexity and prominence, you will see a “search result diversification”, or “dynamic content” on the SERP.

The source that covers all of the related contextual layers initially will have a better initial ranking for the specific topic. And as the topical coverage increases, the topical authority of the source during the re-ranking process will be increased too.

Above, you can see how a search engine can predict the possible behaviors of users. Below, you can see an example of the SERP design for ad-hoc retrieval.

This section is detailed purposely in the context of user and search engine communication method explanation.

- Desktop Search Influenced by the Contents of an Active Window: A search engine can re-rank the documents on the SERP based on the applications, or the programs that are used, and is used during the search activity or before the search activity. The context-sensitive ranking has three sections, user, device, and search contexts. In this perspective, a search engine can relate the device’s context to the users’ true search intent for a specific moment. A computer can open multiple user interfaces at the same time, and these activities and inactive user interfaces might not be related to each other. Or, they can have a sequential contextual relevance with each other. Thus, a search engine can recommend new content, or it can rewrite the query of the user to relate the documents or content from the inactive user interface to the new search activity. Using desktop search influenced by contents of an active window, or “Systems and methods for associating a keyword with a user interface area” is connected to the inactive browser tabs or inactive programs, and web applications in the background. Unifying different platforms, and activities of users to the search results with re-ranking can be observed while YouTube shows the search results from Google. Or, when a user watches a movie on Netflix, and he/she starts to see related videos on YouTube. The same situation can happen when a user opens a browser tab with the title of “World of Warcraft”, and when he/she types “world” in the search bar, a search engine can choose “Blizzard” related queries instead of “world news”. Some of these

According to the “Systems and methods for associating a keyword with a user interface area”, search engines can choose only one result among others from the “re-written implicit query”. And, search engines can give more weight to the related queries to the “re-written implicit query”. A search engine can also use the time periods between the active, and inactive user interfaces, or the media that is plugged into the device such as a microphone, CD, DVD, and more.

- Expanded and Adjacent Queries from User Logs: Query Processing is a part of the relevant understanding between documents, and queries. A search engine can re-rank a document based on the query term match, or terms from the query, and their proximity to each other within the document. The query log includes a query input and a SERP behavior instance. A query can be clustered with other queries if the query is searched just after other queries. “Re-ranking search results based on query log” is a patent belonging to Google. Its methodology is basic and fundamental. The “adjacent queries”, or “sequential queries” can be used to understand relevant queries, and if a query is more related to the other query, the rankings can change since the possible search activities will change. Thus, creating correct contextual vectors, and understanding the contextual domains are important. There are some essential terms from the patent such as “lexical similarity measure”, “frequency of query”, “query utilization”, “initial query”, “temporally related query”, “lexically related query” and “language model”. Lexically related queries are the query terms that are related to meanings, and temporally related queries might include trends or small contextual bridges between them. The frequency of a query can trigger query utilization via an iterative re-ranking process to understand which query is related to which context, and how other queries can possibly be searched at the same time. This patent is closely similar to the Google Search Engine’s Multi-stage Query Processing methodology which is refreshed over years, along with Phrase-based Indexing methodologies. Simply put, a search engine can change the context of a query by classifying it with others based on frequency, lexical, trending behaviors, and query logs. Query classification can affect the rankings, and trigger a re-reranking process. Thus, creating comprehensive sources by including all possible queries and the need behind the queries is a must from an advanced SEO perspective.

- Social Network Endorsements: A search engine can re-rank the documents on the query results based on the engagement rate of a document on social media. Re-ranking search results based on social media and social endorsement is also related to entity-oriented search and SEO. Entity-oriented search understanding means that a website can have a Facebook page, or YouTube channel, and lots of different influencers, and authors; all these attributes of the website will be united under one “web entity” to evaluate the quality, trust, and expertise signals. In this context, between 2010-2015, Facebook likes, and shares affected Google’s SERP heavily. The main reason Google was being influenced by search engines is that Facebook was too popular, and it was one of its main competitors of Google. Google has created Google Discover or “queryless search feed” by being inspired by Facebook, and it has tried to create its own social media platform such as “Google +”. In the TrustRank section of this guide of search engine re-ranking systems, we also have seen that they created “Sidewiki”, and Google has many other patents when it comes to Social Media Understanding, and tracking. Google can show the social media profiles in the Knowledge Panels, it can be used to understand an entity better, and hype on the social media platforms for a hashtag, or a topic, an account can trigger news-related SERP features for a topic, or it can make Google use the specific content on the Google Discover. We also know that Google Discover bots use “Open Graph tags’ ‘ when they find useful information, and they check the consistency of Open Graph Tags and the SEO-related HTML tags to improve the confidence score.

Above, you will see a post from 2011 that has been written by Matthew Peters. And, below you will see a correlation plot between the Facebook shares, along with other engagement types, and the Google Rankings.

If you look at the past of SEO, you will see lots of similar search results, proofs, or explanations from Forums and Search Engines themselves.

During the same period, Google started to demote Facebook Videos, and it promoted YouTube. Google also started to decrease the traffic of Facebook from organic search, and it has started to use Facebook only for cooperation pages, and personal profile listing results mostly, along with some “#”, hashtag-related results. Besides the feedback from Social Media platforms, it is true that Google can use Social Media activity to understand the queries or the context of the search. It can signal the trust or the brand power of a web entity, and we know that Google has systems to score influencers, or it has systems to aggregate text items, and feedback from social media platforms based on consistent user feedback. The patent “Methods, systems, and media for presenting recommended content based on social cues” also tries to find contextual relevance based on the social connections, these connections do not have to be in social media platforms, but a search engine can use social media platforms and their media to see these social connections.

Lastly, an “Automated agent for social media systems” can be used to measure the users’ behaviors and connect multiple social media profiles to each other.

In this context, social media endorsement or any kind of endorsement, and mention can be used to re-rank search results by search engines. An endorsement can show trust and quality, or it can show the untrustworthy, and non-quality sides of an entity to the open web. Thus, we also know that search engines started to list LinkedIn profiles of employees of companies within PAA questions, or it directly shows events and trust-related information to the users from their own social media platforms.