NLTK WordNet can be used to find synonyms and antonyms of words. The NLTK corpus package reads the WordNet corpus so that the lexical semantics of the words within a document can be examined. WordNet is a lexical database that stores words, their meanings, and the semantic relations between them. These semantic relations include synonyms, hypernyms, hyponyms, holonyms, and meronyms. NLTK WordNet uses synsets (synonym sets) to look up words together with their usages, definitions, and examples, and it exposes the relations between word senses. Relation type detection is connected to WordNet through lexical semantics: a dog is a mammal, and this can be expressed with an “IS-A” relation. Thus, NLTK WordNet is used for finding the relations between the words of a document, for spam detection and duplication detection, and for examining the characteristics of the words within a written text together with their POS tags.

NLTK lemmatization, stemming, tokenization, and POS tagging are related to NLTK WordNet for Natural Language Processing. To use the Natural Language Toolkit WordNet more effectively, synonyms, antonyms, holonyms, hypernyms, hyponyms, and the other lexical relations should be used for text processing and text cleaning. In this NLTK WordNet Python tutorial, synonym and antonym finding, along with word similarity calculation, is covered with the NLTK corpus reader for the English language.

A quick example of the synonym and antonym finding with NLTK Python can be found below.

def synonym_antonym_extractor(phrase):
    from nltk.corpus import wordnet

    synonyms = []
    antonyms = []

    for syn in wordnet.synsets(phrase):
        for l in syn.lemmas():
            synonyms.append(l.name())
            if l.antonyms():
                antonyms.append(l.antonyms()[0].name())

    print(set(synonyms))
    print(set(antonyms))

synonym_antonym_extractor(phrase="word")

OUTPUT >>>

{'tidings', 'password', 'Holy_Writ', 'Good_Book', 'Bible', 'discussion', 'news', 'parole', 'give_voice', 'articulate', 'Son', 'word', 'Holy_Scripture', 'Book', 'give-and-take', 'Christian_Bible', 'intelligence', 'Logos', 'phrase', 'word_of_honor', 'formulate', 'Scripture', 'Word', 'watchword', 'countersign', 'Word_of_God'}

set()

The synonym and antonym finding example with Python NLTK involves a custom function, “nltk.corpus”, and “wordnet”, together with the “lemmas()” and “antonyms()” methods inside a for loop. The phrase “word” has been used as an example for the NLTK synonym and antonym finding. According to the WordNet within “nltk.corpus”, there is no antonym for the phrase “word”, which is why the second output is an empty set, but its synonyms include “password”, “Holy_Writ”, “Good_Book”, “Bible”, “discussion”, “news”, and “parole”. NLTK synonyms and antonyms involve lexical synonyms and contextual synonyms from WordNet.

In this Python and NLTK synonym and antonym finding guide, the usage of NLTK WordNet for lexical semantics, word similarities, synonyms, antonyms, hypernyms, hyponyms, verb frames, and more is covered.

How to Find Synonyms of a Word with NLTK WordNet and Python?

To find the synonyms of a word with NLTK WordNet, the instructions below should be followed.

- Import “wordnet” from “nltk.corpus”.

- Create a list to hold the synonyms of the word.

- Use the “synsets” method to get every sense of the word.

- Use the “lemmas()” method of each synset in a for loop to append the synonyms to the list.

- Print the synonyms of the word within a set to remove the duplicates.

An example of the finding of the synonym of a word via NLTK and Python is below.

from nltk.corpus import wordnet

synonyms = []

for syn in wordnet.synsets("love"):
    for l in syn.lemmas():
        # Every lemma name of every sense counts as a synonym.
        synonyms.append(l.name())

print(set(synonyms))

OUTPUT >>>

{'dearest', 'love_life', 'get_it_on', 'roll_in_the_hay', 'lie_with', 'screw', 'bonk', 'passion', 'honey', 'sleep_together', 'lovemaking', 'making_love', 'make_love', 'have_sex', 'jazz', 'bed', 'erotic_love', 'dear', 'do_it', 'have_it_away', 'be_intimate', 'fuck', 'have_a_go_at_it', 'sleep_with', 'hump', 'enjoy', 'eff', 'have_it_off', 'know', 'have_intercourse', 'make_out', 'bang', 'beloved', 'love', 'get_laid', 'sexual_love'}

In the example above, the word “love” is used for finding its synonyms in different contexts with NLTK and Python. The synonyms that are found for “love” include “dearest”, “lie_with”, “screw”, “bonk”, “passion”, “honey”, and some subtypes such as “sexual_love” and “erotic_love”. Because the loop collects the lemmas of every sense of the word, indirectly related and connected words are included within the synonym list as well. Thus, NLTK can be used to find the different contextual synonyms and sibling phrases of a word. Compositional compounds, non-compositional compounds, and synonyms are used by search engines. For a search engine optimization or search engine creation project, NLTK WordNet and synonyms are useful for understanding the context of textual data. WordNet is also mentioned as a synonym finding methodology in patents that can be found via Google Patents.
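The synonym loop above can be wrapped in a small reusable helper. The sketch below is only an illustration: the function name `unique_synonyms` and its `exclude` parameter are hypothetical and not part of NLTK. It assumes objects that behave like WordNet synsets (a `lemmas()` method whose items have a `name()` method), keeps the first-seen order instead of the arbitrary order of a set, and can leave out the query word itself.

```python
def unique_synonyms(synsets, exclude=None):
    """Return lemma names from synset-like objects, in first-seen order, without repeats."""
    names = {}  # a dict keeps insertion order, so it works as an ordered set
    for syn in synsets:
        for lemma in syn.lemmas():
            name = lemma.name()
            # Skip the query word itself (case-insensitive) when requested.
            if exclude is None or name.lower() != exclude.lower():
                names.setdefault(name, None)
    return list(names)


if __name__ == "__main__":
    try:
        from nltk.corpus import wordnet

        print(unique_synonyms(wordnet.synsets("love"), exclude="love"))
    except (ImportError, LookupError):
        # NLTK raises LookupError when the WordNet corpus has not been downloaded.
        print("NLTK or its WordNet data is not installed")
```

Keeping the order can be useful because WordNet tends to list more frequent senses first, so the earliest synonyms are often the most common ones.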

How to Find Antonyms of a Word with NLTK WordNet and Python?

To find the Antonyms of a Word with NLTK WordNet and Python, the following instructions should be followed.

- Import “wordnet” from “nltk.corpus”.

- Create a list to hold the antonyms of the word.

- Use the “synsets” method to get every sense of the word.

- Use the “lemmas()” method of each synset in a for loop to visit every lemma.

- Use the “antonyms()” method with the “name()” method to append the antonym of each lemma to the list.

- Print the antonyms of the word within a set to remove the duplicates.

from nltk.corpus import wordnet

antonyms = []

for syn in wordnet.synsets("love"):
    for i in syn.lemmas():
        if i.antonyms():
            antonyms.append(i.antonyms()[0].name())

print(set(antonyms))

OUTPUT >>>

{'hate'}

The antonym of the word “love” has been found as “hate” via the NLTK antonym finding code example. Finding synonyms and antonyms from sentences by tokenizing the words within the sentence is beneficial for seeing the possible contextual connections and understanding the content with NLP. Thus, creating a custom function for synonym finding within the text with Python is useful. The next section of the NLTK Python Synonym and Antonym Finding Tutorial with WordNet will be about a custom function creation.
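Before moving on, note that the antonym loop above keeps only the first antonym of each lemma (`antonyms()[0]`). The sketch below shows one possible variant that collects every antonym instead. The function name `all_antonyms` is hypothetical, and the code only assumes synset-like objects whose lemmas provide the `name()` and `antonyms()` methods used in the example above.

```python
def all_antonyms(synsets):
    """Collect every antonym lemma name, not only the first one per lemma."""
    found = set()
    for syn in synsets:
        for lemma in syn.lemmas():
            # antonyms() returns a (possibly empty) list of lemma objects.
            for antonym in lemma.antonyms():
                found.add(antonym.name())
    return sorted(found)


if __name__ == "__main__":
    try:
        from nltk.corpus import wordnet

        print(all_antonyms(wordnet.synsets("love")))
    except (ImportError, LookupError):
        # NLTK raises LookupError when the WordNet corpus has not been downloaded.
        print("NLTK or its WordNet data is not installed")
```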

How to use a custom Python Function for Finding Synonyms and Antonyms with NLTK WordNet?

To use a custom Python Function for finding synonyms and antonyms with NLTK, follow the instructions below.

- Create a custom function with the Python built-in “def” command.

- Use the text for synonym and antonym finding as the argument of the custom synonym and antonym finder Python function.

- Import the “word_tokenize” from the “nltk.tokenize”.

- Import the “wordnet” from the “nltk.corpus”.

- Import “defaultdict” from the “collections”.

- Import “pprint” to pretty-print the antonyms and synonyms.

- Tokenize the words within the sentence for synonym and antonym finding with NLTK.

- Create the antonym and synonym lists with “defaultdict(list)”.

- Use a for loop with the tokens of tokenized sentence with NLTK for synonym and antonym finding.

- Use a for loop with the “synsets” for synonym and antonym finding.

- Use an “if” statement to check whether the antonym of the word exists or not.

- Use “pprint.pformat” and “dict” for making the synonym and antonym lists writable to a txt file.

- Append all of the synonyms and antonyms for every word within the sentence with the created synonym and antonym defaultdict lists.

- Open a new file as txt.

- Print all of the synonyms and antonyms to a txt file.

- Close the opened and created txt file (a “with” statement does this automatically).

An example of using the WordNet NLTK for finding synonyms and antonyms from an example sentence can be found below.

def text_parser_synonym_antonym_finder(text: str):
    from nltk.tokenize import word_tokenize
    from nltk.corpus import wordnet
    from collections import defaultdict
    import pprint

    tokens = word_tokenize(text)
    synonyms = defaultdict(list)
    antonyms = defaultdict(list)

    for token in tokens:
        for syn in wordnet.synsets(token):
            for i in syn.lemmas():
                # Every lemma name of every sense is stored as a synonym of the token.
                synonyms[token].append(i.name())
                if i.antonyms():
                    # Keep the first antonym of the lemma when one exists.
                    antonyms[token].append(i.antonyms()[0].name())

    pprint.pprint(dict(synonyms))
    pprint.pprint(dict(antonyms))

    synonym_output = pprint.pformat(dict(synonyms))
    antonyms_output = pprint.pformat(dict(antonyms))

    # The file is named after the first five characters of the text.
    # The "with" block closes the file automatically.
    with open(str(text[:5]) + ".txt", "a") as f:
        f.write("Starting of Synonyms of the Words from the Sentences: " + synonym_output + "\n")
        f.write("Starting of Antonyms of the Words from the Sentences: " + antonyms_output + "\n")

text_parser_synonym_antonym_finder(text="WordNet is a lexical database that has been used by a major search engine. From the WordNet, information about a given word or phrase can be calculated such as")

OUTPUT >>>

Starting of Synonyms of the Words from the Sentences: {'WordNet': ['wordnet',

'WordNet',

'Princeton_WordNet',

'wordnet',

'WordNet',

'Princeton_WordNet'],

'a': ['angstrom',

'angstrom_unit',

'A',

'vitamin_A',

'antiophthalmic_factor',

'axerophthol',

'A',

'deoxyadenosine_monophosphate',

'A',

'adenine',

'A',

'ampere',

'amp',

'A',

'A',

'a',

'A',

'type_A',

'group_A',

'angstrom',

'angstrom_unit',

'A',

'vitamin_A',

'antiophthalmic_factor',

'axerophthol',

'A',

'deoxyadenosine_monophosphate',

'A',

'adenine',

'A',

'ampere',

'amp',

'A',

'A',

'a',

'A',

'type_A',

'group_A',

'angstrom',

'angstrom_unit',

'A',

'vitamin_A',

'antiophthalmic_factor',

'axerophthol',

'A',

'deoxyadenosine_monophosphate',

'A',

'adenine',

'A',

'ampere',

'amp',

'A',

'A',

'a',

'A',

'type_A',

'group_A'],

'about': ['about',

'astir',

'approximately',

'about',

'close_to',

'just_about',

'some',

'roughly',

'more_or_less',

'around',

'or_so',

'about',

'around',

'about',

'around',

'about',

'around',

'about',

'around',

'about',

'about',

'almost',

'most',

'nearly',

'near',

'nigh',

'virtually',

'well-nigh'],

'as': ['arsenic',

'As',

'atomic_number_33',

'American_Samoa',

'Eastern_Samoa',

'AS',

'angstrom',

'angstrom_unit',

'A',

'vitamin_A',

'antiophthalmic_factor',

'axerophthol',

'A',

'deoxyadenosine_monophosphate',

'A',

'adenine',

'A',

'ampere',

'amp',

'A',

'A',

'a',

'A',

'type_A',

'group_A',

'equally',

'as',

'every_bit'],

'be': ['beryllium',

'Be',

'glucinium',

'atomic_number_4',

'be',

'be',

'be',

'exist',

'be',

'be',

'equal',

'be',

'constitute',

'represent',

'make_up',

'comprise',

'be',

'be',

'follow',

'embody',

'be',

'personify',

'be',

'be',

'live',

'be',

'cost',

'be'],

'been': ['be',

'be',

'be',

'exist',

'be',

'be',

'equal',

'be',

'constitute',

'represent',

'make_up',

'comprise',

'be',

'be',

'follow',

'embody',

'be',

'personify',

'be',

'be',

'live',

'be',

'cost',

'be'],

'by': ['by', 'past', 'aside', 'by', 'away'],

'calculated': ['calculate',

'cipher',

'cypher',

'compute',

'work_out',

'reckon',

'figure',

'calculate',

'estimate',

'reckon',

'count_on',

'figure',

'forecast',

'account',

'calculate',

'forecast',

'calculate',

'calculate',

'aim',

'direct',

'count',

'bet',

'depend',

'look',

'calculate',

'reckon',

'deliberate',

'calculated',

'measured'],

'can': ['can',

'tin',

'tin_can',

'can',

'canful',

'can',

'can_buoy',

'buttocks',

'nates',

'arse',

'butt',

'backside',

'bum',

'buns',

'can',

'fundament',

'hindquarters',

'hind_end',

'keister',

'posterior',

'prat',

'rear',

'rear_end',

'rump',

'stern',

'seat',

'tail',

'tail_end',

'tooshie',

'tush',

'bottom',

'behind',

'derriere',

'fanny',

'ass',

'toilet',

'can',

'commode',

'crapper',

'pot',

'potty',

'stool',

'throne',

'toilet',

'lavatory',

'lav',

'can',

'john',

'privy',

'bathroom',

'can',

'tin',

'put_up',

'displace',

'fire',

'give_notice',

'can',

'dismiss',

'give_the_axe',

'send_away',

'sack',

'force_out',

'give_the_sack',

'terminate'],

'database': ['database'],

'engine': ['engine',

'engine',

'locomotive',

'engine',

'locomotive_engine',

'railway_locomotive',

'engine'],

'given': ['given',

'presumption',

'precondition',

'give',

'yield',

'give',

'afford',

'give',

'give',

'give',

'pay',

'hold',

'throw',

'have',

'make',

'give',

'give',

'throw',

'give',

'gift',

'present',

'give',

'yield',

'give',

'pay',

'devote',

'render',

'yield',

'return',

'give',

'generate',

'impart',

'leave',

'give',

'pass_on',

'establish',

'give',

'give',

'give',

'sacrifice',

'give',

'pass',

'hand',

'reach',

'pass_on',

'turn_over',

'give',

'give',

'dedicate',

'consecrate',

'commit',

'devote',

'give',

'give',

'apply',

'give',

'render',

'grant',

'give',

'move_over',

'give_way',

'give',

'ease_up',

'yield',

'feed',

'give',

'contribute',

'give',

'chip_in',

'kick_in',

'collapse',

'fall_in',

'cave_in',

'give',

'give_way',

'break',

'founder',

'give',

'give',

'give',

'afford',

'open',

'give',

'give',

'give',

'give',

'yield',

'give',

'give',

'give',

'give',

'give',

'give',

'give',

'give',

'give',

'give',

'give',

'given',

'granted',

'apt',

'disposed',

'given',

'minded',

'tending'],

'has': ['hour_angle',

'HA',

'have',

'have_got',

'hold',

'have',

'feature',

'experience',

'receive',

'have',

'get',

'own',

'have',

'possess',

'get',

'let',

'have',

'consume',

'ingest',

'take_in',

'take',

'have',

'have',

'hold',

'throw',

'have',

'make',

'give',

'have',

'have',

'have',

'experience',

'have',

'induce',

'stimulate',

'cause',

'have',

'get',

'make',

'accept',

'take',

'have',

'receive',

'have',

'suffer',

'sustain',

'have',

'get',

'have',

'get',

'make',

'give_birth',

'deliver',

'bear',

'birth',

'have',

'take',

'have'],

'information': ['information',

'info',

'information',

'information',

'data',

'information',

'information',

'selective_information',

'entropy'],

'is': ['be',

'be',

'be',

'exist',

'be',

'be',

'equal',

'be',

'constitute',

'represent',

'make_up',

'comprise',

'be',

'be',

'follow',

'embody',

'be',

'personify',

'be',

'be',

'live',

'be',

'cost',

'be'],

'lexical': ['lexical', 'lexical'],

'major': ['major',

'Major',

'John_Major',

'John_R._Major',

'John_Roy_Major',

'major',

'major',

'major',

'major',

'major',

'major',

'major',

'major',

'major',

'major',

'major'],

'or': ['Oregon',

'Beaver_State',

'OR',

'operating_room',

'OR',

'operating_theater',

'operating_theatre',

'surgery'],

'phrase': ['phrase',

'phrase',

'musical_phrase',

'idiom',

'idiomatic_expression',

'phrasal_idiom',

'set_phrase',

'phrase',

'phrase',

'give_voice',

'formulate',

'word',

'phrase',

'articulate',

'phrase'],

'search': ['search',

'hunt',

'hunting',

'search',

'search',

'lookup',

'search',

'search',

'search',

'seek',

'look_for',

'search',

'look',

'research',

'search',

'explore',

'search'],

'such': ['such', 'such'],

'used': ['use',

'utilize',

'utilise',

'apply',

'employ',

'use',

'habituate',

'use',

'expend',

'use',

'practice',

'apply',

'use',

'use',

'used',

'exploited',

'ill-used',

'put-upon',

'used',

'victimized',

'victimised',

'secondhand',

'used'],

'word': ['word',

'word',

'news',

'intelligence',

'tidings',

'word',

'word',

'discussion',

'give-and-take',

'word',

'parole',

'word',

'word_of_honor',

'word',

'Son',

'Word',

'Logos',

'password',

'watchword',

'word',

'parole',

'countersign',

'Bible',

'Christian_Bible',

'Book',

'Good_Book',

'Holy_Scripture',

'Holy_Writ',

'Scripture',

'Word_of_God',

'Word',

'give_voice',

'formulate',

'word',

'phrase',

'articulate']}

Starting of Antonyms of the Words from the Sentences: {'be': ['differ'],

'been': ['differ'],

'can': ['hire'],

'given': ['take', 'starve'],

'has': ['lack', 'abstain', 'refuse'],

'is': ['differ'],

'major': ['minor', 'minor', 'minor', 'minor', 'minor', 'minor', 'minor'],

'used': ['misused']}

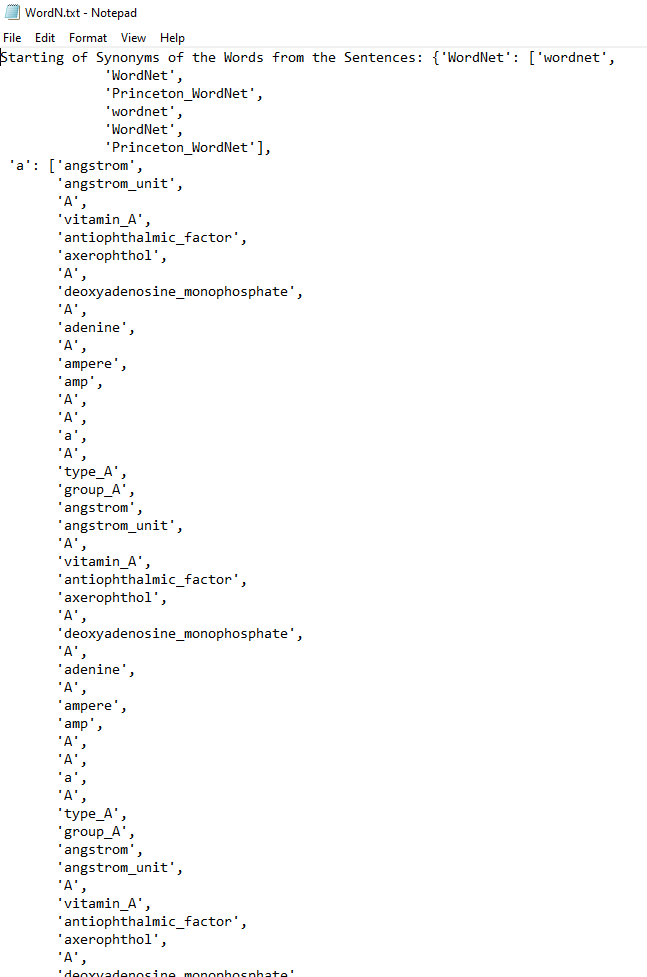

At the example above, a sentence has been used as an example for synonym and antonym finding with a custom Python function which is ” text_parser_synonym_antonym_finder”. Below, you can see the “txt” output of the synonym and antonym extractor from a sentence.

For the synonym and antonym finding and extraction from the text, we have created a new “.txt” file with the name of the first word of the sentence. It is important to notice that with NLTK WordNet and Python, a word can have multiple synonyms with the same word because there are different POS Tags for every word within the antonym and synonym list.
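To make the repeated entries easier to read, the synonyms can be grouped by the part of speech of the synset they come from. The following is a minimal sketch under the assumption that each synset-like object offers the `pos()` and `lemmas()` methods of NLTK synsets. The helper name `synonyms_by_pos` is hypothetical.

```python
from collections import defaultdict


def synonyms_by_pos(synsets):
    """Group unique lemma names under the part-of-speech letter of their synset."""
    grouped = defaultdict(set)
    for syn in synsets:
        for lemma in syn.lemmas():
            # pos() returns a letter such as "n" (noun) or "v" (verb).
            grouped[syn.pos()].add(lemma.name())
    return {pos: sorted(names) for pos, names in grouped.items()}


if __name__ == "__main__":
    try:
        from nltk.corpus import wordnet

        for pos, names in synonyms_by_pos(wordnet.synsets("major")).items():
            print(pos, names)
    except (ImportError, LookupError):
        # NLTK raises LookupError when the WordNet corpus has not been downloaded.
        print("NLTK or its WordNet data is not installed")
```

Using a set per part of speech removes the duplicates that appear in the raw output, while still showing that one word can act as a noun, a verb, or an adjective.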

How to use POS Tagging for Synonym and Antonym Finding with NLTK WordNet?

To use POS Tagging for synonym and antonym finding with NLTK WordNet, the “pos” parameter of the “synsets” method should be used with constants such as “wordnet.VERB”, “wordnet.ADJ”, and “wordnet.NOUN”. An example of using POS Tagging to find antonyms and synonyms with NLTK WordNet is below.

print("VERB of Love: ", wordnet.synsets("love", pos = wordnet.VERB))

print("ADJECTIVE of Love: ", wordnet.synsets("love", pos = wordnet.ADJ))

print("NOUN of Love: ", wordnet.synsets("love", pos = wordnet.NOUN))

OUTPUT >>>

VERB of Love: [Synset('love.v.01'), Synset('love.v.02'), Synset('love.v.03'), Synset('sleep_together.v.01')]

ADJECTIVE of Love: []

NOUN of Love: [Synset('love.n.01'), Synset('love.n.02'), Synset('beloved.n.01'), Synset('love.n.04'), Synset('love.n.05'), Synset('sexual_love.n.02')]

The POS Tagging for synonyms and antonyms with NLTK WordNet shows different synsets (synonym rings) for a word based on its context. For instance, “love.v.01” and “love.v.02” are not the same as each other in terms of context. To see how the meaning and context of a word differ between synsets, the “definition” method of NLTK can be used with POS Tagging. To learn more about the NLTK POS Tagging, read the related guide and tutorial.
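When the POS tags come from a tagger such as “nltk.pos_tag”, they are usually Penn Treebank tags (“NN”, “VBZ”, “JJ”) rather than WordNet constants. The sketch below shows one common way to translate them. The function name `penn_to_wordnet` is hypothetical, and the mapping is a simplification that only covers nouns, verbs, adjectives, and adverbs. The returned letters are the values of “wordnet.NOUN”, “wordnet.VERB”, “wordnet.ADJ”, and “wordnet.ADV”.

```python
def penn_to_wordnet(tag):
    """Map a Penn Treebank tag to a WordNet POS letter, or None when there is no match."""
    if tag.startswith("J"):
        return "a"  # adjective, same value as wordnet.ADJ
    if tag.startswith("V"):
        return "v"  # verb, same value as wordnet.VERB
    if tag.startswith("N"):
        return "n"  # noun, same value as wordnet.NOUN
    if tag.startswith("RB"):
        return "r"  # adverb, same value as wordnet.ADV
    return None


if __name__ == "__main__":
    try:
        from nltk.corpus import wordnet

        # "VBP" is the Penn Treebank tag for a non-third-person present verb.
        print(wordnet.synsets("love", pos=penn_to_wordnet("VBP")))
    except (ImportError, LookupError):
        # NLTK raises LookupError when the WordNet corpus has not been downloaded.
        print("NLTK or its WordNet data is not installed")
```

Passing `None` as “pos” makes “synsets” return the senses of every part of speech, so unmapped tags fall back to the unfiltered behavior.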

How to Find the Definition of a Synonym Word with NLTK WordNet?

To find the definition of a synonym with NLTK WordNet by understanding its context, the “wordnet.synset("love.v.01").definition()” pattern should be used, where the synset name combines the word, its POS tag, and its sense number. To see two different meanings of the same word, the word “love” is used as an example below.

wordnet.synset("love.v.01").definition()

OUTPUT >>>

'have a great affection or liking for'

The definition finding example with NLTK WordNet above shows that the first verb meaning of “love” is “have a great affection or liking for”. The example below will show the second verb definition of “love”.

wordnet.synset("love.v.02").definition()

OUTPUT >>>

'get pleasure from'

The second meaning finding example of a word with NLTK WordNet can be found above. The second meaning of the word “love” is “get pleasure from”. Thus, even if two senses of a word share the same string, their meanings can be different, and a word can have multiple synonyms with the same shape but different meanings. The different contexts and meanings of synonyms can be found with NLTK WordNet with the help of POS Tagging and the definition finding of a word. To improve the contextual understanding of a sentence with NLTK, the word usage examples can be called. Finding a word definition with Python has other methods such as using PyDictionary, but NLTK WordNet provides other benefits such as finding sentence examples for the words or finding different contexts of a word with its antonyms and synonyms.

How to find the sentence examples for words within NLTK WordNet?

To find the sentence examples with NLTK WordNet, the “examples()” method of a synset is used, as in “wordnet.synset("love.v.01").examples()”. An example of sentence example extraction with NLTK WordNet can be found below.

for i in wordnet.synset("love.v.01").examples():
    print(i)

OUTPUT >>>

I love French food

She loves her boss and works hard for him

In the example above, the first verb meaning of the word “love” is used with the “wordnet.synset().examples()” method. The “I love French food” and “She loves her boss and works hard for him” sentences are examples in which the word “love” is used with that specific meaning.

for i in wordnet.synset("love.v.02").examples():
    print(i)

OUTPUT >>>

I love cooking

The second meaning of “love” as a “verb” is used for the example above. The sentence “I love cooking” is returned by the NLTK WordNet as an example of the second meaning of the verb “love”. The NLTK WordNet “examples()” method is useful for seeing the exact context of a specific word with its POS Tag and its sense number.

How to Extract the Synonyms and their Definitions at the same time with NLTK WordNet?

To extract the synonyms and their definitions with NLTK WordNet, the “wordnet.synsets” method and the “lemmas()” method should be used together with the “definition()” method. The instructions below should be followed for extracting the synonyms and their definitions at the same time with NLTK WordNet.

- Use “wordnet.synsets()” for a word such as “love” or “phrase”.

- Take the lemmas of each synonym ring with the “lemmas()” method.

- Print the results of “lemma.name()” and “definition()” at the same time.

Below, you can find the example output.

for i in wordnet.synsets("love"):
    for lemma in i.lemmas():
        print("Synonym of Word: " + lemma.name(), "| Definition of Synonym: " + i.definition())

OUTPUT >>>

Synonym of Word: love | Definition of Synonym: a strong positive emotion of regard and affection

Synonym of Word: love | Definition of Synonym: any object of warm affection or devotion

Synonym of Word: passion | Definition of Synonym: any object of warm affection or devotion

Synonym of Word: beloved | Definition of Synonym: a beloved person; used as terms of endearment

Synonym of Word: dear | Definition of Synonym: a beloved person; used as terms of endearment

Synonym of Word: dearest | Definition of Synonym: a beloved person; used as terms of endearment

Synonym of Word: honey | Definition of Synonym: a beloved person; used as terms of endearment

Synonym of Word: love | Definition of Synonym: a beloved person; used as terms of endearment

Synonym of Word: love | Definition of Synonym: a deep feeling of sexual desire and attraction

Synonym of Word: sexual_love | Definition of Synonym: a deep feeling of sexual desire and attraction

Synonym of Word: erotic_love | Definition of Synonym: a deep feeling of sexual desire and attraction

Synonym of Word: love | Definition of Synonym: a score of zero in tennis or squash

Synonym of Word: sexual_love | Definition of Synonym: sexual activities (often including sexual intercourse) between two people

Synonym of Word: lovemaking | Definition of Synonym: sexual activities (often including sexual intercourse) between two people

Synonym of Word: making_love | Definition of Synonym: sexual activities (often including sexual intercourse) between two people

Synonym of Word: love | Definition of Synonym: sexual activities (often including sexual intercourse) between two people

Synonym of Word: love_life | Definition of Synonym: sexual activities (often including sexual intercourse) between two people

Synonym of Word: love | Definition of Synonym: have a great affection or liking for

Synonym of Word: love | Definition of Synonym: get pleasure from

Synonym of Word: enjoy | Definition of Synonym: get pleasure from

Synonym of Word: love | Definition of Synonym: be enamored or in love with

Synonym of Word: sleep_together | Definition of Synonym: have sexual intercourse with

Synonym of Word: roll_in_the_hay | Definition of Synonym: have sexual intercourse with

Synonym of Word: love | Definition of Synonym: have sexual intercourse with

Synonym of Word: make_out | Definition of Synonym: have sexual intercourse with

Synonym of Word: make_love | Definition of Synonym: have sexual intercourse with

Synonym of Word: sleep_with | Definition of Synonym: have sexual intercourse with

Synonym of Word: get_laid | Definition of Synonym: have sexual intercourse with

Synonym of Word: have_sex | Definition of Synonym: have sexual intercourse with

Synonym of Word: know | Definition of Synonym: have sexual intercourse with

Synonym of Word: do_it | Definition of Synonym: have sexual intercourse with

Synonym of Word: be_intimate | Definition of Synonym: have sexual intercourse with

Synonym of Word: have_intercourse | Definition of Synonym: have sexual intercourse with

Synonym of Word: have_it_away | Definition of Synonym: have sexual intercourse with

Synonym of Word: have_it_off | Definition of Synonym: have sexual intercourse with

Synonym of Word: screw | Definition of Synonym: have sexual intercourse with

Synonym of Word: fuck | Definition of Synonym: have sexual intercourse with

Synonym of Word: jazz | Definition of Synonym: have sexual intercourse with

Synonym of Word: eff | Definition of Synonym: have sexual intercourse with

Synonym of Word: hump | Definition of Synonym: have sexual intercourse with

Synonym of Word: lie_with | Definition of Synonym: have sexual intercourse with

Synonym of Word: bed | Definition of Synonym: have sexual intercourse with

Synonym of Word: have_a_go_at_it | Definition of Synonym: have sexual intercourse with

Synonym of Word: bang | Definition of Synonym: have sexual intercourse with

Synonym of Word: get_it_on | Definition of Synonym: have sexual intercourse with

Synonym of Word: bonk | Definition of Synonym: have sexual intercourse with

The example above covers every sense of the word “love” with its possible synonyms and their contexts. It shows how content can be made richer with certain types of vocabulary, and how the context can be deepened further for improving the relevance. A possible Information Retrieval system can understand the purpose of the content further with these synonyms and antonyms. Thus, NLTK WordNet and synonym and antonym extraction, along with examining the definitions and example sentences of a word, are important.
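The printed lines above repeat the same definition once per synonym. As an illustrative alternative, the sketch below stores each definition once and attaches the lemma names that share it. The helper name `lemmas_by_sense` is hypothetical, and the code only relies on the `name()`, `definition()`, and `lemmas()` methods that NLTK synsets provide.

```python
def lemmas_by_sense(synsets):
    """Map each sense name to its definition and the lemma names that share it."""
    table = {}
    for syn in synsets:
        table[syn.name()] = {
            "definition": syn.definition(),
            "lemmas": [lemma.name() for lemma in syn.lemmas()],
        }
    return table


if __name__ == "__main__":
    try:
        from nltk.corpus import wordnet

        for sense, info in lemmas_by_sense(wordnet.synsets("love")).items():
            print(sense, "|", info["definition"], "|", ", ".join(info["lemmas"]))
    except (ImportError, LookupError):
        # NLTK raises LookupError when the WordNet corpus has not been downloaded.
        print("NLTK or its WordNet data is not installed")
```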

How to extract synonyms and antonyms from other languages besides English via NLTK Wordnet?

To extract synonyms and antonyms from languages other than English via NLTK WordNet, a language code should be passed through the “lang” parameter of methods such as “lemma_names()”, and the “wordnet.langs()” method lists the available codes. NLTK WordNet uses three-letter ISO-639 language codes. The multilingual lookups rely on the Open Multilingual WordNet data, which typically has to be downloaded in addition to the WordNet corpus. The language codes that can be used with NLTK WordNet can be seen below.

- eng

- als

- arb

- bul

- cat

- cmn

- dan

- ell

- eus

- fas

- fin

- fra

- glg

- heb

- hrv

- ind

- ita

- jpn

- nld

- nno

- nob

- pol

- por

- qcn

- slv

- spa

- swe

- tha

- zsm

To use the ISO-639 language codes with NLTK WordNet to find synonyms and antonyms with the “lang” parameter, you can examine the example below.

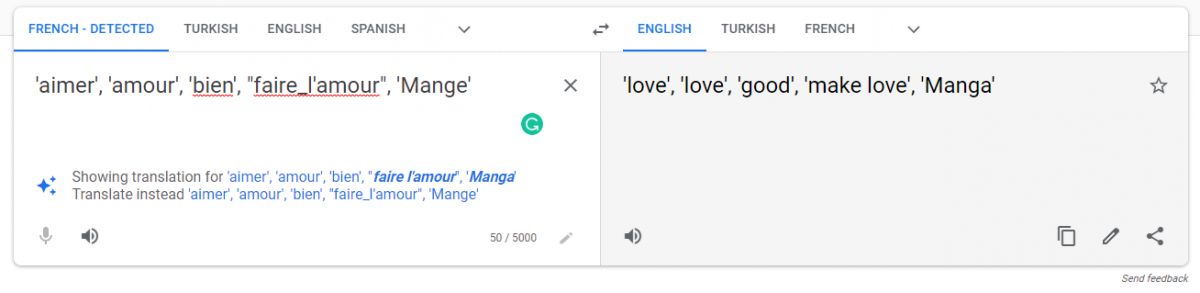

wordnet.synset("love.v.01").lemma_names("fra")

OUTPUT >>>

['aimer', 'amour', 'bien', "faire_l'amour", 'Mange']

The example use of the “lang” parameter to find the synonyms of “love” with the first verb meaning within the French language can be seen above. The output lists the synonyms of “love” as a verb within French.

These types of language translations with different synonyms from different contexts can be used to find the contextual relevance between documents written in different languages. Thus, NLTK is a valuable tool for search engines. ISO-639 language codes are also used for the hreflang attribute in the context of SEO, although hreflang takes the two-letter ISO 639-1 codes while the NLTK WordNet “lang” parameter takes three-letter codes.
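Because an unsupported language code can cause an error, it can be safer to check the code against the available languages first. The sketch below is one way to do that. The helper name `lemma_names_in` and its `available_langs` parameter are hypothetical, while “wordnet.langs()” and the “lang” parameter of “lemma_names()” come from NLTK. The demo assumes that the Open Multilingual WordNet data has been downloaded.

```python
def lemma_names_in(synset, lang, available_langs):
    """Return the lemma names of a synset for `lang`, or [] when the code is unavailable."""
    if lang not in available_langs:
        return []
    return synset.lemma_names(lang=lang)


if __name__ == "__main__":
    try:
        from nltk.corpus import wordnet

        langs = wordnet.langs()  # three-letter codes such as "eng" and "fra"
        print(lemma_names_in(wordnet.synset("love.v.01"), "fra", langs))
    except (ImportError, LookupError):
        # NLTK raises LookupError when the required corpus data is missing.
        print("NLTK or its WordNet data is not installed")
```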

What other Lexical Semantics can be extracted with NLTK WordNet besides Antonyms and Synonyms?

The other lexical semantics that can be extracted with NLTK WordNet besides antonyms and synonyms are listed below.

- Hypernyms: A hypernym is the inverse of a hyponym. A hypernym is the superior, more general class of a thing. NLTK WordNet can be used for extracting the hypernyms of a word with the “hypernyms()” method.

- Hyponyms: A hyponym is the inverse of a hypernym. A hyponym is an inferior, more specific member of a class of things. NLTK WordNet can be used for extracting the hyponyms of a word with the “hyponyms()” method.

- Holonyms: A holonym is the inverse of a meronym. A holonym is the name of the whole thing that has multiple parts. NLTK WordNet can be used for extracting the holonyms of a word with the “member_holonyms()” method.

- Meronyms: A meronym is the inverse of a holonym. It represents the name of a part within the whole thing. NLTK WordNet has the “member_meronyms()” method for extracting the meronyms of a word.

Lexical Semantics involves hypernyms, hyponyms, holonyms, meronyms, antonyms, synonyms, and more semantic word relations. Semantic Role Labeling and Lexical Semantics are directly connected to Semantic SEO and Natural Language Processing. In this context, NLTK WordNet and Lexical Relations such as hypernyms, hyponyms, meronyms are important for SEO and NLP.
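The relations listed above can be collected for a single synset in one pass. The following is a minimal sketch, not a complete list of WordNet relations. The names `RELATIONS` and `relation_summary` are hypothetical, while the method names inside the tuple are methods of NLTK synsets.

```python
# Synset methods to query; other relations such as substance_meronyms() also exist.
RELATIONS = (
    "hypernyms",
    "hyponyms",
    "member_holonyms",
    "part_holonyms",
    "member_meronyms",
    "part_meronyms",
)


def relation_summary(synset):
    """Return {relation name: [related synset names]} for the non-empty relations."""
    summary = {}
    for relation in RELATIONS:
        related = getattr(synset, relation)()  # e.g. synset.hypernyms()
        if related:
            summary[relation] = [item.name() for item in related]
    return summary


if __name__ == "__main__":
    try:
        from nltk.corpus import wordnet

        for relation, names in relation_summary(wordnet.synset("tree.n.01")).items():
            print(relation, names)
    except (ImportError, LookupError):
        # NLTK raises LookupError when the WordNet corpus has not been downloaded.
        print("NLTK or its WordNet data is not installed")
```

Relations that return an empty list are skipped, so the summary only shows the connections that WordNet actually records for the chosen sense.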

How to Find Hypernym of a Word with NLTK WordNet and Python?

To find the hypernyms of a word and to see its superior class names, the “hypernyms()” method of an NLTK WordNet synset should be used, and the “hypernym_distances()” method additionally returns every ancestor together with its distance from the synset. The hypernym is a part of the lexical relations in NLTK WordNet that explains the upper and superior concepts of a word. A hypernym can show the context of the word. An example of finding the hypernyms of a word can be seen below.

for syn in wordnet.synsets("love"):
    print(syn.hypernym_distances())

OUTPUT >>>

{(Synset('feeling.n.01'), 2), (Synset('attribute.n.02'), 4), (Synset('love.n.01'), 0), (Synset('entity.n.01'), 6), (Synset('abstraction.n.06'), 5), (Synset('state.n.02'), 3), (Synset('emotion.n.01'), 1)}

{(Synset('love.n.02'), 0), (Synset('cognition.n.01'), 3), (Synset('content.n.05'), 2), (Synset('psychological_feature.n.01'), 4), (Synset('entity.n.01'), 6), (Synset('abstraction.n.06'), 5), (Synset('object.n.04'), 1)}

{(Synset('whole.n.02'), 5), (Synset('physical_entity.n.01'), 7), (Synset('entity.n.01'), 8), (Synset('entity.n.01'), 5), (Synset('organism.n.01'), 3), (Synset('object.n.01'), 6), (Synset('beloved.n.01'), 0), (Synset('living_thing.n.01'), 4), (Synset('physical_entity.n.01'), 4), (Synset('lover.n.01'), 1), (Synset('person.n.01'), 2), (Synset('causal_agent.n.01'), 3)}

{(Synset('abstraction.n.06'), 6), (Synset('state.n.02'), 4), (Synset('sexual_desire.n.01'), 1), (Synset('attribute.n.02'), 5), (Synset('entity.n.01'), 7), (Synset('love.n.04'), 0), (Synset('feeling.n.01'), 3), (Synset('desire.n.01'), 2)}

{(Synset('score.n.03'), 1), (Synset('measure.n.02'), 4), (Synset('number.n.02'), 2), (Synset('entity.n.01'), 6), (Synset('abstraction.n.06'), 5), (Synset('love.n.05'), 0), (Synset('definite_quantity.n.01'), 3)}

{(Synset('sexual_activity.n.01'), 1), (Synset('organic_process.n.01'), 3), (Synset('process.n.06'), 4), (Synset('sexual_love.n.02'), 0), (Synset('entity.n.01'), 6), (Synset('physical_entity.n.01'), 5), (Synset('bodily_process.n.01'), 2)}

{(Synset('love.v.01'), 0)}

{(Synset('like.v.02'), 1), (Synset('love.v.02'), 0)}

{(Synset('love.v.03'), 0), (Synset('love.v.01'), 1)}

{(Synset('copulate.v.01'), 1), (Synset('sleep_together.v.01'), 0), (Synset('connect.v.01'), 3), (Synset('join.v.04'), 2)}

The explanation of the “how to find hypernym of a word with NLTK” code block is below.

- Import “wordnet” from “nltk.corpus”.

- Use the “synsets” method of wordnet.

- Use a for loop for all of the contexts of the phrase.

- Call the “hypernym_distances()” method on every synset.

The example above lists the hypernyms of every “noun” and “verb” context of the selected phrase, so there are many different hypernym paths. Each pair contains an ancestor synset and its distance from the sense: a distance of 0 is the sense itself, 1 is the direct hypernym, and higher numbers are more general concepts, which together form a meaningful lexical hierarchy. For instance, the first “noun” context of “love” has “emotion” as its direct hypernym and “feeling” one level above it, while the second “noun” context has “object” as its direct hypernym. The context of the words can be seen with its definition as below.

wordnet.synset("love.n.01").definition()

OUTPUT>>>

'a strong positive emotion of regard and affection'

WordNet says that “love.n.01” means a strong positive emotion. Thus, the hypernym path of the first noun sense of “love” passes through “feeling”, which is closely related to “emotion”. The definition of the second “noun” sense of “love” is below.

wordnet.synset("love.n.02").definition()

OUTPUT >>>

'any object of warm affection or devotion'

The second noun sense of “love” is “any object of warm affection or devotion”, so it sits under a different hypernym than the emotion sense. Thus, the meaning and the hypernyms change according to the sense of a word. The WordNet hypernym paths and distances can affect the topicality score and semantic relevance of a content piece to a query or a context. Another example of the hypernym and hyponym relation, for the phrase “dog”, can be found below.

dog = wordnet.synset('dog.n.01')

print(dog.hypernyms())

print(dog.hyponyms())

OUTPUT >>>

[Synset('canine.n.02'), Synset('domestic_animal.n.01')]

[Synset('basenji.n.01'), Synset('corgi.n.01'), Synset('cur.n.01'), Synset('dalmatian.n.02'), Synset('great_pyrenees.n.01'), Synset('griffon.n.02'), Synset('hunting_dog.n.01'), Synset('lapdog.n.01'), Synset('leonberg.n.01'), Synset('mexican_hairless.n.01'), Synset('newfoundland.n.01'), Synset('pooch.n.01'), Synset('poodle.n.01'), Synset('pug.n.01'), Synset('puppy.n.01'), Synset('spitz.n.01'), Synset('toy_dog.n.01'), Synset('working_dog.n.01')]

The first noun sense of the phrase “dog” has “canine” and “domestic animal” as hypernyms. The second list shows its hyponyms, from “dalmatian” to “griffon”, “puppy”, and “working dog”. Any of those hyponyms can be closer to the meaning of “dog” within a document, according to the general context of the document. Finding hypernyms and hyponyms are connected to each other. Hyponyms can complete the meaning of a hypernym for the selected phrase within the NLTK WordNet.
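To make the “IS-A” hierarchy more concrete, the minimal sketch below walks upward from one synset by repeatedly taking its first hypernym. The helper name “hypernym_chain” is hypothetical and is not part of NLTK; it only assumes an object with the “hypernyms()” and “name()” methods that NLTK synsets provide.

```python
def hypernym_chain(synset, max_steps=50):
    """Follow the first hypernym of each synset up to the root.

    `hypernym_chain` is an illustrative helper, not an NLTK function.
    It picks only the first hypernym at every level, so it returns
    one possible path, not all of them.
    """
    chain = [synset.name()]
    current = synset
    for _ in range(max_steps):  # guard against unexpected cycles
        parents = current.hypernyms()
        if not parents:
            break
        current = parents[0]
        chain.append(current.name())
    return chain


# Usage with NLTK (assumes the WordNet corpus is already downloaded):
# from nltk.corpus import wordnet
# print(" -> ".join(hypernym_chain(wordnet.synset("dog.n.01"))))
```

When every path is needed instead of a single one, the “hypernym_paths()” method of an NLTK synset returns all of them.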

How to Find Hyponym of a Word with NLTK WordNet and Python?

To find hyponyms of a word with NLTK WordNet and Python, the “hyponyms()” method can be used. Hyponym finding is beneficial to see the lexical relations of a word as a hypernym. Hyponyms comprise the more specific types or sub-categories of a phrase in its different senses. To find hyponyms with NLTK and NLP, follow the example below.

for syn in wordnet.synsets("love"):

    print(syn.hyponyms())

OUTPUT >>>

[Synset('agape.n.01'), Synset('agape.n.02'), Synset('amorousness.n.01'), Synset('ardor.n.02'), Synset('benevolence.n.01'), Synset('devotion.n.01'), Synset('filial_love.n.01'), Synset('heartstrings.n.01'), Synset('lovingness.n.01'), Synset('loyalty.n.02'), Synset('puppy_love.n.01'), Synset('worship.n.02')]

[]

[]

[]

[]

[]

[Synset('adore.v.01'), Synset('care_for.v.02'), Synset('dote.v.02'), Synset('love.v.03')]

[Synset('get_off.v.06')]

[Synset('romance.v.02')]

[Synset('fornicate.v.01'), Synset('take.v.35')]

The explanation of the hyponym finding with the NLTK code example is below.

- Import the NLTK and WordNet

- Call “wordnet.synsets” for the selected phrase.

- Call “hyponyms()” for every sense of the word.

The example above for the phrase “love” shows that different senses of “love” have different hyponyms. For the first noun sense, the hyponyms include “agape”, which appears twice because two senses of “agape” (“agape.n.01” and “agape.n.02”) are both hyponyms of “love.n.01”. A sense can also have many hyponyms at once, such as “amorousness”, “devotion”, and “loyalty” for the first noun sense of “love”. When we check the hypernyms of a hyponym, the original concept should appear again, which completes the hypernym path. An example of this bidirectional hypernym-hyponym check for NLTK WordNet is below.

for syn in wordnet.synsets("amorousness"):

    print(syn.hypernyms())

OUTPUT >>>

[Synset('love.n.01')]

[Synset('sexual_desire.n.01')]

The first sense of “amorousness” has “love” as its hypernym, and the second sense has “sexual desire” as its hypernym, which signals the context of the connection between “love” and “amorousness”. The same process can be followed for the first hyponym of love, which is “agape”.

for syn in wordnet.synsets("agape"):

    print(syn.hypernyms())

OUTPUT >>>

[Synset('love.n.01')]

[Synset('love.n.01')]

[Synset('religious_ceremony.n.01')]

[]

Two senses of “agape” have “love” as their hypernym. A third sense has “religious ceremony” as a hypernym, which shows the context of its connection to the phrase “love”, and the last sense returns no hypernym. If we check the synonyms and the definition of “agape”, this connection becomes clearer.

wordnet.synset("agape.n.01").definition()

OUTPUT >>>

'(Christian theology) the love of God or Christ for mankind'

The definition of “agape” shows the religious context that connects the word “love” with its hyponym. The synonyms of “agape” can make this context clearer.

for syn in wordnet.synsets("agape"):

    for l in syn.lemmas():

        print(l.name())

OUTPUT >>>

agape

agape

agape_love

agape

love_feast

agape

gaping

The synonyms of “agape” reflect its “Christian love” context as a hyponym of the word “love”, because “love feast” is one of the synonyms of “agape”, and “love feast” is a term from Christian tradition.
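The hypernym-hyponym round trip that was checked by hand above can also be sketched as a small helper. The function name “hyponyms_point_back” is hypothetical and is not part of NLTK; it only relies on the “hyponyms()”, “hypernyms()”, and “name()” methods of a synset.

```python
def hyponyms_point_back(parent):
    """Check that every hyponym of `parent` lists `parent` as a hypernym.

    Illustrative helper, not an NLTK function. Returns the names of any
    hyponyms that do NOT link back, so an empty list means the relation
    is consistent in both directions.
    """
    missing = []
    for child in parent.hyponyms():
        if parent not in child.hypernyms():
            missing.append(child.name())
    return missing


# Usage with NLTK (assumes the WordNet corpus is already downloaded):
# from nltk.corpus import wordnet
# print(hyponyms_point_back(wordnet.synset("love.n.01")))
```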

The NLTK WordNet Hypernyms and Hyponyms show the context of the word and the possible topicality association of the concept. Hyponym finding via NLTK and NLP can be supported by auditing the hypernyms and synonyms, along with the definitions of the words. Topic Modeling is an important part of the NLTK Hypernym and Hyponym connections. In this context, the Topic Modeling with Bertopic can be given as an example.

How to Find Verb Frames of a Verb with NLTK WordNet and Python?

The verb frames of a verb can be found with the “frame_ids” and “frame_strings” methods of NLTK WordNet lemmas. A verb frame is a generic sentence pattern, such as “Somebody run PP”, that shows how the verb can be used within a sentence. Below, you can see an example usage of the “frame_ids” and “frame_strings” with NLTK WordNet to find the verb frames.

for lemma in wordnet.synset('run.v.02').lemmas():

    print(lemma, lemma.frame_ids())

    print(" | ".join(lemma.frame_strings()))

OUTPUT >>>

Lemma('scat.v.01.scat') [1, 2, 22]

Something scat | Somebody scat | Somebody scat PP

Lemma('scat.v.01.run') [1, 2, 22]

Something run | Somebody run | Somebody run PP

Lemma('scat.v.01.scarper') [1, 2, 22]

Something scarper | Somebody scarper | Somebody scarper PP

Lemma('scat.v.01.turn_tail') [1, 2, 22]

Something turn_tail | Somebody turn_tail | Somebody turn_tail PP

Lemma('scat.v.01.lam') [1, 2, 22]

Something lam | Somebody lam | Somebody lam PP

Lemma('scat.v.01.run_away') [1, 2, 22]

Something run_away | Somebody run_away | Somebody run_away PP

Lemma('scat.v.01.hightail_it') [1, 2, 22]

Something hightail_it | Somebody hightail_it | Somebody hightail_it PP

Lemma('scat.v.01.bunk') [1, 2, 22]

Something bunk | Somebody bunk | Somebody bunk PP

Lemma('scat.v.01.head_for_the_hills') [1, 2, 22]

Something head_for_the_hills | Somebody head_for_the_hills | Somebody head_for_the_hills PP

Lemma('scat.v.01.take_to_the_woods') [1, 2, 22]

Something take_to_the_woods | Somebody take_to_the_woods | Somebody take_to_the_woods PP

Lemma('scat.v.01.escape') [1, 2, 22]

Something escape | Somebody escape | Somebody escape PP

Lemma('scat.v.01.fly_the_coop') [1, 2, 22]

Something fly_the_coop | Somebody fly_the_coop | Somebody fly_the_coop PP

Lemma('scat.v.01.break_away') [1, 2, 22]

Something break_away | Somebody break_away | Somebody break_away PP

The example above demonstrates how to list the verb frames for every lemma of a verb sense. The second sense of the verb “run” shares its synset with variations and synonyms such as “turn_tail”, “scat”, “break_away”, “escape” and other contextual synonyms. The verb frames are helpful to find the possible word replacements and contextual connections between the sentences. If the specific verb can be replaced by one of the lemmas within the same verb frame without changing the meaning of the sentence or the context of the paragraph, it means that the verb frames are used properly.
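When the printed output gets long, it can be easier to collect the frames into a dictionary first. The sketch below is one possible way to do that; the helper name “frames_by_lemma” is hypothetical and is not part of NLTK, and it only uses the “lemmas()”, “name()”, and “frame_strings()” methods shown above.

```python
def frames_by_lemma(synset):
    """Map each lemma name of a verb synset to its frame strings.

    Illustrative helper, not part of NLTK.
    """
    return {
        lemma.name(): list(lemma.frame_strings())
        for lemma in synset.lemmas()
    }


# Usage with NLTK (assumes the WordNet corpus is already downloaded):
# from nltk.corpus import wordnet
# frames = frames_by_lemma(wordnet.synset("run.v.02"))
# print(frames.get("escape"))
```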

How to Find Similar Words for a targeted Word with NLTK WordNet and Python?

To find similar words to each other with NLTK Wordnet and Python, the “lch_similarity” and the “path_similarity” are used. The NLTK WordNet measures the word similarity based on the hypernym and hyponym taxonomy. The distance between the words within the hypernym and hyponym paths represents the similarity level between them. The similarity types and methods that can be used within the NLTK WordNet to measure the word similarity are listed below.

- Resnik Similarity with “synset1.res_similarity(synset2, ic)”.

- Wu-Palmer Similarity with “synset1.wup_similarity(synset2)”.

- Leacock-Chodorow Similarity with “synset1.lch_similarity(synset2)”.

- Path Similarity with “synset1.path_similarity(synset2)”.

Example measurement of the word similarity with NLTK WordNet can be found below.

wordnet.synset("dog.n.01").path_similarity(wordnet.synset("cat.n.01"))

OUTPUT >>>

0.2

The word similarity score within the NLTK WordNet represents the similarity between two senses. The path similarity score is between 0 and 1. A score close to 0 represents little similarity, while 1 represents identical senses. Thus, the example measurement for word similarity with Python above shows that the first “noun” senses of “cat” and “dog” have a path similarity of 0.2.
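The 0.2 score can be reproduced by hand. Path similarity is calculated as 1 divided by the shortest path length plus 1. The sketch below assumes that the shortest path between “dog.n.01” and “cat.n.01” has 4 edges (dog, canine, carnivore, feline, cat); the function name is hypothetical and only illustrates the arithmetic.

```python
def path_similarity_from_distance(distance):
    """Path similarity as 1 / (shortest path length in edges + 1)."""
    if distance < 0:
        raise ValueError("distance must be non-negative")
    return 1.0 / (distance + 1)


# Assumed shortest path: dog -> canine -> carnivore <- feline <- cat (4 edges)
print(path_similarity_from_distance(4))  # 0.2
print(path_similarity_from_distance(0))  # 1.0 (identical senses)
```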

The “Leacock-Chodorow Similarity” uses the shortest path between two senses in the hypernym and hyponym taxonomy, together with the maximum depth of that taxonomy. The score grows as the path gets shorter relative to the taxonomy depth. Below, you can find example usage of the Leacock-Chodorow Similarity with NLTK WordNet.

wordnet.synset("dog.n.01").lch_similarity(wordnet.synset("cat.n.01"))

OUTPUT >>>

2.0281482472922856

The example above shows the score of the word similarity based on the Leacock-Chodorow Similarity with NLTK WordNet. Unlike path similarity, this score is not limited to the range between 0 and 1. Finding similar words with Python and NLTK WordNet is a broad topic that can be handled with formulas like “-log(p/2d)”, where “p” is the shortest path length and “d” is the depth of the taxonomy, and with other similarity measurements or root node attributes. Word similarity is useful for evaluating word predictions and replacements. An NLP algorithm can replace the words based on their similarity and check how much the context shifts. If the context shifts too much after the replacement, it means that the content is more relevant to the first context candidate. And, word similarity with NLTK can be used for relevance calculation, or Information Retrieval systems.
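As a worked example of the “-log(p/2d)” formula, the sketch below reproduces the score above by hand. It assumes p = 5 nodes on the shortest path between “dog.n.01” and “cat.n.01” and d = 19 as the maximum depth of the noun taxonomy. Both values are inferred from the printed result rather than stated in this guide, and the function name is hypothetical.

```python
import math


def lch_from_path(path_nodes, taxonomy_depth):
    """Leacock-Chodorow score: -log(p / (2 * d))."""
    return -math.log(path_nodes / (2.0 * taxonomy_depth))


# Assumptions: p = 5 nodes on the shortest dog/cat path,
# d = 19 as the maximum depth of the noun taxonomy.
score = lch_from_path(5, 19)
print(round(score, 4))  # 2.0281
```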

How to Find Topic Domains of a Word with NLTK WordNet and Python?

NLTK WordNet has a “topic domain” attribute for a specific word sense. The topic domain shows the word’s context and its value for a knowledge domain. The NLTK WordNet can be used to understand the topicality and topical relevance of one piece of content to another. All of the documents from a website or a book, or all of the sentences from a piece of content, can be taken with their words to calculate the topic domains. The dominant topic domain can signal the main context of the document. Thus, NLTK WordNet, or a Semantic Network with a proper dataset, is useful for a search engine.
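The idea of a dominant topic domain can be sketched as a simple count. The helper below is hypothetical and is not part of NLTK; it uses the “topic_domains()” method that is introduced in the steps below, and it expects a “reader” object that behaves like “nltk.corpus.wordnet”. It counts every sense of every word without any disambiguation, so the result is only a rough signal.

```python
from collections import Counter


def topic_domain_counts(words, reader):
    """Count topic domains over every sense of every word.

    `reader` is expected to behave like `nltk.corpus.wordnet`
    (a `synsets(word)` method returning objects with `topic_domains()`).
    Illustrative helper, not part of NLTK.
    """
    counts = Counter()
    for word in words:
        for synset in reader.synsets(word):
            for domain in synset.topic_domains():
                counts[domain.name()] += 1
    return counts


# Usage with NLTK (assumes the WordNet corpus is already downloaded):
# from nltk.corpus import wordnet
# counts = topic_domain_counts(["code", "compiler", "bug"], wordnet)
# print(counts.most_common(3))
```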

To find the topic domains of a word with NLTK WordNet, and Python follow the steps below.

- Import the NLTK.corpus and wordnet to find the topic domain.

- Choose an example word or phrase to take the topic domain.

- Use the “synset” method of Wordnet for the chosen word.

- Use the “topic_domains()” method of the “synset” object.

- Read the output of the “topic_domains()” example.

Example usage of the NLTK WordNet to find the topic domain of a word can be found below.

wordnet.synset('code.n.03').topic_domains()

OUTPUT >>>

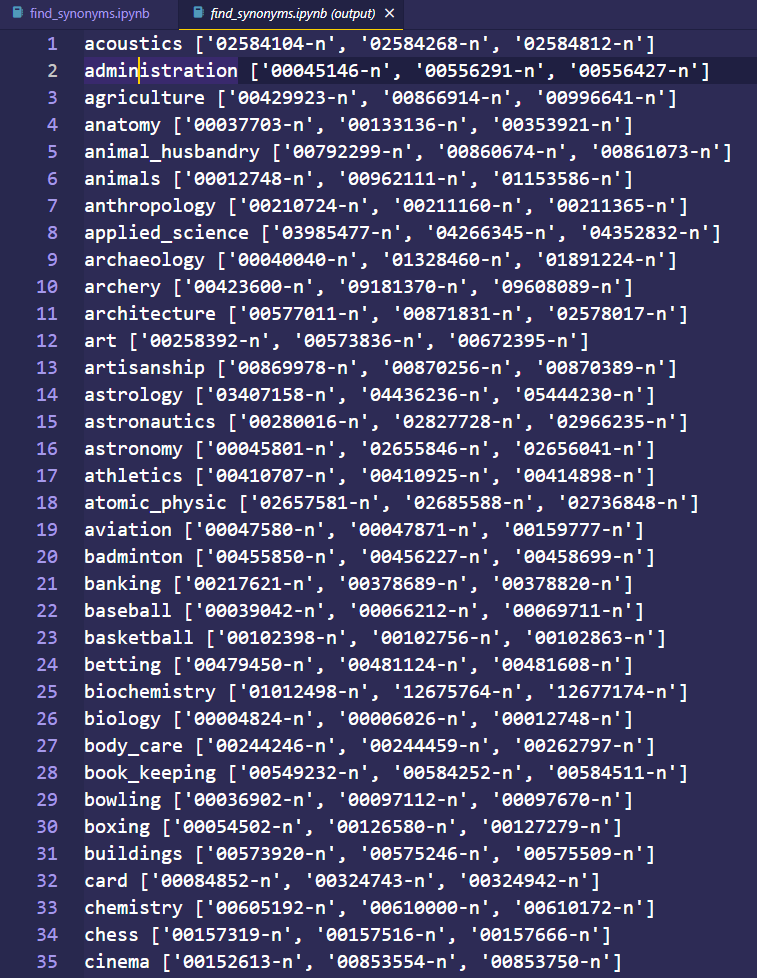

[Synset('computer_science.n.01')]

The example above shows that the topic domain of the third noun sense of the word “code” is “computer science”. One of the problems with diagnosing the topic domains of words from NLTK WordNet is that the topic modeling and hierarchy might not be detailed enough. To compensate, the WordNet Domains can be used. To use the WordNet Domains, an application with an email address and acceptance of the Creative Commons license is necessary. With the WordNet Domains, more than 400 topic domains can be explored. To print the topic domains within the WordNet Domains, use the code example below.

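from collections import defaultdict

from nltk.corpus import wordnet as wn

# Map each domain to its synset ids, and each synset id to its domains.

domain2synsets = defaultdict(list)

synset2domains = defaultdict(list)

# Each line of the WordNet Domains file holds "<offset>-<pos>\t<domain> <domain> ...".

with open('wn-domains-3.2-20070223', 'r') as domains_file:

    for line in domains_file:

        ssid, doms = line.strip().split('\t')

        doms = doms.split()

        synset2domains[ssid] = doms

        for d in doms:

            domain2synsets[d].append(ssid)

# Print the domains of every synset whose id appears in the file.

# The ids only match when the file and the installed WordNet use the same offsets.

for ss in wn.all_synsets():

    ssid = str(ss.offset()).zfill(8) + "-" + ss.pos()

    if synset2domains[ssid]:

        print(ss, ssid, synset2domains[ssid])

# Print the first three synset ids of every domain.

for dom in sorted(domain2synsets):

    print(dom, domain2synsets[dom][:3])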

OUTPUT >>>

acoustics ['02584104-n', '02584268-n', '02584812-n']

administration ['00045146-n', '00556291-n', '00556427-n']

agriculture ['00429923-n', '00866914-n', '00996641-n']

anatomy ['00037703-n', '00133136-n', '00353921-n']

animal_husbandry ['00792299-n', '00860674-n', '00861073-n']

animals ['00012748-n', '00962111-n', '01153586-n']

anthropology ['00210724-n', '00211160-n', '00211365-n']

applied_science ['03985477-n', '04266345-n', '04352832-n']

archaeology ['00040040-n', '01328460-n', '01891224-n']

archery ['00423600-n', '09181370-n', '09608089-n']

architecture ['00577011-n', '00871831-n', '02578017-n']

art ['00258392-n', '00573836-n', '00672395-n']

artisanship ['00869978-n', '00870256-n', '00870389-n']

astrology ['03407158-n', '04436236-n', '05444230-n']

astronautics ['00280016-n', '02827728-n', '02966235-n']

astronomy ['00045801-n', '02655846-n', '02656041-n']

athletics ['00410707-n', '00410925-n', '00414898-n']

atomic_physic ['02657581-n', '02685588-n', '02736848-n']

aviation ['00047580-n', '00047871-n', '00159777-n']

badminton ['00455850-n', '00456227-n', '00458699-n']

Finding topics within the documents with the topic domains of the words via NLTK WordNet can be done in a better way by using the WordNet Domains. Above, you can see the output of the WordNet Domains with Python.
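The synset identifiers in the output above combine a zero-padded, eight-digit offset with a part-of-speech letter. A minimal sketch of that formatting step is below. The function name “wordnet_domains_id” is hypothetical; with NLTK, the two inputs would come from the “offset()” and “pos()” methods of a synset.

```python
def wordnet_domains_id(offset, pos):
    """Build an id like '00045146-n' from a synset offset and POS tag."""
    return f"{int(offset):08d}-{pos}"


print(wordnet_domains_id(45146, "n"))  # 00045146-n
```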

Google Search Engine has a similar topicality and topic domain understanding to the NLTK WordNet and the WordNet Domains. Google NLP API gives more than 100 topics for a specific section. In this context, reading the Google Knowledge Graph API and Python tutorial and guideline is beneficial to see the topics, entities, and their classification based on the text.

To learn more, read the WordNet Domains Guideline.

How to Find Region Domains of a Word with NLTK WordNet and Python?

Region domains represent the region where a specific word is used. They are useful to see the cultural affinity of the word. A region domain can signal the topic domain. But, the difference between the region domain and the topic domain is that the region domain represents the geographical and cultural category of a word more than its main topic. To find the region domain with NLTK WordNet, the “region_domains()” method is used. The instructions to find the region domains of a word with NLTK WordNet are below.

- Import the NLTK Corpus and WordNet to find the region domain of a word.

- Choose a word to find the region domains.

- Use the “wordnet.synset()” for the example word.

- Use the “region_domains()” method.

An example of finding region domains with NLTK WordNet and Python can be found below.

wordnet.synset('pukka.a.01').region_domains()

OUTPUT >>>

[Synset('india.n.01')]

The example above shows that the word “pukka” as an adjective has India as the region domain. The same process can be implemented for all of the words from a document to find the overall region signals of a document with NLTK WordNet.

The difference between the topic domain and the region domain is that the topic domain focuses on the meaning of the word while the region domain focuses on the word’s geography and culture. Similarly, the “usage domain” focuses on which language style uses the specific word. For instance, a word can be from a medicine topic, with Japan as a region, while being used in scientific language. Thus, NLTK WordNet provides information for exploring the language tonality, region signals, and topicality of a text. The next section will demonstrate an example for the NLTK WordNet usage domains.

How to Find Usage Domains of a Word with NLTK WordNet and Python?

The usage domain involves the language style in which a word is used. A word can be used by scientists, or it can be used within slang language. To learn the content’s authenticity, target audience, or the author’s writing character, the usage domain can be used. In this context, the tone of a written text can be seen. To find the usage domain of a word with the NLTK WordNet, the “usage_domains()” method should be used. The instructions for finding usage domains with NLTK WordNet are below.

- Import the NLTK Corpus and WordNet

- Choose a word to find the usage domains.

- Use the “wordnet.synset()” for the word.

- Use the “usage_domains()” method.

Example usage for the NLTK WordNet usage domain finding is below.

wn.synset('fuck.n.01').usage_domains()

OUTPUT >>>

[Synset('obscenity.n.02'), Synset('slang.n.02')]

The example of finding the usage domain of a word with NLTK WordNet and Python above shows that the word belongs to the “obscenity” and “slang” usage domains. NLTK WordNet usage domains can be a good signal to see the overall content character of a website, a document, or a book.
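The topic, region, and usage domains can also be collected together for one word. The sketch below is one possible way to do that; the helper name “domain_profile” is hypothetical and is not part of NLTK, and the “reader” argument is expected to behave like “nltk.corpus.wordnet”. It merges all senses of the word, so it does not tell which sense a domain belongs to.

```python
def domain_profile(word, reader):
    """Collect topic, region and usage domain names across all senses.

    `reader` is expected to behave like `nltk.corpus.wordnet`.
    Illustrative helper, not part of NLTK.
    """
    profile = {"topic": set(), "region": set(), "usage": set()}
    for synset in reader.synsets(word):
        profile["topic"].update(d.name() for d in synset.topic_domains())
        profile["region"].update(d.name() for d in synset.region_domains())
        profile["usage"].update(d.name() for d in synset.usage_domains())
    # Sorted lists keep the output stable between runs.
    return {kind: sorted(names) for kind, names in profile.items()}


# Usage with NLTK (assumes the WordNet corpus is already downloaded):
# from nltk.corpus import wordnet
# print(domain_profile("pukka", wordnet))
```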

How to Use WordNet for other languages with Python NLTK?

To use the NLTK WordNet with another language, the “lang” parameter of methods such as “lemma_names” and “lemmas” is used. The ISO-639 language codes are used to identify the language that will be used for the WordNet NLTK, and the available codes can be listed with “wordnet.langs()”. Below, you can find example usage of NLTK WordNet for other languages to find the synonyms or the antonyms along with other lexical relations with Python.

wordnet.synset("love.v.01").lemma_names("jpn")

OUTPUT >>>

['いとおしむ',

'いとおしがる',

'傾慕+する',

'好く',

'寵愛+する',

'愛しむ',

'愛おしむ',

'愛好+する',

'愛寵+する',

'愛慕+する',

'慕う',

'ほれ込む']

The example of finding the synonyms for the word “love” in Japanese with NLTK WordNet and Python can be seen above. NLTK WordNet can be used for finding synonyms and lemmas of English words via words from other languages. The example below shows how to find the English senses of the Italian word “macchina”.

wordnet.lemmas('macchina', lang='ita')

OUTPUT >>>

[Lemma('car.n.01.macchina'),

Lemma('locomotive.n.01.macchina'),

Lemma('machine.n.01.macchina'),

Lemma('machine.n.02.macchina')]

Using words from other languages for finding synonyms within the English language via NLTK WordNet is useful to see the possible connections between English and other languages. A word from Italian can have different types of lexical relations within English. The cross-language synonym finding shows the understanding of the semantics in a language-agnostic way. Thus, using NLTK WordNet for multi-language applications such as search engines is useful to see a topic with more layers.
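The cross-language lookup above can be taken one step further by listing the English lemma names of every matched sense. The sketch below is hypothetical and is not part of NLTK; it assumes a “reader” that behaves like “nltk.corpus.wordnet”, and that the multilingual WordNet data for the chosen language is installed.

```python
def english_equivalents(word, lang, reader):
    """Return English lemma names for every sense of a foreign word.

    `reader` is expected to behave like `nltk.corpus.wordnet`, and `lang`
    is a language code it supports (for example "ita").
    Illustrative helper, not part of NLTK.
    """
    result = {}
    for lemma in reader.lemmas(word, lang=lang):
        synset = lemma.synset()
        # lemma_names() without arguments returns the English lemma names.
        result[synset.name()] = list(synset.lemma_names())
    return result


# Usage with NLTK (assumes WordNet and its multilingual data are downloaded):
# from nltk.corpus import wordnet
# print(english_equivalents("macchina", "ita", wordnet))
```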

Other NLTK tasks for NLP that are related to NLTK WordNet can be found below.

- NLTK Tokenize is related to NLTK WordNet, because every word that is tokenized via NLTK can be audited with its hypernyms, hyponyms or synonyms within the WordNet.

- NLTK Lemmatize is related to NLTK WordNet as an NLP task because it provides the different variations and versions of the same word to understand its context.

- NLTK Stemming is related to NLTK WordNet as an NLP task because it gives the different stemmed versions of the words.

- NLTK Part of Speech Tag is related to NLTK WordNet as an NLP task because it gives the different roles for a word within a sentence by protecting its context.

Related terms to the WordNet from NLTK comprise the lexical relations and semantic relevance along with the similarity. Natural Language Toolkit for a WordNet is connected to the terms below.

- FrameNet: FrameNet is connected to the NLTK WordNet because it involves the semantic role labels based on the predicates of the sentences and their meanings.

- Lexical Relations: Lexical relations are connected to WordNet NLTK because they provide lexical similarities and connections between different terms and concepts.

- Semantic Relevance: Semantic Relevance is connected to NLTK WordNet because it shows how a word is relevant to another one based on semantic relations.

- Semantic Similarity: Semantic Similarity is connected to NLTK because it provides similarity between two words based on their contexts.

- Hypernyms: Hypernyms are connected to WordNet because they involve the broader, superior concepts above a word.

- Hyponyms: Hyponyms are connected to WordNet because they involve the narrower, inferior concepts below a word.

- Synonyms: Synonyms are connected to WordNet because they involve the other words that have the same meaning.

- Antonyms: Antonyms are connected to WordNet because they involve the words with the opposite meaning of a word.

- Holonyms: Holonyms are connected to WordNet because they involve the whole of a thing.

- Meronyms: Meronyms are connected to WordNet because they involve the sub-parts of a thing.

- Partonym: Partonym is connected to WordNet because it involves the change of a word to another one with different suffixes or prefixes.

- Polysemy: Polysemy is connected to WordNet because it involves the same phrases with different meanings.

- Natural Language Processing is connected to WordNet because it is the process of understanding human language with machines.

- Semantic Search is connected to WordNet because it provides meaningful connections between different words within a semantic map.

- Semantic SEO is connected to WordNet because WordNet can be used for better content writing practices.

- Semantic Web is connected to WordNet because semantic web behavior patterns have meaningful word relations.

- Named Entity Recognition is connected to WordNet because it provides recognition of the named entities.

Last Thoughts on NLTK WordNet and Holistic SEO

NLTK WordNet and Holistic SEO should be used together. Holistic SEO contains every vertical and angle of search engine optimization. NLTK WordNet can provide different contexts for a specific word, so that an SEO can check the possible contextual connections between different phrases. NLTK WordNet is a prominent tool to understand the text along with text cleaning and text processing. Google and other semantic search engines such as Microsoft Bing can use synonyms, antonyms, and hypernyms or hyponyms for query rewriting. A search engine can process a query while tokenizing it and replacing the words with other related words with different contexts. NLTK WordNet can help evaluate the topical relevance of a specific content piece to a query, or query cluster. Based on this, NLTK WordNet and Holistic SEO should be taken and processed together.

The NLTK Guide will continue to be updated regularly based on the new NLP and NLTK updates.
