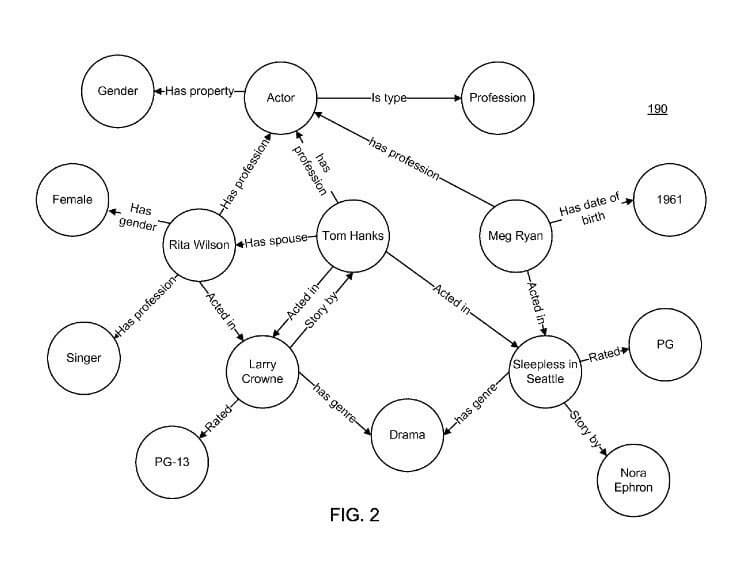

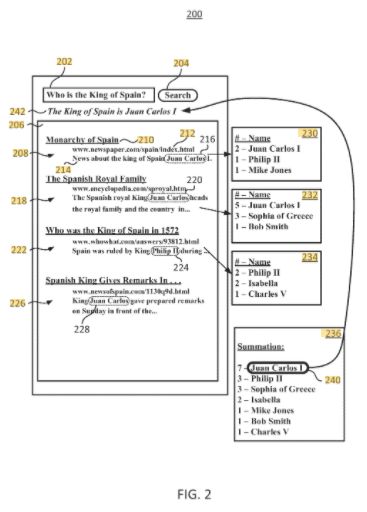

Google Knowledge Graph produces Knowledge Panels for entities that are reliable and have enough “Search Demand”. Knowledge Panels that appear in the SERP show the relationships between related entities and how Google interprets the character of queries and search intent. There is much more to say about the Knowledge Graph and Knowledge Panel features that are valuable for SEO, but this article mainly focuses on the Google Knowledge Graph API. With the Google Knowledge Graph API, you can see the difference between a “string” and a “thing”.

Google is a Hybrid Search Engine. In other words, it offers a Personalized Search Experience with Phrase-based, Entity-based, and Hypertextual features, and it integrates Artificial Intelligence Systems into its algorithms. Amit Singhal summed it up nicely in 2012, when the Knowledge Graph was announced:

“Not strings, things”.

Amit Singhal, Google Fellow

If you do not know what an entity is for a Search Engine and how it can help a Search Engine understand content better, you may check Google’s AI Blog to learn more about related terms such as Contextual Information Retriever, or you may read our related guidelines, such as “Information Extraction with Python”. You can also read our “PyTrend Guideline” article to see how related entities are registered in Google Trends and which queries they are associated with.

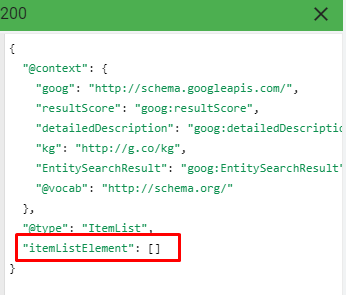

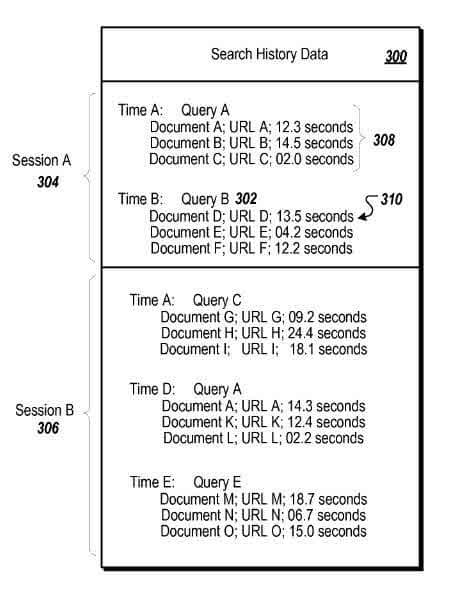

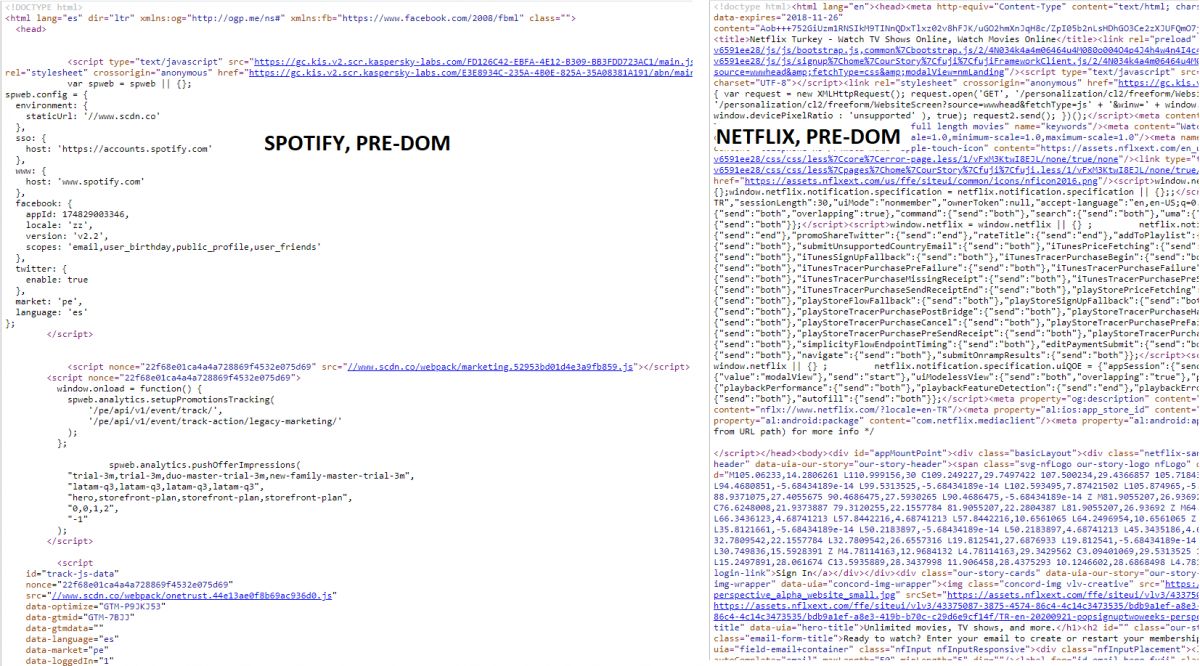

In this article, we will connect to the Google Knowledge Graph API with Python and Advertools and turn the most relevant entities for specific queries into a data frame. We will examine the entities’ IDs, their URLs in the Knowledge Base, how relevant they are to each query, and in which language they are perceived. We will also talk about why it matters for SEO that a company is perceived as a real-world entity, along with Semantic Search Engine features and the importance of entities in ranking algorithms.

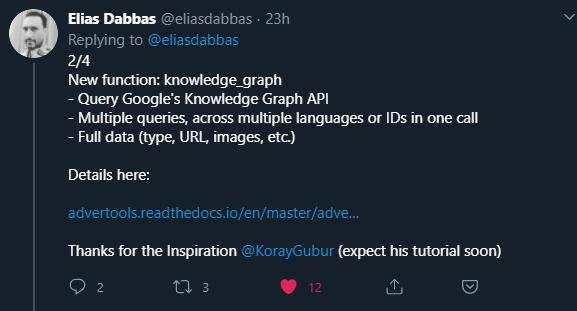

Before going any further, I should explain what Advertools is. Advertools is a Python package developed by Elias Dabbas. It combines coding and marketing concepts with a data science perspective and makes many processes easier for Holistic SEOs.

I thank Elias Dabbas for developing Advertools and for creating this function, which was inspired by an SEO case study I wrote on Serpstat for Unibaby around the question “Is your brand a string or a thing?”.

Using Google Knowledge Graph API with Advertools to Analyze Entity Profiles

Having explained the importance of the Google Knowledge Graph, the Knowledge Base, and entities for SEO, we can start using Advertools for our analysis. If you haven’t installed Advertools before, you can install it by typing the following command in the terminal.

pip install advertools

#or

pip3 install advertools

To use the Google Knowledge Graph API with Python and see which meaning a query has for Google, and with which confidence and relevance scores, we will follow the steps below.

- Reading the Warning of the Google Knowledge Graph API

- Installing or Updating the Advertools

- Opening a Google Developer Console Account and Creating the Credentials

- Running our Function with Different Parameter Variations to Analyse Google’s Perception of Real-World Entities

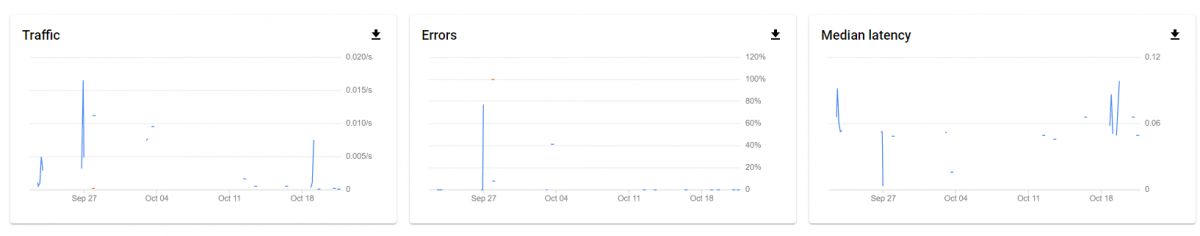

1. Is the Google Knowledge Graph API Suitable for Use in a Production-Critical Service?

The official Google Knowledge Graph API documentation includes the following warning, and the Advertools documentation repeats it.

This API is not suitable for use as a production-critical service. Your product should not form a critical dependence on this API.

Google Knowledge Graph API Documentation

In other words, the responses of the Google Knowledge Graph API may change while you are using it. Also, the Google KG API doesn’t have a dedicated team supporting it, and there is no one to contact about it. When I asked the lovely Martin Splitt about this warning, he didn’t say that the results are unreliable; he said that the results should be approached carefully. The warning might also relate to the future of the Google Knowledge Graph API: according to Martin Splitt, the API is experimental and can change at any time.

In this context, neither the reliability of the results nor the technical issues with the Google KG API are clear, but in any case, we should be careful when interpreting the information we acquire via the Google KG API.

2. Installing or Updating the Advertools

pip install advertools #for downloading it

pip install --upgrade advertools #for updating it

#the --user option installs Advertools only for the current user, within that user's permissions

If you have installed Advertools before, make sure that its version is 0.10.7 or later. You can check the version with the command below.

pip show advertools

OUTPUT>>>

Name: advertools

Version: 0.10.7

Summary: Productivity and analysis tools for online marketing

Home-page: https://github.com/eliasdabbas/advertools

Author: Elias Dabbas

Author-email: [email protected]

License: MIT license

Location: c:\python38\lib\site-packages

Requires: scrapy, pandas, twython, pyasn1

Required-by:

As we can see, the installed version of Advertools is 0.10.7.
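If you prefer to check the version from Python (for example, inside the same notebook), the sketch below reads the installed version with the standard library’s importlib.metadata and compares it with 0.10.7. The helper names are made up for this example, and the simple parser assumes a “major.minor.patch” style version string.

```python
# A minimal sketch: check the installed Advertools version from Python.
# parse_version() and is_recent_enough() are hypothetical helpers for this example.
import re
from importlib.metadata import PackageNotFoundError, version

MINIMUM = (0, 10, 7)  # the minimum version mentioned in this guideline


def parse_version(text: str) -> tuple:
    """Turn '0.10.7' into (0, 10, 7), keeping only the leading digits of each part."""
    parts = []
    for piece in text.split(".")[:3]:
        match = re.match(r"\d+", piece)
        parts.append(int(match.group()) if match else 0)
    return tuple(parts)


def is_recent_enough(installed: str, minimum: tuple = MINIMUM) -> bool:
    # Tuples compare element by element, so (0, 13, 2) >= (0, 10, 7) is True.
    return parse_version(installed) >= minimum


if __name__ == "__main__":
    try:
        installed = version("advertools")
        print(installed, "OK" if is_recent_enough(installed) else "please upgrade")
    except PackageNotFoundError:
        print("advertools is not installed")
```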

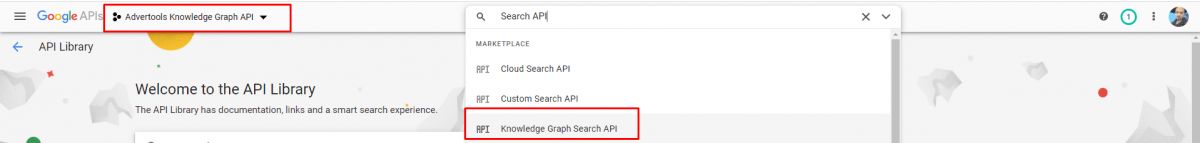

3. Opening a Google Developer Console Account and Creating Credentials

To keep this guideline focused, I will cover this section only briefly. Below are the steps for opening a Google Developer Console account and creating a project and credentials.

- Open a Google Developer Console Account (Requires a Gmail Account)

- Create a new project under the same Google Developer Console Account

- Enable the Google Knowledge Graph API (Knowledge Graph Search API) in the project.

- Create and Copy the Credentials (Read the Security and Billing Warnings)

After creating the Google Knowledge Graph project, do not share the credentials with anyone. Giving your project a name that reflects its purpose can also prevent confusion later.
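One way to avoid pasting the key into notebooks, screenshots, or published code is to read it from an environment variable. The sketch below assumes a variable named KG_API_KEY; that name and the load_api_key() helper are hypothetical, so use whatever fits your setup.

```python
# A minimal sketch: read the API key from an environment variable instead of
# hard-coding it. "KG_API_KEY" is a hypothetical variable name.
import os


def load_api_key(var_name: str = "KG_API_KEY") -> str:
    key = os.environ.get(var_name, "").strip()
    if not key:
        # Fail loudly so an empty key is never sent to the API.
        raise RuntimeError(
            f"Set the {var_name} environment variable to your Knowledge Graph API key."
        )
    return key
```

After setting the variable in your terminal, you could call knowledge_graph(key=load_api_key(), query='Apple') instead of writing the key into the code.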

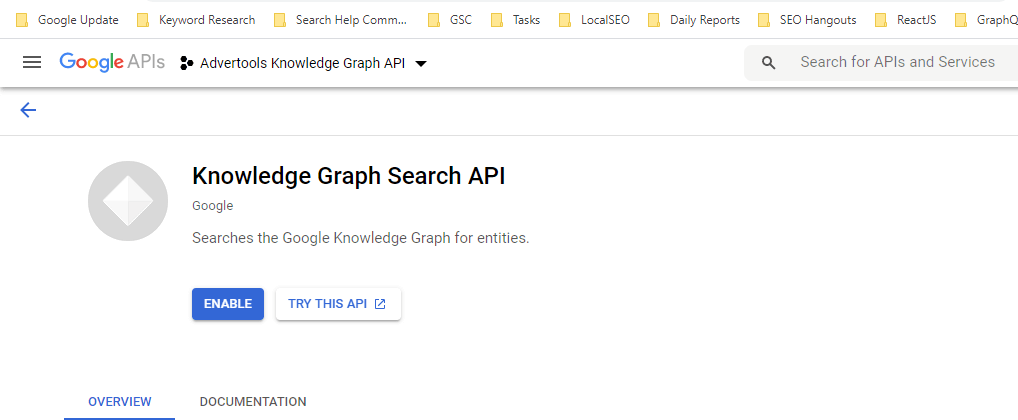

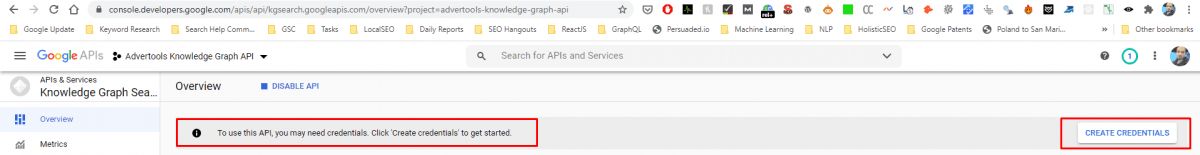

After creating the project (“Advertools Knowledge Graph API” in my case, as in the image) and selecting the related API from the search bar, you will see the screen below for activating the Google Knowledge Graph API.

My favorite part of the image above is the sentence “Searches the Google Knowledge Graph for Entities”. Isn’t it beautiful? To continue, you should enable (activate) the Knowledge Graph Search API for the created project. After you have activated the API, you will see the screen below to create and copy the credentials.

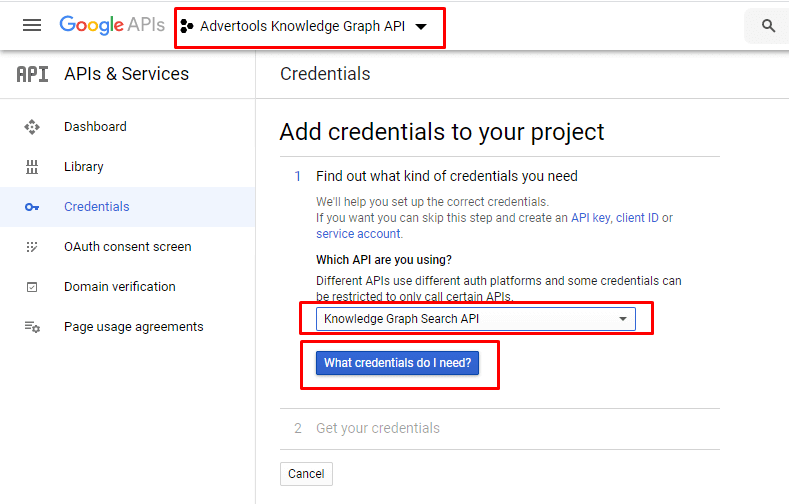

After clicking “Create Credentials”, you will see the screen below for setting the final conditions of your API usage.

After clicking the “What Credentials do I need?” button, you will see your API Key as below.

After creating the Credentials, you may see your API Key with a name and a restriction warning as below.

You should also pay attention to the “Remember to configure the OAuth Consent Screen with information about your application.” warning. Along with it, you should restrict the usage of the API key we have generated. If you share the key with others without any restriction, it can consume your quota, create a costly bill, and lead to data breaches. I have restricted my key, and before publishing this guideline, I will also shut down this project so that nobody else can use the key in my name.

I also recommend exploring the other Google Developer Console APIs; there are many useful ones for Holistic SEOs, marketers, and coders, such as the Vision AI API, Google Sheets API, or Search Console API. Now that we have our credentials, we can start the most important and fun section: using the Knowledge Graph API with Python.

4. Using Advertools for Querying in Knowledge Graph API

The Advertools function for the Google Knowledge Graph API is “knowledge_graph()”. Elias Dabbas always pays attention to making Advertools easy to understand and fast to use, which is why the name is so clear. Below is a basic use case of “knowledge_graph()” in a Jupyter notebook (“.ipynb” file) opened in VSCode.

from advertools import knowledge_graph

key = 'YOUR_API_KEY' #replace with your own key; never publish a real one

knowledge_graph(key=key, query='Apple')

OUTPUT>>>

2020-09-19 19:23:56,971 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=YOUR_API_KEY, query=Apple

- In the first line, we imported the necessary function from Advertools.

- In the second line, we assigned our API key from the previous section to a variable.

- We called the “knowledge_graph()” function with the “key” and “query” parameters. “key” is our API key, while “query” is “Apple” in our first example.
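To see what sits underneath such a call, the sketch below builds a request to the Knowledge Graph Search API with Python’s standard library. The endpoint and the query, key, limit, and languages parameters come from Google’s Knowledge Graph Search API reference; the helper functions are illustrative and are not Advertools’ internal code. A limit of 20 is used only because it matches the number of rows returned in this article’s examples.

```python
# A minimal sketch of a raw Knowledge Graph Search API request using only the
# standard library. build_request_url() and search_entities() are hypothetical helpers.
import json
from urllib.parse import urlencode
from urllib.request import urlopen

ENDPOINT = "https://kgsearch.googleapis.com/v1/entities:search"


def build_request_url(key: str, query: str, limit: int = 20, languages: str = "en") -> str:
    params = {"query": query, "key": key, "limit": limit, "languages": languages}
    return f"{ENDPOINT}?{urlencode(params)}"


def search_entities(key: str, query: str, **kwargs) -> dict:
    """Send the request and return the parsed JSON response (needs network access)."""
    with urlopen(build_request_url(key, query, **kwargs)) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling build_request_url('YOUR_API_KEY', 'Apple') only builds the URL; search_entities() is the part that actually contacts Google and uses your quota.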

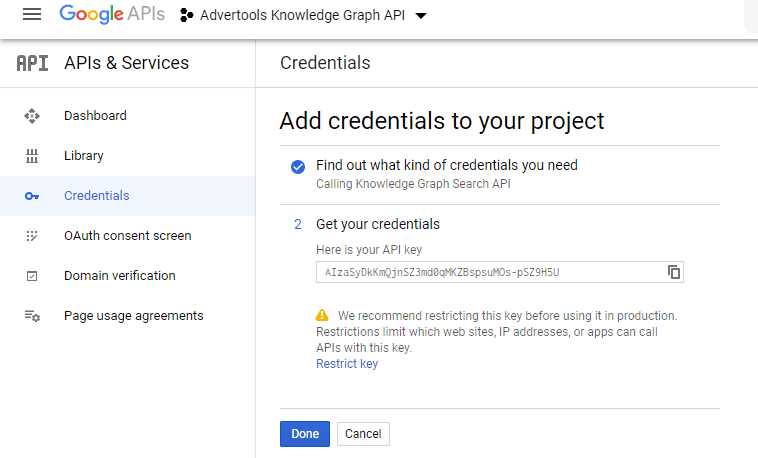

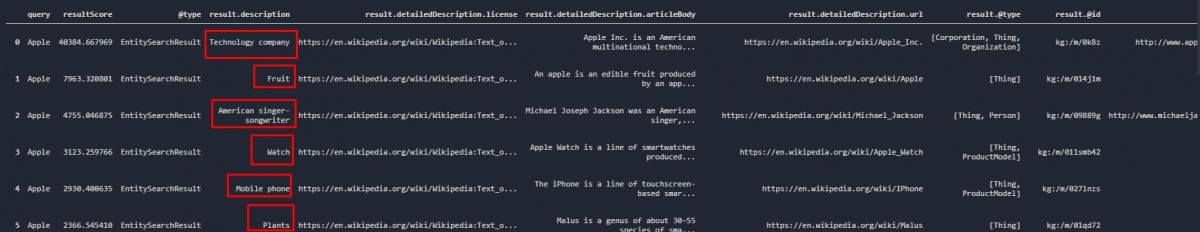

Below, you will see the output of our “knowledge_graph()” function call.

kg_df = knowledge_graph(key=key, query='Apple')

#We have assigned the function's output to a variable so that we can reuse it later without calling the API again.
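The same “call once, reuse the output” idea can be made automatic with a cache. In the sketch below, fetch_entities() is a hypothetical stand-in for a real call (in practice its body would call knowledge_graph(key=key, query=query)); functools.lru_cache returns the stored result when the same key and query are requested again, and the counter exists only to make that visible.

```python
# A minimal sketch: cache responses per (key, query) so repeated calls
# do not send new requests. fetch_entities() is a hypothetical stand-in.
from functools import lru_cache

CALLS = {"count": 0}  # counts real "requests" so the caching is visible


@lru_cache(maxsize=128)
def fetch_entities(key: str, query: str) -> tuple:
    CALLS["count"] += 1
    # Placeholder result; in practice, call advertools.knowledge_graph(key=key, query=query).
    return (f"results for {query!r}",)
```

If you cache a DataFrame this way, every cached call returns the same object, so call .copy() before modifying it.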

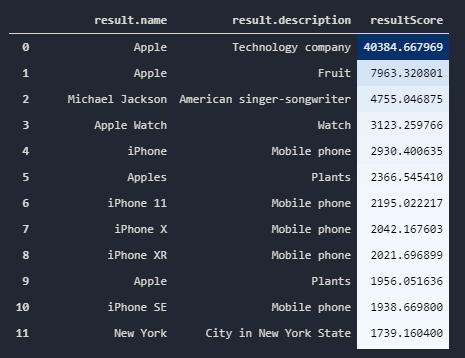

kg_df[['result.name','result.description', 'resultScore']]

We have different entity results for the string “Apple”. You can see the entity results with their “resultScore” values for the “Apple” string.

If you are on a small screen and cannot see the result scores in the image, you can check them in the table below.

| Result Description | Result Score |
| --- | --- |
| Technology Company | 40384.667969 |
| Fruit | 7963.320801 |
| American Singer-songwriter | 4755.046875 |
| Watch | 3123.259766 |
| Mobile Phone | 2930.400635 |
| Plants | 2366.545410 |
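Because these raw scores are not percentages, it can help to compare them with each other. The sketch below uses the six scores from the table above (so the shares are relative to these six rows only, not to all 20 results) and computes each entity’s share of the total and how far the top entity is ahead of the runner-up. This is just one illustrative way of reading the scores, not a method from Google or Advertools.

```python
# Scores copied from the "Apple" table above; the helpers are hypothetical.
apple_scores = {
    "Technology Company": 40384.667969,
    "Fruit": 7963.320801,
    "American Singer-songwriter": 4755.046875,
    "Watch": 3123.259766,
    "Mobile Phone": 2930.400635,
    "Plants": 2366.545410,
}


def score_shares(scores: dict) -> dict:
    """Each entity's share of the summed scores of the rows given."""
    total = sum(scores.values())
    return {name: value / total for name, value in scores.items()}


def top_to_runner_up_ratio(scores: dict) -> float:
    """How many times larger the best score is than the second-best one."""
    first, second = sorted(scores.values(), reverse=True)[:2]
    return first / second


shares = score_shares(apple_scores)
for name, share in sorted(shares.items(), key=lambda item: item[1], reverse=True):
    print(f"{name:<28} {share:6.1%}")
print(f"Top entity / runner-up: {top_to_runner_up_ratio(apple_scores):.2f}")
```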

To understand these scores and descriptions in terms of entity relations, let’s open a new sub-section, which will also show why Advertools’ “knowledge_graph()” function matters.

How to Interpret Entities in the Google Knowledge Graph for a Given Query with Advertools

We actually have 14 more results, but these are enough for a quick analysis. The “resultScore” is the score of the entity’s “relevance” and “confidence” for the given string. In this example, the “Apple” string has entity profiles that are entirely different from each other and that also sit in a deeper hierarchical order.

Apple is the name of a “Technology Company” that produces “Mobile Phones” and “Watches”. We all know that, but let me give you an example. Read the sentence below to understand why entities, their meanings, and their relations are important for a Search Engine’s capacity to understand.

Apple is the name of a “Technology Company” that produces “plants” and “fruits”.

Does it sound weird? In the past, it wasn’t as weird for Search Engines as it is for human beings. Entities, their side profiles and sub-profiles, and their relations to each other help Natural Language Processing (NLP), Natural Language Understanding (NLU), and Natural Language Generation (NLG), and they help Search Engines create better Search Engine Results Pages (SERP) with more relevant and logical content.

We have shown you a “bizarre” example, but if you think about it, among trillions of facts and entities, Search Engines have lots of gray areas. So, using relevant entities together with related entities and their sub-profiles and side profiles is important for creating better “context” and more “comprehensive” content.

This is the first useful aspect of Advertools’ Knowledge Graph API function: it shows the entities relevant to a string along with their relevance and confidence levels. Most SEOs focus only on the “search volume of strings”, but what about the context of the “relevant entities” for a searched string? Let me give you one more example below.

Let’s say, you have a short sentence in your travel article like the one below.

“We have gone to the Bellagio Hotel from New York in two hours “.

The sentence above mentions a landmark, the “Bellagio Hotel”, which is in Las Vegas. As of 2020, no one can travel from New York City to the Bellagio Hotel in Las Vegas in two hours with existing technology. In this context, the Search Engine cannot consider the sentence reliable because it contains an obvious error. Entities help a Search Engine understand what the “Bellagio Hotel” really is. In reality, there are also other entities whose names are close to the “Bellagio Hotel” and “New York” strings.

Someone can’t get to the Bellagio Hotel from the City of New York in two hours, but they can get there from the “New York-New York Hotel & Casino” in two hours, because both hotels are in the same city, Las Vegas. However, I assume that the “New York-New York Hotel & Casino” carries little weight for the “New York” string compared with the “City of New York”, and since the “City of New York” and travel-related queries have a long history together, Google won’t make the assumption I did. Even if Google could make this connection, such calculations would cost more time and computation. Below, you will see another example from Google AI’s blog post “Integrating Retrieval into Language Representation Models”.

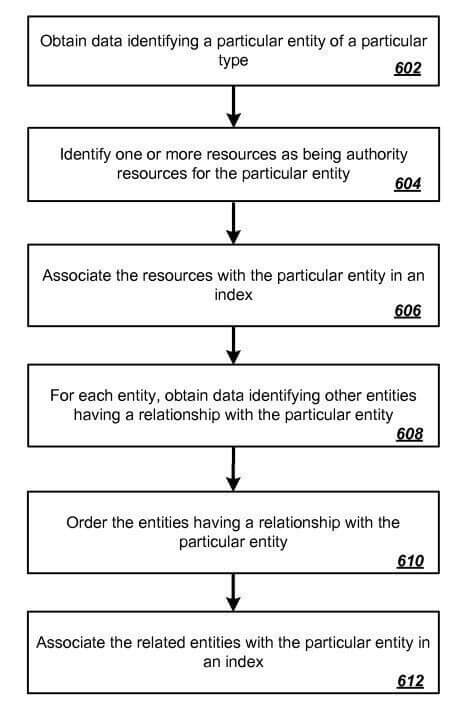

Is Your Brand a String or a Thing? Entity Association Process for Targeted Industries and Search Terms

This is the question that inspired Advertools’ “knowledge_graph()” function: “Is your brand a string or a thing?” I asked this question in my Unibaby SEO Case Study, which was a major success, and I wrote a case study article about the project on Serpstat.

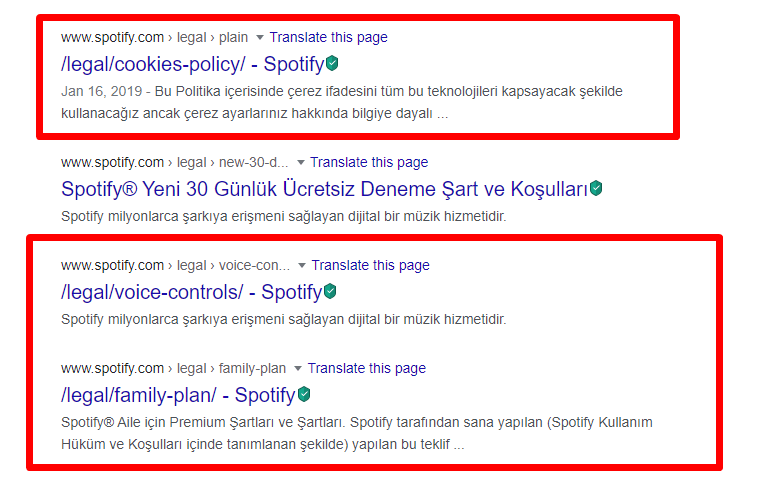

In the Unibaby SEO Project, the first thing I audited was whether the brand was a string or an entity. If it was an entity, which other entities was it related to? And how was it positioned in Google’s real-world perception compared with its competitors? The same audit was also performed for the “related queries” and “related phrases”. Initially, the firm was not an entity, although it was a subsidiary of a large holding, Eczacıbaşı Holding, which had become authoritative in health and baby products. Transforming Unibaby from a “string” that was not in the Google Knowledge Graph into an entity was the first goal of the entire SEO Case Study.

In this context, once Unibaby.com.tr became an entity, we also considered which other entities it would be associated with. These were Eczacıbaşı Holding, the founder of Eczacıbaşı Holding, the board members, the products Unibaby.com.tr sells, the services it provides, and all the subjects its target audience needs information about. Thus, the company was associated with certain types of entities and with entities on certain subjects, just as described in Google Patents; this association appears in the Google SERP and can be observed in the Google Knowledge Graph API with Advertools’ “knowledge_graph()” function. The main goal here was to get higher trust and relevance scores from entity-based algorithms. Also, a real-world entity is far more authoritative and trustworthy than a “non-recognized string”, since its real-world existence is provable.

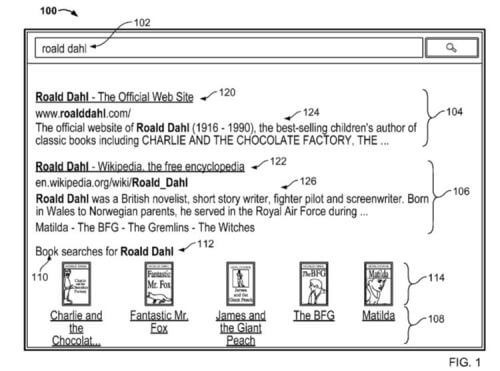

As in the “Roald Dahl” example from Google’s related patents, being a “real-world entity” that can be related to the target market’s search terms, such as “baby products” or “baby growth process”, was the most important phase of this task.

This is a view from the SEO Case Study that shows how I searched the Google Knowledge Graph API before using Advertools. You may read the Unibaby SEO Case Study to see more about the case and its intersections with “entity-based SEO”.

Importance of Sub-sides and Sub-profiles of Entities in a Knowledge Base Hierarchy

The second useful aspect of Advertools’ “knowledge_graph()” function is that it returns the most related entities along with their sub-profiles and side profiles. For instance, “Apple” is a “fruit” and a “plant” at the same time; here, “plant” is the hierarchical parent that includes “fruit”. Content can cover different features and profiles of an entity along with related entities. This helps Google understand the theme of the content, which content has a comprehensive angle, and which content fits the given query better.

In our example, we used the string “Apple”, which is why the “Watch” and “Mobile Phone” entities ranked lower than “Fruit” but higher than “Plants”. This shows that different relevance scores are assigned to different entity profiles for the given query. To show this more concretely, let’s use Advertools again.

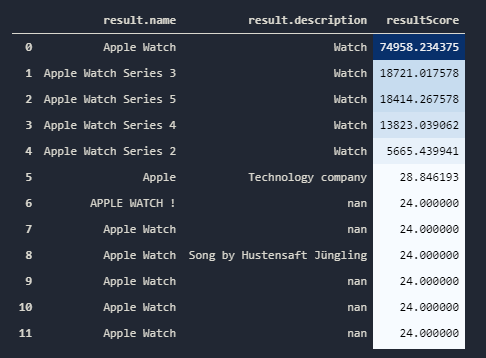

knowledge_graph(key=key, query='Apple Watch')[['result.name', 'result.description', 'resultScore']].style.background_gradient(cmap='Blues')

OUTPUT>>>

2020-09-19 20:44:29,018 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=YOUR_API_KEY, query=Apple Watch

You will see the results for the “Apple Watch” string.

The result scores along with the results have changed completely, as you may see below.

If you have a really small screen and can’t see the result scores properly, you may use the table below instead of the image above.

| Result Name | Result Score |
| --- | --- |
| Apple Watch | 74958.234375 |
| Apple Watch Series 3 | 18721.017578 |
| Apple Watch Series 5 | 18414.267578 |
| Apple Watch Series 4 | 13823.039062 |
| Apple Watch Series 2 | 5665.439941 |
| Apple | 28.846193 |

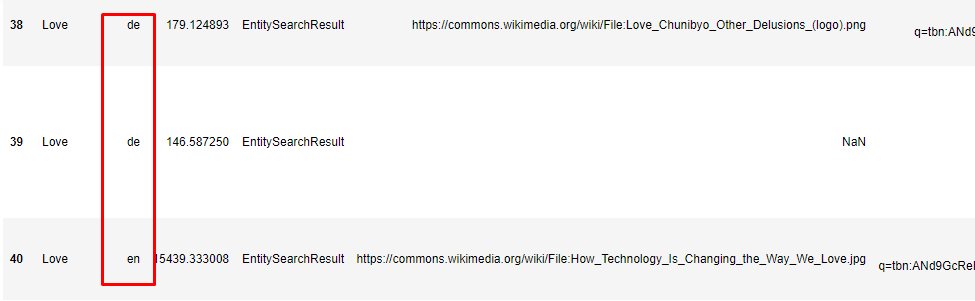

You may see that for the “Apple Watch” string, we have different results with different “resultScore” values. There are no “fruits” or “plants” for this query. We also have side profiles of the “Apple Watch” entity, such as “Music Recording”: there is a song named “Apple Watch” by “Hustensaft Jüngling”, which I believe is a kind of local marketing song in Germany (or at least I hope so).

These results reminded me of a Google patent titled “Detecting Product Lines Within Product Search Queries”. It relates to detecting new products and new product queries in order to show the new products in the search results for related search trends. It also helps Google improve its Knowledge Base along with organic and consistent shopping results. But here, I wonder why “Apple Watch Series 3” and “Apple Watch Series 5” have much higher relevance and confidence scores than “Apple Watch Series 2” and “Apple Watch Series 4”. This brings us to the next question.

How Are Google Knowledge Graph Entities Registered, and With What Score?

Before explaining it, let me show you how you can shape Google’s search results using only URL query parameters, to see how Google creates a knowledge panel for each entity. Let’s first choose an entity from the output of our Advertools function “knowledge_graph()”.

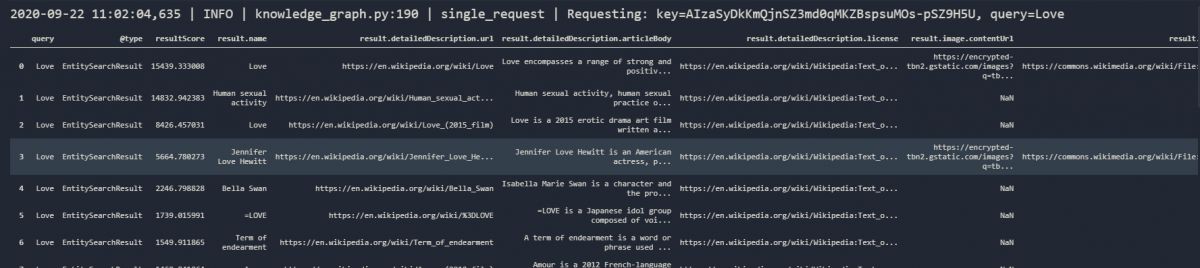

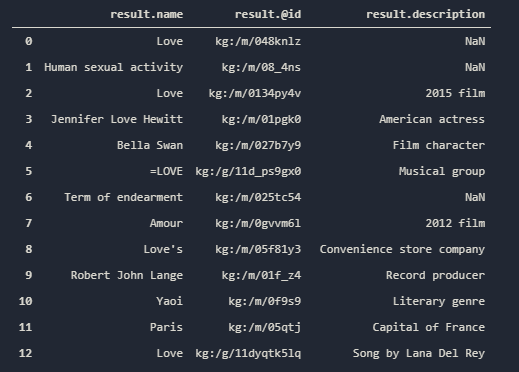

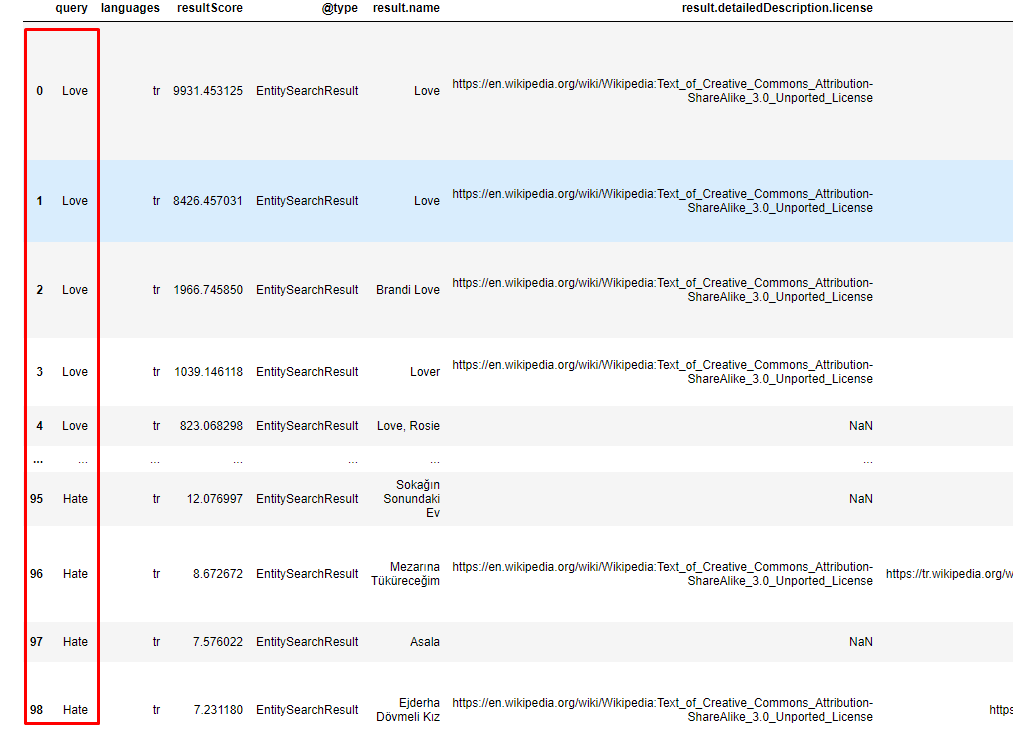

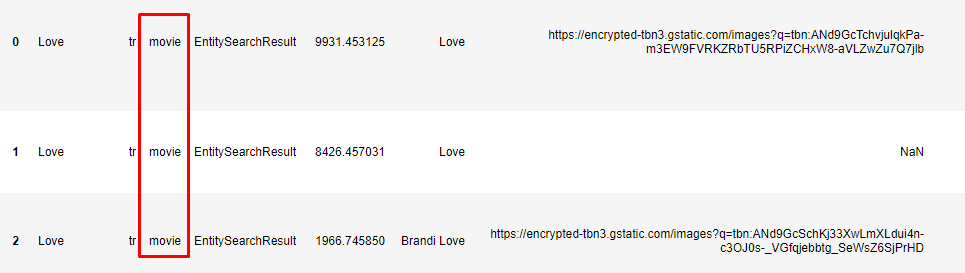

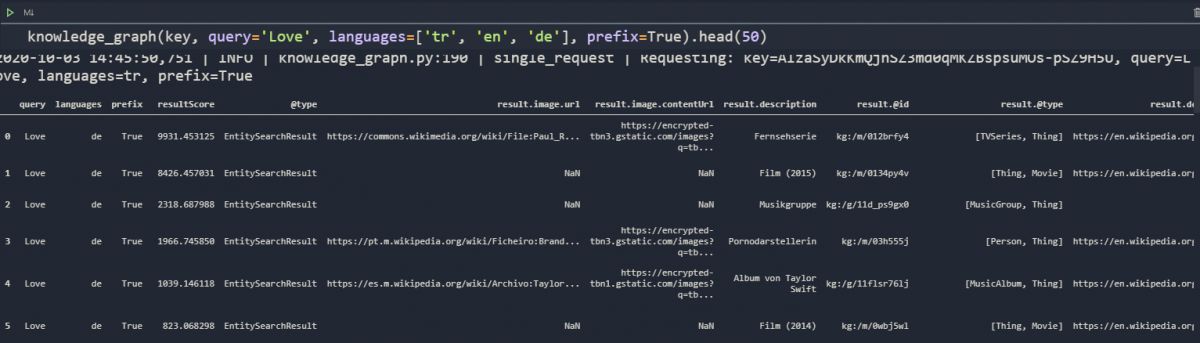

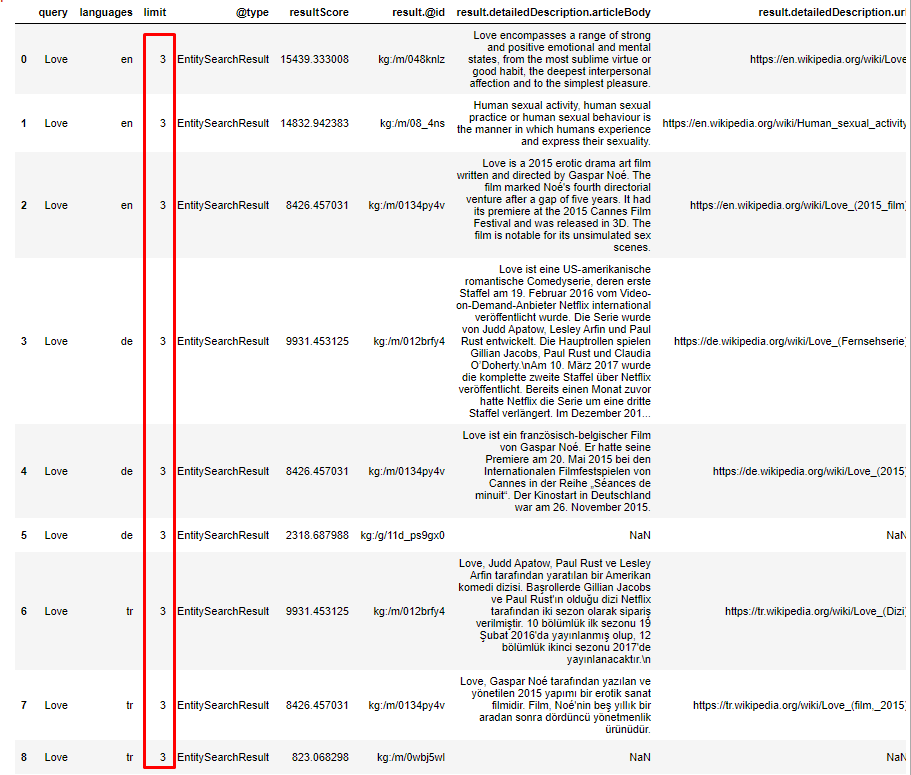

knowledge_graph(key=key, query='Love')

OUTPUT>>>

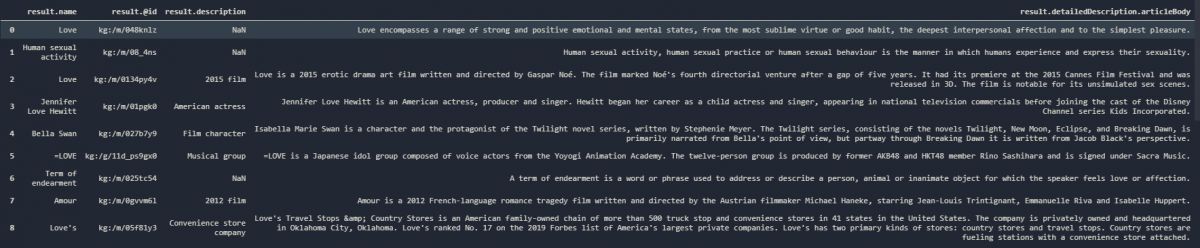

2020-09-22 11:02:04,635 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=YOUR_API_KEY, query=Love

You will see the output of our call for the query “Love”.

To show how Google defines, profiles, categorizes, and ranks entities according to their relevance and confidence scores, we should examine the output columns of Advertools’ “knowledge_graph()” function.

Which Columns and Details Exist for an Entity in the Knowledge Graph?

Let’s check our columns of the data frame.

kg_df = knowledge_graph(key=key, query='Love')

#We have assigned our function's output to a variable so that we avoid sending unnecessary requests to Google. Calling the function every time may also consume your quota and create a bigger bill. So, using a variable is the more logical option.

#Big thanks to Elias Dabbas for his suggestions on this subject.

#Since the output is a pandas DataFrame, you could also call knowledge_graph(key=key, query='Love').columns directly, but for the reasons above, that is unnecessary work.

kg_df.columns

OUTPUT>>>

2020-09-22 11:07:50,145 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=YOUR_API_KEY, query=Love

Index(['query', 'resultScore', '@type', 'result.image.url',
       'result.image.contentUrl', 'result.@id',
       'result.detailedDescription.license',
       'result.detailedDescription.articleBody',
       'result.detailedDescription.url', 'result.@type', 'result.name',
       'result.description', 'result.url', 'query_time'],
      dtype='object')

The Knowledge Graph Search API, through Advertools’ “knowledge_graph()” function, gives us the columns below.

- “query” is the string we have searched for in the Knowledge Graph Search API.

- “resultScore” is the score of the entity for the given query, which we interpreted above as its relevance and confidence.

- “@type” is the type of each result item in our data frame.

- “result.image.url” is the URL of the entity’s image in our result data frame.

- “result.image.contentUrl” is the direct URL of the entity’s image file.

- “result.@id” is the entity ID, which is a very important detail for a Holistic SEO.

- “result.detailedDescription.license” is the license of the source of the entity’s detailed description.

- “result.detailedDescription.articleBody” is a detailed explanation of the entity.

- “result.detailedDescription.url” is the URL of the source of the detailed explanation.

- “result.@type” lists the types of the entity, such as “Thing”, “Person”, or “Organization”.

- “result.name” is the name of the entity.

- “result.description” is the short definition of the entity, such as “Film in 2005”, “Musical Group”, or “Film Character”.

- “result.url” is the official website URL of the entity, when one is available, which is also quite important for a Holistic SEO.

- “query_time” is the time when we made the function call.
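The dotted column names above come from flattening the nested JSON that the API returns. The sketch below shows the idea with a small recursive function; the sample item is a hypothetical, trimmed-down result element (its name, ID, and description are taken from this article’s “Love” example, while its exact structure and the placeholder score are illustrative). pandas.json_normalize() produces the same kind of dotted names.

```python
# A minimal sketch: flatten nested dictionaries into dotted keys such as
# "result.detailedDescription.articleBody". sample_item is hypothetical.

def flatten(item: dict, parent: str = "") -> dict:
    flat = {}
    for key, value in item.items():
        name = f"{parent}.{key}" if parent else key
        if isinstance(value, dict):
            flat.update(flatten(value, name))  # recurse into nested objects
        else:
            flat[name] = value  # lists and scalars are kept as they are
    return flat


sample_item = {
    "@type": "EntitySearchResult",
    "result": {
        "@id": "kg:/m/01pgk0",
        "name": "Jennifer Love Hewitt",
        "@type": ["Thing", "Person"],
        "description": "American actress",
        "detailedDescription": {
            "articleBody": "Jennifer Love Hewitt is an American actress, producer, and singer.",
        },
    },
    "resultScore": None,  # placeholder, not a real score
}

row = flatten(sample_item)
print(sorted(row))
```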

Having explained the details of Knowledge Graph entities via Advertools’ “knowledge_graph()” function, we can focus on how Google works with them.

How to Show Knowledge Panels for Entities in Google with URL Parameters and Queries?

To show one of our entities in a Google Knowledge Panel, we need its entity ID.

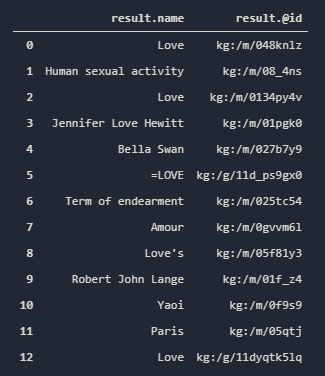

#knowledge_graph(key=key, query='Love')[['result.name',"result.@id"]]

kg_df[['result.name',"result.@id"]]

OUTPUT>>>

2020-09-22 11:31:08,080 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=YOUR_API_KEY, query=Love

result.name result.@id
0 Love kg:/m/048knlz
1 Human sexual activity kg:/m/08_4ns
2 Love kg:/m/0134py4v
3 Jennifer Love Hewitt kg:/m/01pgk0
4 Bella Swan kg:/m/027b7y9
5 =LOVE kg:/g/11d_ps9gx0
6 Term of endearment kg:/m/025tc54
7 Amour kg:/m/0gvvm6l
8 Love's kg:/m/05f81y3
9 Robert John Lange kg:/m/01f_z4
10 Yaoi kg:/m/0f9s9
11 Paris kg:/m/05qtj
12 Love kg:/g/11dyqtk5lq
13 Tomato kg:/m/07j87
14 Bali kg:/m/01bkb
15 Philadelphia kg:/m/0dclg
16 Prince kg:/m/01vvycq
17 Love kg:/m/0fhr1
18 Love kg:/g/11g7kqqnph
19 Love kg:/g/1yfp3mlzm

You can see the entity names and entity IDs side by side.

We will choose one of those entities to show the Knowledge Panel for it as below.

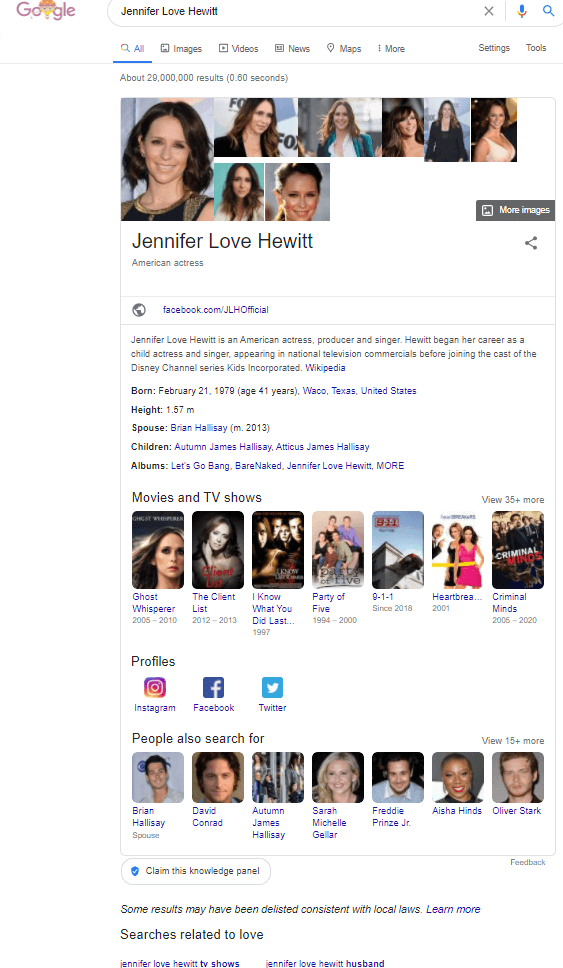

https://www.google.com/search?q=love&kponly&kgmid=/m/01pgk0

#You may also use the cleaner URL below, as suggested by Elias Dabbas.

https://www.google.com/search?kgmid=/m/01pgk0&kponly

These URLs contain query parameters that make the SERP show only the chosen entity’s knowledge panel. “q=love” is the query, in other words, the string we search for. “kponly” means “knowledge panel only”; it tells Google to show only the knowledge panel in the results. “kgmid” is the Knowledge Graph machine ID, which tells Google which entity to show, and “/m/01pgk0” is that entity’s ID. In short, the first URL says: “search for the string ‘love’ and show me only the knowledge panel for the entity I gave you”. You may see the result below.

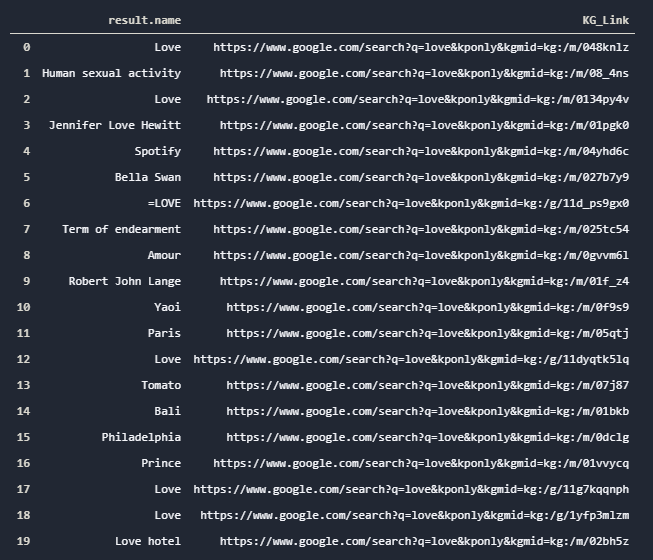
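The same URLs can be generated for any ID from the “result.@id” column. The sketch below uses hypothetical helpers that remove the “kg:” prefix (the kgmid values in the URLs above use the bare “/m/...” form) and optionally add the search string; with a single ID and no query, it reproduces the cleaner URL suggested above.

```python
# A minimal sketch: turn an ID such as "kg:/m/01pgk0" into a
# "knowledge panel only" search URL. Both helpers are hypothetical.
from typing import Optional
from urllib.parse import quote_plus


def kg_id_to_mid(kg_id: str) -> str:
    """Drop the "kg:" prefix returned by the API, if present."""
    return kg_id[len("kg:"):] if kg_id.startswith("kg:") else kg_id


def knowledge_panel_url(kg_id: str, query: Optional[str] = None) -> str:
    url = f"https://www.google.com/search?kgmid={kg_id_to_mid(kg_id)}&kponly"
    if query:
        url += f"&q={quote_plus(query)}"  # optional search string
    return url
```

For example, knowledge_panel_url('kg:/m/01pgk0') returns https://www.google.com/search?kgmid=/m/01pgk0&kponly.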

You may use the code below to create “Knowledge Panel Only Links” for the given entities, so that you can examine every related entity’s Google-made profile on the SERP.

kg_df = knowledge_graph(key=key, query='Love')

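#Drop the "kg:" prefix so that kgmid gets the bare "/m/..." form used in the URLs above.

kg_df['KG_Link'] = "https://www.google.com/search?q=love&kponly&kgmid=" + kg_df['result.@id'].astype(str).str.replace('kg:', '', regex=False)

kg_df[['result.name','KG_Link']].style.set_properties(**{'background-color': '#353b48', 'color':'#f5f6fa'})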

#Many thanks to Elias Dabbas for this string manipulation method for the Knowledge Graph Entity Links.

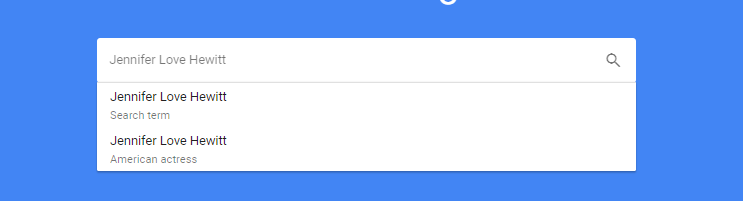

You may find a “Structured Search Engine” mindset with “Semantic Search” principles in the Knowledge Panel above. To see the difference between a string and an entity, you can also check Google Trends as below.

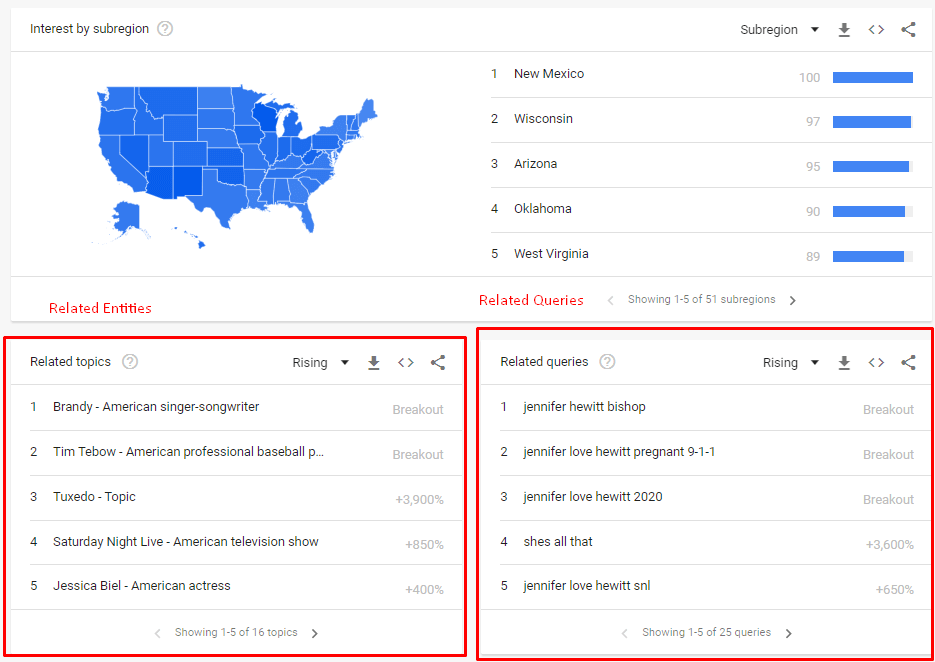

You can see two different options in Google Trends for the given query. One of them is a “search term”, which means just a “string”; its trend is calculated from string-matching searches. The other, “American Actress”, is the entity profile of Jennifer Love Hewitt; it includes all types of related searches for the actress, such as TV shows, interviews, her husband, children, or friends, along with related and similar entities.

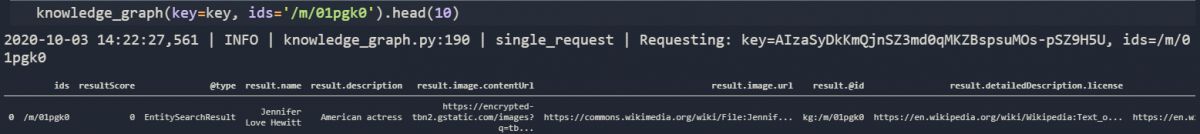

Let’s also see where the “American Actress” description in Google Trends comes from, using Advertools’ “knowledge_graph()” function.

kg_df[['result.name',"result.@id", "result.description"]]

OUTPUT>>>

2020-09-22 11:53:34,288 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=YOUR_API_KEY, query=Love

You may see the result for the “result.description” details below.

You may see that we have the “American Actress” description for Jennifer Love Hewitt, the “kg:/m/01pgk0” entity. Now, let’s check the Google Trends results for the same entity.

Google uses related strings and queries together to define and explain an entity. Now, let’s check the Google Trends URL for the given entity.

https://trends.google.com/trends/explore?q=%2Fm%2F01pgk0&geo=US

The only part you need to pay attention to is “%2Fm%2F01pgk0”, which is the URL-encoded form of “/m/01pgk0” (“%2F” stands for “/”). If you look carefully, you will see that it is the entity ID of “Jennifer Love Hewitt” in Google’s Knowledge Base. So, Google uses a semantic structure for Semantic Search, structuring all the information on the web according to the thinking patterns of human beings. This brings us to another question: “What is a Structured Search Engine, and how does it relate to Semantic Search?”.

You can also see the entities most related to a given entity with PyTrends’ “related_topics()” method.
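The Trends URL above can be built the same way as the knowledge panel links. In this sketch, urllib.parse.quote() with safe='' encodes every slash, which turns “/m/01pgk0” into the “%2Fm%2F01pgk0” form seen in that URL; the helper name and the default geo value are assumptions for illustration.

```python
# A minimal sketch: build a Google Trends "explore" URL for an entity ID.
# trends_explore_url() is a hypothetical helper.
from urllib.parse import quote


def trends_explore_url(kg_id: str, geo: str = "US") -> str:
    mid = kg_id[len("kg:"):] if kg_id.startswith("kg:") else kg_id
    # safe='' makes quote() encode "/" as "%2F" as well.
    return f"https://trends.google.com/trends/explore?q={quote(mid, safe='')}&geo={geo}"
```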

What is a Structured and Semantic Search Engine?

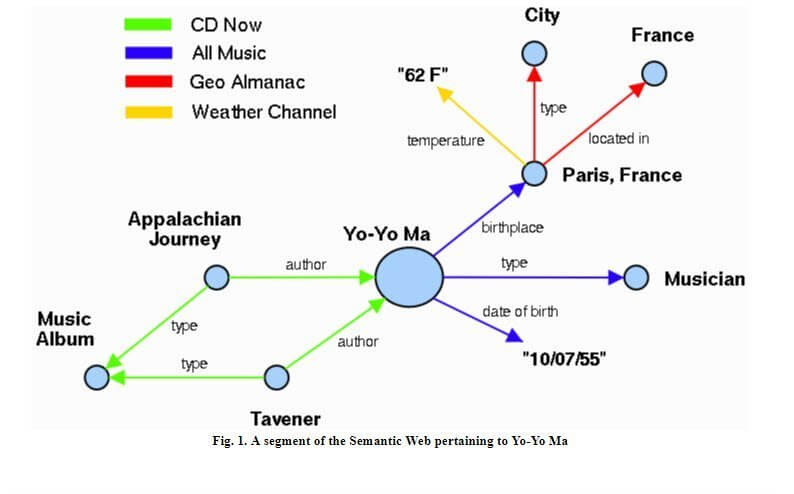

A Structured Search Engine is a search engine that profiles, categorizes, and organizes all the information on the web according to search patterns and the relevance of topics to each other. A Semantic Search Engine is the result of the structured data on the web. A Semantic Search Engine creates semantic relations between different entities, entity profiles, and features. It creates semantic queries, maps all the search intents for a given query and entity along with sub-intents, and organizes the best possible Search Engine Results Page for the user.

A Semantic Search Engine creates topical clusters and entity graphs to satisfy different sub-intents and micro-intents, along with mixed search intents, for the same topic. This is where Topical Authority and the Entity-based Search Engine come in. Topical Authority is the authority of a content publisher for a given topic; to increase topical authority, a topic should be handled with high expertise, detailed explanations, and structured navigation within a web entity. An Entity-based Search Engine recognizes the difference between real-world entities and “strings”. To create “confidence” and “relevance” between an entity, a topic, and a brand, Topical Authority is the key approach, and the Entity-based Search Engine is the key difference.

The term “Structured Search Engine” isn’t mine; below is an explanation from Google in 2011 related to this term.

The Structured Search Engine concept was explained in detail in 2011. The terms Structured Search Engine, Entity-based Search Engine, and Semantic Search Engine bring us to another topic: Semantic Search Engine Optimization. To improve topical authority for a given topic, one should give consistent signals and detailed common and unique information about sub-topics and related topics; Semantic SEO is the key methodology here. Since that is a topic for another day, let’s move on to our last question.

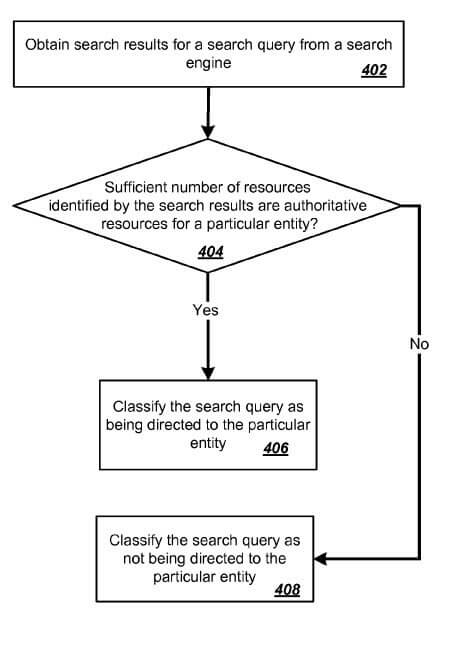

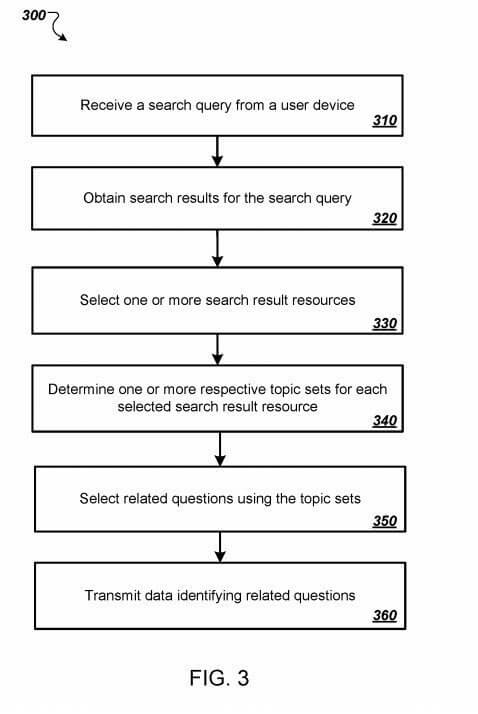

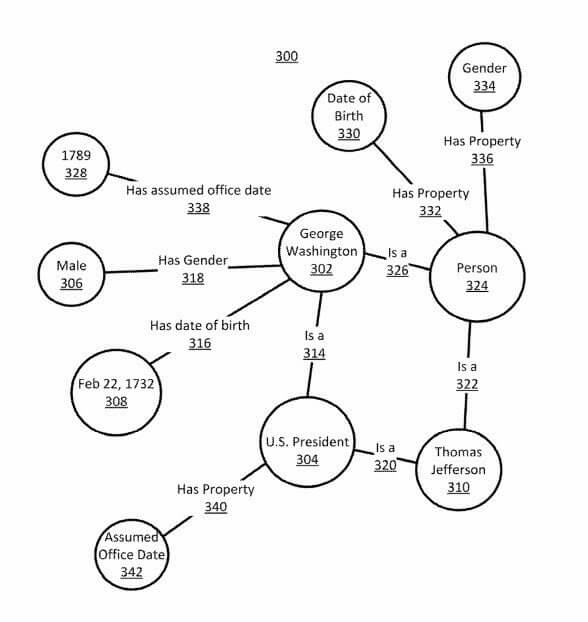

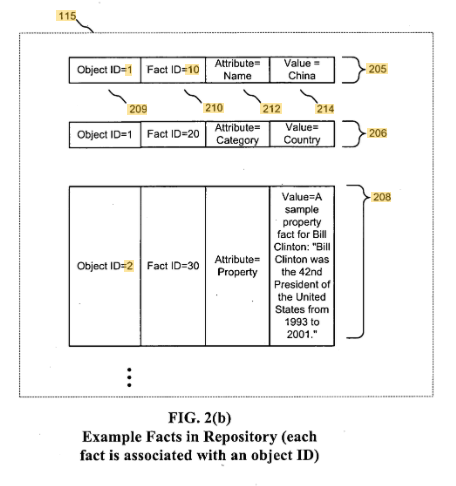

How Does Google Realize and Record Different Entities and Different Profiles of Entities?

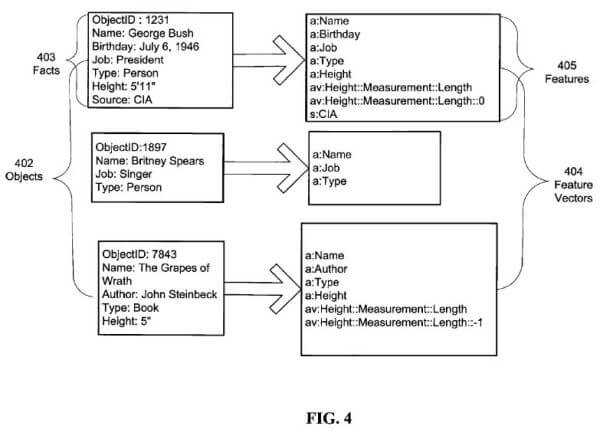

Since this is actually a guideline about Python SEO, I will give a brief summary of Entity Profiling and Recognition.

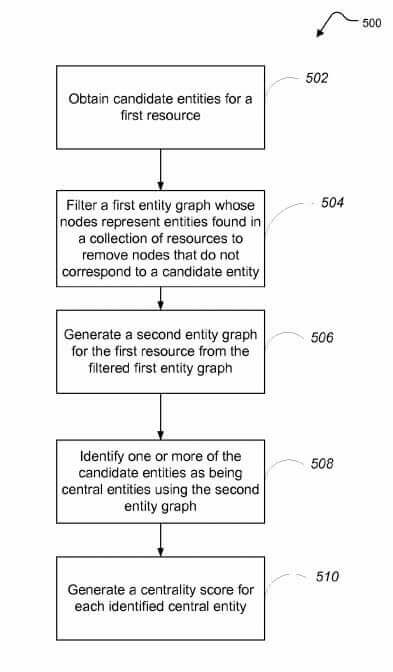

Google has many patents on recognizing real-world entities and answering questions about them. Some of the possible and valid methods for extracting entities and entity attributes for the Knowledge Base are below.

- Using queries for a given entity (entity-seeking queries) to extract questions.

- Using search trends for entity-attribution and entity-relation profiling.

- Using FAQ Pages to see the different sides of a given entity along with answers.

- Structuring the information in the unstructured database (web) via mutual points and popular patterns for a given query group.

- Grouping the entity types for grouping the related questions and queries.

- Extracting HTML Tables for grouping statistical data and exploring relational data.

- Using Entity-graphs to answer missing facts about another entity.

- Using Ontology for understanding and defining the merged attributes of an entity via web documents.

- Classifying the queries with micro-intents and characteristics along with “click satisfaction” models and user preferences.

- Defining the different phrases for the same entity, determining the phraserank for a given entity.

- Generating different and related questions for a given entity via the web documents.

- Using context vectors and Word2vec for Named Entity Disambiguation.

- Using images on the web documents to identify the entities on the web pages and their relations.

- Using quality and authority scores for the images to strengthen the confidence score for a given entity-query relation.

- Using web documents, links, texts, images, videos, subtitles, mentions, comments, views, music, apps, search queries, trends, or any other kind of content on the web to recognize real-world things and organize all of the world’s information.

Most of the cornerstones of search engines’ fact extraction methodologies are summarized above. These methodologies are also used for the Knowledge Graph API, which shows the relations of entities to each other along with their attributes and their relations to other topics and “strings”. For understanding semantic, human-like thinking patterns toward specific topics, concepts, and search patterns, and for using this Semantic Search Engine feature in SEO projects, Advertools’ Knowledge Graph API function offers great value.

Before explaining the rest of the attributes of Advertools’ “knowledge_graph()” function, let me show you a pattern in the Knowledge Base.
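To make the “context vectors” idea from the list above a little more concrete, the toy sketch below picks the meaning of an ambiguous mention by comparing the words around it with a small word profile for each candidate entity, using bag-of-words cosine similarity. The profiles are hypothetical, and this is a deliberately simplified stand-in: real systems use learned representations such as word2vec embeddings rather than hand-written word lists, and nothing here reflects Google’s actual implementation.

```python
# A toy sketch of context-based entity disambiguation with bag-of-words
# cosine similarity. PROFILES is hypothetical, hand-written data.
import math
import re
from collections import Counter

PROFILES = {
    "Apple (technology company)": "iphone watch mac company ceo store software device",
    "Apple (fruit)": "fruit tree orchard juice pie eat sweet plant",
}


def vectorize(text: str) -> Counter:
    """Count lowercase words; this Counter is the text's "context vector"."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def disambiguate(context: str, profiles: dict = PROFILES) -> str:
    """Return the candidate whose profile is most similar to the context."""
    context_vector = vectorize(context)
    return max(profiles, key=lambda name: cosine(context_vector, vectorize(profiles[name])))
```

For example, disambiguate("We picked a sweet apple from the tree for a pie") chooses the fruit profile, because “sweet”, “tree”, and “pie” appear only there.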

Question and Answer Patterns for Different Entity Types in the Knowledge Base of Google

If you check the answers in the Knowledge Graph, you will see a pattern in the results. These patterns also hold for Featured Snippets and for authoritative content publishers with high expertise. Let me show the answers from Google's Knowledge Base via Advertools’ “knowledge_graph()” function.

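```python
import pandas as pd
from advertools import knowledge_graph

# Show long text cells in full instead of truncating them
pd.set_option('display.max_colwidth', 500)

# key: your Google Knowledge Graph Search API key
kg_df = knowledge_graph(key=key, query='Love')
kg_df[['result.name', 'result.@id', 'result.description', 'result.detailedDescription.articleBody']]
```

OUTPUT>>>

```
2020-09-22 13:42:00,224 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Love
```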

We have expanded the column width via the “pd.set_option()” function so that we can see the full article body. Let’s check the output below.

If one examines the “detailed article body of the entities”, one will notice that every sentence follows the same pattern. Each sentence answers the “What” and “Who” questions and adds other types of information. Let’s sum up what these entity descriptions have in common.

- All the entity descriptions are definitive.

- All the entity descriptions answer the main questions of “what” and “who”.

- All the entity descriptions include related entities such as “places”, “dates”, “television channels”, “music albums”, “events”, or “movies”.

- All of the entity descriptions also answer the most related questions for the given entities.

- All of the entity descriptions share the same grammatical form.

- All of the entity descriptions give exact answers and speak with expertise.

- None of the entity descriptions include “subjective” opinions.

- None of the entity descriptions include unnecessary words.

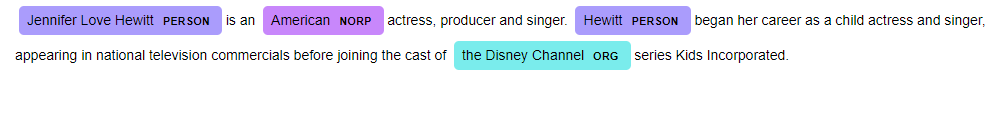

Let’s choose a detailed entity description for the analysis.

Jennifer Love Hewitt is an American actress, producer, and singer. Hewitt began her career as a child actress and singer, appearing in national television commercials before joining the cast of the Disney Channel series Kids Incorporated.


Let’s see which questions can be generated from here and which answers can be found.

- “Is Jennifer Love Hewitt an actress?”

- “Is Jennifer Love Hewitt a producer?”

- “Is Jennifer Love Hewitt a singer?”

- “Is Jennifer Love Hewitt an American?”

- “When did Jennifer Love Hewitt start her career?”

- “How did Jennifer Love Hewitt start her career?”

- “Where did Jennifer Love Hewitt start her career?”

- “What was the first show that Jennifer Love Hewitt played in?”

- “What was the first television channel that Jennifer Love Hewitt appeared on?”

- “Did Jennifer Love Hewitt appear in commercials?”

- “Which type of commercials did Jennifer Love Hewitt appear in as a child?”

- “Did Jennifer Love Hewitt appear on national TV as a child?”

These are some of the semantic questions that can be generated from a single definition sentence for the given entity. So, if you want to win a featured snippet, appear in a Knowledge Panel as a source, or turn your brand from a string into an entity, you should give consistent, comprehensive, and semantic answers within a structured content network that has a logical hierarchy and contextual links for the Search Engine’s perception algorithms.

The entity description gives the most important information about the entity in a single sentence, and we can produce more than 10 questions from it. If you want to become an authority on the given entity, you should answer these questions and many more in a semantic structure. I assume you may also notice that these manually generated questions are similar to the ones generated by the Search Engine itself.
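The questions above were written by hand, but the first step can be approximated in code. The sketch below is a hypothetical helper (`definition_questions` is not part of Advertools or spaCy) that assumes the first sentence of an entity description follows the "<name> is a/an <role>, <role> and <role>." pattern and turns each coordinated role into a yes/no question. It relies on regular expressions only, so it is a rough approximation of what a dependency parse would give.

```python
import re


def definition_questions(sentence):
    """Turn a definitional sentence such as
    "X is an American actress, producer, and singer." into yes/no questions.

    Hypothetical, regex-based sketch: it only handles the
    "<subject> is a/an <role>, <role> and <role>." pattern.
    """
    match = re.match(r"(?P<subject>.+?) is (?:an|a) (?P<predicate>.+?)\.?$",
                     sentence.strip())
    if match is None:
        return []  # the sentence does not follow the definitional pattern
    subject = match.group("subject")
    # Split the predicate on commas and on "and" to get each coordinated role.
    parts = re.split(r",\s*(?:and\s+)?|\s+and\s+", match.group("predicate"))
    questions = []
    for part in (p.strip() for p in parts):
        if not part:
            continue
        # Naive article choice based on the first letter only.
        article = "an" if part[0].lower() in "aeiou" else "a"
        questions.append(f"Is {subject} {article} {part}?")
    return questions


first_sentence = "Jennifer Love Hewitt is an American actress, producer, and singer."
for question in definition_questions(first_sentence):
    print(question)
```

The article choice ("a" or "an") only looks at the first letter, so words like "hour" or "university" would get the wrong article; a version built on spaCy's dependency labels would be more robust.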

How to Use Python and NLP for Understanding Semantic Structure of Entity Definitions in terms of Content Marketing?

As mentioned earlier, all of the sentences written for entity descriptions follow the same pattern, and you can verify this with NLP in Python. Below, you will see an Information Extraction and Part-of-speech example for these sentences.

To visualize the sentence pattern in the Search Engine's Knowledge Base, I will use spaCy and displaCy, so you can see what the Search Engine expects from a clear and descriptive entity definition in content.

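```python
import spacy
from advertools import knowledge_graph

# key: your Google Knowledge Graph Search API key
love_entities = knowledge_graph(key=key, query='Love')

nlp = spacy.load("en_core_web_sm")

# Index 3 held the "Jennifer Love Hewitt" entity in this result set at the time of writing
doc = nlp(love_entities['result.detailedDescription.articleBody'][3])

for token in doc:
    print(token.text, token.lemma_, token.pos_, token.tag_, token.dep_,
          token.shape_, token.is_alpha, token.is_stop)
```

Let me explain the code from spaCy here.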

- In the first line, we have imported the spaCy Python package for Natural Language Processing (NLP).

- We have created a new variable, “love_entities”, with Advertools’ “knowledge_graph()” function.

- We have assigned the trained English language model for spaCy to the “nlp” variable via the “spacy.load()” method.

- We have created a doc object from the description of our “Jennifer Love Hewitt” entity, taken from the output of Advertools’ “knowledge_graph()” function via Google’s Knowledge Graph Search API.

- In the last lines, we have started a for loop to print the “part-of-speech” information of every token in our sentence.

There are other language models in spaCy, such as “nl_core_news_sm” for Dutch and “es_core_news_sm” for Spanish. At the time of writing, trained spaCy models were available for 15 languages.

You may see the result below.

OUTPUT>>>

```
Jennifer Jennifer PROPN NNP compound Xxxxx True False
Love Love PROPN NNP compound Xxxx True False
Hewitt Hewitt PROPN NNP nsubj Xxxxx True False
is be AUX VBZ ROOT xx True True
an an DET DT det xx True True
American american ADJ JJ amod Xxxxx True False
actress actress NOUN NN attr xxxx True False
, , PUNCT , punct , False False
producer producer NOUN NN appos xxxx True False
and and CCONJ CC cc xxx True True
singer singer NOUN NN conj xxxx True False
. . PUNCT . punct . False False
Hewitt Hewitt PROPN NNP nsubj Xxxxx True False
began begin VERB VBD ROOT xxxx True False
her -PRON- DET PRP$ poss xxx True True
career career NOUN NN dobj xxxx True False
as as SCONJ IN prep xx True True
a a DET DT det x True True
child child NOUN NN compound xxxx True False
actress actress NOUN NN pobj xxxx True False
and and CCONJ CC cc xxx True True
singer singer NOUN NN conj xxxx True False
, , PUNCT , punct , False False
appearing appear VERB VBG advcl xxxx True False
in in ADP IN prep xx True True
national national ADJ JJ amod xxxx True False
television television NOUN NN compound xxxx True False
commercials commercial NOUN NNS pobj xxxx True False
before before ADP IN prep xxxx True True
joining join VERB VBG pcomp xxxx True False
the the DET DT det xxx True True
cast cast NOUN NN dobj xxxx True False
of of ADP IN prep xx True True
the the DET DT det xxx True True
Disney Disney PROPN NNP compound Xxxxx True False
Channel Channel PROPN NNP compound Xxxxx True False
series series NOUN NN pobj xxxx True False
Kids Kids PROPN NNP compound Xxxx True False
Incorporated Incorporated PROPN NNP appos Xxxxx True False
. . PUNCT . punct . False False
```

If you want a more organized result, you can use the “termcolor” package and string formatting. Following a suggestion from Elias Dabbas, I have prepared the “for loop” and “f-string” code block below for a better visualization of the results.

```python
import spacy
from termcolor import colored

nlp = spacy.load("en_core_web_sm")
doc = nlp(love_entities['result.detailedDescription.articleBody'][3])

texts = [token.text for token in doc]
lemmas = [token.lemma_ for token in doc]
poses = [token.pos_ for token in doc]
tags = [token.tag_ for token in doc]
deps = [token.dep_ for token in doc]
shapes = [token.shape_ for token in doc]
is_alphas = [token.is_alpha for token in doc]
is_stops = [token.is_stop for token in doc]

# f'{variable:<12}' writes the value left-aligned in a 12-character-wide field,
# which keeps the printed columns aligned.
# Booleans are converted with str() first: formatting a bool with a width
# specifier (e.g. f'{True:<8}') prints 1/0 instead of True/False.
for text, lemma, pos, tag, dep, shape, is_alpha, is_stop in zip(
        texts, lemmas, poses, tags, deps, shapes, is_alphas, is_stops):
    print(colored(f'{text:<12}', 'green'),
          colored(f'Lemmatized => {lemma:<12}', 'red'),
          colored(f'Tag => {tag:<7}', 'blue'),
          colored(f'POS => {pos:<8}', 'cyan'),
          colored(f'Dependency => {dep:<8}', 'magenta'),
          colored(f'Shape => {shape:>5}', on_color='on_grey', attrs=['bold', 'blink']),
          colored(f'Is Alphabetic => {str(is_alpha):<8}', 'red', on_color='on_yellow'),
          colored(f'Is stop word => {str(is_stop):>5}', 'yellow', on_color='on_blue'))
```

I won't explain “part-of-speech” tagging in detail here since it would take more time; to learn more about it, you may read the “Information Extraction with Python and Spacy” guideline. Simply put, it identifies every word’s role, function, and grammatical form in the sentence. For instance, if you check the entity descriptions in Google's Knowledge Graph, you will see that they have an excellent grammatical structure with well-formed “nouns”, “adjectives”, “verbs”, “punctuation”, etc.

Since this output is visually complicated, let me show you another example below.

```python
from spacy import displacy

displacy.serve(doc, style="dep")
```

The “displacy.serve()” call above shows the dependency relations between the words of an entity description.

You can see which words are parsed as compounds and which word modifies which. Since this visualization is still not simple enough, let me show you a more simplified version.

```python
spacy.displacy.serve(doc, style="ent")
```

OUTPUT>>>

```
c:\python38\lib\runpy.py:194: UserWarning: [W011] It looks like you're calling displacy.serve from within a Jupyter notebook or a similar environment. This likely means you're already running a local web server, so there's no need to make displaCy start another one. Instead, you should be able to replace displacy.serve with displacy.render to show the visualization.
  return _run_code(code, main_globals, None,
```

This single line of code visualizes Named Entity Recognition, so you can clearly see the entities in a sentence. As the warning says, in a Jupyter notebook you can replace “displacy.serve” with “displacy.render”.

So, from the entity descriptions in Google’s Knowledge Graph, we can easily pick out all related entities along with their attributes, thanks to the clear sentence patterns. To create an authoritative, conceptually hierarchical semantic content structure, following these sentence patterns is important in terms of Semantic Search Engine Optimization.

Advertools’ Knowledge Graph function can be used to build an automated “sentence pattern” and “sentence structure” checking engine with a few simple add-ons. (Maybe in the future, in another guideline.)

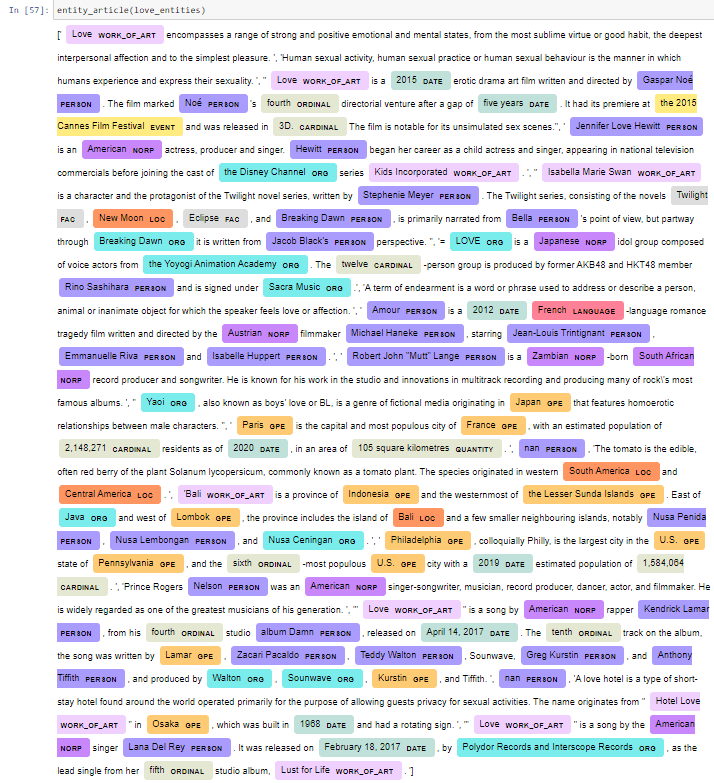

To show the clear sentence patterns and features from Google’s Knowledge Graph, you can use a small function like the one below.

```python
import spacy

# Load the language model once, outside the function, since it does not
# need to be reloaded on every call.
nlp = spacy.load("en_core_web_sm")


def entity_article(kg_df):
    # Join all detailed descriptions into one text, skipping missing (NaN) values
    descriptions = kg_df['result.detailedDescription.articleBody'].dropna().astype(str)
    doc = nlp(" ".join(descriptions))
    # Highlight the named entities found in the descriptions
    spacy.displacy.render(doc, style="ent")


entity_article(love_entities)
```

- We have joined all values of the “result.detailedDescription.articleBody” column of our Advertools output into a single string, skipping the missing (NaN) descriptions.

- We have loaded the English language model with spaCy’s “spacy.load()” method outside the function, so it is loaded only once.

- We have passed the combined text to “nlp” and rendered the named entities with displaCy.

- We have called our function with our prebuilt “love_entities” variable.

You may see the result below.

If you want to separate the results of different entities while running NLP on their descriptions, you can use the code block below, prepared by Elias Dabbas.

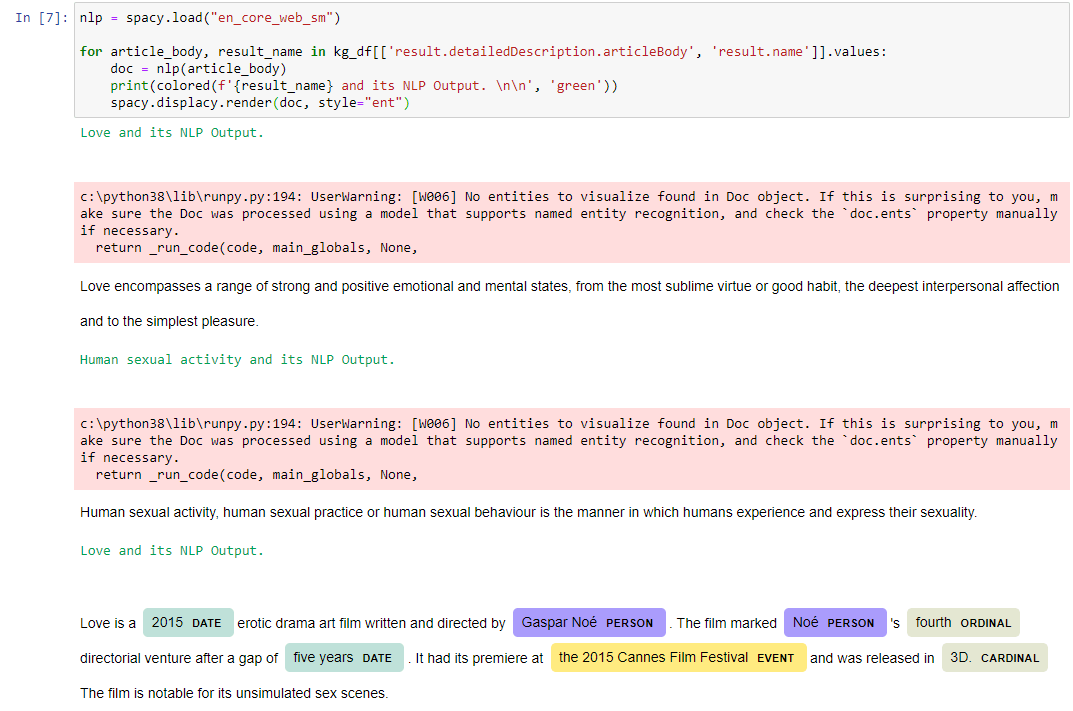

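```python
from termcolor import colored

for article_body, result_name in kg_df[['result.detailedDescription.articleBody', 'result.name']].values:
    # Skip entities without a detailed description (stored as NaN by pandas)
    if not isinstance(article_body, str):
        continue
    doc = nlp(article_body)
    print(colored(f'{result_name} and its NLP Output. \n\n', 'green'))
    spacy.displacy.render(doc, style="ent")
```

Note: Looping over entity descriptions can sometimes raise an error. It might be due to the splitting of the corpus; another likely cause is an entity without a detailed description, since pandas stores the missing value as NaN (a float) and spaCy only accepts text. That is why the loop above skips non-string values.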

Thanks to Natural Language Processing, Advertools, and Google’s Knowledge Graph API, the questions below can simply be answered.

- What type of sentences does Google use for defining an entity?

- What are the most related entities for an entity?

- What are the attributes of an entity in an entity description?

- Which questions can be answered from an entity description?

- Which points and angles are most important for an entity’s profile?

- What Semantic and Hierarchic Structure can be used to create a content network that can comply with Search Engines’ Entity-Map?

We can also improve this analysis by putting all of these results into Pandas data frames and checking which entity has which attributes, which words are compounds, which related entities appear in the entity descriptions, which sentence structure is used for which entity type, etc.
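As a starting point for that, the hypothetical helpers below (`token_table` and `compound_modifiers` are illustrative names, not Advertools or spaCy functions) collect the token attributes printed earlier into a pandas DataFrame, one row per token, and then list the compound words together with the head noun they modify. They assume `doc` is a spaCy `Doc`, whose tokens expose `text`, `lemma_`, `pos_`, `tag_`, `dep_`, `head`, and `is_stop`.

```python
import pandas as pd


def token_table(doc, entity_name=None):
    """Collect spaCy token attributes into a DataFrame, one row per token.

    `entity_name` is an optional label so tables from several entity
    descriptions can be concatenated and compared later.
    """
    rows = [
        {
            "entity": entity_name,
            "text": token.text,
            "lemma": token.lemma_,
            "pos": token.pos_,
            "tag": token.tag_,
            "dep": token.dep_,
            "head": token.head.text,  # the word this token depends on
            "is_stop": token.is_stop,
        }
        for token in doc
    ]
    return pd.DataFrame(rows)


def compound_modifiers(table):
    """Return the compound words and the head noun each one modifies."""
    mask = table["dep"] == "compound"
    return table.loc[mask, ["entity", "text", "head"]].reset_index(drop=True)
```

For example, `pd.concat([token_table(nlp(body), name) for body, name in kg_df[['result.detailedDescription.articleBody', 'result.name']].values if isinstance(body, str)], ignore_index=True)` would build one table for all entity descriptions, skipping entities without a description; for the Jennifer Love Hewitt sentence, `compound_modifiers` would pair “Disney” and “Channel” with “series”.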

Before finishing this section, you can also show the Entity Descriptions, Entity Attributes, and Entity Relations with the “Information Extraction Visualization” below, to see the most important definitive points of an entity according to Google’s perception of reality.

Note: All of the functions (“getSentences”, “processSentence”, “printGraph”) below are from the “Information Extraction and Visualization via Python” article.

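```python
import spacy

# getSentences, processSentence and printGraph are defined in the
# "Information Extraction and Visualization via Python" article.
if __name__ == "__main__":
    text = str(love_entities['result.detailedDescription.articleBody'][3])
    sentences = getSentences(text)
    nlp_model = spacy.load('en_core_web_sm')
    triples = []
    print(text)
    for sentence in sentences:
        triples.append(processSentence(sentence))
    printGraph(triples)
```

You may see the output below: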

```
Jennifer Love Hewitt is an American actress, producer and singer. Hewitt began her career as a child actress and singer, appearing in national television commercials before joining the cast of the Disney Channel series Kids Incorporated.

Jennifer -> compound
Love -> compound
Hewitt -> nsubj
is -> ROOT
an -> det
American -> amod
actress -> attr
, -> punct
producer -> appos
and -> cc
singer -> conj
. -> punct
Hewitt , be american actress ,

Hewitt -> nsubj
began -> ROOT
her -> poss
career -> dobj
as -> prep
a -> det
child -> compound
actress -> pobj
and -> cc
singer -> conj
, -> punct
appearing -> advcl
in -> prep
national -> amod
television -> compound
commercials -> pobj
before -> prep
joining -> pcomp
the -> det
cast -> dobj
of -> prep
the -> det
Disney -> compound
Channel -> compound
series -> pobj
Kids -> compound
Incorporated -> appos
. -> punct
Hewitt , begin national , career actress commercials cast series
```

What are the Relevance and Confidence Scores in Google Knowledge Graph API Search Results?

Relevance and Confidence Scores in the Google Knowledge Graph represent how relevant the given query (string) is to the returned entity for a specific search intent. Confidence Scores are similar to relevance: they express the Search Engine's confidence in the relation between the given entity and the query.

For instance, relevance scores can be shared among the entities in a news story about the US economy. If a news article mentions 20 different politicians, the relevance score will be shared among these entities, but depending on the coverage, some names will have a higher relevance score because they are mentioned more often and the story is more about them, their attributes, and facts. Confidence scores are determined by the entity type and the probability that the entity's identity is correct. Even if the relevance score is high, when the entity type, attributes, or entity relations are not clear to the algorithms, the content can’t be clearly connected to those entities. That’s why Relevance and Confidence Scores are important in NLP, and “resultScore” is a numeric representation of these two scores.
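To compare entities within the same query, one option is to turn the raw `resultScore` values into shares of the total for that query, mirroring the "shared relevance" idea above. This is a minimal sketch under assumptions: the API returns `resultScore` without a documented fixed scale, so the share is only a convenience for comparing the results of one query, not a score Google reports. The function name `score_share` and the `query` column name are hypothetical; point `query_col` at whichever column holds the query string in your data frame.

```python
import pandas as pd


def score_share(df, query_col="query", score_col="resultScore"):
    """Add each result's share of the total resultScore within its query.

    Assumes one row per Knowledge Graph result, with the query string in
    `query_col` and the API's resultScore in `score_col`.
    """
    out = df.copy()
    # Sum of scores per query, broadcast back to every row of that query
    totals = out.groupby(query_col)[score_col].transform("sum")
    out["score_share"] = out[score_col] / totals
    # Highest share first within each query
    return out.sort_values([query_col, "score_share"], ascending=[True, False])
```

A share close to 1 means one entity dominates the query, while similar shares suggest the string is ambiguous across several entities.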

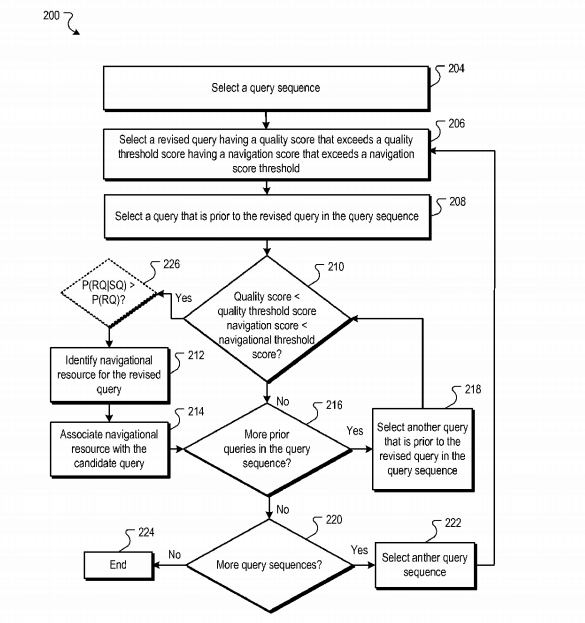

In this section, we also need to pay attention to Search Intents, Sub-intents, Micro-intents, Canonical Queries, and Query Rewriting. To keep the article on track, I will give short definitions of these theoretical Search Engine terms.

- A Canonical Query is the canonical version of different query variations; it can also be called a “representative query”.

- Sub-intents are the second, third, fourth, and further intents that follow the first and most dominant intent of the query.

- Micro-intents sit between the sub-intents. The content for them is often less satisfying, or exact and valid answers are hard to find; usually, forums and question-answer sites cover these queries.

- Query Rewriting is the process of changing the query in the search box to give better results. The query doesn’t change “visually”, but Google may merge or vary the query given by the user to provide better results.

Andrei Broder, in his taxonomy of search paper, described the sub-intents for a given query as below in 2006.

- Navigational – 15%
- Transactional – 22%
  - Obtain – 8%
  - Interact – 6%
  - Entertain – 4%
  - Download – 4%
- Informational – 63%
  - List – 3%
  - Locate – 24%
  - Advice – 2%
  - Undirected – 31%
  - Directed – 3%
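The sub-intent percentages should add up to their parent intents, and the three main intents to 100%. A quick way to check the figures above is to store them in a nested dictionary; the structure and the `check_taxonomy` helper are only an illustration.

```python
# Percentages of queries per intent and sub-intent, as listed above.
taxonomy = {
    "Navigational": (15, {}),
    "Transactional": (22, {"Obtain": 8, "Interact": 6, "Entertain": 4, "Download": 4}),
    "Informational": (63, {"List": 3, "Locate": 24, "Advice": 2,
                           "Undirected": 31, "Directed": 3}),
}


def check_taxonomy(taxonomy):
    """Return {intent: (stated share, sum of sub-intents)} for every mismatch."""
    mismatches = {}
    for intent, (share, sub_intents) in taxonomy.items():
        subtotal = sum(sub_intents.values())
        if sub_intents and subtotal != share:
            mismatches[intent] = (share, subtotal)
    return mismatches


total = sum(share for share, _ in taxonomy.values())
print(total)                     # 100
print(check_taxonomy(taxonomy))  # {}
```

Both checks pass, so the listed sub-intents fully account for the Transactional and Informational shares.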

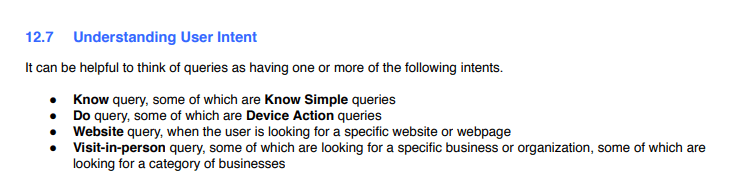

In 2006, this categorization by Andrei Broder tried to describe the sub-intents and the navigational routes of users between web documents. In the Google Quality Rater Guidelines, we also have four basic query types according to search intent.

Search Intent Classification according to the Google Quality Rater Guidelines is below.

- Know queries are informational queries.

- Do queries are action queries.

- Website queries are source-seeking queries.

- Visit-in-person queries are local queries.
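To make the four query types more concrete, here is a deliberately naive, keyword-based sketch. The trigger words and the `rater_intent` function are hypothetical: the Quality Rater Guidelines describe these types for human raters and do not define keyword rules, and Google's own classification relies on far richer signals.

```python
import re

# Hypothetical trigger words for each query type; anything else defaults to "Know".
VISIT_HINTS = ("near me", "nearby", "open now", "directions")
WEBSITE_HINTS = ("login", "log in", "official site", "website")
DO_HINTS = ("buy", "download", "order", "install", "book", "watch")


def _contains(query, phrases):
    """True if any phrase appears in the query as a whole word or phrase."""
    return any(re.search(rf"\b{re.escape(p)}\b", query) for p in phrases)


def rater_intent(query, site_names=()):
    """Assign Know / Do / Website / Visit-in-person with naive keyword rules."""
    q = query.lower()
    if _contains(q, VISIT_HINTS):
        return "Visit-in-person"
    if _contains(q, WEBSITE_HINTS) or _contains(q, [s.lower() for s in site_names]):
        return "Website"
    if _contains(q, DO_HINTS):
        return "Do"
    return "Know"


for query in ["jennifer love hewitt age", "buy ghost whisperer dvd", "imdb login"]:
    print(query, "->", rater_intent(query))
```

The word-boundary matching (`\b`) keeps a query such as "facebook" from being read as a "book" (Do) query, which a plain substring check would get wrong.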

Also, as a note, Website Queries are similar to what Andrei Broder described in 2006: some users navigate (teleport) to the websites they trust for the information they seek. Danny Sullivan also said that the most important part of a “URL” is the “domain name”, since people care most about the information source. We also have some studies showing that if a brand removes the “brand name” from its title tags, it loses some CTR, and Google sometimes even adds the brand name to title tags without asking the site owners.

In reality, the Search Engine performs much deeper query classification and search intent analysis. Google Trends’ “query categorization” is proof of that. In the PyTrend Guideline of HolisticSEO.Digital, you can read all of the query categories in Google Trends to see which search intents and types carry more weight than others. Below are some examples that differentiate the same query according to sub-intents and search types.

The movie-related query categories in Google Trends and their category IDs are below.

- Movies: 34
  - Action & Adventure Films: 1097
  - Martial Arts Films: 1101
  - Superhero Films: 1100
  - Western Films: 1099
  - Animated Films: 1104
  - Bollywood & South Asian Film: 360
  - Classic Films: 1102
  - Silent Films: 1098
  - Comedy Films: 1095
  - Cult & Indie Films: 1103
  - Documentary Films: 1072
  - Drama Films: 1094
  - DVD & Video Shopping: 210
  - DVD & Video Rentals: 1145
  - Family Films: 1291
  - Film & TV Awards: 1108
  - Film Festivals: 1086
  - Horror Films: 615
  - Movie Memorabilia: 213
  - Movie Reference: 1106
  - Movie Reviews & Previews: 1107
  - Musical Films: 1105
  - Romance Films: 1310
  - Science Fiction & Fantasy Films: 616
  - Thriller, Crime & Mystery Films: 1096

All of these categories can be used in PyTrend for a better search behavior analysis. If you search for a specific movie, it might show different levels of search trend and demand depending on the query category. You can see all of the “Google Trends query categories” at the link below:

https://trends.google.com/trends/api/explore/pickers/category?hl=en-US&tz=240
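The category tree behind that URL is nested: each node has a `name`, an `id`, and optionally a list of `children`. The sketch below flattens it so that category IDs can be looked up by name. pytrends provides `TrendReq.categories()` for fetching this tree; the two helper functions are illustrative, and the node structure is an assumption based on the endpoint's JSON, so check it against the response you receive.

```python
def flatten_categories(node, path=()):
    """Yield (category_id, "Parent > Child") pairs for every node in the tree."""
    current = path + (node["name"],)
    yield node["id"], " > ".join(current)
    for child in node.get("children", []):
        yield from flatten_categories(child, current)


def find_category(tree, keyword):
    """Return {category_id: path} for categories whose path contains `keyword`."""
    keyword = keyword.lower()
    return {cat_id: cat_path
            for cat_id, cat_path in flatten_categories(tree)
            if keyword in cat_path.lower()}


# Hypothetical usage (requires network access):
# from pytrends.request import TrendReq
# tree = TrendReq(hl="en-US", tz=360).categories()
# find_category(tree, "films")
```

Keeping the full "Parent > Child" path makes it easy to see, for example, that Horror Films (615) sits under Movies (34).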

Below is an example showing how these query categories affect Google’s perception of the same entity from different angles.

pytrends = TrendReq(hl="en-US", tz=360)

query = ['Jennifer Love Hewitt']

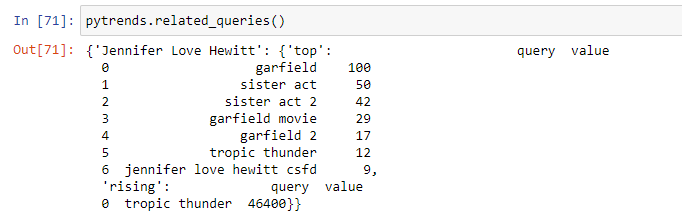

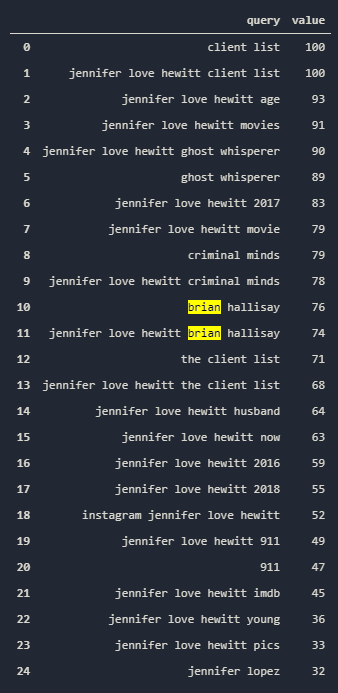

pytrends.build_payload(kw_list=query, cat=34, timeframe="today 12-m")You may see the result below, “cat=34” means that shows me only the results for “movies”.

pytrends.related_queries()

pytrends = TrendReq(hl="en-US", tz=360)

query = ['Jennifer Love Hewitt']

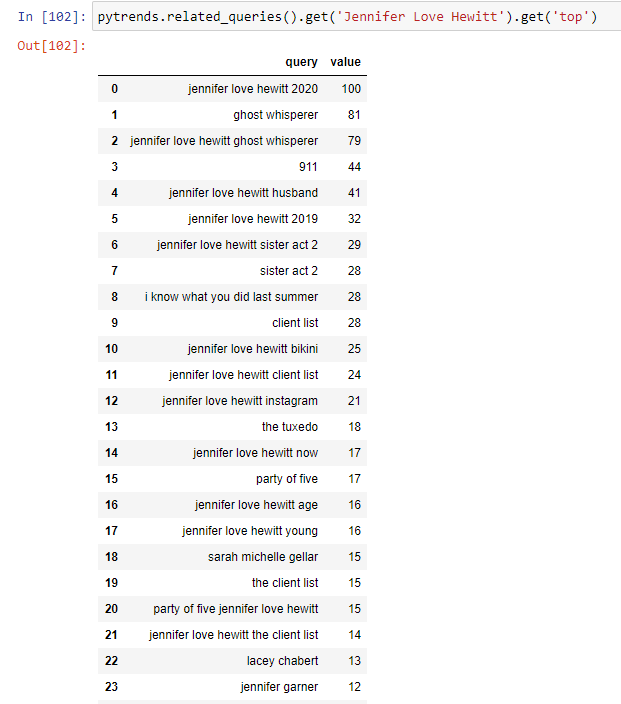

pytrends.build_payload(kw_list=query, cat=184, timeframe="today 12-m")In this example, we are seeking queries that are related to the “Celebrity News”, now the character of search intent will change tremendously.

pytrends.related_queries().get('Jennifer Love Hewitt').get('top')In this example, we have searched for “Celebrity News”, so the queries will be more variated.

You can see the difference between the query categories. From this angle, we can say that Google works with many Knowledge Domains, Query Characters, and Search Intent Variations.
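To make the difference between categories measurable, the related queries of the two payloads can be compared as sets. The sketch assumes that `related_queries()[keyword]['top']` is a DataFrame with a `query` column, as returned by pytrends, and that it is not `None` (pytrends may return `None` when Google Trends has no data for the category); the helper name is hypothetical.

```python
def compare_related_queries(queries_a, queries_b):
    """Split two collections of related queries into shared and category-specific sets."""
    a = {q.strip().lower() for q in queries_a}
    b = {q.strip().lower() for q in queries_b}
    return {
        "shared": sorted(a & b),
        "only_a": sorted(a - b),
        "only_b": sorted(b - a),
    }


# Hypothetical usage with the two payloads above:
# pytrends.build_payload(kw_list=query, cat=34, timeframe="today 12-m")
# movies = pytrends.related_queries()['Jennifer Love Hewitt']['top']
# pytrends.build_payload(kw_list=query, cat=184, timeframe="today 12-m")
# celebrity = pytrends.related_queries()['Jennifer Love Hewitt']['top']
# compare_related_queries(movies['query'], celebrity['query'])
```

The queries that appear only in one category are the ones that reveal the sub-intent that category represents.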

So, all of this information fits together in terms of Semantic Search Engine Optimization and the Entity-based Search Ecosystem. If a web entity is an authority on a given topic, its relevance and confidence scores for the given query variations increase, so it can be seen as an expert on the subject and gain better search visibility across Google surfaces (blue links, featured snippets, people also ask questions, knowledge panels, local packs, image and video search results, news boxes, answer boxes, and more).

The relevance score expresses how relevant the query is to the given entity, while the confidence score expresses how confident the Search Engine is about that relevance. Creating an authoritative web entity or brand for the given entities (products, events, services, cars, or movies), and building a comprehensive topical graph and conceptual hierarchy that covers all angles of the topic for every type of query, with query-answer trees, a satisfying page layout and user experience, and useful services/functions, are the keys to becoming the most relevant and high-quality source for a specific entity.

How Does Google Use the Entity Relations for Understanding the Authority of the Web Sites for Given Context?

Neural Matching and Hummingbird Algorithms are closely related to these terms. Google is a “user-centric contextual Search Engine”, which means that it uses searchers’ opinions and expectations to understand the context of words and find better-matching search results. In this context, Google may see the different angles and mixed intents of a query, a search intent, a web document, and a matching entity attribute.

Let’s continue with our “Jennifer Love Hewitt” example.

knowledge_graph(key=key, query='Jennifer Love Hewitt')

OUTPUT>>>

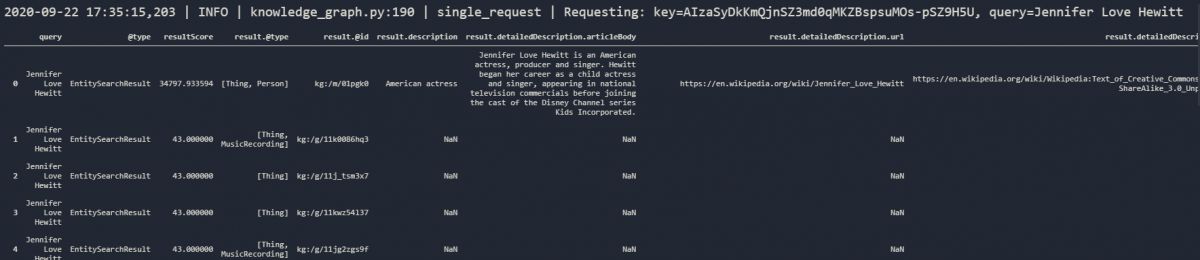

2020-09-22 17:35:15,203 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=AIzaSyDkKmQjnSZ3md0qMKZBspsuMOs-pSZ9H5U, query=Jennifer Love HewittYou may see the entity results for the given entity below.

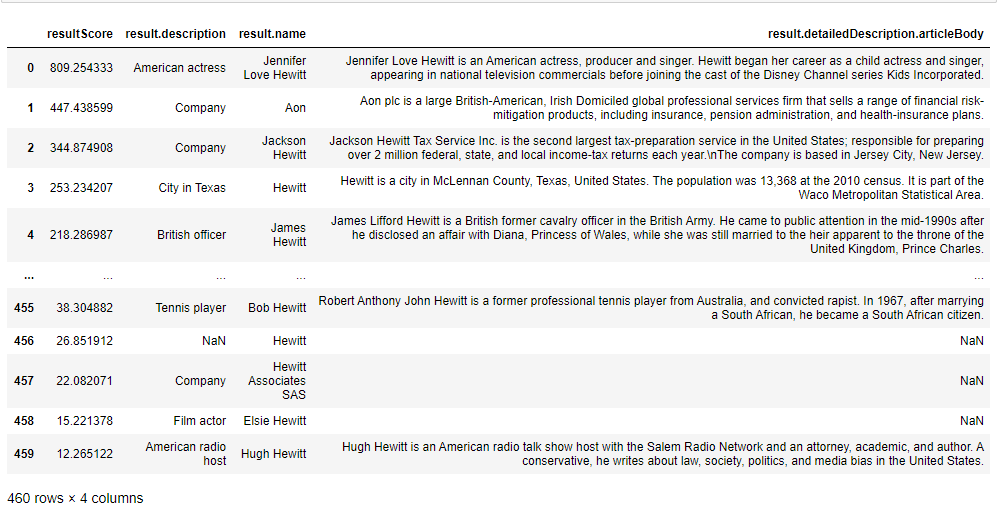

At a glance, we notice there are many results for the given entity. The first result, with the highest “relevance” and “confidence” score, is of course Jennifer Love Hewitt herself; the other results are her sub-entities, such as music records, TV episodes, or books. The number of results and their scores also show how important an entity is for Google. For Jennifer Love Hewitt, we may say that she is important for the Knowledge Graph API, but her Knowledge Graph results also have many missing points. This shows that Google hasn't filled in all of the missing facts about the entity.

Some of the reasons for a missing fact for a given entity are listed below.

- There is not enough search demand or search trend for the entity.

- There is not enough news about the entity.

- There is not enough information on the entity in different online encyclopedias.

- There are not enough web documents for the given entity.

- There are not enough social media activities or historical data for the given entity.

Sometimes it is not only about the amount of information: the “trustworthiness” and “controversies” of an entity can also lead to missing facts. Or, even if the entity has a place in the Knowledge Base, its description, image, article, or definition can vary over time or be missing.

You can also check the number of results with the Pandas “shape” attribute to see the coverage of an entity. The “NaN” cells also show the importance of structured data for Search Engines.

```python
jlh.shape[0]
```

OUTPUT>>>

```
14
```

We have only 14 results. This doesn't mean that these 14 entities are the only relevant entities for Jennifer Love Hewitt (!). They are just the results that are relevant enough to the string “Jennifer Love Hewitt”. That’s why, for an entity profile analysis before starting to cover an entity, Advertools’ Knowledge Graph function should be used together with “PyTrend”. PyTrend can give the “relevant strings”, “relevant entities”, and “rising queries” for the given entity, while the Knowledge Graph API gives a deeper look at an important “phrase” with its corresponding entities, relevance and confidence scores, and Knowledge Base information such as entity descriptions, images, and definitions.
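Building on the row count, the share of missing values per column shows which facts are absent across the results, and the number of missing fields per entity shows which entities are the least complete. The sketch below uses pandas' `isna()`; the function names are hypothetical, and `result.name` is the name column used elsewhere in this article.

```python
import pandas as pd


def missing_fact_report(kg_df):
    """Fraction of results with a missing (NaN) value in each column, highest first."""
    return kg_df.isna().mean().sort_values(ascending=False)


def missing_fields_per_entity(kg_df, name_col="result.name"):
    """Number of missing fields for each entity, most incomplete entities first."""
    return kg_df.set_index(name_col).isna().sum(axis=1).sort_values(ascending=False)


# Usage with the data frame from above:
# missing_fact_report(jlh).head(10)
# missing_fields_per_entity(jlh)
```

Columns with a share close to 1, such as descriptions or images missing for most results, point to the facts that structured data and well-organized content could help fill.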

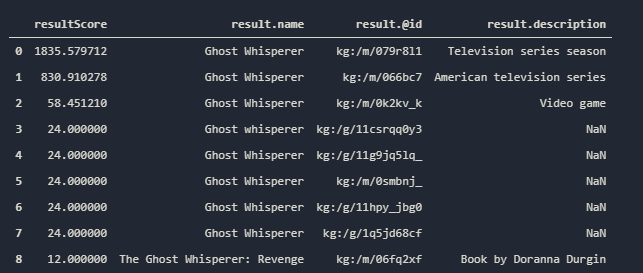

To prove the point, let’s choose another string that is relevant to Jennifer Love Hewitt: “Ghost Whisperer”, the series she starred in for five seasons.

```python
gw = knowledge_graph(key=key, query='Ghost Whisperer')
gw[['resultScore', 'result.name', 'result.@id', 'result.description']]
```

OUTPUT>>>

```
2020-09-22 17:53:23,264 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Ghost Whisperer
```

You may see the result data frame below for the given string of “Ghost Whisperer”.

We can see that Ghost Whisperer also exists in the Knowledge Base. Interestingly, a season of Ghost Whisperer named “Ghost Whisperer” has a higher relevance score than the series itself, which has the same name. A content publisher should therefore also pay attention to distinguishing these two entities in their articles.

We also have some video games and other types of entities without any description. When we check them, we see that they belong to the same TV series; they are actually “music records” for different episodes. Since the Knowledge Graph API only gives results for a string, let’s check the “related entities” for Jennifer Love Hewitt as below.

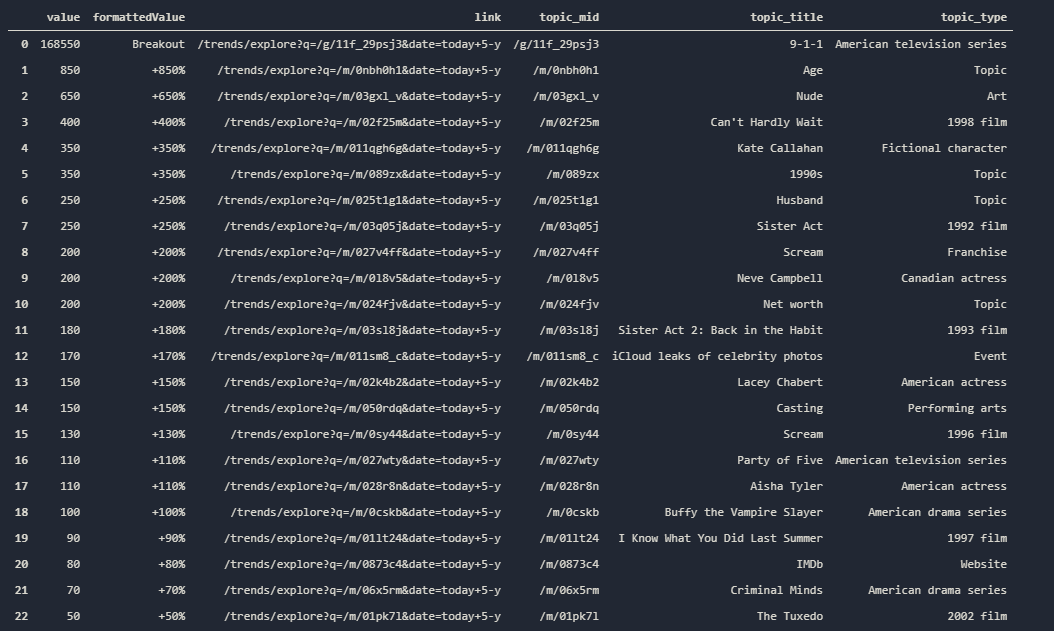

```python
# kw_list must be a list of keywords, even for a single keyword
pytrends.build_payload(['Jennifer Love Hewitt'], cat=0, timeframe="today 5-y")
jlh_entities = pytrends.related_topics()
jlh_entities.get('Jennifer Love Hewitt').get('rising')
```

- In the “build_payload()” call, we have chosen “cat=0”, which means “all categories”, since we want all related entities.

- We have requested data from the last 5 years; the longer the time period, the more consistent the data is for our purpose.

- We have called all the related entities (topics) with “related_topics()”.

- We have selected the data frame we want from the returned dictionary with the “get” method.

You may see the result below.

We see the entities related to Jennifer Love Hewitt in a data frame, with entity IDs, trend values, entity types, trend spikes over the years, and entity names. One should also know how to read this data frame. For instance, we see “Nude (art)”, “Age (Topic)”, and “Husband (Topic)” among the related entities. They are here because search engine users have similar search patterns for celebrities.

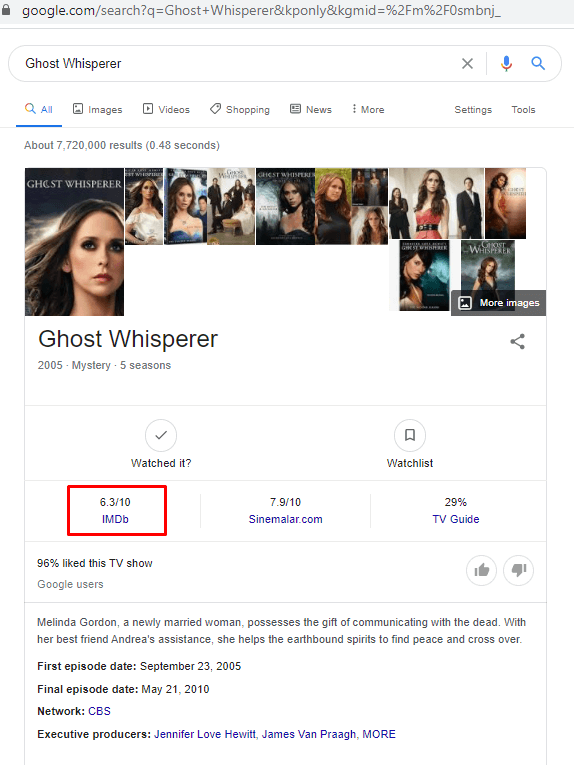

Since these words, and words that mean the same things, are searched a lot together with the entity, they have become related. IMDb is here for the same reason, but sometimes, even without a search behavior pattern connecting these entities, the Search Engine can connect them while crawling the web, thanks to web documents. For instance, if IMDb has a lot of data about the given entity, Google may relate these entities to each other, or it can show IMDb in the actress's Knowledge Panel as below.

We also have The Tuxedo, Criminal Minds, and I Know What You Did Last Summer, movies and shows that Jennifer played in. But we also have “Jennifer Lopez” and some other American actresses in the data frame, for different reasons. One is that some of these actresses played together with Jennifer Love Hewitt; the other is a “phrase-based” reason: they share the same “name” along with the same entity type, so the Search Engine relates them in a similar way. We have a similar situation for “related queries”.

The most related search terms with Jennifer Love Hewitt for the last 5 years are below.

```python
# kw_list must be a list of keywords, even for a single keyword
pytrends.build_payload(['Jennifer Love Hewitt'], cat=0, timeframe="today 5-y")
jlh_queries = pytrends.related_queries()
jlh_queries.get('Jennifer Love Hewitt').get('top')
```

You may see the result below.

We have the most searched queries for the given entity over the last 5 years. We see Jennifer Love Hewitt's latest TV series, “9-1-1”, in the data frame. Since it is a new series, it doesn't yet have a big place compared to the older queries. We also have “Brian Hallisay”, who is also in the “related entities” data frame, because he played in “The Client List” with Jennifer Love Hewitt and he is also her husband.

So, all the pieces fall into place. We have “husband” as a topic in the related entities because people searched a lot for the “{spouse} + {jennifer love hewitt}” query pattern, and we have Brian Hallisay in both the related entities and the related queries because these two entities are connected to each other in more than one way.

Now, before focusing on Advertools’ “knowledge_graph()” function again, we can also retrieve the latest rising queries for the given entity to understand how Google notices new entities.

```python
jlh_queries.get('Jennifer Love Hewitt').get('rising')
```

The result is below.

As you may notice above, “9-1-1” appears in almost every row; since it is Jennifer Love Hewitt's latest show, this is natural. We also see many historic queries such as “JLJ 90s”, “2019”, and “2020”, along with attribute queries such as “children”, “age”, and “social media accounts”.

These phrases show what people seek most for the given entity. An SEO can fill these missing points for the Search Engine in a semantic structure, so that the Search Engine can trust the content publisher on the given entity and fill its own Knowledge Base with these entities, entity relations, and attributes.

We have covered many SEO theories so far. Let’s use Advertools’ Knowledge Graph function together with PyTrend’s related entities (topics) function, so we can cover the whole related entity graph and understand the connections and the best possible semantic content structure and network for a web entity.

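```python
# related_topics() returns a dictionary: {keyword: {'top': DataFrame, 'rising': DataFrame}}.
# The 'top' table is used here; select ['rising'] instead for the rising topics.
jlh_graph_list = jlh_entities['Jennifer Love Hewitt']['top']['topic_title'].to_list()

results = []
for topic in jlh_graph_list:
    # One Knowledge Graph API request per related topic
    results.append(knowledge_graph(key=key, query=topic))

jlh_graph_list_ = pd.concat(results)
jlh_graph_list_.head(50)
```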

OUTPUT>>>

```text
2020-09-22 21:40:49,705 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Jennifer Love Hewitt
2020-09-22 21:40:50,073 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Ghost Whisperer
2020-09-22 21:40:50,931 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Casting
2020-09-22 21:40:51,277 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=The Client List
2020-09-22 21:40:52,132 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Criminal Minds
2020-09-22 21:40:53,019 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Age
2020-09-22 21:40:53,868 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Brian Hallisay
2020-09-22 21:40:54,737 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Husband
2020-09-22 21:40:55,074 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=9-1-1
2020-09-22 21:40:55,918 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=IMDb
2020-09-22 21:40:56,821 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Jennifer Lopez
2020-09-22 21:40:57,692 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=The Tuxedo
2020-09-22 21:40:58,539 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=I Know What You Did Last Summer
2020-09-22 21:40:59,371 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Sarah Michelle Gellar
2020-09-22 21:41:00,320 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Celebrity
2020-09-22 21:41:01,159 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Jennifer Aniston
2020-09-22 21:41:02,030 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Net worth
2020-09-22 21:41:02,902 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Bikini
2020-09-22 21:41:03,811 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Party of Five
2020-09-22 21:41:04,690 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Lacey Chabert
2020-09-22 21:41:05,014 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Toplessness
2020-09-22 21:41:05,349 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Jennifer Lawrence
2020-09-22 21:41:05,708 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Sister Act 2: Back in the Habit
2020-09-22 21:41:06,540 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Nude
2020-09-22 21:41:07,410 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Can't Hardly Wait
```

You may see the code block’s explanation below.

- In the first line, we select the “topic_title” column, because it includes the names of all related entities as strings.

- We assign the result to a variable called “jlh_graph_list”, using the “explode()” method to turn each list-like element of the column into its own row.

- We use the “to_list()” method to convert the column into an actual Python list.

- In the second line, we create an empty list named “a”.

- In the third line, we start a for loop. For every element of “jlh_graph_list”, the list we created in the first line, we call Advertools’ “knowledge_graph()” function and assign the resulting data frame to a new variable named “b”.

- We append every result of the loop to the “a” list.

- We concatenate all of the data frames in “a” with “pd.concat()” and assign the result to the “jlh_graph_list_” variable.

- We display the first 50 rows of the combined data frame, which includes the entities most related to Jennifer Love Hewitt (our subject entity) according to the Google Knowledge Graph Search API and Google’s Knowledge Base, along with Google Trends data.
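The loop above can also be wrapped in a small reusable function. The sketch below, a hypothetical `entities_for_topics()`, drops duplicate topic titles before making requests (each call uses API quota) and passes `keys=` to `pd.concat()` so that every row records which topic produced it. To keep it runnable offline, the API call is injected as a `fetch` argument; in practice you might pass `lambda q: knowledge_graph(key=key, query=q)`. The `fake_fetch` stub and its columns are made up for illustration.

```python
import pandas as pd


def entities_for_topics(topics, fetch):
    """Call `fetch(topic)` once per unique topic and stack the results.

    `fetch` must return a DataFrame; the topic becomes the outer index level.
    """
    unique_topics = list(dict.fromkeys(topics))  # de-duplicate, keep order
    frames = [fetch(topic) for topic in unique_topics]
    return pd.concat(frames, keys=unique_topics, names=["topic", "row"])


# Hypothetical stand-in for the Knowledge Graph Search API call
def fake_fetch(query):
    return pd.DataFrame({"result.name": [query], "resultScore": [len(query)]})


# explode() turns list-like cells into one row per element
topics = pd.Series([["Ghost Whisperer", "9-1-1"], ["9-1-1", "Party of Five"]])
titles = topics.explode().to_list()
combined = entities_for_topics(titles, fake_fetch)
print(combined)
```

Injecting the request function also makes it easy to add caching or a delay between calls without changing the loop itself.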

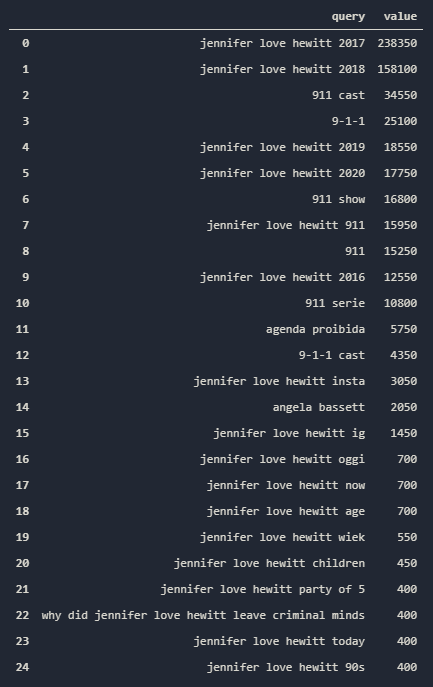

You may see the result of the code block below.

We have retrieved all of the related entities for Jennifer Love Hewitt and turned them into a data frame via Google Trends and Advertools. If you feel that Advertools’ “knowledge_graph()” function doesn’t return all of the related entities and a topic’s cornerstones for entity-based semantic content planning, keep in mind that it is based on the Google Knowledge Graph Search API. That API is not designed to return related entities; it returns the entity that best matches a given string, along with other, less relevant results.
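Because each request returns the best match plus several weaker candidates, one way to keep only the top entity per query is to sort by `resultScore` and keep the first row for each query. The sketch assumes the combined data frame has a `query` column identifying the input string (check the column names in your own output); the rows and scores below are hypothetical.

```python
import pandas as pd

# Hypothetical Knowledge Graph results: several candidates per query
results = pd.DataFrame({
    "query": ["Hewitt", "Hewitt", "Hewitt", "Ghost Whisperer", "Ghost Whisperer"],
    "result.name": ["Jennifer Love Hewitt", "Lleyton Hewitt", "Hewitt (surname)",
                    "Ghost Whisperer", "Ghost Whisperer (season 1)"],
    "resultScore": [1500.0, 900.0, 120.0, 2100.0, 300.0],
})

# Highest-scoring entity per query; the other rows are the less relevant results
best = (
    results.sort_values("resultScore", ascending=False)
    .drop_duplicates(subset="query", keep="first")
    .reset_index(drop=True)
)
print(best[["query", "result.name", "resultScore"]])
```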

To collect the related entities of a topic in one step, you may use the function below.

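```python
import pandas as pd
from pytrends.request import TrendReq
from advertools import knowledge_graph


def topical_entities(query, to_csv=True):
    # Uses the global `key` (your Knowledge Graph Search API key), as in the earlier examples
    trends = TrendReq(hl="en-US", tz=360)
    # Last 12 months of Google Trends data, restricted to category 184
    trends.build_payload(kw_list=[query], cat=184, timeframe="today 12-m")

    # related_topics() returns {query: {"top": DataFrame, "rising": DataFrame}}
    top_topics = trends.related_topics().get(query).get('top')
    topic_titles = top_topics['topic_title'].explode().to_list()

    # One Knowledge Graph Search API request per related topic title
    results = []
    for title in topic_titles:
        results.append(knowledge_graph(key=key, query=title))
    entities = pd.concat(results)

    if to_csv:
        entities.to_csv('ı.csv')
    return entities
```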

The “topical_entities()” function simply combines Advertools’ “knowledge_graph()” function with pytrends and pandas. Here is an example of its usage:

```python
query = 'Hewitt'
topical_entities(query, to_csv=True)
```

OUTPUT>>>

```text
2020-09-26 20:30:51,441 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:30:52,302 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:30:53,148 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:30:54,000 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:30:54,859 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:30:55,716 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:30:56,562 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:30:57,415 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:30:58,261 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:30:59,091 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:30:59,953 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:31:00,791 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:31:01,627 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:31:02,468 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:31:03,337 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:31:04,199 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:31:05,043 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:31:05,912 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:31:06,756 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:31:07,596 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:31:08,443 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:31:09,288 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
2020-09-26 20:31:10,129 | INFO | knowledge_graph.py:190 | single_request | Requesting: key=<YOUR_API_KEY>, query=Hewitt
```

Every request in this log shows “query=Hewitt”: the loop that produced it passed the original “query” to “knowledge_graph()” instead of each related topic title, so the same string was requested on every iteration.

We have saved the related entities for the “Hewitt” string to a CSV file. Let’s read it back with pandas’ “read_csv()” function.

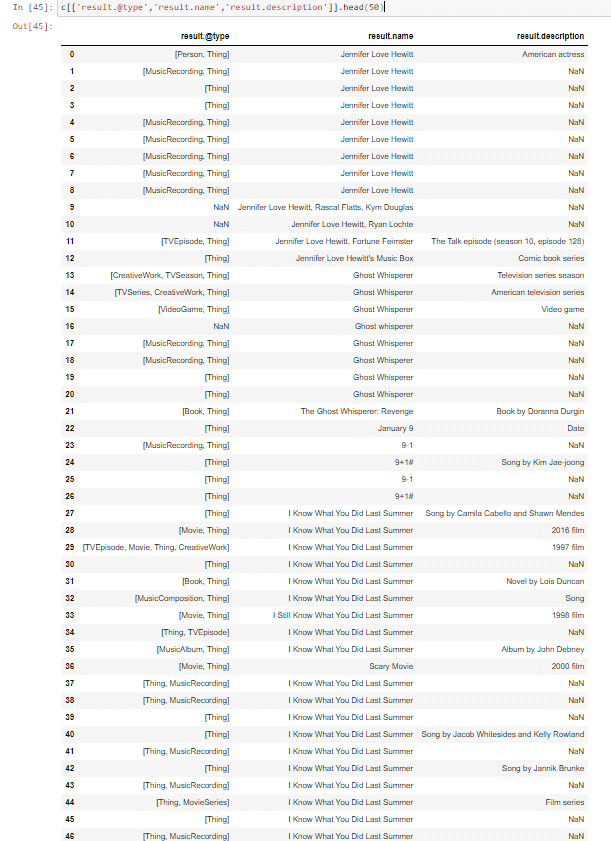

```python
ı = pd.read_csv('ı.csv')

ı[['resultScore', 'result.description', 'result.name', 'result.detailedDescription.articleBody']]
```

You may see the result below.
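One detail when saving and reloading: `DataFrame.to_csv()` writes the index as an unnamed first column by default, which `read_csv()` then loads as an extra `Unnamed: 0` column. The sketch below shows two ways to avoid that; it uses a temporary file and a tiny hypothetical data frame rather than the article’s `ı.csv`.

```python
import os
import tempfile

import pandas as pd

df = pd.DataFrame({"result.name": ["Jennifer Love Hewitt"], "resultScore": [1500.0]})

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "entities.csv")

    df.to_csv(path)                             # index written as the first column
    default = pd.read_csv(path)                 # gains an "Unnamed: 0" column
    restored = pd.read_csv(path, index_col=0)   # use the first column as the index

    df.to_csv(path, index=False)                # or don't write the index at all
    no_index = pd.read_csv(path)

print(default.columns.tolist())
print(restored.columns.tolist())
print(no_index.columns.tolist())
```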

There is much more to cover here, but to stay within the scope of this article, I will show one more custom Python SEO function for entity-based SEO. Imagine that you could show all of the information and key points, connections and relations, definitions, secondary features, and profiles of these entities in one diagram. I will use “networkx” for this. Since there are so many entities here, I don’t think it will give a clear picture, but it can still give some insight.

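```python
import pandas as pd
import spacy

# getSentences(), processSentence() and printGraph() are helper functions that
# are not shown here; define them before running this block.

c = pd.read_csv('../knowledge Graph API/i.csv')

# Detailed descriptions of the first 5 entities (missing descriptions are skipped)
a = c['result.detailedDescription.articleBody'][:5].dropna().to_list()

if __name__ == "__main__":
    text = " ".join(a)
    sentences = getSentences(text)
    nlp_model = spacy.load('en_core_web_sm')  # presumably used by processSentence()
    triples = []
    print(text)
    for sentence in sentences:
        triples.append(processSentence(sentence))
    printGraph(triples)
```

You may see the “insightful entity map” below.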

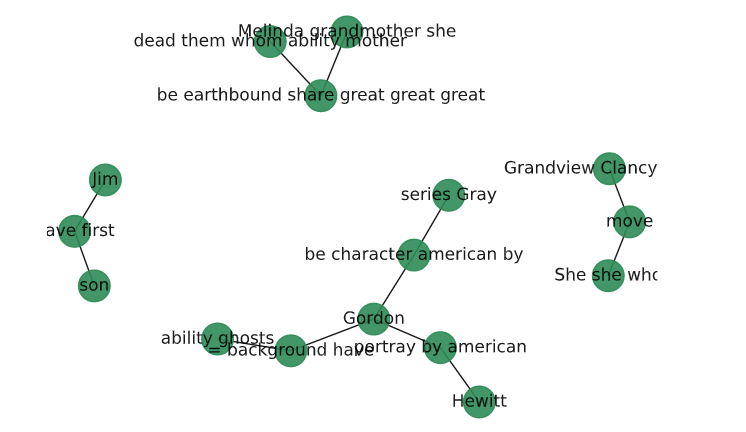

This is the knowledge graph of the first 5 entities in our data frame for Jennifer Love Hewitt. “Gordon” is the last name of “Melinda Gordon”, the character portrayed by Jennifer Love Hewitt. Grandview is the town where she moves to live with her fiancé, Jim Clancy. She inherited her ability from her grandmother and great-grandmother, and the series has been managed by “John Gordon”. Jim also has a son during the series. If the connections are not clear enough, change the “tags” you are using; in this example, we use the “ROOT”, “adj”, “attr”, “agent”, and “amod” tags from spaCy. You can find the full list of tags in the spaCy documentation, and you may use the same methodology for other connected entities.
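The helper functions above are not shown, but the idea behind their final step, turning (subject, relation, object) triples into a graph, can be sketched with NetworkX as follows. The triples are hypothetical, loosely based on the description above, and representing them as plain 3-tuples of strings is an assumption about the triple format. Drawing is left out so the block runs without matplotlib.

```python
import networkx as nx

# Hypothetical triples in (subject, relation, object) form
triples = [
    ("Melinda Gordon", "portrayed by", "Jennifer Love Hewitt"),
    ("Melinda Gordon", "lives in", "Grandview"),
    ("Ghost Whisperer", "features", "Melinda Gordon"),
]

graph = nx.DiGraph()
for subject, relation, obj in triples:
    # Each entity becomes a node; the relation is stored as an edge attribute
    graph.add_edge(subject, obj, relation=relation)

# Entities directly reachable from a node, with the connecting relation
for _, target, data in graph.out_edges("Melinda Gordon", data=True):
    print(f"Melinda Gordon --{data['relation']}--> {target}")

# To visualize (requires matplotlib): nx.draw(graph, with_labels=True)
```

Storing the relation on the edge, rather than as a separate node, keeps the graph small and lets you filter edges by relation type later.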

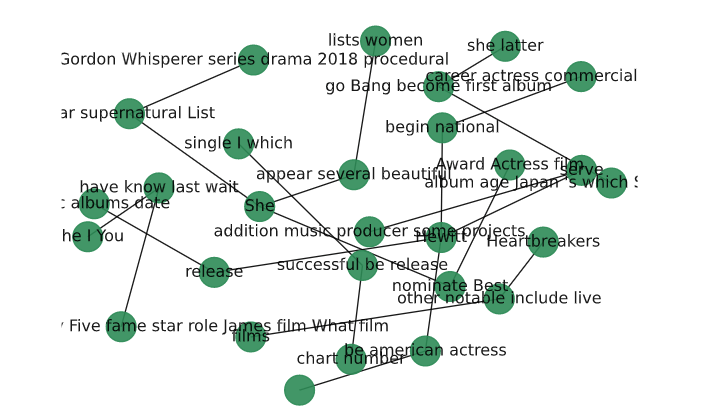

```python
import wikipedia

# First 5 lines of the page's plain-text content
entity_description = wikipedia.page('Jennifer Love Hewitt').content.split('\n')[:5]
```

I have imported the “wikipedia” Python package and taken the first 5 lines of the “Jennifer Love Hewitt” page’s content. The graph for this information is below.
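Splitting on line breaks returns lines (roughly paragraphs), not sentences. If you want sentences, one rough approach is to drop empty lines and split each remaining line after sentence-ending punctuation. The sketch below uses hypothetical sample text in place of `wikipedia.page('Jennifer Love Hewitt').content`, and its regular expression is a simplification that will mis-split abbreviations such as “Jr.”.

```python
import re

# Hypothetical sample text standing in for the page content
content = (
    "Jennifer Love Hewitt is an American actress. She starred in a TV series.\n"
    "\n"
    "She also released albums. Her latest show is 9-1-1.\n"
)

# Non-empty lines only
paragraphs = [p.strip() for p in content.split("\n") if p.strip()]

# Naive sentence split: break after ".", "!" or "?" followed by whitespace
sentences = [s for p in paragraphs for s in re.split(r"(?<=[.!?])\s+", p) if s]

first_five = sentences[:5]
print(len(paragraphs), len(sentences))
```

For real pages, a proper sentence segmenter (such as spaCy’s, which the article already uses) will handle abbreviations better than a regex.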

In the top-left corner, you will see “Gordon” and “Ghost Whisperer” connected with “drama”. You will also see Jennifer Love Hewitt’s albums and her “Music Producer” career in the knowledge graph. “Heartbreakers” is another of Jennifer Love Hewitt’s works, along with “Let’s Go Bang”.

If you include too many entities when creating a knowledge graph with NetworkX, you will get a result like the one below.

In NetworkX, you might use “pos = networkx.spring_layout(G, k=1, iterations=100)” to increase the distance between nodes, but since we have 460 entities and their descriptions in this example, the result still won’t be perfectly clear.
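A minimal sketch of those layout parameters on a toy graph: according to the NetworkX documentation, `k` is the optimal distance between nodes (defaulting to 1/sqrt(n) for n nodes), and increasing it moves nodes farther apart, while `seed` makes the layout reproducible between runs. The star graph below is only a stand-in for the 460-entity graph.

```python
import networkx as nx

# Toy stand-in for the entity graph: one hub entity linked to 20 others
G = nx.star_graph(20)

# k: optimal distance between nodes (default 1/sqrt(n)); larger k spreads nodes out
# iterations: more steps let the layout settle; seed: reproducible positions
pos = nx.spring_layout(G, k=1, iterations=100, seed=42)

# pos maps each node to an (x, y) array; with the default scale=1,
# the coordinates are rescaled into the [-1, 1] range
print(pos[0])

# Drawing (requires matplotlib): nx.draw(G, pos, with_labels=True, node_size=300)
```

Because positions are rescaled, a larger `k` changes the relative spacing of nodes rather than the overall size of the drawing; enlarging the figure or shrinking `node_size` also helps with crowded graphs.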

An Example of Entity-based Search: How Does Google Use Entities to Understand Web Entities’ Authority and Expertise?

After all these functions, explanations, and code blocks, let’s return to the question: “How does Google understand a website’s authority and expertise for a given context via entity relations?”

Let’s imagine three different sites: one is solely about “Jennifer Love Hewitt”, another is solely about “Actresses and Actors”, and the third is about “American Actresses”. Now imagine that you search for “Who is Jennifer Love Hewitt?”. After this question, you will probably also want to know “Which movies did Jennifer Love Hewitt play in?”, “Who is her husband?”, “How old is she?”, “Does she have children?”, and “Does she have an active project?”.

Let’s say that, for this group of search intents, you have used the string “Jennifer Hewitt 2020”. Simple, right? But remember Google’s extremely detailed “search intent” and “query profiling” algorithms. Now, let’s try to understand which of these “content networks” has more of an advantage in terms of entity connections within a specific knowledge domain, according to users’ “thinking patterns” and search engines’ entity-first indexing systems.

- Since there is a date in the query, Google will apply the “Query Deserves Freshness” rule for the user.

- The string “Jennifer Hewitt” is clear enough to show that this is an “entity-seeking query” for Jennifer Love Hewitt.