Digital PR and Local SEO worked together to improve the authority of the site and bring a significant increase in relevant traffic to Encazip.com within 3 to 6 months.

The general precept of this SEO project was:

“Every pixel, millisecond, byte, letter, and user matters for SEO”

Koray Tuğberk GÜBÜR

The project was led by Koray Tuğberk GÜBÜR of Holistic Digital, who is the author of this case study.

This case study will demonstrate how clear lines of communication between software development and marketing teams can positively impact SEO results. The Encazip SEO Project achieved:

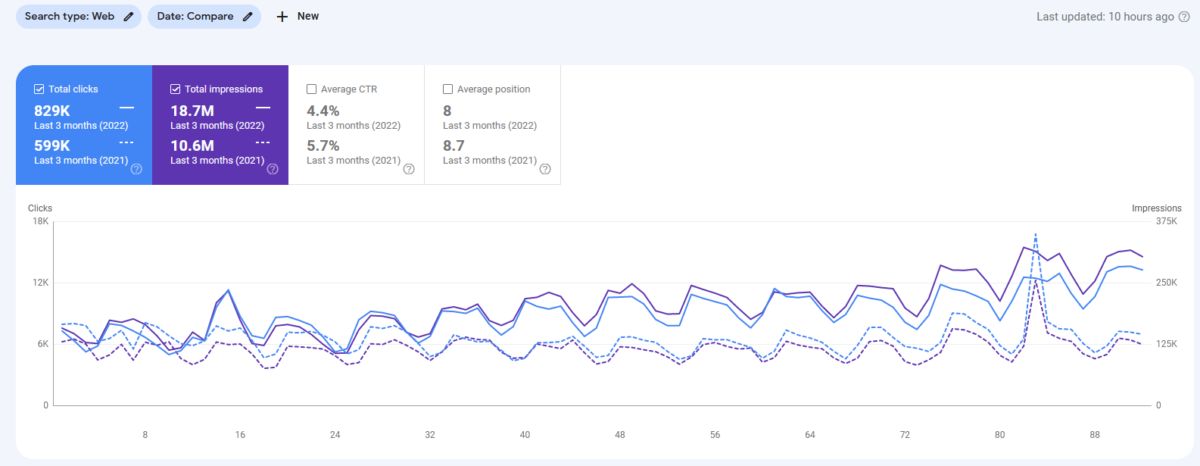

- 155% Organic Traffic increase in 6 months

- 110% Organic Traffic Increase in YoY Comparison for the same three months (November to January)

To achieve this increase in Organic Traffic, the only targeted keywords were “Relevant and Possible Search Activities” with Semantic SEO Conception.

Holistic SEO Tutorial and Case Study Step by Step

Encazip.com is an energy tariff comparison, switching, and savings brokerage company that works on an affiliate model. Encazip.com is a Turkish-based company, founded by localizing the know-how of the founders of uSwitch.com.

The idea of “Encazip.com” has been realized by a consortium under the leadership of Çağada KIRIM and backed by British investors, including Henry Mountbatten, The Earl of Medina. Electricity pricing and industrial or personal energy consumption are the primary targets of the company. At the beginning of the SEO Project, the design, web page loading performance, branding, and content structure, along with the education of the customer, were looked at with a fresh perspective.

The “Everything matters in SEO” perspective was adopted by the customer.

“We acknowledge the importance of SEO with no doubt, however, we would rather approach this subject to be one of the foundations of our corporate culture. I believe we have achieved the establishment of SEO culture in encazip.com thanks to Koray, and I am looking forward to being a part of more case studies on this very important subject.”

Çağada Kırım, CEO, Encazip.com

Background of the Holistic SEO Case Study and Research for Search Engines

If Encazip.com’s team were not passionate about SEO, then it would not be possible to perform this case study at such a detailed level. Thanks and acknowledgments go to the whole team, especially Miss Yağmur Akyar, Mr. Erman Aydınlık, Mr. Nedim Taş, Mr. Oktay Kılınç, and Mr. Can Sayan. CEO Mr. Çağada Kırım, who brought the entire team together and organized it for SEO, was also a major factor in the success of the project.

Some parts of this SEO Case Study have been written by the Customer’s Team and will cover Technical SEO, Branding, Entitization, Content Marketing, Digital PR, and Web Page Loading Performance.

This screenshot has been taken on 9 June 2021 which is 7 days after the 2021 June Broad Core Algorithm Update of Google.

3.0 Page Speed Improvements: Every Millisecond Matters

According to Google’s RAIL model (“Response”, “Animation”, “Idle”, “Load”), a developer has only about 10 milliseconds to produce a frame. To achieve 60 frames per second, each frame has a budget of roughly 16 milliseconds, but the browser needs about 6 milliseconds of that to render the frame, which leaves us only around 10 milliseconds.
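The frame budget arithmetic can be checked with a few lines of Python. This is only a sketch: the function name and the 6 millisecond browser overhead default are assumptions taken from the figures quoted here, not measured values.

```python
def frame_budget_ms(fps: int = 60, browser_overhead_ms: float = 6.0) -> float:
    """Time left for our own work in each frame, in milliseconds.

    browser_overhead_ms is the assumed cost of the browser's own
    per-frame work; 6 ms is the figure quoted in the text.
    """
    frame_ms = 1000.0 / fps  # one frame at 60 fps lasts about 16.67 ms
    return frame_ms - browser_overhead_ms


if __name__ == "__main__":
    print(f"Budget per frame: {frame_budget_ms():.2f} ms")
```

At 60 frames per second the result is a little under 11 milliseconds, which is where the rounded “10 milliseconds” rule of thumb comes from.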

Page speed is also a “health” and “trust” issue. If a frame is not produced within 16 milliseconds, users will notice some “motion bugs”. If the page does not respond for more than 100 milliseconds, users feel that something is wrong and start to get stressed. According to Google, a slow web page can create more stress than a fight for human beings, and it affects daily life negatively.

This is the “core of my statements” for any first meeting with my clients’ development teams.

Response, animation, idle, and load (RAIL).

Below, you will see a quote from Nedim Taş, the developer responsible for the front end of Encazip.com.

“The more important a brand’s image, the more important SEO and Code Performance are. SEO and Front-end Development can work together to create a better brand image by taking users to the most usable and accessible website for their intents.”

Nedim Taş

There is another “core lesson” here. I have many clients whose IT teams have more than 15 members, and yet these crowded IT and developer teams can’t match the productivity and effectiveness of a two-person team. If you ask me what the difference is, I would say “SEO Passion and culture of the Company” and “growth hacking instinct”.

Now, we can look at what we have done for improving the web page loading performance of Encazip.com during the SEO Case Study and Project.

3.1 HTML Minification for Gaining 15 Kilobytes per Web Page

HTML Minification means deleting the HTML Inline Comments and Whitespaces of the HTML Document. This is one of the most essential page speed improvements but there are more benefits such as:

- Helps Search Engine crawlers explore the “link path” faster.

- Helps HTML digestion for Search Engine Crawlers and their Indexing Systems.

- Lets users’ devices build the Document Object Model faster.

- It lessens the burden on the website’s server and lets users’ devices consume less bandwidth.

- A complex and big HTML document might prevent Search Engine Crawlers from loading the document or evaluating it for ranking purposes.

For this last point, we can look at the old warning message from the previous version of Google Search Console: “HTML Size is too large”. This warning was valid only for news sites. Google didn’t move this warning to the new Google Search Console, I guess because it didn’t want to share “weak points” or “deficits” of its indexing system. But, we still have the same warning from “Bing Webmaster Tools”.

“HTML is too Large Warning” from Old Google Search Console’s News Website Section:

In the old Google Search Console, the “extraction failed” error happened if the HTML was larger than 450 KB.

“HTML is too Large” warning from Microsoft Bing’s Webmaster Tools

As a note, Microsoft Bing appears to be more open with information sharing about its algorithms and “desires” from webmasters than Google’s “BlackBox” attitude.

HTML Minification for Encazip.com was done in the first month of the project. But further down the line, we had to suspend this work because of some server limitations during the migration from .NET to .NET Core.

So, even the simplest Pagespeed Improvement has lots of aspects and value for SEO. Also bear in mind that it might not be as simple as you may think!
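To make the idea concrete, the minification step described at the start of this section (deleting inline comments and whitespace) can be sketched in Python. This is a deliberately naive, regex-based illustration and not the tool used on Encazip.com: the function name is hypothetical, and it would damage whitespace-sensitive content such as “pre” blocks, inline scripts, or text nodes between inline elements, so a production setup should rely on a dedicated minifier.

```python
import re

# Ordinary HTML comments; conditional comments ("<!--[if IE]>") are kept.
_COMMENT = re.compile(r"<!--(?!\[if).*?-->", re.DOTALL)
# Whitespace that sits only between two tags.
_BETWEEN_TAGS = re.compile(r">\s+<")
# Any remaining run of two or more whitespace characters.
_WHITESPACE_RUN = re.compile(r"\s{2,}")


def minify_html(html: str) -> str:
    """Naively strip comments and collapse whitespace in an HTML string."""
    html = _COMMENT.sub("", html)
    html = _BETWEEN_TAGS.sub("><", html)
    html = _WHITESPACE_RUN.sub(" ", html)
    return html.strip()


if __name__ == "__main__":
    page = "<html>\n  <!-- nav -->\n  <body>\n    <p>Hello   world</p>\n  </body>\n</html>\n"
    small = minify_html(page)
    print(len(page), "->", len(small), "characters")
```

Even on this tiny sample the document shrinks noticeably, and on a real template with deep indentation the saving adds up to the kilobytes mentioned in the heading.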

3.2 CSS and JavaScript Refactoring

All Developers know that “refactoring a CSS File” is actually harder than writing a CSS File from scratch. Some developers call this situation “Code Spaghetti”. If you experience this, you should create a clean and efficient new CSS and JavaScript file for the same layout and functionality.

A screenshot from “CSS specificity calculation”.

In this example, the “CSS and JavaScript Minification” took place at the same time as “CSS and JavaScript Refactoring” as there was already a plan for the website redesign.

(Editor’s Note: For more on this, see Koray’s article on “Advanced Page Speed Metrics”. He covers what to know and what to do to achieve an efficient Rendering Tree).

Before the JavaScript and CSS Refactoring process, there were more than 8 JS and CSS Files for only “page layout and functionality”. And, most of this code was not used during the web page loading process.

Test-driven development Methodology (TDD)

The total size of these web page assets was more than 550 KB.

At the end of this process, the development team decreased the total number of CSS and JS files to 3.

- Two of these 3 files were CSS and their total size was 14 KB.

- The JavaScript file that is being used for functionality was only 7 KB.

3.2.1 Why Have Two Different CSS Files?

Having the two different CSS Files lets Google cache and use just the necessary web page resources for its crawling routine. Googlebot and other Search Engine crawlers use “aggressive caching”, which means that even if you don’t cache something, Googlebot stores the necessary resources.

YoY Comparison for November, December, and January.

3.2.2 How does a Search Engine crawler know what to cache?

Thanks to aggressive caching of web page resources, a search engine uses less bandwidth from the website’s server and makes fewer requests, thus the Search Engine Crawler can crawl a website more quickly.

To determine how to crawl a site, search engines use Artificial Intelligence. If a website uses all its “CSS and JavaScript files” site-wide, it means that the Search Engine will have to cache all of these resources.

But, in this case, only the necessary CSS File for the necessary web pages was needed, thus we can facilitate “fewer requests” for the search engine crawler and also help it use only the essential files.

In our example, only three different types of web pages were used according to their functionality and importance:

- Home Page

- Product and Service Pages

- Blog Pages

Thanks to the “intelligent website design”, the code necessities had been united for the “product, service, and blog pages”. Thus, fewer CSS files and less code were used for managing the site.

- Encazip.com has “headfoot.css” which is only for the header and footer area of the website.

- A “homepage.css” file for just the homepage.

- “Subpage.css” for only the blog and product pages.

As you can see, Googlebot and other search engine crawlers can cache the “headfoot.css” easily because it affects the site-wide section of the web page. Also, “homepage.css” is only being used on the homepage, so for most of the crawling activity, search engine crawlers can use the “subpage.css” file while saving themselves from the home page CSS code.
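The file split can be summarized as a small lookup. The sketch below is only an illustration of the layout described in this section: the file names come from the text, while the page type keys and the function name are assumptions.

```python
# Site-wide stylesheet, requested on every page type.
SITE_WIDE = ["headfoot.css"]

# Page-specific stylesheet per template (keys are hypothetical labels).
PAGE_CSS = {
    "home": "homepage.css",
    "product": "subpage.css",
    "service": "subpage.css",
    "blog": "subpage.css",
}


def stylesheets_for(page_type: str) -> list[str]:
    """Return the CSS files a page of the given type requests."""
    return SITE_WIDE + [PAGE_CSS[page_type]]


if __name__ == "__main__":
    for page_type in PAGE_CSS:
        print(page_type, stylesheets_for(page_type))
```

Every page requests two CSS files, and a crawler that has already cached “headfoot.css” and “subpage.css” needs nothing new for any product, service, or blog URL.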

In short, the effect of this strategy was:

- We decreased the CSS-JS File size from 550+ KB to only 22-25 KB per web page.

- We decreased the request count for CSS-JS files from 8+ to only 3 per web page.

And lastly, “CSS and JS Minification” was applied. You can see again that “Every millisecond and byte really does matter”.

With Authoritas’ Google Search Console Module, you can examine all the queries and their traffic productivity as above.

3.3 Text Compression on the Server-side and Advantage of Brotli

Originally, the company was using the “Gzip” algorithm for server-side compression, as many brands still do today. Brotli was invented by Google. You can examine “Brotli’s Code” on Google’s Github Profile.

Brotli uses the LZ77 algorithm for lossless compression, and there is also a Google Working Group for Brotli. Brotli performs 36% better than Gzip!

And, in the fourth month of the SEO Project, the team started to use Brotli for the text compression on the server side.

Compression technologies and file size.

3.3.1 Why is Server-side Compression Important?

Here is a breakdown of the importance of Brotli usage.

- The hardest thing about loading and rendering a web page is transmitting the files from a server over an internet connection.

- If you check the Chrome DevTools Network Panel, you will see that for every web page resource, the longest parts are “requesting the file” and “downloading the file” over a network connection.

- Text compression means compressing the files on the server side and conveying these files to the requestor.

- Since the file sizes are being decreased by compression, conveying these resources over a network connection is simpler.

- Thus, every web page resource will be loaded faster by the requestor which is the search engine crawler or the user.

- After loading the web page resources, the resources will be decompressed by the browser to use for rendering, parsing, and compiling the web page.

Basically, most of these points relate to “Time to First Byte Improvements”. By implementing Brotli, we have achieved an improved user experience, crawlability, crawl delay time and crawl efficiency of the website.
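The compress, transmit, and decompress cycle listed above can be illustrated with Python’s standard library. Note the assumption: Brotli is not part of the standard library, so this sketch uses gzip as a stand-in to show the principle. The sample markup and the function name are hypothetical, and the numbers it prints say nothing about Brotli’s own compression ratio.

```python
import gzip


def compress_for_transfer(body: bytes, level: int = 6) -> bytes:
    """Compress a text response on the 'server side' before sending it."""
    return gzip.compress(body, compresslevel=level)


if __name__ == "__main__":
    # Hypothetical, repetitive HTML: markup compresses well because
    # tags and class names repeat many times.
    html = ("<li class='tariff'>Elektrik tarifesi</li>\n" * 500).encode("utf-8")

    wire = compress_for_transfer(html)   # what travels over the network
    restored = gzip.decompress(wire)     # what the browser works with

    print(f"original: {len(html)} bytes, on the wire: {len(wire)} bytes")
    print("lossless:", restored == html)
```

The bytes on the wire are a small fraction of the original, and the decompressed result is identical to what the server started with, which is why text compression is safe to apply to HTML, CSS, and JavaScript.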

3.4 HTTP/2 Usage Instead of HTTP 1.1

The essential difference between HTTP 1.1 and HTTP/2 is how many requests a client can have in flight to a server at the same time. What is the reason for this? HTTP 1.1 sends every request and response as plain text and handles one request at a time per connection, while HTTP/2 splits requests and responses into binary frames. Thanks to this binary framing, HTTP/2 can multiplex many web page resources over a single TCP connection.

During web page rendering over HTTP 1.1, a browser typically opens at most 6 parallel connections per host, so it can fetch only 6 resources from a server at a time. If the first 6 requests don’t include all the critical resources for the above-the-fold section, the client will need a second round-trip to render the initial contact section of the web page.
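The effect of this limit on the number of request rounds can be estimated with a simplified model. The sketch assumes that every resource is known up front and that the client fetches at most six resources per round, so treat the function name and the default of 6 as illustrative assumptions rather than a description of any specific browser.

```python
import math


def request_waves(critical_resources: int, parallel_limit: int = 6) -> int:
    """Rounds of requests needed to fetch all critical resources."""
    if critical_resources <= 0:
        return 0
    return math.ceil(critical_resources / parallel_limit)


if __name__ == "__main__":
    print("8 critical files:", request_waves(8), "rounds")
    print("3 critical files:", request_waves(3), "round")
```

With eight or more critical files a second round is unavoidable under this model, while three files fit comfortably into the first one.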

A Schema for showing HTTP/2’s Working Principle.

HTTP/2 adoption was a critical change for Encazip.com’s SEO project. Since the critical resource count and size for the above-the-fold section had already been decreased, the need for extra request round-trips decreased further with the usage of HTTP/2.

Furthermore, Googlebot started to use HTTP/2 for its crawling purposes, thus our crawl efficiency improved.

3.4.1 HTTP/2 Server Push

HTTP/2 Server Push was the first reason for Encazip.com’s migration from HTTP 1.1 to HTTP/2. After creating the “subpage.css” and “headfoot.css”, we used HTTP/2 Server Push for creating a faster initial contact with the users.

HTTP/2 Server Push lets a server “push a web page resource” to the client before the client has asked for it. Because the resource is already on its way when the client discovers that it needs it, the connection and downloading process of the resource can happen faster.

Server Push’s working principle.

For the HTTP 2 Server Push, the team decided to include some resources from the above-the-fold section of the page, such as “logo, headfoot.css, subpage.css, and main.js”.
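Several web servers and CDNs have supported triggering a push from a “Link” response header with “rel=preload”. Whether Encazip.com’s server was configured this way is not stated, so the following is only a sketch of how such a header value could be assembled. The helper name is hypothetical, the paths are the ones listed in the resource load order of this case study, and the logo is left out because its path is not given.

```python
def preload_link_header(resources: list[tuple[str, str]]) -> str:
    """Build a Link header value from (path, destination) pairs."""
    return ", ".join(
        f"<{path}>; rel=preload; as={destination}"
        for path, destination in resources
    )


if __name__ == "__main__":
    header = preload_link_header([
        ("/content/assets/css/headfoot.css", "style"),
        ("/content/assets/css/subpage.css", "style"),
        ("/content/assets/script/main.js", "script"),
    ])
    print("Link:", header)
```

Keeping the list this short matches the advice that follows: pushing only a handful of truly critical files.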

Please bear in mind, HTTP 2 Server Push also has some side effects.

- If you use HTTP 2 Server Push for too many resources, it will lose its efficacy

- The main purpose of HTTP 2 Server Push is to only use it for certain and critical resources

- The server cannot know whether the client already has a pushed resource in its cache, so it may send bytes that the client does not need

- HTTP 2 Server Push can create a little bit more server overhead than usual

Because of the final point alone, we ended up using HTTP 2 Server Push only for a short time. Once we have made some back-end structure changes, Can Sayan plans to use it again.

“Lack of a caching system or a strong server… Long queries that slow down the response times… All of these affect the User Experience and also SEO. Thus, we are racing against milliseconds.”

Can Sayan, Backend Developer

3.5 Resource Loading Order and Prioritization

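TCP Slow Start means that a server sends only a limited amount of data in the first round-trip of a new connection: typically about 10 TCP segments of up to 1460 bytes each, or roughly 14 KB. This is actually designed to protect the network from congestion. Thus, the most critical resources need to be at the top of the HTML document.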
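As a rough sketch of the numbers involved: 1460 bytes is the usual payload of a single TCP segment, and many servers start a connection with an initial window of 10 segments. Both values are assumptions that depend on the network path and server configuration, and the function names are hypothetical.

```python
MSS_BYTES = 1460  # typical payload of one TCP segment on Ethernet


def first_round_trip_bytes(initial_window_segments: int = 10,
                           mss: int = MSS_BYTES) -> int:
    """Bytes a server can send before waiting for the first acknowledgement."""
    return initial_window_segments * mss


def fits_in_first_round_trip(compressed_size_bytes: int) -> bool:
    """True if a (compressed) response fits into the initial window."""
    return compressed_size_bytes <= first_round_trip_bytes()


if __name__ == "__main__":
    print("first round-trip budget:", first_round_trip_bytes(), "bytes")
    print("14 KB document fits:", fits_in_first_round_trip(14_000))
    print("20 KB document fits:", fits_in_first_round_trip(20_000))
```

This is why the order inside the HTML matters: whatever sits in the first few kilobytes of the compressed document reaches the browser one round-trip earlier than the rest.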

Our Resource Load Order is below with the HTML Tags and Browser Hints.

- <link rel="preload" href="/content/assets/image/promo/banner.avif" as="image">

- <link rel="preload" href="/content/assets/css/headfoot.css" as="style">

- <link rel="preload" href="/content/assets/font/NunitoVFBeta.woff2" as="font" crossorigin="anonymous">

- <link rel="preload" href="/content/assets/script/main.js" as="script">

- <link rel="stylesheet" href="/content/assets/css/headfoot.css">

- <link rel="stylesheet" href="/content/assets/css/subpage.css?v=23578923562">

You can see the logic of this resource load order below.

- “banner.avif” is for the Largest Contentful Paint.

- “headfoot.css” is for the First Contentful Paint.

- “NunitoVFBeta.woff2” is for avoiding the “FOUT” and “FOIT” effects.

- “main.js” is for the functionality of the web page.

- “subpage.css” is for the general layout of the Product and Service web pages.

And, you can see the profile of resource load prioritization below.

3.5.1 Cross-Browser Compatibility for Preload Usage

In the image above you will see some “duplicate requests”. These have been purposely left in. Until January 2021, Firefox did not support “preload” by default. Thus, if a user-agent included “Firefox”, it couldn’t use the “preload”, so we have also put normal request links without preload.

Don’t worry, Google Chrome won’t request the same file twice!

3.5.2 What You Should Know About Preload

Preload did not work in Firefox by default until recently, but Firefox now supports it without any kind of flag configuration. Here are other things you need to know while using preload.

- You can’t use preload for the resources that you have already “pushed with HTTP 2 Server Push” feature.

- If you try to use “preload” for everything, it will lose its meaning.

- Preload also “caches” the file for the browser so that returning clients can open the next pages faster.

- If you preload too many things, it can create a CPU bottleneck at the beginning of the web page loading process.

- A CPU bottleneck also can increase the Total Blocking Time, First Input Delay, and lastly “Time to Interactive”. Not an ideal scenario!

That’s why discussions with your development team are important. It can sound easy to say “okay, we will just put the ‘preload’ value in the ‘rel’ attribute”. But it’s not that easy: everything needs to be examined repeatedly with a bad internet connection and a mediocre mobile device.

3.5.3 Beyond Preload: Preconnect for Third-Party Trackers

Why didn’t we use DNS-Prefetch? Simply put, DNS-Prefetch only performs the “DNS Resolution” for the third-party resource’s server, while “Preconnect” performs the “DNS Resolution”, the “TCP Handshake”, and the “TLS Negotiation”. The TCP Handshake and TLS Negotiation are essential processes for loading a resource from a server. For this reason, we implemented “Preconnect” instead of DNS-Prefetch for third-party trackers.

Preconnect’s working principle

Some of the “preconnect” requests for Encazip.com are below.

- <link rel="preconnect" href="https://polyfill.io">

- <link rel="preconnect" href="https://cdnjs.cloudflare.com">

- <link rel="preconnect" href="https://unpkg.com">

- <link rel="preconnect" href="https://www.googletagmanager.com">

3.5.4 Loading CSS Files as Async

Loading CSS Files as “async” is an important but mostly ignored topic. Thus, it is recommended that you read the “Loading CSS Async” tutorial. All CSS files are render-blocking by default, which means that while they are being loaded, the browser cannot render the web page, just as with synchronous JavaScript files. For JavaScript, we have the “async” attribute, but there is no equivalent attribute for CSS files. To load a CSS File asynchronously, the browser needs to be manipulated by switching the “media” attribute from “print” to “all” once the file has loaded.
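The “media” switch mentioned here is commonly written as a stylesheet link that starts with media="print" and changes itself to "all" in its onload handler, with a “noscript” fallback. The sketch below only generates that widely used markup pattern from Python. It is not the code used on Encazip.com, and the helper name is hypothetical.

```python
from html import escape

# A print-only stylesheet does not block rendering on screen; once it
# has loaded, the onload handler switches it to apply to all media.
ASYNC_TEMPLATE = (
    '<link rel="stylesheet" href="{href}" media="print" '
    "onload=\"this.media='all'\">"
    '<noscript><link rel="stylesheet" href="{href}"></noscript>'
)


def async_stylesheet_tag(href: str) -> str:
    """Return markup that loads a stylesheet without blocking rendering."""
    return ASYNC_TEMPLATE.format(href=escape(href, quote=True))


if __name__ == "__main__":
    print(async_stylesheet_tag("/content/assets/css/subpage.css"))
```

Because the switch happens in an onload handler, the pattern depends on JavaScript, and the page is first painted without the stylesheet applied.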

Thus, we wanted to use “CSS Async loading” for Encazip.com, but at first, it wasn’t necessary, because the total CSS File size was only 16 KB per web page. It was so small that it did not noticeably block the rendering.

CSS Async prevents blocking.

But, when we started to load more resources with the “preload” browser hint, CSS Files started to “block the rendering”. Thus, we reconsidered CSS Async, although we would only have gained 15-20 milliseconds per web page loading event.

But, we didn’t implement it in the end, because when we used the “CSS Async” feature for these CSS Files, it created a “flicker effect”. The browser rendered the web page without CSS first and then applied the CSS, which created a “turbulent page loading experience”.

Just for gaining 15-20 Milliseconds, we didn’t want to cause such stress for the user. That’s why as an SEO and developer, you need to balance things while making a website more crawlable for search engines and usable for users.

So, at the moment, we have left this topic for further discussion, maybe we can use CSS Async only for one of the resources. But, I want you to remember that CSS Async is a feature that relies on “JavaScript” rendering, and this can also affect SEO. (Editor’s Note: I recommend you to check the section for “Image Place Holders”).

3.5.5 Deferring All the Third Party non-Content Related Trackers

All the third-party and non-content relevant JavaScript files were deferred. A deferred JavaScript file is not executed until the HTML document has been parsed, that is, around the “domInteractive” event.

And, there are two important things to consider when using the defer browser hint:

- If you use defer on the main JS file, you will probably not see the effects it initiates until it has been executed.

- If you use defer too much, you may cause a CPU bottleneck at the end of the page load.

Be careful whilst using “defer” to ensure that you do not block the user!

In Encazip.com, before the SEO Project’s launch, all the non-important JavaScript files were being loaded before the important content-relevant CSS and JavaScript files.

We have changed the loading order for the resources, so the most important web page resources load first and have deferred the non-important ones.

N.B.: Deferring third-party trackers can cause slightly different user tracking reports since they won’t be able to track the user from the first moment.

3.5.6 Using Async for Only the Necessary JavaScript File

As with using the “defer” attribute, using the “async” attribute is an important weapon at your disposal for creating the best possible user experience. In the case of Encazip.com, we have used the “async” feature for only the “Main.js” file since it was the only file that was focused on the “content” and “functionality”.

And, from the previous section, you can remember that you shouldn’t defer the most important and functional JS file.

3.5.7 Loading the Polyfill JS Only for Legacy Browsers

Polyfill is used for ensuring compatibility of modern JavaScript methods and files with legacy browsers such as Internet Explorer. And, as an SEO, you always need to think about 100% of the user profile, since there are millions of people who use Internet Explorer (I don’t know why, but they are using it!).

Since we don’t have “Dynamic Rendering” and “Dynamic Serving” technology at the moment for Encazip.com, Nedim Taş prepared another step to prevent loading Polyfill JS for modern browsers. If the browser is a modern browser, Polyfill JS will not be loaded with its content, but the request will still be performed. If the browser is a legacy browser, it will be loaded with its content.

Thus, for most of the users, we have saved them tens of KB.
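The behaviour described above, where the request is always made but only legacy browsers receive the polyfill code, can be sketched as a server-side decision based on the user-agent. The actual mechanism prepared by the team is not documented here, so the markers and function names below are assumptions for illustration only.

```python
# Substrings found in Internet Explorer user-agent strings
# ("MSIE " up to IE 10, "Trident/" in IE 11).
LEGACY_MARKERS = ("MSIE ", "Trident/")


def is_legacy_browser(user_agent: str) -> bool:
    """Very rough check for Internet Explorer."""
    return any(marker in user_agent for marker in LEGACY_MARKERS)


def polyfill_response(user_agent: str, bundle: bytes) -> bytes:
    """Return the polyfill bundle for legacy browsers, an empty body otherwise."""
    return bundle if is_legacy_browser(user_agent) else b""


if __name__ == "__main__":
    ie11 = "Mozilla/5.0 (Windows NT 10.0; WOW64; Trident/7.0; rv:11.0) like Gecko"
    chrome = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36")
    bundle = b"/* polyfill code */"
    print("IE 11 receives", len(polyfill_response(ie11, bundle)), "bytes")
    print("Chrome receives", len(polyfill_response(chrome, bundle)), "bytes")
```

User-agent sniffing is fragile, so a real implementation would need a maintained browser list rather than two substrings.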

3.6 Aggressive Image Optimization with SRCSet and AVIF Extension

First, let me explain what aggressive image optimization is. How is it different from regular image compression? There are four different aspects of image optimization; “pixels, extensions, resolutions, and EXIF data.”

Pixel optimization in terms of “image capping” is actually a new term. Image capping has been implemented by Medium and Twitter before to decrease the image size by 35% while decreasing the request latency by 32%. Image capping means “decreasing the pixel count of an image to 1x scale”, in other words, 1×1 pixel per dot. Since 2010, “super retina” devices have become more and more popular. “Super retina” devices have more than one pixel per dot on the screen, and this gives a device a chance to show more detailed images with higher pixel counts.

Color and pixel differences based on devices. Above, you see “1 Pixel’s color profile”.

So, what is wrong with super-retina devices and 2x Scale or 3x Scale images?

- The human eye can’t actually see the details in 2x resolution or 3x resolution images.

- 2x resolution or 3x resolution images are bigger in terms of size.

For Pixel Optimization, you can use different resampling filters when you downscale an image, such as “NEAREST” or “BILINEAR” from PILLOW.
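Before any resampling filter is applied, image capping comes down to choosing the target dimensions. The sketch below computes them in plain Python. The function name and the “max_scale” parameter are assumptions for illustration, and the actual resize would then be done with an imaging library such as Pillow.

```python
def capped_size(css_width: int, css_height: int,
                device_pixel_ratio: float,
                max_scale: float = 1.0) -> tuple[int, int]:
    """Pixel dimensions to serve for an image slot of the given CSS size.

    The device's pixel ratio is honoured only up to max_scale, so a
    3x screen does not automatically receive a 3x image.
    """
    scale = min(device_pixel_ratio, max_scale)
    return round(css_width * scale), round(css_height * scale)


if __name__ == "__main__":
    uncapped = capped_size(400, 300, 3.0, max_scale=3.0)
    capped = capped_size(400, 300, 3.0)
    print("uncapped:", uncapped, "pixels:", uncapped[0] * uncapped[1])
    print("capped:  ", capped, "pixels:", capped[0] * capped[1])
```

For a 400×300 slot on a 3x screen, capping at 1x means encoding one ninth of the pixels, which is where the file size saving comes from.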

So, let me introduce you to other sections of aggressive image optimization for web page loading performance briefly.

Like “pixel optimization”, extensions are also important. This is common information now. But, most SEOs, Developers, or Holistic SEOs are not aware of “AVIF”. Most people know WebP, but I can say that WebP is already outdated and outranked by AVIF.

Justin Schmitz is the inventor of AVIF.

EXIF Data (Exchangeable Image File) is an important aspect of SEO. I won’t go deep into this aspect, but you can watch the video of Matt Cutts from 2012.

As an aside: Google was sharing way too much information about their internal system before John Mueller. As an SEO, I can’t say that I like this change!

Exchangeable Image File includes the “light, camera, lens, geolocation, image title, description, ISO Number, Image Owner, and License information”. Some also call this IPTC Metadata (International Press Telecommunications Council).

For relevance, I recommend my clients use IPTC Metadata with minimum dimensions. But for performance, you need to clean them.

Resolution. For image optimization, unnecessarily big resolutions shouldn’t be used. If the website is not from the News niche, you probably won’t need big resolutions.

To use the best possible image extension based on user-agent (browser) differences and the best possible resolution based on the device differences, we have used “srcset”.

Below, you will see an example.

Remember, we already “preloaded” the AVIF image, and now we are just telling the browser where to show it if it can do so. And, “<figure>” is being used for Semantic HTML as we will discover in the future sections of this SEO Case Study.
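The markup used on the site is not reproduced in this text, so the following is only a sketch of how a “figure” with format and resolution alternatives is typically structured: one “source” per modern format, each with its own “srcset”, and an “img” fallback. The generator function, the width-suffixed file naming, and the sample values are all hypothetical, and only the banner path prefix comes from the preload list earlier in this case study.

```python
from html import escape


def picture_markup(base_path: str, widths: tuple[int, ...], alt: str,
                   width: int, height: int,
                   formats: tuple[str, ...] = ("avif", "webp"),
                   fallback_ext: str = "jpg") -> str:
    """Build <figure><picture> markup with per-format srcset candidates."""

    def srcset(ext: str) -> str:
        # Assumed naming scheme: <base>-<width>.<ext>, e.g. banner-480.avif
        return ", ".join(f"{base_path}-{w}.{ext} {w}w" for w in widths)

    # The browser picks the first <source> whose type it supports.
    sources = "".join(
        f'<source type="image/{fmt}" srcset="{srcset(fmt)}">' for fmt in formats
    )
    img = (
        f'<img src="{base_path}-{widths[0]}.{fallback_ext}" '
        f'srcset="{srcset(fallback_ext)}" alt="{escape(alt)}" '
        f'width="{width}" height="{height}">'
    )
    return f"<figure><picture>{sources}{img}</picture></figure>"


if __name__ == "__main__":
    print(picture_markup("/content/assets/image/promo/banner", (480, 960),
                         "Tariff comparison banner", 960, 540))
```

A browser that understands AVIF takes the first source, one that only knows WebP takes the second, and everything else falls back to the plain image.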

P.S.: Do I really need to talk about “alt” tags? Or, Image URLs?

3.6.1 Intersection Observer for Image Lazy Loading

Intersection Observer is an API that lets us load images only if the image is in or close to the viewport. With the Intersection Observer, you can determine when and where to load and show an image. Basically, the Intersection Observer API is the practical building block of Lazy Loading. But, why didn’t we use Chrome’s native “loading=lazy” attribute? Or, why didn’t we use a third-party library for lazy loading?

- We didn’t use Chrome’s “loading” attribute and “lazy” value because it is not compatible with every browser. For cross-browser compatibility, we need to use Intersection Observer.

- We didn’t use third-party libraries for lazy loading, because that would also load unnecessary code from another third-party domain. And, maintaining your own custom library is much better than adding another dependency to your toolset.

Below, you will see what percentage of the users’ browsers support Intersection Observer API which is 91.98%.

On the other hand, only 69.39% of the browsers support the “loading” attribute and the “lazy” value for it.

I recommend you check out Mozilla’s How to Create an Intersection Observer API tutorial.

Thanks to the Intersection Observer API, we increased the size of the “main.js” file just a little, but we gained control of the lazy loading’s default behaviors without any other dependencies and, of course, we have improved the initial loading time more than 50% thanks to lazy-loading. This 50% improvement was measured from the following relevant page speed metrics: First Paint, First Contentful Paint, Largest Contentful Paint, and Time to Interactive.

3.6.2 Image Placeholder for Better Speed Index and Largest Contentful Paint

Image placeholders are important for “visual progress” speed. To completely load the above-the-fold section of the web page in terms of “visual completeness”, placeholders provide a “smooth” and more “interactive” experience. Image placeholders are now being used in Encazip.com for improving “Speed Index” and “Largest Contentful Paint” timing.

But also, since the image placeholder is a technique that relies on JavaScript, it has some side effects, such as not showing the actual image when Googlebot crawls the page without rendering it. You can see the effect of image placeholders due to their JavaScript-based nature on Google’s SERPs.

And, this is what happens if a Search Engine doesn’t render your JS in a stable way.

Me: Using “placeholders” for better LCP and Speed Index.

Search Engine: Use “placeholder” instead of the actual image.

I believe this will be fixed within 2 weeks by the Search Engine. #seo #ux pic.twitter.com/8dIyIj5Vgq

Koray Tuğberk GÜBÜR (@KorayGubur), January 1, 2021

As you can see, without the “rendering phase”, Google’s indexing engine can’t see the actual image, it doesn’t understand that the image is actually a placeholder. Thus, it shows this instead!

Thus, Google decided to not show the image after all because it was not in the “initial HTML”. Google thought that the image wasn’t important enough and also since they don’t render JavaScript every time, they couldn’t see the actual LCP Image continuously between crawl round-trips.

After a while, Google will show the image placeholder again, then the actual image, and then it will remove it again… This will continue as a loop. And, you should think of this as a reason for “ranking fluctuation” also.

And this will continue…

After 15 days, Googlebot fixed it. But, if you know Google, “fresh data” is always more important than “old data”. You should think of Google’s crawling behavior as a “loop”.

3.6.3 Using Image Height and Width Attributes for Cumulative Layout Shift

Image height and width are important for Image SEO and Visual Search. But besides Image SEO, this is also an important aspect for User Experience and thus for SEO. I won’t dive deep into Cumulative Layout Shift here, but suffice to know that every unexpected “layout shift” or “moving web page component” is a cause for Cumulative Layout Shift for the user.

To prevent this situation there are certain rules:

- Give height and width values to images.

- Do not use dynamic content injection.

- Avoid web fonts that load late.

- Avoid waiting for a network response before updating the DOM.

In this context, we have given height and width values for images so that the Cumulative Layout Shift can be decreased and Encazip.com can be ready for Google’s Page Experience Algorithm.
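The reason the attributes help is simple arithmetic: from the width and height values, the browser can derive the aspect ratio and reserve the right amount of space before the image file arrives. A minimal sketch of that calculation, with a hypothetical function name and sample values:

```python
def reserved_height(rendered_width: float, attr_width: int, attr_height: int) -> float:
    """Height to reserve for an image that is scaled to rendered_width.

    attr_width and attr_height are the values of the image's width and
    height attributes; only their ratio matters.
    """
    if attr_width <= 0 or attr_height <= 0:
        raise ValueError("width and height attributes must be positive")
    return rendered_width * attr_height / attr_width


if __name__ == "__main__":
    # A 1200x800 image shown in a 360 pixel wide mobile column.
    print(reserved_height(360, 1200, 800), "pixels reserved")
```

Without the attributes the reserved height is effectively zero until the image loads, and everything below it shifts when it does.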

3.7 Web App Manifest Usage for Progressive Web Apps

Web App Manifest is the gateway for Progressive Web Applications. Simply put, a Web App Manifest is a file that defines the website as an application and lets a device download the website to local storage with certain icons, shortcuts, colors, and definitions. Thanks to Web App Manifest, a website can be opened without a browser like an app. That’s why it is called a Web App Manifest.

In Encazip.com, we have started to use Web App Manifest. The “words” and “shortcuts” in the Web App Manifest can also increase user retention while reinforcing your brand entity to Google.

And, you will see that we have a “prompt pop-up” for installing Encazip.com as a local app. Below, you will see that we have Encazip.com as a local app on my desktop screen.
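For readers who have not seen one, a Web App Manifest is a small JSON file. The sketch below builds one in Python using standard manifest members (“name”, “short_name”, “start_url”, “display”, colors, “icons”, and “shortcuts”). Every value in it is a hypothetical placeholder and not Encazip.com’s actual manifest.

```python
import json


def build_manifest() -> dict:
    """Return a minimal Web App Manifest as a dictionary (placeholder values)."""
    return {
        "name": "Example Tariff Comparison",       # hypothetical
        "short_name": "Example",                   # hypothetical
        "start_url": "/",
        "display": "standalone",                   # open without browser UI
        "theme_color": "#ffffff",
        "background_color": "#ffffff",
        "icons": [
            {"src": "/icons/icon-192.png", "sizes": "192x192", "type": "image/png"},
            {"src": "/icons/icon-512.png", "sizes": "512x512", "type": "image/png"},
        ],
        "shortcuts": [
            {"name": "Compare electricity tariffs", "url": "/compare"},  # hypothetical
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_manifest(), indent=2, ensure_ascii=False))
```

The resulting file is referenced from the page with a link element whose “rel” value is “manifest”. The “shortcuts” entries are where the brand-related words mentioned above would go.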

3.8 Using Service Workers for Better HTML Payloads

Service Workers are another step for Progressive Web Applications. Thanks to service workers, a website can work offline. A service worker is actually a script that the browser runs in the background, separate from the web page, and that can intercept network requests and answer them from a local cache. A service worker can create a cache from an array of URLs that are registered into it. After these URLs and the resources within them are cached, the client doesn’t send requests to the server for these resources. And since they are in the local cache, website loading performance is improved for returning visitors.

With Service Workers, we have cached the most important resources for the “initial contact” with the user. But, to be honest, it’s not obvious what the limit of storage is for a service worker, so we have tried to use it carefully and sparingly.

3.9 Cleaning Unused Code from Third-party Trackers by Localizing

This section is actually debatable. In Technical SEO and Page Speed Improvements for creating the best possible time and cost balance for the SEO Project, I always focus on the most important points that will make the difference.

In this short animation below, you can see which third-party dependencies generally impact internet users in terms of data usage and page speed. Thus, “cleaning and localizing” the third-party trackers can decrease the page’s size enormously and also remove the need for connecting another outsourced service for the client.

But cleaning and localizing third-party resources also has some side effects:

- If you localize a third-party tracker, you won’t get the updates automatically.

- Localized third-party dependencies might not work perfectly due to sloppy cleaning.

- If the marketing team wants to use another feature from the dependent script, the process might need to be repeated.

Below, you will see the positive effects:

- Removing the Single Point of Failure possibility.

- You will only use the necessary portions of the dependent script, which means less code.

- You won’t need to connect to another outsourced service to complete the web page loading.

- It is sustainable if the development team can make this a habit.

In Encazip.com, because of these side-effects, we didn’t implement this yet, but it is in the future scope of the project. I’ve included it to show the lengths we will go to to get the best results and demonstrate the true “vision” and “perspective” of this case study.

3.10 Conflicting Document Type with Response Headers and HTML Files

Unfortunately, the majority of SEOs do not care about the Response Headers—and they should!

Response headers reach the browser and the crawler before the HTML itself, and for some signals, such as the character encoding, they take precedence over the markup. So, any kind of message in the Response Header should not conflict with the information within the tags of the HTML Document.

In our case, Encazip.com was using the “Windows-1258” encoding in the “content-type” response header while using “UTF-8” in the HTML Document. This gives a mixed signal to the browser, and indirectly to the Search Engine crawlers, about the web page’s content type. To remove such a mixed signal, we started to use only “UTF-8” within the HTML Document.
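A check like this can be automated. The sketch below is a simplified illustration, not the tooling used in the project: it extracts the charset from a `Content-Type` header value and from a `<meta>` tag with regular expressions and reports whether they disagree. The function names are hypothetical, and a production check would use a real HTML parser.

```python
import re
from typing import Optional


def charset_from_header(content_type: str) -> Optional[str]:
    """Extract the charset parameter from a Content-Type header value."""
    match = re.search(r"charset\s*=\s*\"?([\w-]+)", content_type, re.IGNORECASE)
    return match.group(1).lower() if match else None


def charset_from_html(html: str) -> Optional[str]:
    """Extract the charset declared in a <meta> tag (either declaration style)."""
    match = re.search(r"<meta[^>]*charset\s*=\s*[\"']?([\w-]+)", html, re.IGNORECASE)
    return match.group(1).lower() if match else None


def has_charset_conflict(content_type: str, html: str) -> bool:
    """True when both sources declare a charset and the two values differ."""
    header_cs = charset_from_header(content_type)
    html_cs = charset_from_html(html)
    return header_cs is not None and html_cs is not None and header_cs != html_cs


# The situation described above: header says Windows-1258, document says UTF-8.
print(has_charset_conflict("text/html; charset=windows-1258", '<meta charset="utf-8">'))
```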

3.11 HTML Digestion and HTML-Based Improvements

“HTML Digestion” is a term from the “Search Off the Record” podcast series, which is created and published by Googlers Danny Sullivan, Gary Illyes, Martin Splitt, and John Mueller. They also call this “HTML Normalization”. According to Google, the “actual HTML” and the “indexed HTML” are not the same. Googlebot and Google’s Caffeine indexing system extract the HTML structure from the actual document using the signals they collect.

There is a simple quote below from Gary Illyes about “HTML Normalization”.

If you have really broken HTML, then that’s kind of hard. So we push all the HTML through an HTML lexer. Again, search for the name. You can figure out what that is. But, basically, we normalize the HTML. And then, it’s much easier to process it. And then, there comes the hot stepper: h1, h2, h3, h4.

I know. All these header tags are also normalized through rendering. We try to understand the styling that was applied on the h tags, so we can determine the relative importance of the h tags compared to each other. Let’s see, what else we do there?

Do we also convert things, like PDFs or… Oh, yeah. Google Search can index many formats, not just text HTML, we can index PDFs, we can index spreadsheets, we can index Word document files, we can index… What else? Lotus files, for some reason.

Gary Illyes

You can listen to the related episode of the “Search Off the Record” podcast series.

Remember what happened during August, September, and November in Google’s indexing system? Everything went awry! Google removed the Request Indexing function, and it mixed the “canonicalized URLs”, etc…

Google’s confirmation for a series of bugs. Even the Google Search Console’s Coverage Report was not refreshed for days.

Thus, having simple, error-free, understandable HTML is helpful. That is why, while redesigning the website, we also cleaned all the HTML code errors from Encazip.com.

P.S.: HTML code errors might make a browser work in “quirks mode”, which is also harmful to web page loading performance, even if it is just a small factor.

3.12 Semantic HTML Usage

Semantic HTML means that HTML tags can have context and meaning within a hierarchy. It gives more hints and makes it easier to understand a web page for the Search Engine Crawlers. Also, Semantic HTML is useful for screen readers and web users who have disabilities.

A schema for Semantic HTML’s logic.

On Encazip.com, Semantic HTML was not used at the beginning of the SEO Project. We then introduced it under certain rules. Below, you can see some of the tags we prefer to use within the website.

- Header

- Footer

- Nav

- Main

- Headings

- Article

- Aside

- Section

- Ol and Li

- Picture

- Figure

- Quote

- Table

- Paragraph

Every “section” had exactly one “heading 2”. And every “visual transition” was also at the end of the section. In other words, the “visual design elements” of the website and the Semantic HTML are compatible with each other. This helps to “align the signals” and avoid “bad and mixed signals”.

3.13 Decreasing the HTML DOM Size

The DOM Size is an important factor for “Reflow, Repaint Cost”. The Document Object Model is built from objects or nodes. Every additional node increases the DOM Size by one. Google suggests having fewer than 1,500 nodes in the Document Object Model, because a large DOM makes the layout, paint, and render processes harder for the browser.

You can see the DOM-Tree Analysis for Encazip.com’s Homepage.

In Encazip.com, we ended up with 570 nodes in the DOM. It is much better than Google’s suggested limit, but our main competitor has an average of 640 nodes. So, we are better, but not much better than our main competitor, at least for now.
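For a quick approximation of the DOM size without opening a browser, a small script can count the element start tags in a page's raw HTML. This is only a sketch under stated assumptions: it counts elements in the source markup, so nodes injected by JavaScript or implied by the browser's parser are not included, and the result can differ from what Lighthouse reports. The `sample` markup is a hypothetical example.

```python
from html.parser import HTMLParser


class ElementCounter(HTMLParser):
    """Counts element start tags (self-closing tags are counted once)."""

    def __init__(self) -> None:
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        # Also reached for <br/>-style tags via the default handle_startendtag.
        self.count += 1


def count_elements(html: str) -> int:
    parser = ElementCounter()
    parser.feed(html)
    parser.close()
    return parser.count


sample = "<html><body><main><h1>Title</h1><p>Text <b>bold</b></p><br/></main></body></html>"
print(count_elements(sample))  # 7 elements: html, body, main, h1, p, b, br
```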

3.14 Font File Count and Size Decreasing

Font file optimization is a discipline of its own within page speed science. Thus, I will just give a simple and short summary here.

On Encazip.com there were more than 5 font files per web page. Most of these fonts were not used on every web page, and even where they were used, it was only for a small portion of the page.

I always recommend brands use “fewer colors” and “fewer fonts”, because they are not really critical but are still costly for users and search engine crawlers.

The first major issue is that all the font files did not have the Woff2 file extension. This meant that their size was unnecessarily large. The total size of the font files was more than 200 KB per page.

- At the end of the day, we have decreased the font file count to one.

- We have decreased the font file size to 44 KB.

- We have saved 4 requests and an average of 150 KB per web page.

3.15 Using Variable Fonts

Variable fonts are one of the advanced page speed topics. Imagine that you are unifying the “bold”, “italic” and “regular” versions of a font into a single file. Thanks to variable fonts, we could use different font variations with only one request.

The “font-variation-settings” CSS property controls a variable font.

Thus, we have managed to stick to only one font with different styles. You can see the variable font code below from our CSS file. (Thanks again to Mr. Nedim Taş for this!)

3.16 FOUT and FOIT

Flash of Unstyled Text and Flash of Invisible Text are other important terms for web font optimization. FOUT and FOIT are also important for Cumulative Layout Shift and, sometimes, for the Largest Contentful Paint if the LCP is textual content. To prevent FOUT and FOIT situations, we have preloaded the font file while using the “font-display:swap” CSS Feature within our CSS. Below, you can see the necessary code block.

3.17 Using Browser-Side Caching for Static Resources

The browser-side cache is for the static resources of the web pages. If a resource on the web page doesn’t change frequently, it means that it can be stored in the browser’s cache. To perform this, “cache-control:max-age” and “Etag” or “Entity Tag” HTTP Header should be used.

Working principle of browser-side cache.

In Encazip.com, we have used browser-side cache for some static resources, but some static resources’ browser caching is delayed due to some back-end infrastructure improvements. So there are some more incremental improvements to make here.
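To make the interplay of the two headers concrete, here is a minimal, framework-free sketch of how a server could answer a request for a static resource. The `respond` function, the one-year `max_age`, and the hash-based ETag are illustrative assumptions, not Encazip.com's back-end code.

```python
import hashlib
from typing import Dict, Optional, Tuple


def make_etag(body: bytes) -> str:
    """Derive an ETag from the response body (quoted, as HTTP requires)."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(
    body: bytes, if_none_match: Optional[str], max_age: int = 31536000
) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, payload) for a static resource.

    While the cached copy is younger than max-age, the browser does not ask
    at all. After that, it revalidates with If-None-Match; if the ETag still
    matches, the server answers 304 with an empty body.
    """
    etag = make_etag(body)
    headers = {"Cache-Control": f"public, max-age={max_age}", "ETag": etag}
    if if_none_match == etag:
        return 304, headers, b""
    return 200, headers, body


css = b"body { margin: 0 }"
status, headers, _ = respond(css, None)             # first visit: 200 with full body
status_again, _, _ = respond(css, headers["ETag"])  # revalidation: 304, no body
print(status, status_again)
```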

4.0 Structured Data Usage for Holistic SEO

Structured Data is one of the other important signals for the search engine. It shows the entities and their profile and connection with other entities to the search engine. Structured data can affect the relevance, SERP view, and the web page’s main intent in the eyes of search engines.

Encazip’s Organization Structured Data Visualization.

In Encazip.com, the structured data had not been implemented correctly, so we adopted three different structured data types for Encazip.com.

- Organization

- FAQ

- AggregateRating

Why did we use these types of structured data?

- Organization structured data was used for creating an entity reputation and definition for Encazip.com. Soon after, Google started to show Encazip.com’s social media profiles on the SERP.

- FAQ structured data has been used for the blog and service/product pages. In the future, we plan to also add more sections to the FAQ structured data within the Schema.org guidelines.

- AggregateRating is for the business partners of Encazip.com, and it was united with the Organization’s structured data. The main purpose here was to show the web page’s activity on the SERP with the reviews and stars.

- Images in the Largest Contentful Paint HTML Element have been added to the FAQ Structured Data for better web page elements, and layout functionality signals.
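The combination of Organization markup with social profiles and a nested AggregateRating can be sketched as JSON-LD generated from Python. The types and properties (`Organization`, `sameAs`, `aggregateRating`, `ratingValue`, `reviewCount`) are Schema.org vocabulary; the brand name, URLs, and rating numbers are hypothetical placeholders, not Encazip.com's real data.

```python
import json

# Placeholder values throughout; only the Schema.org property names are real.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Example Brand",
    "url": "https://www.example.com/",
    "logo": "https://www.example.com/logo.png",
    # Social profiles that the search engine can connect to the entity.
    "sameAs": [
        "https://twitter.com/example",
        "https://www.linkedin.com/company/example",
    ],
    "aggregateRating": {
        "@type": "AggregateRating",
        "ratingValue": "4.6",
        "reviewCount": "120",
    },
}


def to_script_tag(data: dict) -> str:
    """Wrap a structured data dict in the script tag used for JSON-LD."""
    return (
        '<script type="application/ld+json">'
        + json.dumps(data, ensure_ascii=False)
        + "</script>"
    )


print(to_script_tag(organization))
```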

5.0 Website Accessibility: Every User Matters

Website accessibility is one of the most important things that are relevant to SEO, UX, and most importantly humanity. As an SEO, I must say that an accessible website is actually a human right. Thus, I believe that making websites accessible is one of the best sides of SEO. (And, as a color-blind person, I give extra-special attention to this area).

5.1 Using Accessible Rich Internet Applications for being a Better Brand and Holistic SEO

Encazip.com is a ‘mostly’ accessible website. I say “mostly” because, to be honest, learning and implementing “Accessible Rich Internet Applications” is not easy. But, we have implemented “role”, “aria-labelledby”, and “aria-describedby” attributes with proper values.

Furthermore, I can also say that pages that are legible to a screen reader can be understood more easily by a Search Engine, since this leaves nothing to chance and connects every web page component to the others. (I recommend thinking about this with Semantic HTML in mind, too.)

Lastly, we also cared about “light and color differences” between web page components.
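One common way to quantify “light and color differences” is the contrast ratio defined in the Web Content Accessibility Guidelines (WCAG), where 4.5:1 is the usual minimum for normal-size text at level AA. The sketch below implements that published formula; whether this exact check was used on Encazip.com is an assumption, and the colors are arbitrary examples.

```python
def relative_luminance(hex_color: str) -> float:
    """WCAG relative luminance of an sRGB color given as '#rrggbb'."""
    hex_color = hex_color.lstrip("#")
    channels = [int(hex_color[i:i + 2], 16) / 255 for i in (0, 2, 4)]
    # Linearize each sRGB channel before weighting.
    linear = [
        c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4
        for c in channels
    ]
    r, g, b = linear
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio between two colors, from 1.0 up to 21.0."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


# Black on white is the maximum possible contrast.
print(round(contrast_ratio("#000000", "#ffffff"), 2))
# Mid-gray text on white falls just short of the 4.5:1 threshold.
print(contrast_ratio("#777777", "#ffffff") >= 4.5)
```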

PS: Do we really need to talk about alt tags, in 2021?

6.0 Website Redesign Process for Holistic SEO: Every Pixel Matters

For designing a website, there are lots of dimensions. The layout of the web pages, component order of web pages, style of components, texts, images, links, categorization of pages, and more are affecting rankings.

If a web page cannot satisfy the search intent, it cannot be ranked well by Search Engines. If a website’s layout is not understandable and requires “learning” by users and also Search Engine’s Quality Evaluation Algorithms, it can harm the SEO Performance.

Google can understand a website’s quality and expertise from its design, layout, or web page components. By just changing the design, I have overcome some SEO performance plateaus before, and this includes changes as minor as color palettes. Google also has some patents about this, which show the detailed insights that it might seek to extract from a website’s layout and design. I have chosen only four Search Engine patents: one is from Microsoft, and three are from Google.

6.1 Website Representation Vectors for SEO

Website representation vectors cluster websites according to their layout and design quality along with expertise signals. According to their percentage similarity, Google labels sites as expert, practitioner, or beginner by looking at texts, links, images, layout, and a combination of these and more.

6.2 Read Time Calculation

Detection and utilization of document reading speed

Google might use “markers” to try to understand how a user can read a document, and how much time it would take them to find the right portion of the document for specific information or a query. It also tries to understand the language of the content and its layout for the users’ needs. This patent is from 2005, but it shows that Google’s Search Quality Team cared about this at some point in the mid-2000s. And, we all know that the above-the-fold section and the phrases and entities from the upper section of the content are more important than the middle and bottom sections.

6.3 Visual Segmentation of Web Pages based on Gaps and Text Blocks

Document segmentation based on visual gaps

Google can use visual gaps, text blocks, headings, and some marks to understand the relationship of different blocks with each other. But, also if you leave too much gap between blocks it can affect the “completeness of the document” while increasing the scroll depth and also “read time”. So, having a “complete visual block” that follows another one within a hierarchy and harmony is important.

6.4 VIPS: a Vision-based Page Segmentation Algorithm

Another patent, but this time it is from Microsoft. As you can see above, the Vision-based Page Segmentation Algorithm uses the Document Object Model and also visual signals to analyze the relationship of different web page segments with each other. Here are some rules from VIPS:

- If the DOM node is not a text node and it has no valid children, then this node cannot be divided and will be cut.

- If the DOM node has only one valid child and the child is not a text node, then divide this node.

- If the DOM node is the root node of the sub-DOM tree (corresponding to the block), and there is only one sub-DOM tree corresponding to this block, divide this node.

Why do you think that I have shared these rules? Because all of these are similar to what Google’s Lighthouse does for determining the Largest Contentful Paint. And, LCP “div” can also be used for understanding the actual purpose of the web page. Of course, it is not a “directive”, it is just a hint, but that’s why LCP is important for search engines. It means that the initial contact section of a page with a fast LCP Score can satisfy the search intent faster.

I won’t go further in this section but know this, Google also has patents for page segmentation based on “function blocks and linguistic features”. It also checks the code blocks to understand which section is for what, whilst annotating the language style on these code blocks.

Classifying functions of web blocks based on linguistic features

In Encazip.com, while designing the new website, the designers created a modern, useful web page layout and visual aesthetic for web users. During the design process, we also talked about the implications of mobile-only indexing, mobile-first indexing, search intent, visual consistency, and Chris Goward’s “LIFT Model” for page layouts.

The LIFT Model is also important for me because it lets me optimize a web page based on the dominant search intent along with sub-intents. With a proper hierarchy, everything coalesces nicely with a clear signal. While designing the website, we also talked about DOM size, the need for CSS code, styling of heading elements, and semantic HTML usage along with the necessity of ARIA. (Again, in this section, Mr. Nedim did an excellent job!)

This image shows Google’s Indexing System’s working style with “aligning ranking signals”.

So, web page layout is an important ranking factor. Along with E-A-T, it affects the user experience and conversion rate, and it is also directly related to the web page’s loading performance. To be a good, well-rounded SEO, one should be able to harmoniously manage these different aspects of an SEO project.

7.0 Kibana and ElasticSearch Usage for Log Analysis

At the beginning of the Encazip.com SEO Case Study, we didn’t perform any kind of log analysis and actually, we didn’t need it. But for the future stages of the SEO Project, we plan to use Kibana and ElasticSearch.

If you are looking for more on this, Jean Christopher has a great article in the Search Engine Journal about how to use Kibana and ElasticSearch for SEO Log Analysis.

With this in mind, we have started to prepare our log analysis environment. Of course, you can also read log files with a custom Python script or a paid service such as JetOctopus or OnCrawl; it is up to you.
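As an idea of what a custom Python script for log files can look like, here is a minimal sketch that counts the HTTP status codes served to requests whose user agent contains “Googlebot”. It assumes the common “combined” access log format; the sample lines, IP addresses, and paths are made up. Note that the user agent string can be spoofed, so a real analysis should also verify the bot, for example with a reverse DNS lookup.

```python
import re
from collections import Counter
from typing import Iterable

# Combined log format: ip, identity, user, [time], "request", status, bytes,
# "referer", "user agent".
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_status_counts(lines: Iterable[str]) -> Counter:
    """Count status codes for lines whose user agent mentions Googlebot."""
    counts: Counter = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match and "Googlebot" in match.group("agent"):
            counts[match.group("status")] += 1
    return counts


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_lines = [
    f'66.249.66.1 - - [22/Dec/2020:10:15:32 +0300] "GET /page-a HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [22/Dec/2020:10:15:40 +0300] "GET /old-page HTTP/1.1" 301 0 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [22/Dec/2020:10:16:01 +0300] "GET /page-a HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
print(googlebot_status_counts(sample_lines))
```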

Authoritas also has a nice real-time log analysis tool in Alpha that uses a small JS snippet that you can insert into your website. It detects bots and then sends the data to the Authoritas servers where it is then analyzed in Kibana and ElasticSearch. It’s very fast to load (page impact 10-20 ms) as it loads over UDP rather than TCP/IP. Furthermore, it only works at the moment on sites running PHP. As it’s loaded with the page, it won’t pick up any 5XX server errors, but it will help you track bots in real-time, and find 3XX, and 4XX status code issues and bad bots hitting your site. If you have difficulty getting access to your server logs, then this could be a simple and easy step.

8.0 Branding, Digital PR, and Entitization of Encazip.com: Every Mention Matters

Encazip.com is also a good example of an entity. I won’t go deep about entities here, but there are four key differences between an entity and a phrase.

- An entity has a meaning; a keyword does not.

- An entity does not have a sound; a keyword does.

- Entities have attributes; keywords do not.

- Entities are about understanding concepts; keywords are about matching strings.

Entitization means the process of giving a brand an actual meaning, a vision, attributes, and connection with other concepts to a search engine. Being an entity will help improve your rankings. Google can evaluate a “source” beyond its own domain. To perform this, you need to implement entity-based Search Engine Optimization.

Device-based Position and CTR Relationship.

But, in the Encazip.com SEO Project, my general strategy failed. The best way to become an entity and get your entity ID is actually opening a profile page within a popular source for the Google Knowledge panel such as Wikipedia or Wiki Fandom.

Knowledge Panel Source Sites for Google.

Source: Kalicube.com – Data: Authoritas SERPs API.

In the Encazip.com SEO Project, “entitization” was not just about the “brand”. The “sponsor”, “founder” and “manager” should also be entities. The reliability, news, mentions, and relevance within the energy industry are also important for a search engine. Imagine that Google suggests a website on the SERP whose founder, owner, or manager is actually a criminal. It wouldn’t be a reliable “source”, right?

To create more E-A-T, we also used Mr. Çağada Kırım’s scientific articles and background in the energy field. We also opened some Wikipedia pages for members of the Mountbatten family, because Encazip.com is also owned by Mountbatten family members as partners.

But, using Wikipedia pages was not the best way to proceed with entitization. If you open a Wikipedia page for a brand, in a short time, you will get an entity ID. I have done this before for VavaCars, and you can see their entity ID and Knowledge Graph search result below.

The image above is a visual description of “how to query within Google’s Knowledge Graph for entities with Python”. You can see the entity ID of VavaCars with the entity definition and link for the Wikipedia page that I created.
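For readers without the image, querying the Knowledge Graph from Python can be sketched roughly as follows. The endpoint and the `query`, `key`, and `limit` parameters belong to Google's Knowledge Graph Search API; the helper names, the API key placeholder, and the sample response (including its entity ID) are hypothetical. The actual HTTP call is left out because it needs a valid key.

```python
import json
from urllib.parse import urlencode

KG_ENDPOINT = "https://kgsearch.googleapis.com/v1/entities:search"


def build_kg_url(query: str, api_key: str, limit: int = 1) -> str:
    """Build the request URL for a Knowledge Graph entity search."""
    return KG_ENDPOINT + "?" + urlencode({"query": query, "key": api_key, "limit": limit})


def extract_entities(response_text: str) -> list:
    """Pull the entity ID, name, description, and score out of an API response."""
    data = json.loads(response_text)
    entities = []
    for item in data.get("itemListElement", []):
        result = item.get("result", {})
        entities.append({
            "id": result.get("@id"),  # e.g. "kg:/m/..." or "kg:/g/..."
            "name": result.get("name"),
            "description": result.get("description"),
            "score": item.get("resultScore"),
        })
    return entities


# Hypothetical response with a made-up entity ID, shaped like the API's output.
sample_response = json.dumps({
    "itemListElement": [
        {
            "result": {"@id": "kg:/g/EXAMPLE", "name": "Example Brand", "description": "Company"},
            "resultScore": 100.0,
        }
    ]
})

print(build_kg_url("Example Brand", "YOUR_API_KEY"))
print(extract_entities(sample_response))
```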

In Encazip.com, we did not manage to successfully create a Wikipedia page, so how did we solve the problem of becoming an entity? Through increasing the volume of mentions, news stories, and third-party definitive articles about the site.

Going deeper into this process is beyond the scope of this case study, but in doing so, we also increased the latent search demand for the brand’s site. It was also useful in increasing the SEO performance and organic rankings since it is a direct “ranking factor”.

If users search for you, Google will promote you on the SERP for the related concepts and terms. Look at the 22nd of December from Google Trends for the search trends related to Encazip.com:

It was 100. And, look how December 22nd affected the “Average Position” and also how it was a cornerstone for this SEO Case Study. After December 22nd, Google decided to use Encazip.com for broader queries with more solid expertise, relevance, and authority.

And, thanks to all this heavy branding and intensive news, mentions, and search demand, Google has recorded Encazip as an entity in its Knowledge Base. The screenshot below is from Google Trends. If a search term also appears as a “topic”, it means that it is an entity.

Google Trends shows the topic and phrase for “encazip”.

If you choose a topic in Google Trends, you will see all related search activity for the entity. And, during all the branding work, press releases, and more, we always cared about the context. We always used the “Encazip.com” phrase with the most relevant and industry-centric concepts. Google calls this “annotation text” within its patents. It means that the sentence’s sentiment and annotations will create relevance between concepts.

Becoming an entity is not enough, but it’s a good start! You should also create relevant annotations and connections between your brand entity and the industry so that you can become an authority.

Encazip’s entity ID for Google’s Knowledge Base is “2F11cmtxkff9”. An entity’s ID can be seen within the URL of Google Trends.

8.1 Social Media’s Effect on Becoming an Entity and Entity-based SEO

During the Encazip.com SEO Project, we also used social media actively. My general principle for Social Media is actually using “hashtags”, “images” and also mainstream social media accounts for giving constant activity signals to search engines.

We know that Google Discover acts in great parallel to Social Media activity, even without an official statement. We also know that Google indexes social media posts by dividing them into hashtags, videos, posts, and images.

Furthermore, we know that Google wants to see a brand’s social media accounts in the organization’s structured data. We also know that Google has placed these links in the knowledge panels of entities, and has even given social media links a special place in the “Update the Knowledge Panel” section.

You can compare the SERP for two competitors’ social media searches. Result count and SERP features are signals for prominence and activity.

We have some old explanations from Matt Cutts about “social media links” and how they try to interpret them for search quality, and from Google’s old changelogs, we know that they scrape social media accounts and posts to understand the web better. Between 2010 and 2015, social media activity was an important ranking factor; even back then there were “post services” as a black hat method. (Editor’s Note: Not that anyone we know used them of course ;-)).

Below, you can see my general ten rules and suggestions for Encazip.com for social media activity.

- Always be more active than the competitors.

- Always have more followers and connections than the competitors.

- Create new hashtags with long-tail keywords.

- Use hashtags within a hierarchy, such as “#brandname, #maintopic, #subtopic”.

- Always try to fetch the latest and most popular hashtags for every mainstream social media platform.

- Try to appear in Google’s Twitter, TikTok, or Instagram short video carousels.

- Have more indexed content on Google and Bing within the social media mainstream platforms than your competitors.

- Use original images with links to the main content.

- Syndicate the content distribution with social media platforms along with content-sharing platforms such as Quora, Reddit, and Medium.

- Consolidate the ranking signals of the social media post and platforms with the brand entity’s main source which is the website.

The tenth point is actually the main purpose of my social media activity within all SEO Projects, and it can be acquired via links, mentions, image logos, and entity-based connections.

During the SEO Case Study, Encazip.com was active on Instagram, Facebook, LinkedIn, Quora, Reddit, Medium, Twitter, and YouTube with hierarchical and derived hashtags with keywords.

8.2 Local Search and GMB Listing’s Effect on Entities in the SERPs

For a holistic SEO, it’s not just the technical, coding, or content side that is important; local search activity is as important as the social media arena. Google unifies every ranking and relevance signal along with quality signals from the different verticals of search and web.

In this context, we can clearly say that the Local Search quality of an entity is also an active SEO factor for web search results. Thus, Encazip.com has performed a “review marketing” campaign with honest reviews that were requested from the customers. The company’s social media posts with custom-designed images were being posted to the Google My Business posts.

After many positive reviews and lots of related questions which were answered by Encazip.com’s experts, Encazip.com started to be grouped with the biggest energy companies on the Google My Business panel within the “People Also Search” feature. This was clearly a quality score increase and a relevance signal increase which was great.

In other words, from all search verticals and web platforms, we created a high activity level, with strong quality and authority signals while consolidating them for the search engines’ algorithms.

9.0 Protecting the Site Migration Route

Encazip.com’s old domain was “Cazipenerji.com”. It has been migrated to Encazip.com, but during the project, the old 301 redirects stopped working because the registration for the old domain expired! (Fortunately, Mr. Çağada Kırım noticed this problem before me, bought the old domain again, and redirected it to Encazip.com.)

This is important for taking advantage of the old domain’s brand authority and relevance for the queries for which it has historical data. This section is also related to the “Uncertainty Principle of Search Engines”. It takes time to convince search engines’ algorithms, and if you cancel a site migration, it can hurt your brand’s reliability. Thus, this is another important step to get right.

10.0 Authoritative, Semantic SEO for Content Marketing: Every Letter Matters

On Encazip.com, I implemented the Semantic SEO principles. I used the “ontology” and “taxonomy” for all the relevant topical graphs under a logical hierarchy and structure. For content creation, we have educated the authors and taught them Natural Language Processing rules, terms, and their importance. In this process, I should also point out the importance of educating the customer’s team.

If you don’t educate your customer, you will be over-exhausted and you will compromise the quality of the content. To prevent this unwanted situation, a holistic SEO should educate their customer. In this context, I recommend reading the important Google Research Papers below:

- Translating Web Search Queries into Natural Language Questions

- BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

- Siamese Multi-depth Transformer-based Hierarchical Encoder for Long-Form Document Matching

And, lastly, I recommend you to read anything Bill Slawski and Shaun Anderson write 😉

Finally, if you are a true SEO nerd, you can read my Topical Authority Lecture with 4 SEO Projects, which includes a summary of Search Engine concepts and theories.

During the SEO Project, we have written, redesigned, repurposed, reformatted, and republished more than 60 articles. Also, we have started to create new content hubs by complying with Google’s entity taxonomies.

Since the day we started working with Mr. Koray, his work has always had positive effects on encazip.com.

He also taught our team a lot about both SEO and technical SEO. Our work, with the guidance of Mr. Koray, has always ended well.

He contributed a lot to encazip.com to become what it is today.

Yağmur Akyar

10.1 Different Contexts within Semantic Topical Graphs

An entity has different contexts. Google calls this Dynamic Organization of Content. A brand can be an authoritative source for one context of an entity, such as “electricity prices”. But, there is also a connection between electricity production and electricity prices. Also, electricity production is connected to electricity definition and science. Thus, for calculation, definition, production, consumption, science, and scientists, Encazip.com started a comprehensive content production process based on semantic search features.

In the image above, you can see a Dynamic Content Organization for the entity of electricity.

P.S.: And do I need to talk about keyword gaps, etc. in 2021?

10.2 Image Search and Visual Content Creation

In images, there are two types of entities according to the Google patents: one is the “object entity”, and the other is the “attribution entity”. We also used EXIF data and IPTC metadata for image SEO. Images are either designed to be unique or chosen to be unique. The brand’s logo is used as a watermark. We have determined Search Engine Friendly URLs and alt tags for the images. I also specified how an image should be selected for the article or a subsection of an article. To determine this, I used Google’s Vision AI and other Search Engines’ image search features and tools.

In this section, I must also say that Microsoft Bing’s Advanced Image Search capacity helped me. Unlike Google, they index every image on a web page. And, they have a faster “snap and search” infrastructure for image search.

I recommend you read the documents below, to understand this section better.

- Ranking Image Search Results Using Machine Learning Models

- Facial Recognition with Social Network Aiding

Below, you can see why I talk positively about Bing’s Image Search capacity, if you read the second article above, you will see this more clearly.

10.3 Image Sitemap, Image Structured Data and Representative Images for Landing Pages

Google announced its sitemap syntax in June 2005 and improved the sitemap understanding, syntax, and tag structure over time. For instance, in 2011 Google announced that it can understand hreflang from sitemap files. In this context, image sitemap files can have different tags such as “caption”, “geo_location”, “title”, and “license”. Image sitemaps, or images in the sitemap files, are a useful communication surface with the search engine, helping its algorithms understand the role, content, and meaning of an image for a web page.

In this context, all the representative images and also the Largest Contentful Paint Elements of the web pages are added to the sitemap files. In other words, a regular sitemap file has been turned into a complex sitemap with URLs and also images. Below, you can see a complex sitemap example for Encazip.com that includes images and URLs at the same time in the sitemap file.
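A sitemap that carries both page URLs and their images can be generated with a few lines of Python. The sketch below uses the standard sitemap namespace and Google's image sitemap namespace and emits only the `image:loc` tag; the function name and the example URLs are hypothetical, and this is not Encazip.com's actual sitemap.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"


def build_image_sitemap(pages: dict) -> str:
    """Build a sitemap where each page URL lists its representative images.

    `pages` maps a page URL to a list of image URLs on that page.
    """
    ET.register_namespace("", SITEMAP_NS)
    ET.register_namespace("image", IMAGE_NS)
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page_url, image_urls in pages.items():
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page_url
        for image_url in image_urls:
            image = ET.SubElement(url, f"{{{IMAGE_NS}}}image")
            ET.SubElement(image, f"{{{IMAGE_NS}}}loc").text = image_url
    return ET.tostring(urlset, encoding="unicode")


sitemap_xml = build_image_sitemap({
    "https://www.example.com/electricity-prices": [
        "https://www.example.com/images/lcp/electricity-prices.png",
    ],
})
print(sitemap_xml)
```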

As a second step for better communication with the search engine, the images on the web page have been added to the FAQ Structured Data. The object and subject entities within the images, texts, colors, and any visual communication element can strengthen the context of the content within the FAQ Structured Data. Thus, not just the first image, but all images are added to the FAQ Structured Data as JSON-LD. Below, you can see an example.
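A hedged sketch of FAQ markup that also carries images is shown here as a Python function producing JSON-LD. `FAQPage`, `Question`, `Answer`, `mainEntity`, `acceptedAnswer`, and `image` are Schema.org vocabulary; placing `image` on the `FAQPage` node is an assumption about how the images were attached, and the question, answer, and URL are placeholders.

```python
import json
from typing import List, Tuple


def build_faq_jsonld(questions: List[Tuple[str, str]], image_urls: List[str]) -> dict:
    """Build FAQPage structured data with the page's images attached."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        # Assumption: all images of the page are listed on the FAQPage node.
        "image": image_urls,
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in questions
        ],
    }


faq = build_faq_jsonld(
    [("How are electricity prices calculated?", "Placeholder answer text.")],
    ["https://www.example.com/images/faq/electricity-prices.png"],
)
print(json.dumps(faq, indent=2, ensure_ascii=False))
```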

To differentiate these two image sections from each other, different subfolder names and paths are used. As a final step, to support web search via image search and to increase the quality, usability, and click satisfaction signals, the search engine’s overall image selections for specific queries are used. In other words, if someone searches for a query and Google shows certain types of images, we analyze the object and subject entities of these images and use them within our featured images too.

Below, you can see an example. For the query “Electricity Prices”, you can use a “table with prices” as an image (I have used an HTML table, which is clearer for a Search Engine) or you can add a “bill that shows the electricity prices”. As a result, the web page moved from a rank of 60+ to 6th in image search.

And, for topical authority analysis, it is not just about “text content”, it is about “all of the content”. Thus, every gain from every vertical of search, whether it is textual, visual or vocal is a contributor to winning the broad core algorithm updates and dominating a topic, network of search intent and queries from a certain type of context.

11.0 Importance of Clear Communication & Passion for SEO on the Customer-side

To be honest, Encazip.com is the most easy-going, problem-free SEO Case Study that I have ever performed, because the customer’s team is very positive and passionate about SEO. I know that I have spent double or triple the energy that I spent on Encazip.com on other SEO Projects that produced less efficiency. But, what was the difference?

The difference is the mindset. An old proverb says that “You can lead a horse to water, but you can’t make him drink”. And, SEO Project Management is in line with this proverb. That’s why I always try to be careful while choosing my customers. SEO is not just a “one-person job” anymore, it needs to pervade company culture.

In my opinion, the customer’s character, mindset, and perspective on SEO are the main factors that govern an SEO Project’s success.

(As a confession: In the old days (before 1 August 2018, aka Medic update), I was a blackhat SEO, thus I didn’t need my customers to love or know SEO, but after a while, Google fixed lots of gaps in its algorithm while changing my perspective too. That’s why I have learned to code, understand UX web design, and much more!).

And, that’s why creating an SEO Case Study with an uneducated customer is like “Making the camel jump over a ditch!”, or with the Turkish version, “deveye hendek atlatmak”.

11.1 Importance of Educating the Customer in Advanced SEO Concepts

How can you educate the customer? If you talk only about simple and easy SEO terms, it won’t help you to create SEO success stories. That’s why my biggest priority for improving the customer’s comprehension of SEO is paying attention to the “smallest details”.

You can track the share of voice with Authoritas.

That’s why the main headline of this SEO Case Study is “Every Pixel, Millisecond, Byte, Letter, and User Matters for SEO”.

From the technical side, you should focus on “bytes” and “milliseconds” with the IT and Developer team, while focusing on “UX, Content, and Branding” with your marketing and editorial teams.

“Our work with the Holistic SEO Approach was at a new level of difficulty for us. Every SEO meeting was like an education and ended with a to-do list that included a lot of hard work.”

Erman Aydınlık

12.0 Importance of Broad Core Algorithm Update Strategy for SEO

Broad Core Algorithm Updates are the algorithmic updates for the core features of the Google Search Engine. Google announces its broad core algorithm updates officially, with some extra details such as when the rollout starts and when it finishes. Before the Medic Update (1st August 2018 Google Update), all the Broad Core Algorithm Updates were called “Phantom Updates”. Because these updates were not officially announced, the SEO Community called them “Phantom Updates” while Google called them “Quality Updates”. Since Broad Core Algorithm Updates affect the crawl budget, authority, and quality assignment of a source (domain) on the web in the eyes of the Google Search Engine, having a solid Broad Core Algorithm Update Strategy will help an SEO to manage the SEO Project more effectively and time-efficiently.

To use Broad Core Algorithm Updates as an SEO Strategy, I have written a concrete SEO Case Study with Hangikredi.com.

During the Encazip.com SEO Case Study and Project, there were two Broad Core Algorithm Updates: the first is the December 2020 Broad Core Algorithm Update, and the second is the June 2021 Broad Core Algorithm Update. Encazip.com has won both of these Broad Core Algorithm Updates of Google, and in the next two sections, you will see how Google compares competing sources on the web with each other while deciding which one should be ranked for a given topic and niche.

12.1 Effects of December Broad Core Algorithm Update of Google on Encazip.com and its Competitors

When you put so much effort into planning and executing such a comprehensive SEO program, then you need to ensure you have a variety of SEO tools and software at your disposal to help you coordinate teams and activity, and to manage and report on SEO performance. I use a combination of tools including Authoritas and Ahrefs.

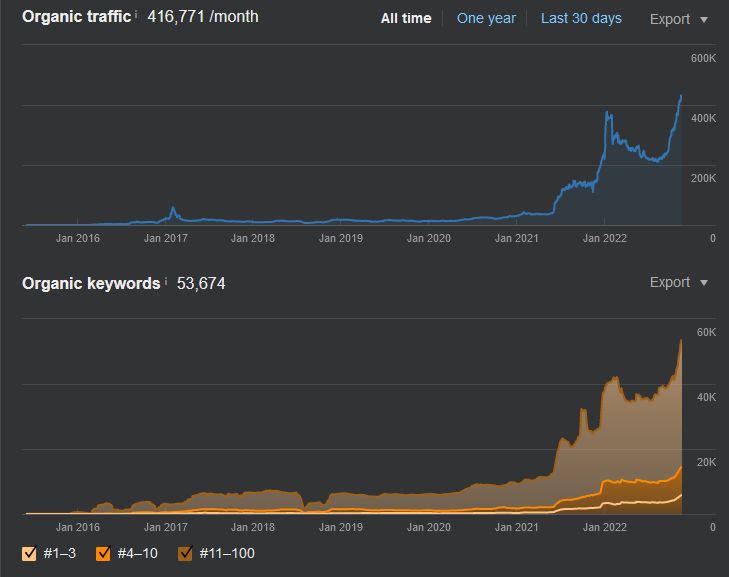

Encazip.com was impacted by the December Broad Core Algorithm Update of Google. But, in this section, I will show a comparative analysis based on the Ahrefs data charts including Encazip and its competitors.

Its first competitor lost most of its traffic.

Below, you will see the second competitor’s graphic.

And, this is the last year’s trend for Encazip.com

Every pixel, millisecond, byte, letter, and user is behind this difference!

Koray Tuğberk GÜBÜR

12.2 Effects of June 2021 Broad Core Algorithm Update of Google on Encazip.com and Competitors

Google recently announced another Broad Core Algorithm Update on June 2nd, 2021. Before the June Broad Core Algorithm Update was announced, Google was switching between sources in its SERPs and this was affecting the traffic of Encazip.com.

During these “source switching periods”, I tried to publish and update more content while supporting the site with press releases and social media, and accelerating the delayed improvements. Search engines always try to differentiate the noise from data, and when they try to gather meaningful data from the SERP, feeding them more positive trust, activity, and quality signals is helpful. In this context, you can check the effects of the June 2021 Broad Core Algorithm Update and its consistency with the December 2020 Broad Core Algorithm Update in terms of the direction of the decisions of the search engine.

The traffic of the first competitor, Akillitarife.com, can be seen below. They increased the overall query count, but their traffic continues to decrease. This is an indicator that there is not enough contextual relevance between the queries and the source.

The second competitor, Gazelektrik.com, also increased the overall query count, but its traffic continues to decrease. You can see how close these two main competitors’ graphs are to each other, which means that they have been clustered together by the Search Engine.

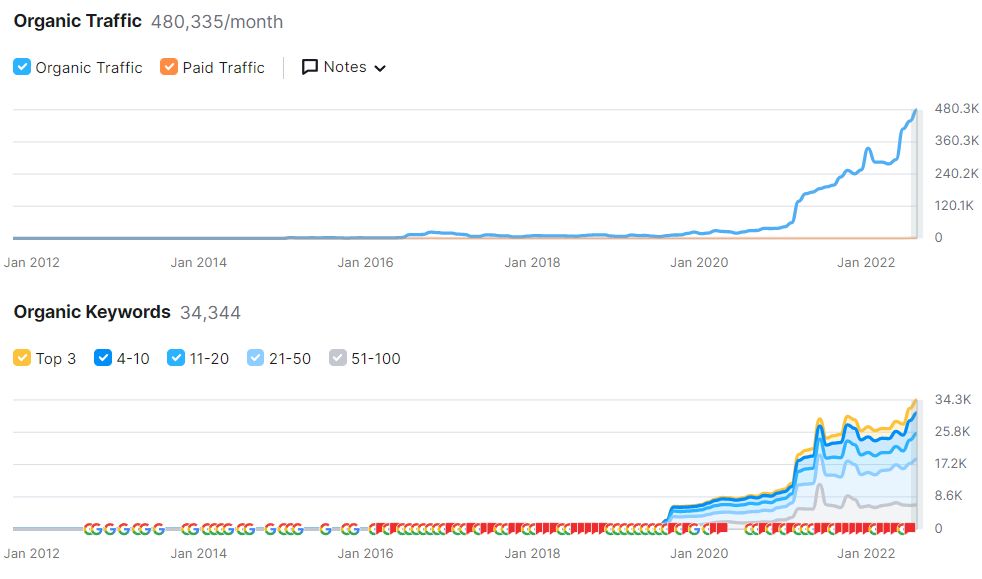

Below, you will see Encazip.com’s organic traffic change for the June 2021 Google Broad Core Algorithm Update which includes a 100% organic traffic increase. (Editor’s Note: Don’t graphs like this make your day? ;-))

A Broad Core Algorithm Update Strategy for every SEO Project should be improved and reinforced. Brands and Organizations might tend to forget the strong effects of Broad Core Algorithm Updates after two or three months. In this case, an SEO should remind every member of the client’s team how important these updates are, and how to create trust, quality, and activity signals for the search engine.

12.3 Effects of July 2021 Broad Core Algorithm Update of Google on Encazip.com and Competitors

Google finished rolling out the Broad Core Algorithm Update on the 13th of July. Also, another spam update that focuses on affiliate links took place on the 27th of July. Encazip.com tripled its organic traffic after the July 2021 Broad Core Algorithm Update. In other words, the consistent signals from the search engine became more obvious and were strongly reflected. Below you will see the change graphics of Encazip.com, Akillitarife.com, and Gazelektrik.com during the 2021 July Broad Core Algorithm Update.

Encazip.com’s organic performance change after the 2021 July Broad Core Algorithm Update:

The same change can be seen from Ahrefs too.

Akillitarife.com’s organic performance change during the 2021 July Broad Core Algorithm Update of Google, with its negative impact, can be seen below.

Gazelektrik.com’s change can be seen below.

The organic performance change graphic of Gazelektrik.com during the 2021 July Broad Core Algorithm Update, from Ahrefs.

The latest state of Encazip.com’s organic performance, and the positive changes thanks to the reliability of the brand, can be seen below.

From 700 daily clicks to 10,000 daily clicks.

Every Broad Core Algorithm Update, every trending query span, and every unconfirmed, link, spam, or page-speed-related update improved the organic performance of Encazip.com, because the SEO project was improved holistically for every update. With two broad core algorithm updates, two spam updates, and page experience algorithm updates, along with countless unconfirmed and unannounced updates, the search engine has favored Encazip.com on the SERP with higher confidence for click satisfaction due to the always-on, multi-angled SEO improvements.

Effects of November 2021 Broad Core Algorithm Update, Content Spam Update, and Competitors

The November 2021 Broad Core Algorithm Update, the November 2021 Content Spam Update, the following 2021 November Local Search Update, and many other changes continue to affect Encazip.com’s SEO performance along with its competitors. Since August, in these 3 months, lots of things have changed at Encazip.com. In this chapter, these SEO-related changes and the ongoing search engine updates’ effects will be discussed.

Below, Encazip.com’s SEO Performance change during and after the November 2021 Broad Core Algorithm Update can be seen from SEMRush.

The Ahrefs organic search performance graphic for Encazip.com can be seen below.

Encazip.com has been affected positively by the November 2021 Broad Core Algorithm Update, and Content Spam Update along with the Local Search Update. The website has reached the maximum query and organic search performance.

The changes that were made during this period can be found below.

Website Migration to ReactJS and NextJS

During the last 3 months, Encazip.com has performed two different types of site migrations. A site migration can be done in four different ways.

- Site migration without URL Change.

- Site Migration with URL Change.

- Site Migration with Framework, Back-end Structure Change

- Site Migration with Design Change

Website Migration to ReactJS and NextJS represents a Framework and back-end structure change. During the site migration, I determined basic terms and rules for the development and project management teams.

- Do not change the website structure, design, or URL tree before a core algorithm update.

- Do not change the content, design, and framework at the same time.

- Perform the migration during a “non-trending” season without risks.

- Be sure that image, text, and link elements are visible on the web page even if the JS is not rendered.

- Be sure that the request count, size, and request origins are fewer than before.

- Do not increase the size of DOM elements.

- Do not lose the previous improvements.

- Do not lose the proper Structured Data implementation.
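Some of the rules above, such as keeping image, text, and link elements visible without JavaScript and not increasing the DOM size, can be checked against the raw server response. The Python sketch below is one possible way to do that, not the tooling used in the project: the class `RawHtmlAudit` and the function `audit_raw_html` are hypothetical names. It parses HTML exactly as the server sends it, so nothing that depends on JavaScript rendering is counted.

```python
from html.parser import HTMLParser


class RawHtmlAudit(HTMLParser):
    """Count what exists in the server-sent HTML, before any JS runs."""

    def __init__(self):
        super().__init__()
        self.elements = 0    # rough DOM size: number of start tags
        self.images = 0      # <img> tags that carry a src attribute
        self.links = 0       # <a> tags that carry an href attribute
        self.text_chars = 0  # visible text length, excluding script/style
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        self.elements += 1
        attributes = dict(attrs)
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "img" and attributes.get("src"):
            self.images += 1
        elif tag == "a" and attributes.get("href"):
            self.links += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Text inside <script> or <style> is code, not page content.
        if not self._skip_depth:
            self.text_chars += len(data.strip())


def audit_raw_html(html):
    """Return element, image, link, and text counts for an HTML string."""
    parser = RawHtmlAudit()
    parser.feed(html)
    parser.close()
    return {
        "elements": parser.elements,
        "images": parser.images,
        "links": parser.links,
        "text_chars": parser.text_chars,
    }
```

If a page returns zero images, links, and text characters in this audit, its content exists only after client-side rendering. Comparing the `elements` count before and after a migration gives a rough signal of DOM growth.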

After the July 2021 Broad Core Algorithm Update, I gave the green light for the website migration. And, since search volume was lower during the summer, it was a safe zone for a migration. During the framework migration, the mistakes and obstacles below were experienced.

- The Virtual DOM was not used despite the NextJS advantages.

- The framework migration wasn’t performed on time due to technical problems.

- The request size and count were bigger than before.

- Code-splitting gains were lost.

- The image loading prioritization and placeholders were lost.

- The DOM Size was larger than before.
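Regressions like the ones listed above are easier to catch when the pre-migration and post-migration measurements are compared automatically. The Python sketch below shows one simple way to do that. The `PageMetrics` fields, the function name `find_regressions`, and every number in the example are hypothetical and are not measurements from Encazip.com.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class PageMetrics:
    """Measurements for one page template (all fields are assumptions)."""
    request_count: int
    transfer_bytes: int
    request_origins: int
    dom_elements: int


def find_regressions(before, after):
    """Return {metric: (before, after)} for every metric that got larger."""
    old, new = asdict(before), asdict(after)
    return {
        name: (old[name], new[name])
        for name in old
        if new[name] > old[name]
    }


if __name__ == "__main__":
    # Made-up numbers, only to show the shape of the comparison.
    before = PageMetrics(request_count=80, transfer_bytes=1_200_000,
                         request_origins=9, dom_elements=1400)
    after = PageMetrics(request_count=95, transfer_bytes=1_500_000,
                        request_origins=9, dom_elements=1650)
    for metric, (old_value, new_value) in find_regressions(before, after).items():
        print(f"{metric}: {old_value} -> {new_value}")
```

Running a check like this for each page template before release would flag the request count, request size, and DOM size rules as soon as they are broken.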

The biggest potential benefit of the NextJS and ReactJS migration is using the Virtual DOM. You can see how fast a Virtual DOM exercise is below.

And, this is from Encazip.com.

Basically, with the Virtual DOM example, I was able to open 4 different web pages in 6 seconds. On Encazip.com, it was only 1 page in the same time. Another problem is that some of the technical SEO gains were lost during the framework migration. Thus, another Technical SEO sprint was started.